What is Meta Learning?

Meta-learning, also known as "learning how to learn," is an approach in machine learning in which a model is trained across many related tasks so that it can learn new tasks faster, and from less data, than it could from scratch.

Why is Meta-learning important?

As machine learning systems grow more complex and are applied to more diverse problems, it becomes crucial to find better ways to optimize how they learn.

Meta-learning addresses this need, making AI models more efficient, adaptable, and versatile across different contexts.

How does Meta-learning work?

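Meta-learning adds a second level on top of traditional machine learning: an ordinary (base) learner fits each individual task, while a meta-learner learns, across many tasks, how the base learner should learn, for example a good parameter initialization, a similarity metric, or an update rule.

This allows AI models to improve through experience and find good solutions for new tasks by drawing on previous tasks or similar datasets.

Meta-learning techniques are often grouped into metric-based methods (such as siamese and matching networks), model-based methods (such as memory-augmented and recurrent neural networks), and optimization-based methods (such as MAML and learned optimizers).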
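In practice, "learning from previous tasks" usually means the training data is organized as many small tasks, or episodes, rather than one large dataset. Each episode has a support set to learn from and a query set to evaluate on. The sketch below builds one N-way K-shot episode (N classes, K labeled examples per class); the dictionary-of-lists dataset and the name `sample_episode` are assumptions for illustration, not part of any particular library.

```python
import random

def sample_episode(data_by_class, n_way, k_shot, n_query, rng=random):
    """Build one N-way K-shot episode from a dict {class_name: [examples]}.

    Classes are relabeled 0..n_way-1 inside the episode, so the learner
    cannot memorize global class identities and must adapt to each task.
    """
    classes = rng.sample(sorted(data_by_class), n_way)
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        examples = rng.sample(data_by_class[cls], k_shot + n_query)
        support += [(x, episode_label) for x in examples[:k_shot]]   # learn from these
        query += [(x, episode_label) for x in examples[k_shot:]]     # evaluate on these
    return support, query

# Toy dataset: 5 classes with 10 examples each (examples are just strings here).
data = {f"class{c}": [f"c{c}_x{i}" for i in range(10)] for c in range(5)}
support, query = sample_episode(data, n_way=3, k_shot=2, n_query=4, rng=random.Random(0))
print(len(support), len(query))  # 6 12
```

Relabeling the classes in every episode is a common design choice: the model cannot rely on fixed class identities, so it has to use the support set.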

Examples of Meta-learning in Practice

There are numerous applications of meta-learning in diverse fields, such as robotics, natural language processing, and computer vision. For instance:

In robotics, meta-learning helps robots learn motor skills and adapt to new tasks quickly, with minimal human intervention.

In natural language processing, meta-learning improves text classification, sentiment analysis, and language modeling by transferring knowledge from previous tasks to new ones.

In computer vision, meta-learning supports tasks such as few-shot object recognition, image synthesis, and style transfer by learning from diverse datasets and tasks.

Meta Classifiers and Regressors

In this section, we will go over the concepts of meta-classifiers and meta-regressors.

Meta-Classifiers, Unraveled

Meta-classifiers play a central role in ensemble techniques such as stacking. A meta-classifier is a higher-level classifier that takes the predictions of multiple base-level classifiers as its input and produces the final prediction.

Imagine a team of experts, each with their own way of assessing a situation: the meta-classifier is the leader who weighs everyone's input and makes the final, informed decision.

Because the base classifiers can bring different perspectives and methods, combining them this way often improves the accuracy and robustness of the final prediction.

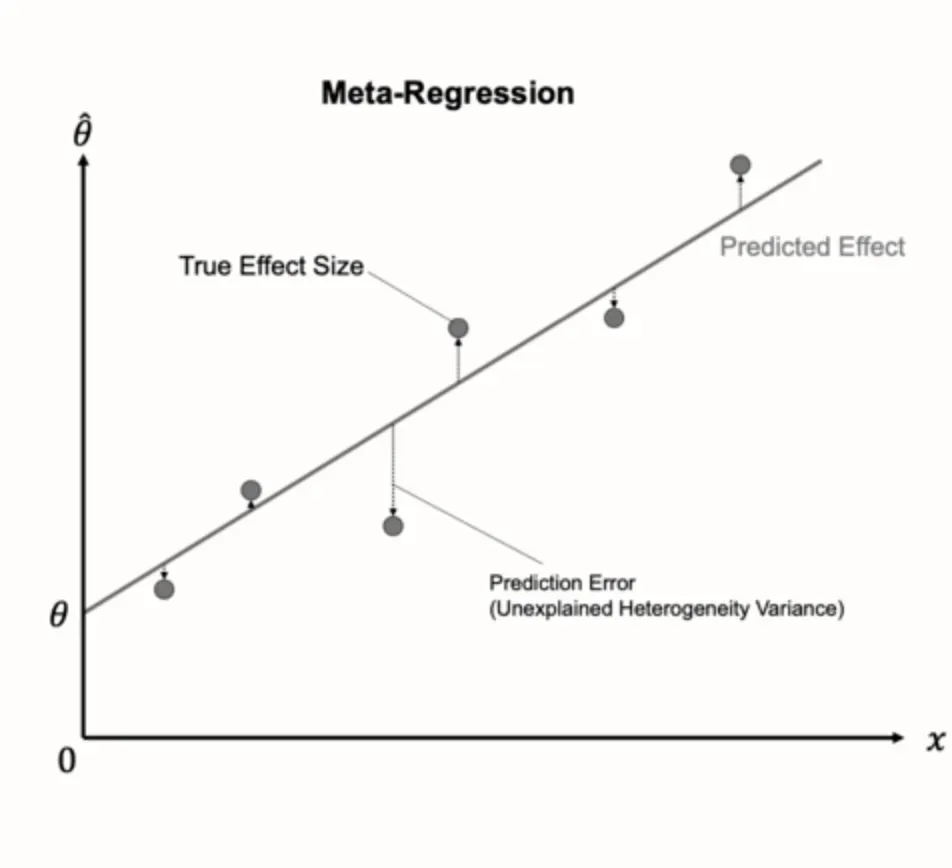
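A minimal sketch of a meta-classifier, assuming four hypothetical base classifiers simulated as noisy copies of the true labels (80%, 75%, 70% and 50% accurate). The meta-classifier is a logistic regression trained on their predictions, so it learns how much to trust each one. With these numbers it should end up following the majority of the three useful classifiers and giving the coin-flip classifier a near-zero weight, which beats the best single classifier. For brevity, accuracy is measured on the same data used to fit the weights; in practice the meta-classifier should be trained and evaluated on held-out predictions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000
y = rng.integers(0, 2, size=n)  # true binary labels (synthetic)

def noisy_expert(labels, accuracy):
    """Hypothetical base classifier: outputs the true label with probability `accuracy`."""
    flip = rng.random(labels.shape) > accuracy
    return np.where(flip, 1 - labels, labels)

# Column j holds the 0/1 predictions of base classifier j.
base_preds = np.column_stack([
    noisy_expert(y, 0.80),
    noisy_expert(y, 0.75),
    noisy_expert(y, 0.70),
    noisy_expert(y, 0.50),  # no better than a coin flip
])
base_acc = (base_preds == y[:, None]).mean(axis=0)

# Meta-classifier: logistic regression on the base predictions, fit by gradient descent.
X = np.column_stack([np.ones(n), 2 * base_preds - 1])  # bias column + predictions as -1/+1
w = np.zeros(X.shape[1])
for _ in range(500):
    p = 1 / (1 + np.exp(-X @ w))
    w -= 0.5 * X.T @ (p - y) / n  # gradient step on the mean log-loss

meta_acc = ((X @ w > 0).astype(int) == y).mean()
print("base accuracies:", base_acc.round(3))
print("learned weights (bias first):", w.round(2))
print("meta-classifier accuracy:", round(meta_acc, 3))
```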

The Workings of Meta-Regressors

Meta-regressors work on the same principle as meta-classifiers; what sets them apart is the kind of prediction they make.

While meta-classifiers deal with categorical predictions (like deciding whether an email is spam or not), meta-regressors produce numeric predictions.

For instance, in real estate, several base models might each estimate a house's price; the meta-regressor takes these estimates and combines them into a final price prediction. Meta-regressors are therefore used in regression problems, where the target variable is continuous.
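A sketch of a linear meta-regressor for the house-price example, on synthetic data. The three base models are hypothetical, and each is wrong in a different way (10% too low, unbiased but noisy, consistently too high). The meta-regressor is an ordinary least-squares fit from their estimates to the true price, trained on one half of the data and evaluated on the other.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 1000
price = rng.uniform(100_000, 500_000, size=n)  # true house prices (synthetic)

# Hypothetical base-model estimates, each wrong in a different way.
est_a = 0.9 * price + rng.normal(0, 10_000, n)      # systematically 10% too low
est_b = price + rng.normal(0, 40_000, n)            # unbiased but noisy
est_c = price + 20_000 + rng.normal(0, 25_000, n)   # constant overestimate
base = np.column_stack([est_a, est_b, est_c])

# Linear meta-regressor: price ~ w0 + w1*est_a + w2*est_b + w3*est_c,
# fit by least squares on the first half and evaluated on the second half.
X = np.column_stack([np.ones(n), base])
train_idx, test_idx = slice(0, n // 2), slice(n // 2, n)
w, *_ = np.linalg.lstsq(X[train_idx], price[train_idx], rcond=None)

def rmse(pred, target):
    return np.sqrt(np.mean((pred - target) ** 2))

for name, col in zip("abc", base.T):
    print(f"base model {name} RMSE: {rmse(col[test_idx], price[test_idx]):,.0f}")
print(f"meta-regressor RMSE: {rmse(X[test_idx] @ w, price[test_idx]):,.0f}")
```

Because the combiner is learned, it can rescale the first model and absorb the constant offset of the third, so on this synthetic data its held-out error should come out well below that of every base model.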

A Boost to Overcoming Bias and Variance

Meta-classifiers and meta-regressors help reduce both the bias and the variance of individual models, two main sources of prediction error.

Combining diverse models averages out their independent errors, which lowers variance, and a trained combiner can also correct systematic errors, which lowers bias. Together, these effects improve generalization to unseen data.
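For the simplest combiner, plain averaging, the variance part of this claim can be made precise. Assume k base models f_1, ..., f_k whose predictions each have variance σ², with correlation ρ between every pair. Then the variance of their average is:

$$
\operatorname{Var}\!\left(\frac{1}{k}\sum_{i=1}^{k} f_i\right)
= \frac{1}{k^2}\left(k\sigma^2 + k(k-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{k}\,\sigma^2
$$

As k grows, the variance falls toward ρσ², so diverse (weakly correlated) models help most. Averaging leaves the bias unchanged; reducing bias needs a combiner that is trained, such as the meta-learner in stacking.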

Meta Classifiers and Regressors in Action

Meta-classifiers and meta-regressors aren't limited to theory; they're being employed frequently in practice too.

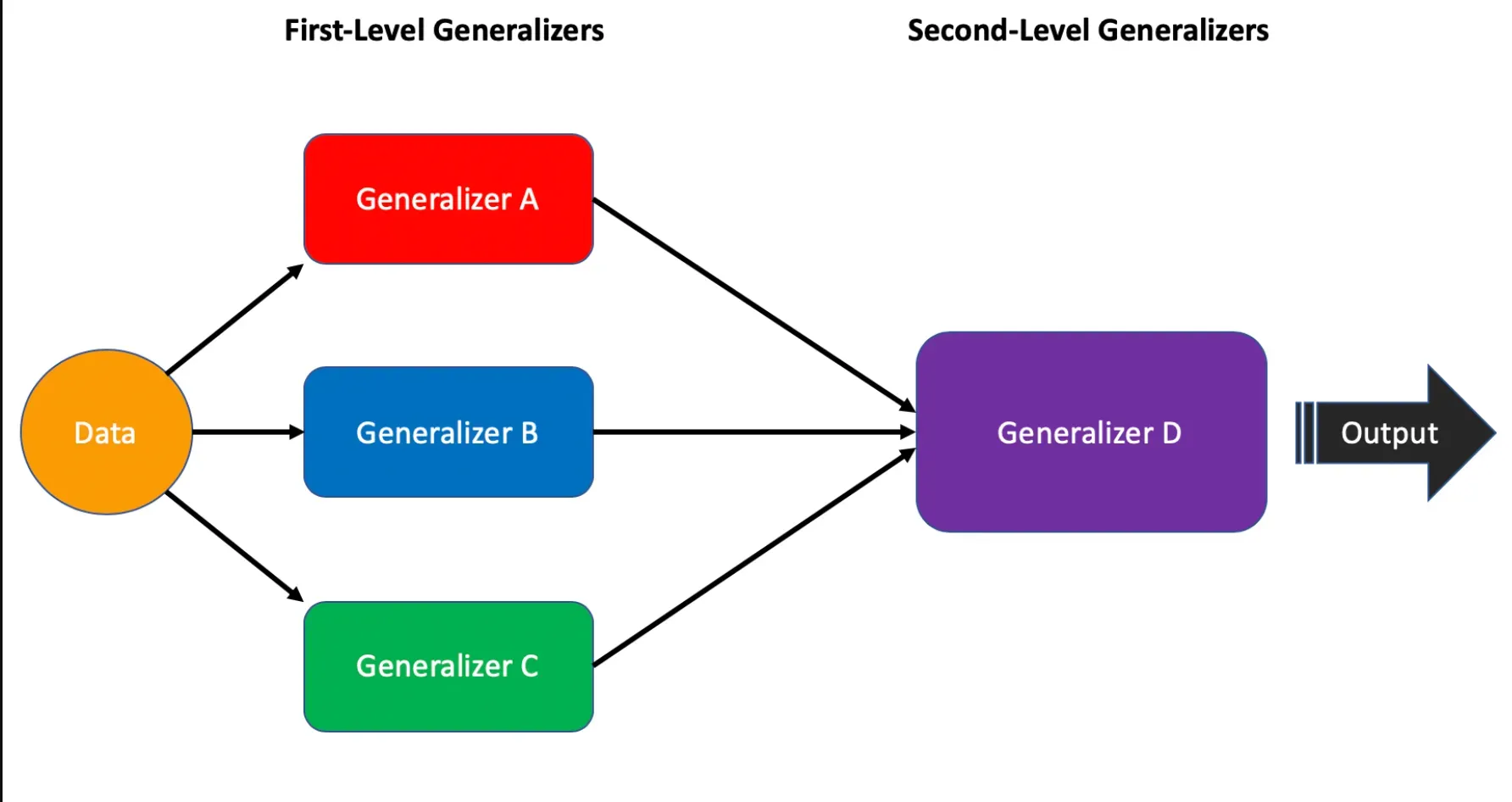

For instance, Random Forests, a widely used machine learning algorithm, combine the predictions of many decision trees into a final prediction. Strictly speaking, a random forest combines its trees with a fixed rule (majority vote or averaging) rather than a trained meta-classifier. Stacking, or stacked generalization, goes a step further: it relies on a meta-learner (either a meta-classifier or a meta-regressor) that is trained on the predictions of different models to produce the final prediction.

Expanding the Envelope of Creation

The field of machine learning is continuously evolving, and meta-classifiers and meta-regressors have provided valuable ways to design more robust and efficient learning systems.

As artificial intelligence advances, these meta-learners are likely to play an increasingly important role in handling complex prediction tasks.

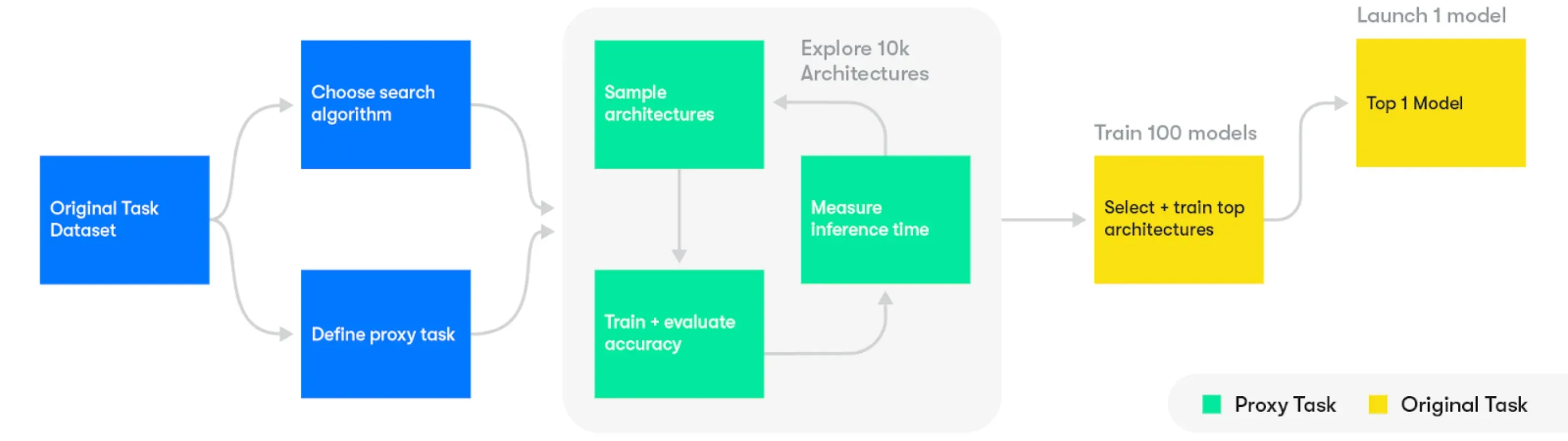

Techniques in Meta Learning

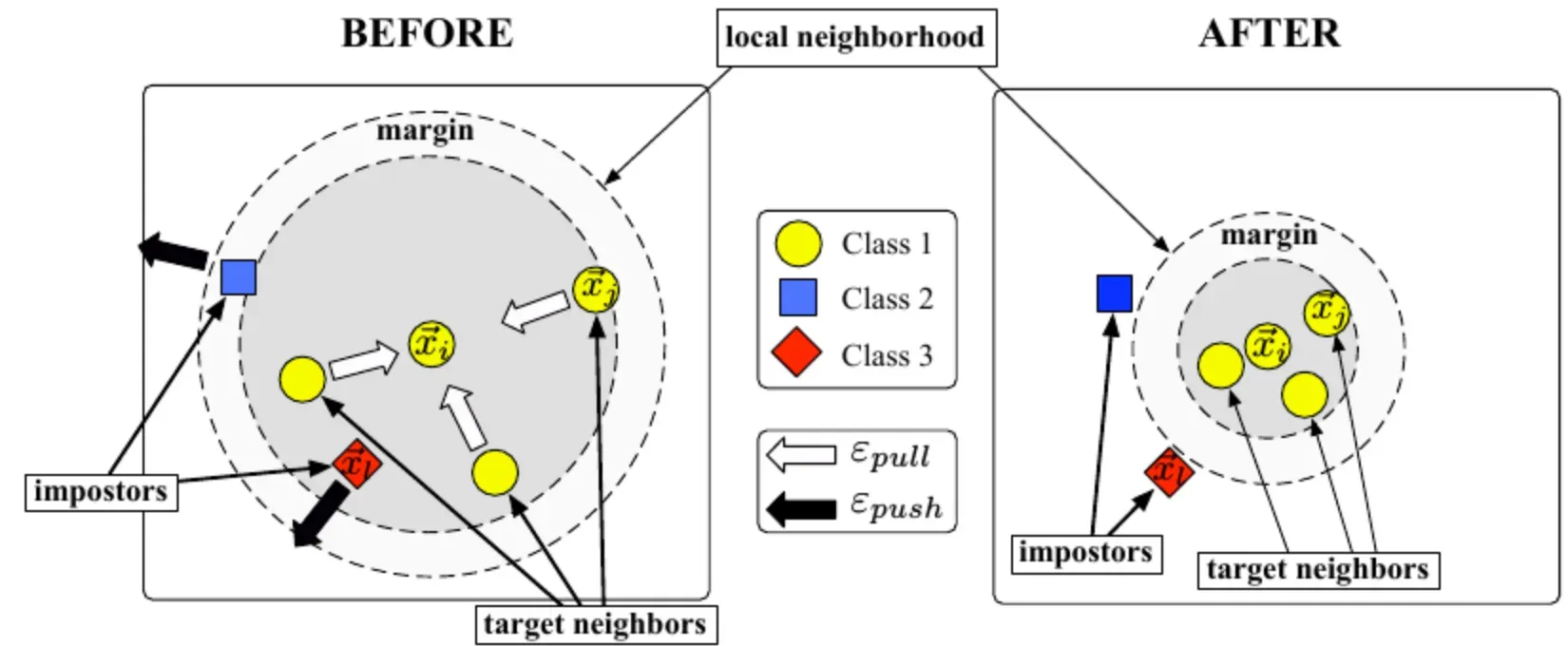

Metric Learning

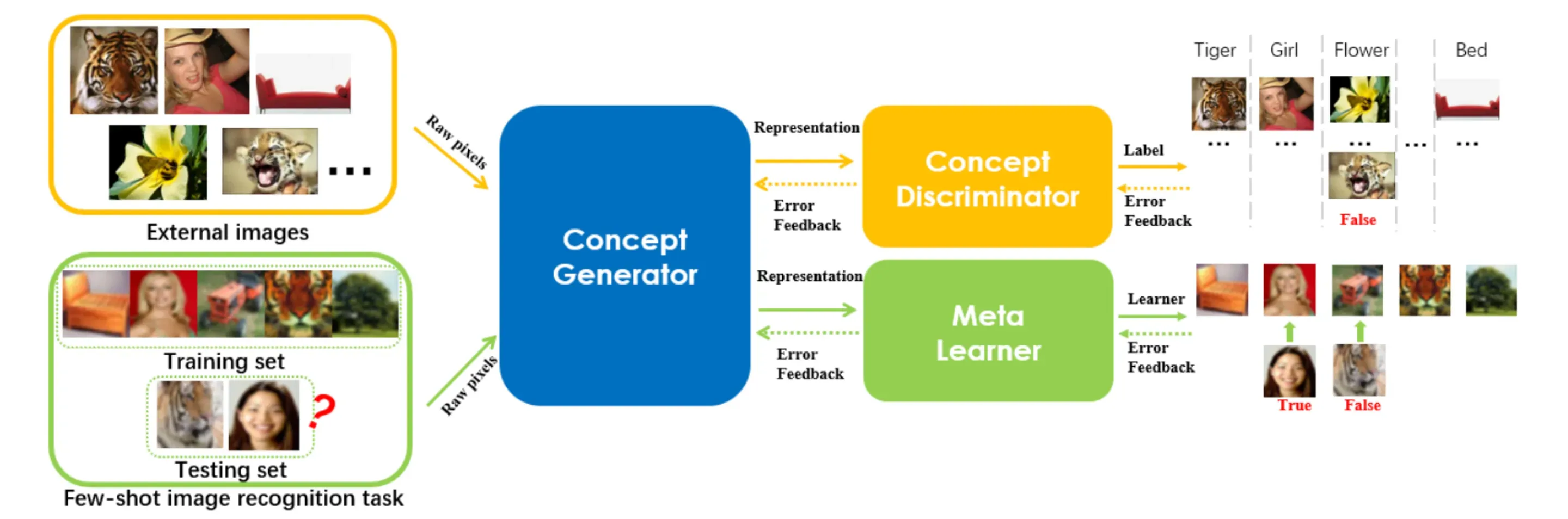

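Metric learning learns an embedding space, with a distance function, in which examples of the same class lie close together and examples of different classes lie far apart. New examples are then classified by comparing them with a few labeled examples, which makes the approach particularly useful for few-shot classification.

It is similar in spirit to distance-based algorithms such as the k-nearest neighbors classifier and k-means clustering, except that the distance itself is learned.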
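A small sketch of how a learned metric is used at prediction time: a query gets the label of its nearest labeled example in embedding space. The embedding is a fixed linear map standing in for a trained network, and the "learned" weighting `np.diag([1.0, 0.01])` is hand-picked for illustration rather than actually learned. The toy data has one informative feature and one large, irrelevant feature, so raw Euclidean distance gets both queries wrong while the weighted metric gets them right.

```python
import numpy as np

def embed(x, W):
    """Stand-in for a learned embedding network: here just a linear map W (hypothetical)."""
    return x @ W.T

def nearest_neighbor_predict(support_x, support_y, query_x, W):
    """Label each query with the label of its closest support example in embedding space."""
    s, q = embed(support_x, W), embed(query_x, W)
    dists = np.linalg.norm(q[:, None, :] - s[None, :, :], axis=-1)  # (n_query, n_support)
    return support_y[dists.argmin(axis=1)]

# Feature 0 carries the class; feature 1 is large, irrelevant noise.
support_x = np.array([[0.0, 5.0], [1.0, -5.0]])
support_y = np.array([0, 1])
query_x = np.array([[0.1, -4.0], [0.9, 4.5]])  # true labels: 0, 1

identity = np.eye(2)             # raw Euclidean distance
learned = np.diag([1.0, 0.01])   # a metric that has "learned" to ignore feature 1

print(nearest_neighbor_predict(support_x, support_y, query_x, identity))  # [1 0]
print(nearest_neighbor_predict(support_x, support_y, query_x, learned))   # [0 1]
```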

Model-Agnostic Meta-Learning (MAML)

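MAML is a model-agnostic algorithm: it works with any model trained by gradient descent. It learns an initialization of the model's parameters from which a small number of gradient updates on a new task is enough to perform well.

During meta-training, the model is adapted to each sampled task with a few gradient steps on that task's examples (the inner loop), and the initialization is then updated so that these adapted models perform well on held-out examples from the same tasks (the outer loop).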
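A compact sketch of MAML on a toy problem, assuming a hypothetical family of tasks in which each task is fitting a line y = a·x + b with its own random a and b. The model is linear with parameters θ = (intercept, slope). The inner loop takes one gradient step on a task's support set; the outer loop updates the shared initialization using the loss on that task's query set after the step. For a linear model with squared error the Hessian does not depend on θ, so the exact second-order MAML gradient, `(I - alpha * Hs) @ gq` in the code, is cheap to compute. Dropping the `alpha * Hs` term gives the common first-order approximation.

```python
import numpy as np

rng = np.random.default_rng(0)
alpha, beta = 0.1, 0.05   # inner (adaptation) and outer (meta) learning rates
K = 5                     # examples per support set and per query set

def sample_task():
    """A hypothetical task family: 1-D lines y = a*x + b with random a, b."""
    a, b = rng.uniform(2, 4), rng.uniform(3, 5)
    def draw(n):
        x = rng.uniform(-1, 1, n)
        return np.column_stack([np.ones(n), x]), a * x + b  # features [1, x], targets
    return draw

def loss_grad_hess(theta, X, y):
    """MSE of the linear model X @ theta, with its gradient and (theta-independent) Hessian."""
    r = X @ theta - y
    n = len(y)
    return np.mean(r ** 2), 2 * X.T @ r / n, 2 * X.T @ X / n

def adapt(theta, X, y):
    """Inner loop: one gradient step on the task's support set."""
    _, g, _ = loss_grad_hess(theta, X, y)
    return theta - alpha * g

theta = np.zeros(2)  # the meta-learned initialization
for step in range(2000):
    meta_grad = np.zeros(2)
    for _ in range(4):                       # meta-batch of 4 tasks
        draw = sample_task()
        Xs, ys = draw(K)                     # support set
        Xq, yq = draw(K)                     # query set
        _, _, Hs = loss_grad_hess(theta, Xs, ys)
        _, gq, _ = loss_grad_hess(adapt(theta, Xs, ys), Xq, yq)
        # Chain rule through the inner step: d(adapted)/d(theta) = I - alpha * Hs.
        meta_grad += (np.eye(2) - alpha * Hs) @ gq
    theta -= beta * meta_grad / 4            # outer loop update

def post_adaptation_loss(init, n_tasks=500):
    """Average query loss after one inner step, over freshly sampled tasks."""
    losses = []
    for _ in range(n_tasks):
        draw = sample_task()
        Xs, ys = draw(K)
        Xq, yq = draw(K)
        losses.append(loss_grad_hess(adapt(init, Xs, ys), Xq, yq)[0])
    return np.mean(losses)

print("meta-learned init:", theta.round(2))
print("loss after 1 step, MAML init:", post_adaptation_loss(theta))
print("loss after 1 step, zero init:", post_adaptation_loss(np.zeros(2)))
```

On fresh tasks, one inner step from the meta-learned initialization should give a far lower query loss than one step from zero, which is exactly the property MAML optimizes for.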

Recurrent Neural Networks (RNNs)

Recurrent neural networks (RNNs) are a type of neural network designed for sequential or time-series data.

They are used in tasks such as language translation, speech recognition, and handwriting recognition. In meta-learning, an RNN can read a task's examples one at a time as a sequence, so that its hidden state accumulates what it has learned about the current task.
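One common way to present a task to an RNN meta-learner, sketched here under simplifying assumptions: each input x_t is paired with the label of the previous example, so the correct label for x_t only arrives one step later and the network must remember earlier (input, label) pairs in its hidden state to predict y_t. The RNN is a plain, untrained Elman cell with random weights; `build_episode_sequence` and `rnn_forward` are hypothetical helpers, and meta-training (learning the weights across many episodes) is omitted.

```python
import numpy as np

def build_episode_sequence(xs, ys, n_classes):
    """Pair each input x_t with the one-hot label of the *previous* example.

    The label for x_t only arrives at step t+1, so to predict y_t the RNN
    must have stored earlier (input, label) bindings in its hidden state.
    """
    prev = np.zeros((len(ys), n_classes))
    prev[1:] = np.eye(n_classes)[ys[:-1]]  # step 0 gets an all-zero "no label yet"
    return np.concatenate([xs, prev], axis=1)

def rnn_forward(inputs, W_in, W_h, W_out):
    """A plain (untrained) Elman RNN: h_t = tanh(W_in x_t + W_h h_{t-1})."""
    h = np.zeros(W_h.shape[0])
    logits = []
    for x_t in inputs:
        h = np.tanh(W_in @ x_t + W_h @ h)
        logits.append(W_out @ h)  # per-step class scores for y_t
    return np.array(logits)

rng = np.random.default_rng(0)
xs = rng.normal(size=(6, 4))      # 6 examples with 4 features each
ys = np.array([0, 1, 2, 0, 1, 2])
seq = build_episode_sequence(xs, ys, n_classes=3)
hidden = 8
out = rnn_forward(seq,
                  rng.normal(size=(hidden, 7)),             # input weights (4 features + 3 label slots)
                  rng.normal(size=(hidden, hidden)) * 0.1,  # recurrent weights
                  rng.normal(size=(3, hidden)))             # output weights
print(seq.shape, out.shape)  # (6, 7) (6, 3)
```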

Stacking or Stacked Generalization

Stacking is an ensemble learning technique in which one model learns how to combine the predictions of several others.

It involves training several base learning algorithms on the available data, training a combiner algorithm (the meta-learner) on the base models' predictions, and using the combiner to make the final predictions.
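A sketch of stacking for regression, assuming two hypothetical base learners (an ordinary linear fit and a 1-nearest-neighbor regressor) and a linear-regression combiner. The combiner is trained on out-of-fold predictions: each training row is predicted by base models fit on the other folds. This detail matters because a model's predictions on its own training data can be misleading; the 1-nearest-neighbor model, for example, reproduces its training targets exactly, so a combiner fit on in-sample predictions would trust it completely.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300
X = rng.uniform(-3, 3, size=(n, 1))
y = np.sin(X[:, 0]) + 0.3 * X[:, 0] + rng.normal(0, 0.2, n)  # synthetic nonlinear target

def fit_linear(X, y):
    A = np.column_stack([np.ones(len(X)), X])
    return np.linalg.lstsq(A, y, rcond=None)[0]

def predict_linear(w, X):
    return np.column_stack([np.ones(len(X)), X]) @ w

def fit_1nn(X, y):
    return X, y  # a 1-nearest-neighbor model just memorizes the training data

def predict_1nn(model, X):
    Xtr, ytr = model
    dists = np.abs(X[:, 0][:, None] - Xtr[:, 0][None, :])  # 1-D inputs: |x - x_train|
    return ytr[dists.argmin(axis=1)]

base_learners = [(fit_linear, predict_linear), (fit_1nn, predict_1nn)]

def out_of_fold_predictions(X, y, k=5):
    """Level-1 features: each row is predicted by base models that never saw it."""
    folds = np.array_split(rng.permutation(len(X)), k)
    Z = np.zeros((len(X), len(base_learners)))
    for held_out in folds:
        train = np.setdiff1d(np.arange(len(X)), held_out)
        for j, (fit, predict) in enumerate(base_learners):
            Z[held_out, j] = predict(fit(X[train], y[train]), X[held_out])
    return Z

Z = out_of_fold_predictions(X, y)
meta_w = fit_linear(Z, y)  # combiner: linear regression on the base predictions

# For new data, refit each base model on all the data, then apply the combiner.
final_models = [fit(X, y) for fit, _ in base_learners]

def stacked_predict(X_new):
    Z_new = np.column_stack([predict(m, X_new)
                             for m, (_, predict) in zip(final_models, base_learners)])
    return predict_linear(meta_w, Z_new)
```

Libraries such as scikit-learn provide the same scheme ready-made in `StackingRegressor` and `StackingClassifier`.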

Convolutional Siamese Neural Network

A convolutional siamese neural network consists of two identical convolutional networks (twins) trained jointly to learn the relationship between pairs of input samples.

The twins share the same weights, so both inputs are mapped into the same embedding space, where the distance between the two embeddings reflects how similar the inputs are.
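A minimal sketch of the weight sharing, assuming a toy 1-D input and a single 1-D convolution with ReLU as each twin's network; the names and the kernel size are illustrative. Because both inputs pass through the same `branch` function with the same `kernel`, their embeddings live in the same space and can be compared directly. The contrastive loss shown is one common training objective for such networks: it pulls embeddings of same-class pairs together and pushes different-class pairs at least a margin apart. The training loop itself is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
kernel = rng.normal(size=3)  # the ONE set of weights both twins share

def branch(x):
    """One twin: a 1-D convolution followed by ReLU (a toy stand-in for a CNN)."""
    return np.maximum(np.convolve(x, kernel, mode="valid"), 0.0)

def siamese_distance(x1, x2):
    """Both inputs go through the same branch, so their embeddings are directly comparable."""
    return np.linalg.norm(branch(x1) - branch(x2))

def contrastive_loss(d, same, margin=1.0):
    """Pull same-class pairs together; push different-class pairs at least `margin` apart."""
    return d ** 2 if same else max(0.0, margin - d) ** 2

a = rng.normal(size=10)
print(siamese_distance(a, a))  # 0.0: identical inputs give identical embeddings
```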

Matching Networks

Matching networks classify a new, unlabeled example by comparing it with a small labeled support set: the predicted label is a similarity-weighted combination of the support labels. Because of this, they can handle new classes without fine-tuning.
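A sketch of the matching-network prediction rule, in which the predicted label distribution is the sum of the support set's one-hot labels weighted by a softmax over cosine similarities. For brevity the embedding functions are taken to be the identity; in the actual method, query and support examples are first embedded by trained networks. The last lines add a new class simply by appending examples to the support set, with no retraining.

```python
import numpy as np

def cosine_similarity(q, S):
    """Cosine similarity between one query vector q and each row of S."""
    return S @ q / (np.linalg.norm(S, axis=1) * np.linalg.norm(q))

def matching_predict(query, support_x, support_y, n_classes):
    """Label distribution for `query` as an attention-weighted sum of support labels.

    Embeddings are the identity here for brevity; a real matching network would
    first pass query and support examples through learned embedding networks.
    """
    sims = cosine_similarity(query, support_x)
    attn = np.exp(sims) / np.exp(sims).sum()  # softmax over the support set
    one_hot = np.eye(n_classes)[support_y]
    return attn @ one_hot                     # probabilities over classes

support_x = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
support_y = np.array([0, 0, 1])
probs = matching_predict(np.array([1.0, 0.05]), support_x, support_y, n_classes=2)
print(probs.argmax())  # 0

# A new class needs no fine-tuning: just add its examples to the support set.
support_x2 = np.vstack([support_x, [[-1.0, 0.0]]])
support_y2 = np.append(support_y, 2)
print(matching_predict(np.array([-1.0, 0.1]), support_x2, support_y2, n_classes=3).argmax())  # 2
```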

LSTM Meta-Learner

An LSTM meta-learner is an LSTM trained to act as the optimization algorithm for another neural network (the learner), here a classifier in the few-shot regime.

It learns both parameter updates suited to a setting in which only a fixed, small number of updates will be made and a general initialization of the learner that allows quick convergence.
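The idea rests on the observation that the LSTM cell-state update has the same form as a gradient-descent step. If the cell state c_t stores the learner's parameters θ_t, and the candidate value is the negative gradient of the learner's loss L_t, the update becomes:

$$
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,
\qquad c_t = \theta_t,\;\; \tilde{c}_t = -\nabla_{\theta_{t-1}} \mathcal{L}_t
\;\;\Longrightarrow\;\;
\theta_t = f_t \odot \theta_{t-1} - i_t \odot \nabla_{\theta_{t-1}} \mathcal{L}_t
$$

Ordinary gradient descent is the special case f_t = 1 and i_t = α (the learning rate). Learning the gates lets the meta-learner choose per-parameter step sizes (i_t) and shrink parameters (f_t), and learning the initial cell state c_0 gives the general initialization described above.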

Advantages of Meta Learning

In this section, we'll explore the advantages of meta-learning.

Rapid Learning and Adaptation

Meta-learning enables models to learn new tasks quickly from small datasets, which makes it suitable when large amounts of data are not available for each new task (although meta-training itself requires many related tasks).

This allows models to adapt rapidly to new tasks with minimal training.

Transfer of Prior Knowledge

Meta-learning allows for knowledge transfer across tasks, as models can leverage previous experiences to boost their performance on new and related tasks, leading to more efficient and effective learning.

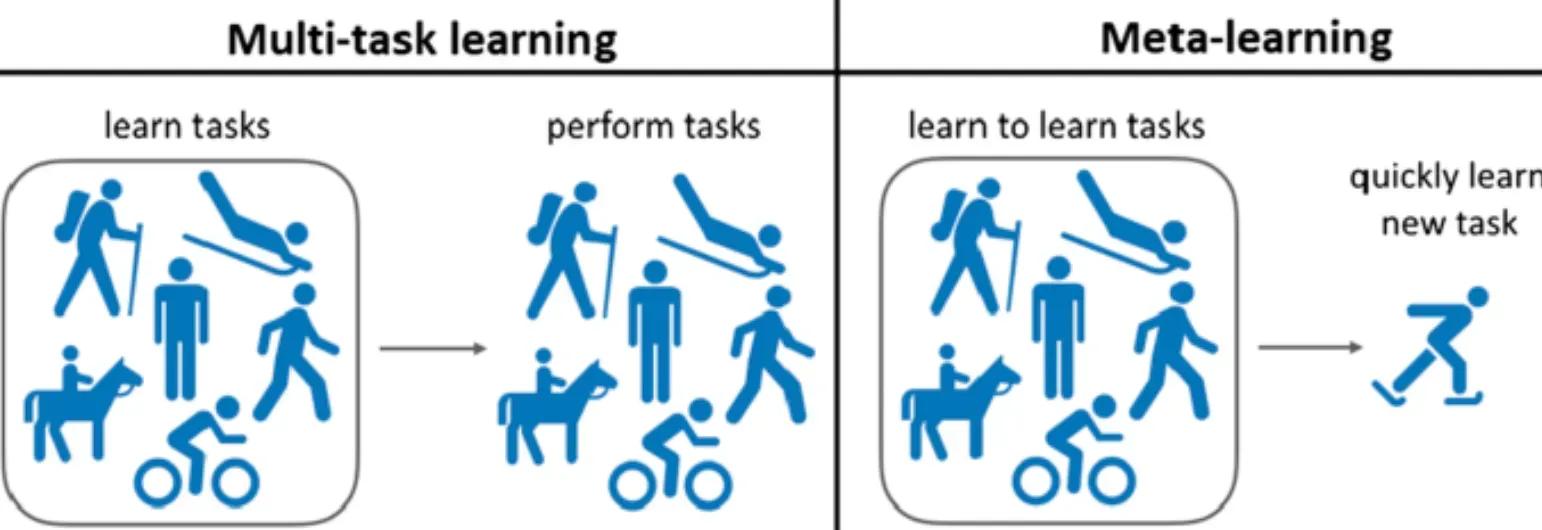

Multi-task Learning

Through meta-learning, models can learn multiple tasks simultaneously or sequentially.

This versatility is essential as it permits an efficient use of computational resources while tackling different problems.

Robustness to Changing Environments

Meta-learning approaches can make models more robust, helping them handle non-stationary data or changing environments.

This adaptive nature helps models maintain good performance even in dynamic scenarios.

Lifelong Learning

Meta-learning also supports lifelong learning, since it lets models continuously update their knowledge and refine how they learn. This can lead to steadily improving performance and better adaptation to new challenges over time.

In summary, the advantages of meta-learning make it a favorable choice for developing versatile, adaptive, and resource-efficient machine learning models capable of tackling diverse tasks and challenges.

Use Cases of Meta-Learning

In this section, we'll uncover some practical applications of meta-learning.

Rapid Model Adaptation

Meta-learning is used to rapidly adapt machine learning models to new tasks with few training examples.

This scenario is prevalent in domains like healthcare, where machine learning models may need to diagnose rare diseases based on limited case data.

Multi-task Learning

Another application of meta-learning is multi-task learning: using meta-learning, a model can learn multiple tasks and switch between them.

For instance, in robotics, this could be learning to pick and place different objects without specific programming for each activity.

Personalized Learning

Meta-learning helps build personalized learning systems that adapt to individual needs.

In education, for instance, intelligent tutoring systems can employ meta-learning to customize the learning path and pace for each student based on their unique strengths and weaknesses.

Model-Agnostic Algorithms

Meta-learning has also produced model-agnostic algorithms such as MAML. These algorithms can be applied to any model trained with gradient descent to provide rapid adaptation across tasks.

This feature is crucial when dealing with diverse datasets requiring a suite of models to solve different problems.

Few-Shot Learning

Meta-learning is pivotal in "few-shot learning," where models are trained to make accurate predictions with minimal training data.

This characteristic is especially valuable in computer vision tasks, where classifying or identifying rare or novel entities often relies on a limited number of labeled images.

Frequently Asked Questions (FAQs)

Can Meta Learning be applied to online learning scenarios?

Yes, Meta Learning can be adapted to online learning scenarios. By continuously updating and adapting the meta-learner based on new tasks, it can enable models to learn and adapt in an online, dynamic learning environment.

How does Meta Learning handle task distribution changes?

Meta Learning can be robust to task distribution changes, as it focuses on learning the general learning process rather than being tied to specific tasks. However, significant distribution shifts may still require retraining or fine-tuning the meta-learner.

What are some algorithms commonly used in Meta Learning?

Some commonly used Meta Learning algorithms include the gradient-based methods MAML (Model-Agnostic Meta-Learning), Reptile, and Meta-SGD, as well as metric-based methods such as Siamese and Matching Networks. These algorithms provide frameworks for training meta-learners and optimizing the learning process.
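Reptile is the simplest of these to sketch because it needs no second derivatives: for each sampled task it runs a few steps of ordinary gradient descent from the current initialization θ to get adapted weights φ, then moves θ a fraction ε of the way toward φ. The task family (fitting random lines y = a·x + b) and all hyperparameters below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_task():
    """Hypothetical task family: fit y = a*x + b for random a in [2, 4], b in [3, 5]."""
    a, b = rng.uniform(2, 4), rng.uniform(3, 5)
    x = rng.uniform(-1, 1, 10)
    return np.column_stack([np.ones_like(x), x]), a * x + b

def sgd(theta, X, y, steps=5, lr=0.1):
    """Plain gradient descent on the MSE of the linear model X @ theta."""
    for _ in range(steps):
        theta = theta - lr * 2 * X.T @ (X @ theta - y) / len(y)
    return theta

theta = np.zeros(2)  # the meta-learned initialization
epsilon = 0.1        # outer step size
for _ in range(1000):
    X, y = sample_task()
    phi = sgd(theta, X, y)                   # adapt to one task
    theta = theta + epsilon * (phi - theta)  # move the initialization toward the adapted weights

def eval_init(init, n_tasks=300):
    """Query loss after one step on 5 support points, averaged over new tasks."""
    losses = []
    for _ in range(n_tasks):
        X, y = sample_task()
        adapted = sgd(init, X[:5], y[:5], steps=1)
        losses.append(np.mean((X[5:] @ adapted - y[5:]) ** 2))
    return np.mean(losses)

print("Reptile init:", theta.round(2))
```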

Can Meta Learning be used for unsupervised learning tasks?

Yes, Meta Learning can be applied to unsupervised learning tasks. It can help in learning representations or generating useful features for unsupervised tasks, such as clustering, anomaly detection, and dimensionality reduction.

How does Meta Learning improve generalization on new tasks?

Meta Learning improves generalization by learning a more effective learning process. It enables models to quickly adapt to new tasks with limited data, generalize knowledge across tasks, and transfer learned knowledge to solve novel problems efficiently.