What is Clustering?

Clustering, in the context of machine learning, is an unsupervised learning technique that involves grouping similar data points together based on their features or characteristics. The goal is to identify underlying patterns or structures within the data, allowing for better understanding, analysis, and prediction.

Importance of Clustering in Data Analysis

Clustering is essential in data analysis for several reasons:

Data exploration: Clustering helps us understand the structure and distribution of data, uncovering hidden patterns and trends.

Dimensionality reduction: Clustering can simplify complex datasets by reducing the number of dimensions, making it easier to analyze and visualize the data.

Anomaly detection: Clustering can identify outliers or unusual data points that may indicate errors or interesting phenomena.

Prediction: Clustering can be used to train predictive models or to improve the performance of other machine learning algorithms.

Types of Clustering

Hierarchical Clustering

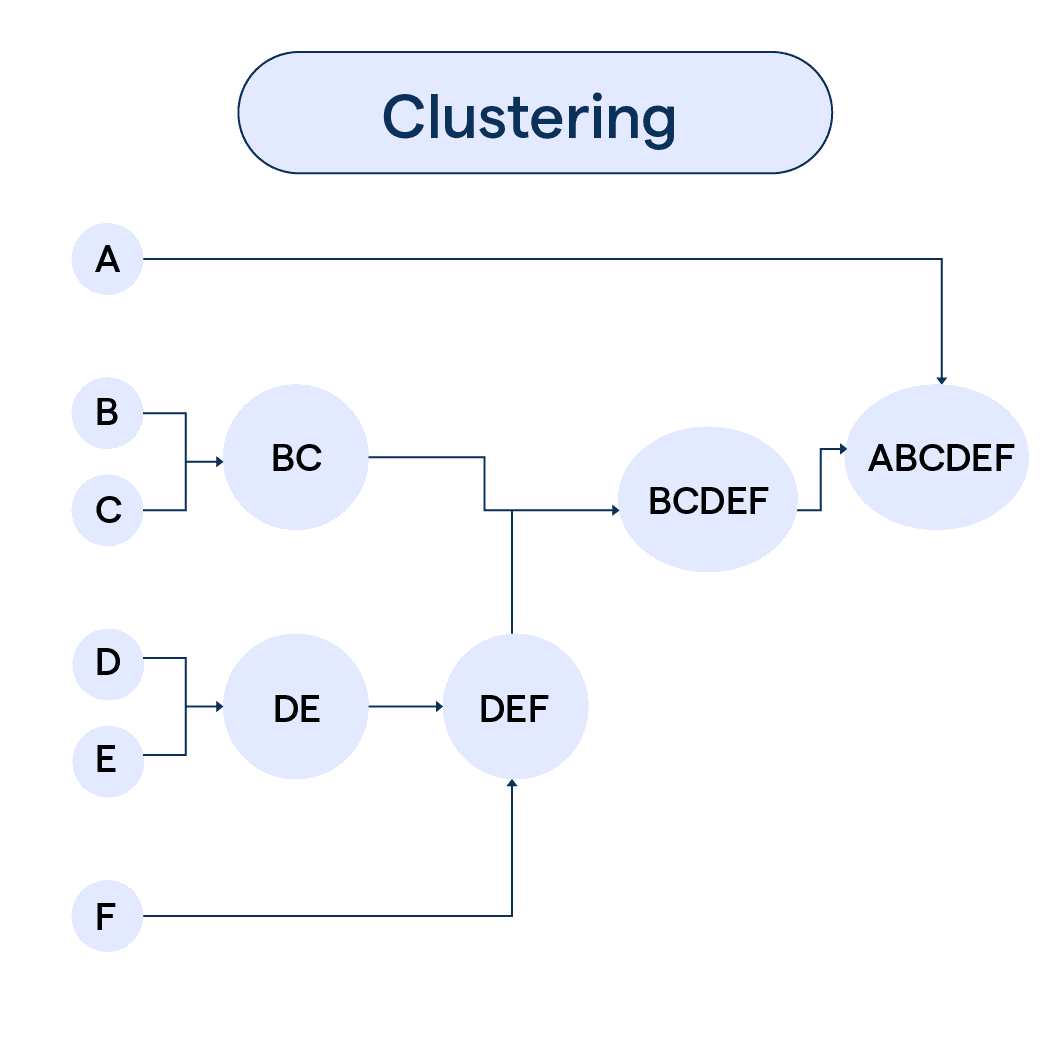

Hierarchical clustering is like organizing a family tree, where data points are grouped based on their similarity, forming a tree-like structure. There are two main approaches to hierarchical clustering:

Agglomerative: Starts with each data point as its own cluster and merges the closest pairs of clusters until only one cluster remains.

Divisive: Starts with one large cluster containing all data points and splits it into smaller clusters until each data point forms its own cluster.

Partition-based Clustering

Partition-based clustering is like dividing a pizza into equal slices, where data points are assigned to a fixed number of clusters based on their similarity. The most popular partition-based clustering algorithm is K-means. In K-means, the number of clusters (K) is predefined, and the algorithm iteratively assigns data points to the nearest cluster centroid until convergence.

Density-based Clustering

Density-based clustering is like finding islands in an ocean, where clusters are formed based on the density of data points in a region. In this approach, a cluster is a dense region of data points separated by areas of lower point density. One popular density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise), which identifies clusters of varying shapes and sizes and can detect noise or outliers.

Grid-based Clustering

Grid-based clustering is like dividing a city map into a grid, where data points are assigned to grid cells based on their spatial location. Clusters are formed by grouping neighboring grid cells with similar densities of data points. Grid-based clustering algorithms are typically faster than other clustering methods, as they rely on the grid structure for calculations.

Clustering Algorithms

K-means Clustering

K-means clustering is the "poster child" of partition-based clustering algorithms. It's simple, easy to understand, and works well for many datasets. The algorithm starts by initializing K cluster centroids randomly, then iteratively assigns data points to the nearest centroid and updates the centroids' positions until convergence or a maximum number of iterations is reached.

Agglomerative Hierarchical Clustering

Agglomerative hierarchical clustering is a bottom-up approach to hierarchical clustering. It begins with each data point as its own cluster and successively merges the closest pairs of clusters based on a distance metric (e.g., Euclidean distance) and linkage criteria (e.g., single, complete, or average linkage) until only one cluster remains. The result is a dendrogram, a tree-like structure that visually represents the clustering hierarchy.

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN is a popular density-based clustering algorithm that can find clusters of varying shapes and sizes, while also detecting noise or outliers. The algorithm works by defining a neighborhood around each data point and grouping points that are close together based on a distance metric and a density threshold. Points that do not belong to any cluster are treated as noise.

Use Cases Of Clustering

Customer Segmentation in Marketing

One fantastic use of clustering in machine learning is customer segmentation. Imagine you're running a business and you want to target your customers more efficiently. By using clustering algorithms, you can group your customers based on purchasing habits, interests, demographics, and more. This allows for creating personalized marketing campaigns that resonate with each segment, which can lead to increased sales and customer loyalty. It’s like knowing what your customers want before they even tell you!

Social Network Analysis

Let’s dive into the world of social media. It's buzzing, right? Clustering plays a big role in understanding how information spreads on social networks like Twitter or Facebook. By grouping users based on common attributes such as interests or behaviors, companies can gain insights into different communities. This helps in understanding how trends start, or even predicting what the next viral sensation will be. For social networks, it’s an essential tool for monitoring and understanding user dynamics.

Improving Healthcare and Medical Imaging

Healthcare is an area where clustering is making a significant impact. Imagine doctors being able to diagnose diseases with the help of machine learning. By using clustering, they can identify similar cases and learn from historical data. In medical imaging, clustering helps in grouping similar pixels together, which is essential for understanding images at a deeper level and aiding in the diagnosis. This isn’t just cool tech, it’s life-changing!

Enhancing Recommender Systems

Ever wondered how Netflix or Amazon seems to know what you like? That’s clustering at work in recommender systems. These platforms analyze your past behavior, group you with similar users, and use this information to recommend products or movies. The “Customers who bought this also bought…” or “Because you watched…” features are classic examples. It's like having a personal shopping assistant who knows your taste!

Anomaly Detection for Fraud Prevention

Last but definitely not least, let’s talk security. In banking and finance, clustering is a superhero fighting against fraud. By grouping and analyzing transactions, it can detect patterns that stand out from the norm, which might indicate fraudulent activity. When a transaction doesn’t fit into the usual clusters, it raises a red flag. This allows companies to act quickly, protecting both themselves and their customers. It’s like having a financial watchdog keeping an eye out for you!

Suggested Reading:

Meta Learning

Choosing the Right Clustering Algorithm

Factors to Consider When Selecting a Clustering Algorithm

When choosing a clustering algorithm, keep these factors in mind:

Dataset size: Some algorithms work better for small datasets, while others are more suitable for large datasets.

Cluster shape: If you expect clusters to have specific shapes (e.g., spherical, elongated), choose an algorithm that can handle those shapes.

Noise and outliers: If your dataset contains noise or outliers, select an algorithm that can handle them effectively.

Scalability: Consider the computational complexity of the algorithm and its ability to scale to larger datasets.

Comparison of Popular Clustering Algorithms

Here's a quick comparison of some popular clustering algorithms:

K-means: Simple, fast, works well for spherical clusters, but sensitive to initial conditions and outliers.

Agglomerative hierarchical clustering: Can handle any cluster shape, but is computationally expensive for large datasets.

DBSCAN: Robust to noise and outliers, can find clusters of varying shapes, but requires tuning of distance and density parameters.

How to Perform Clustering?

Preparing Data for Clustering

Before clustering, follow these steps to prepare your data:

Clean the data: Remove or impute missing values, correct errors, and address inconsistencies.

Normalize the data: Scale the features to have similar ranges, as clustering algorithms are sensitive to feature scales.

Select relevant features: Use domain knowledge or feature selection techniques to identify the most important features for clustering.

Selecting the Appropriate Clustering Algorithm

Based on the factors discussed earlier, choose a clustering algorithm that best suits your dataset and objectives. Experiment with different algorithms and parameter settings to find the best fit for your data.

Evaluating and Validating Clustering Results

To evaluate and validate your clustering results, consider the following:

Visual inspection: Plot the clusters and examine their shapes, sizes, and separation.

Internal validation: Use internal validation measures, such as the silhouette score or the Calinski-Harabasz index, to assess the quality of the clustering.

External validation: If you have access to ground truth labels, use external validation measures, such as the adjusted Rand index or the Fowlkes-Mallows index, to compare the clustering results to the true labels.

Clustering Applications and Use Cases

Customer Segmentation in Marketing

Clustering can help marketers segment customers based on their demographics, behaviors, and preferences, enabling targeted marketing campaigns and personalized offers to boost customer engagement and loyalty.

Anomaly Detection in Finance and Security

Clustering can identify unusual transactions, suspicious activities, or network intrusions by detecting outliers or anomalies that deviate from the norm, helping to prevent fraud, cyberattacks, and other threats.

Image Segmentation and Pattern Recognition

Clustering can be used to segment images into regions with similar colors, textures, or shapes, which can be useful for object recognition, image compression, and computer vision applications.

Clustering Challenges and Limitations

Scalability and Computational Complexity

Some clustering algorithms, such as hierarchical clustering, have high computational complexity, making them unsuitable for large datasets. Consider using scalable algorithms like K-means or grid-based clustering for large-scale data.

Handling Outliers and Noisy Data

Clustering algorithms can be sensitive to outliers and noise, which may lead to poor cluster quality or misclassification. Choose robust algorithms like DBSCAN or preprocess the data to minimize the impact of noise and outliers.

Determining the Optimal Number of Clusters

Selecting the right number of clusters can be challenging, as it often depends on domain knowledge or trial and error. Techniques like the elbow method, silhouette analysis, or gap statistic can help estimate the optimal number of clusters.

Frequently Asked Questions

What is clustering in machine learning?

Clustering is an unsupervised learning technique that groups similar data points together based on their features or characteristics.

Why is clustering important?

Clustering helps identify patterns and structures in data, enabling better understanding, analysis, and prediction for various applications.

What are common clustering algorithms?

Common clustering algorithms include K-means, hierarchical clustering, DBSCAN, and Gaussian mixture models.

How is clustering different from classification?

Clustering is unsupervised and groups similar data points, while classification is supervised and assigns data points to predefined categories.

Can clustering be used for anomaly detection?

Yes, clustering can be used for anomaly detection by identifying data points that don't belong to any cluster, indicating potential outliers or anomalies.