What is Unsupervised Learning?

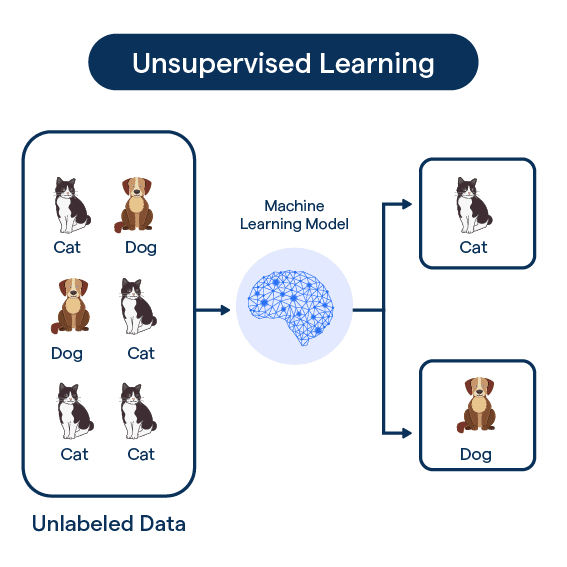

Unsupervised learning is a machine learning technique that involves training algorithms using unlabeled data. Unlike supervised learning, which uses labeled datasets, unsupervised learning models are given only the input data without any corresponding output variables.

The goal is to discover hidden patterns and relationships in the data without any external guidance. Essentially, the models learn by themselves by exploring the structure of the data.

A common unsupervised learning method is cluster analysis. Let's say you have customer purchase data but no defined categories. By applying cluster analysis algorithms, the system can segment customers into groups based on common behaviors and attributes. This allows discovering novel segments and patterns that exist within the customer base.

Other popular unsupervised techniques include association rule learning, which uncovers items that frequently occur together, and dimensionality reduction methods like PCA, which compress complex datasets into a few combined features that capture most of the variation.

The key advantage of unsupervised learning is the ability to process untagged data that would otherwise be unused. It helps reveal insights that humans may miss by exploring data without preconceived notions. However, the outcomes can be harder to evaluate without defined objectives.

Overall, unsupervised learning expands the potential of AI by enabling identification of hidden structures from raw data. It opens up possibilities to derive actionable information from almost any data source.

Components of Unsupervised Learning

In this section, we'll explore the key elements that make up unsupervised learning and provide a deeper understanding of how this learning paradigm functions.

Data Exploration and Pre-processing

Before applying unsupervised learning methods, it's crucial to explore and preprocess the data: normalize feature scales, remove noise, and handle missing values so the algorithms perform well.
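
As a rough illustration of these preprocessing steps, the sketch below fills missing values with the column mean and then standardizes each feature. The function name `preprocess` and the toy age/income table are made up for this example, and mean imputation is only one of several reasonable choices.

```python
import numpy as np

def preprocess(X):
    """Impute missing values with the column mean, then standardize each column."""
    X = np.asarray(X, dtype=float).copy()
    # Column means computed while ignoring NaN (missing) entries.
    col_means = np.nanmean(X, axis=0)
    # Replace each NaN with the mean of its own column.
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_means[cols]
    # Standardize: zero mean and unit variance per column.
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero for constant columns
    return (X - mean) / std

# Hypothetical table: columns are age and yearly income, with two gaps.
raw = np.array([
    [25.0, 50_000.0],
    [32.0, np.nan],
    [47.0, 82_000.0],
    [np.nan, 61_000.0],
])
clean = preprocess(raw)
print(clean)
```

Standardizing matters here because distance-based methods would otherwise be dominated by the income column, whose raw values are thousands of times larger than the ages.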

Feature Extraction and Dimensionality Reduction

Unsupervised learning seeks to find trends in data by extracting essential features and reducing dimensionality. Techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are commonly employed.
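
To make the idea of PCA more concrete, here is a minimal sketch that computes principal components with NumPy's singular value decomposition. The helper name `pca` and the synthetic data (three features driven by one hidden factor) are assumptions for illustration, not a production implementation.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components using SVD."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing variance.
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    projected = X_centered @ components.T
    # Fraction of the total variance captured by each kept component.
    explained_ratio = (S ** 2)[:n_components] / np.sum(S ** 2)
    return projected, explained_ratio

rng = np.random.default_rng(0)
t = rng.normal(size=200)
# Toy data: three features that mostly follow one hidden factor t, plus noise.
X = np.column_stack([t, 2 * t, -t]) + 0.05 * rng.normal(size=(200, 3))

Z, ratio = pca(X, n_components=1)
print(Z.shape, ratio)
```

Because the three features are almost perfectly correlated, a single component should retain nearly all of the variance, which is the situation where dimensionality reduction pays off most.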

Clustering Techniques

Clustering is central to unsupervised learning, helping to discover distinct data groups based on similarity. Examples of clustering methods include K-Means, Hierarchical Clustering, and DBSCAN.
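
The following is a small sketch of K-Means written from scratch, assuming Euclidean distance and random initialization. The function name `kmeans` and the two synthetic blobs are hypothetical; real projects would usually rely on a library implementation with smarter initialization.

```python
import numpy as np

def kmeans(X, k, n_iters=100, seed=0):
    """Plain K-Means: alternate between assigning points and moving centroids."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    # Start from k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assignment step: each point joins its nearest centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its points.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # centroids stopped moving, so the assignments are stable
        centroids = new_centroids
    return labels, centroids

# Two synthetic, well-separated groups of 50 points each.
rng = np.random.default_rng(42)
blob_a = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
blob_b = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2))
X = np.vstack([blob_a, blob_b])

labels, centroids = kmeans(X, k=2)
print(centroids)
```

Note that no labels were supplied: the grouping emerges only from the distances between points.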

Density Estimation

Density estimation techniques, such as Gaussian Mixture Models, Kernel Density Estimation (KDE), and Histograms, help determine the underlying distribution of the data.
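
As one possible illustration, the sketch below builds a one-dimensional Kernel Density Estimate with a Gaussian kernel using only the standard library. The helper name `make_kde`, the sample values, and the bandwidth of 0.4 are all assumptions chosen for the example.

```python
import math

def make_kde(samples, bandwidth):
    """Return a function that estimates the density at x from 1-D samples."""
    n = len(samples)
    # Normalizing constant so the estimated density integrates to 1.
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # Sum one Gaussian bump centered on each observed sample.
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density

# Hypothetical measurements forming two groups, around 1 and around 5.
samples = [1.0, 1.2, 0.9, 1.1, 4.8, 5.0, 5.2]
density = make_kde(samples, bandwidth=0.4)

for x in (1.0, 3.0, 5.0):
    print(x, round(density(x), 4))
```

The estimate should be high near the two groups and close to zero in the gap between them. The bandwidth controls how smooth the resulting curve is, and choosing it is a judgment call in practice.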

Evaluation

Evaluating unsupervised learning models requires metrics like silhouette scores, intra-cluster distances, or visual inspection, given the absence of labelled data.

These components work together to facilitate the unsupervised learning process to uncover hidden patterns and structures in data.

How does Unsupervised Learning work?

This section looks at how unsupervised learning actually works. Let's dive in.

The Essence of Unsupervised Learning

In unsupervised learning, algorithms comb through unlabeled data to glean patterns or structure. The absence of predefined labels means the model must decipher the inherent structure from the input data alone.

Principal Algorithms

Key unsupervised learning methods include clustering and association. Clustering forms groups based on data similarities, and association finds rules that describe large portions of the data.

Applicability in Real-World Scenarios

Unsupervised learning excels in exploratory tasks and unearthing hidden patterns and associations. One might apply it in customer segmentation, anomaly detection, or recommendation systems.

The Training Process

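Unsupervised learning models undergo a training phase in which an algorithm identifies patterns, groups data, or simplifies complex datasets. Most algorithms learn iteratively, repeatedly adjusting their parameters to improve an internal objective such as how compact the clusters are or how well the input can be reconstructed.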

Unsupervised Learning's Strengths and Limitations

Unsupervised learning needs no labeled data, which saves the considerable time and cost that labeling requires. However, outcomes or patterns can sometimes be challenging to interpret or validate due to the lack of predefined targets.

Types of Unsupervised Learning

In this section, we will navigate the various types of unsupervised learning and elaborate on how each plays a unique role in data analysis.

Clustering

Clustering is a prevalent type of unsupervised learning used for exploratory data analysis. It involves grouping the data into clusters based on similarities. These similarities can be identified in various ways, such as distance measures or density.

Dimensionality Reduction

Dimensionality reduction techniques help reduce the dimensionality of the dataset while maintaining most of its important information. Techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are commonly used methods under this category.

Anomaly Detection

Anomaly detection is used to identify outliers in the data, i.e., data points that significantly differ from the rest. It is widely used in various domains such as fraud detection, system health monitoring, and intrusion detection.
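
One simple way to flag outliers without labels is the modified z-score, which is based on the median and the median absolute deviation (MAD). The sketch below assumes one-dimensional data and the commonly used cutoff of 3.5; the function name `mad_outliers` and the transaction amounts are invented for illustration.

```python
import statistics

def mad_outliers(values, threshold=3.5):
    """Flag values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    abs_dev = [abs(v - median) for v in values]
    mad = statistics.median(abs_dev)
    if mad == 0:
        return []  # no spread at all, so this rule cannot rank the points
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [v for v, d in zip(values, abs_dev) if 0.6745 * d / mad > threshold]

# Hypothetical purchase amounts with one suspicious transaction.
amounts = [21.5, 19.9, 22.3, 20.7, 18.8, 23.1, 250.0, 20.2]
outliers = mad_outliers(amounts)
print(outliers)
```

The median-based score is used here instead of the mean and standard deviation because a single extreme value can inflate the standard deviation enough to hide itself.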

Association Rule Learning

Association rule learning is focused on discovering interesting relations or associations among a set of items in large datasets. It's a rule-based machine learning method, widely used in market basket analysis.
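
Association rules are usually scored with support (how often an itemset appears) and confidence (how often the rule holds when its left side is present). The sketch below computes both for item pairs on a made-up list of shopping baskets; the helper names and thresholds are assumptions, and real algorithms such as Apriori search larger itemsets far more efficiently.

```python
from itertools import combinations

# Hypothetical market baskets.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

def support(itemset):
    """Fraction of transactions that contain every item in the itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent):
    """Of the transactions containing the antecedent, the share that also contain the consequent."""
    return support(antecedent | consequent) / support(antecedent)

def pair_rules(min_support=0.4, min_confidence=0.7):
    """List single-item rules lhs -> rhs that pass both thresholds."""
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        for lhs, rhs in ((a, b), (b, a)):
            s = support({lhs, rhs})
            if s >= min_support:
                c = confidence({lhs}, {rhs})
                if c >= min_confidence:
                    rules.append((lhs, rhs, s, c))
    return rules

for lhs, rhs, s, c in pair_rules():
    print(f"{lhs} -> {rhs}: support={s:.2f}, confidence={c:.2f}")
```

In this toy data every basket containing beer also contains diapers, so the rule from beer to diapers has a confidence of 1, while the reverse rule is weaker.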

Autoencoders

Autoencoders are a type of neural network used for learning efficient codings of input data. They are valuable for dimensionality reduction and noise reduction, and they also serve as building blocks in generative models.
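
To show the encode-then-reconstruct idea in its simplest form, here is a sketch of a tiny linear autoencoder trained with plain gradient descent in NumPy. It is a deliberate simplification: practical autoencoders use nonlinear layers and a deep learning framework, and the data, layer size, learning rate, and step count below are all assumptions picked for this toy case.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: 3 features that mostly follow one hidden factor, plus small noise.
t = rng.normal(size=(200, 1))
X = t @ np.array([[1.0, 2.0, -1.0]]) + 0.05 * rng.normal(size=(200, 3))

n, d = X.shape
hidden = 1  # bottleneck size: compress 3 features into 1 code value
W_enc = 0.1 * rng.normal(size=(d, hidden))  # encoder weights
W_dec = 0.1 * rng.normal(size=(hidden, d))  # decoder weights

def reconstruction_loss(X, W_enc, W_dec):
    """Mean squared error between the input and its reconstruction."""
    return float(np.mean((X @ W_enc @ W_dec - X) ** 2))

initial_loss = reconstruction_loss(X, W_enc, W_dec)

lr = 0.05
for _ in range(1000):
    Z = X @ W_enc        # encode: project the input into the bottleneck
    X_hat = Z @ W_dec    # decode: reconstruct the input from the code
    # Gradient of the mean squared error with respect to X_hat.
    G = 2.0 * (X_hat - X) / (n * d)
    grad_dec = Z.T @ G
    grad_enc = X.T @ (G @ W_dec.T)
    W_enc -= lr * grad_enc
    W_dec -= lr * grad_dec

final_loss = reconstruction_loss(X, W_enc, W_dec)
print(initial_loss, final_loss)
```

The only training signal is the input itself: the network is rewarded for reproducing its input after squeezing it through the bottleneck, which is why no labels are needed.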

By leveraging these unsupervised learning methods, data scientists can uncover hidden patterns and structures within data, work with high-dimensional data efficiently, detect anomalies, and much more.

Challenges in Unsupervised Learning

In this section, we'll go through some of the hurdles often faced while employing unsupervised learning methods in machine learning.

Lack of Labels

Without labels to guide the learning process, it is harder to assess a model's accuracy or the quality of the clusters or reduced dimensions it produces.

High Dimensionality

High dimensionality can cause considerable problems in unsupervised learning. As the number of features grows, distances between points become less informative, and both the risk of fitting noise and the computational cost increase.

Determining Optimal Number of Clusters

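In clustering problems, determining the right number of clusters is often subjective and can be a major challenge, since it directly influences the quality of outcomes. Heuristics such as the elbow method or silhouette analysis can guide the choice, but they do not guarantee a single correct answer.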

Noise and Outliers

Noisy data and outliers can significantly distort the structure of the data, impacting the results of unsupervised learning algorithms.

Interpretability

Unsupervised learning models can sometimes result in complex representations which may be challenging to comprehend and interpret.

Evaluation and Performance Metrics

Understanding the efficiency, accuracy, and overall performance of unsupervised learning methods can be quite challenging. In this section, we'll delve into the key performance metrics used for evaluating unsupervised learning models.

Silhouette Coefficient

The Silhouette Coefficient measures the similarity of an object to its own cluster compared to other clusters. The values range between -1 and 1. A higher Silhouette Coefficient indicates that the object is well-matched to its own cluster and poorly matched to neighboring clusters.
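
For a point i, the coefficient is (b - a) / max(a, b), where a is the mean distance to the other points in its own cluster and b is the smallest mean distance to the points of any other cluster. The sketch below applies that definition with Euclidean distance; the function name `silhouette_scores` and the four toy points are assumptions for illustration.

```python
import numpy as np

def silhouette_scores(X, labels):
    """Silhouette coefficient of every point, using Euclidean distance."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    scores = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same == 1:
            continue  # common convention: a singleton cluster scores 0
        # a: mean distance to the other members of the point's own cluster.
        a = dists[i, same].sum() / (n_same - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            dists[i, labels == c].mean()
            for c in np.unique(labels) if c != labels[i]
        )
        scores[i] = (b - a) / max(a, b)
    return scores

# Four toy points forming two tight pairs that are far apart.
X = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
good = silhouette_scores(X, [0, 0, 1, 1])  # pairs kept together
bad = silhouette_scores(X, [0, 1, 0, 1])   # pairs split apart
print(good.mean(), bad.mean())
```

Averaging the per-point values gives a single score for the whole clustering, which makes it easy to compare two candidate groupings of the same data.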

Dunn Index

The Dunn Index is a metric for evaluating the quality of clustering. It computes the ratio between the smallest distance among clusters and the biggest intra-cluster distance. The higher the Dunn Index, the better the clustering result.
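
A direct translation of this ratio into code might look like the sketch below. It assumes Euclidean distance, measures cluster separation by the closest pair of points, and measures cluster size by the farthest pair; other variants of the index exist. The function name `dunn_index` and the toy points are made up for this example.

```python
import numpy as np

def dunn_index(X, labels):
    """Smallest between-cluster distance divided by the largest cluster diameter."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    clusters = np.unique(labels)
    # Largest distance between two points that share a cluster.
    max_diameter = max(
        dists[np.ix_(labels == c, labels == c)].max() for c in clusters
    )
    # Smallest distance between two points from different clusters.
    min_separation = min(
        dists[np.ix_(labels == a, labels == b)].min()
        for i, a in enumerate(clusters)
        for b in clusters[i + 1:]
    )
    return min_separation / max_diameter

# Four toy points forming two tight pairs that are far apart.
X = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
good = dunn_index(X, [0, 0, 1, 1])  # pairs kept together
bad = dunn_index(X, [0, 1, 0, 1])   # pairs split apart
print(good, bad)
```

Because the index depends on a single closest pair and a single farthest pair, it can be sensitive to outliers, which is worth keeping in mind when comparing results.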

Davies–Bouldin Index

The Davies–Bouldin Index quantifies the average 'similarity' between each cluster and the cluster most similar to it. Here, 'similarity' is a measure that compares the size of the clusters themselves with the distance between them. A lower Davies–Bouldin Index indicates a better partition.
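
As a sketch of the usual formulation, the code below measures each cluster's size as the mean distance of its points to the centroid, divides the combined size of two clusters by the distance between their centroids, and averages the worst such ratio over all clusters. The function name `davies_bouldin` and the toy points are assumptions for illustration.

```python
import numpy as np

def davies_bouldin(X, labels):
    """Average, over clusters, of the worst (size_i + size_j) / centroid distance."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    clusters = np.unique(labels)
    centroids = np.array([X[labels == c].mean(axis=0) for c in clusters])
    # Scatter: mean distance from each cluster's points to its centroid.
    scatter = np.array([
        np.linalg.norm(X[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(clusters)
    ])
    k = len(clusters)
    worst = []
    for i in range(k):
        ratios = [
            (scatter[i] + scatter[j]) / np.linalg.norm(centroids[i] - centroids[j])
            for j in range(k) if j != i
        ]
        worst.append(max(ratios))  # the neighbor this cluster is most confused with
    return float(np.mean(worst))

# Four toy points forming two tight pairs that are far apart.
X = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
good = davies_bouldin(X, [0, 0, 1, 1])  # pairs kept together
bad = davies_bouldin(X, [0, 1, 0, 1])   # pairs split apart
print(good, bad)
```

Unlike the silhouette and Dunn scores, smaller is better here: compact clusters with distant centroids push the ratio toward zero.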

Rand Index

The Rand Index computes the similarity between two data clusterings. The index ranges from 0 to 1, with 0 indicating that the two data clusterings do not agree on any pair of points, and 1 indicating that the data clusterings are exactly the same.
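
The definition can be checked pair by pair: two clusterings agree on a pair of points when both put the pair in the same cluster, or both put it in different clusters. The sketch below counts those agreements; the function name `rand_index` and the example label lists are hypothetical.

```python
from itertools import combinations

def rand_index(labels_a, labels_b):
    """Fraction of point pairs on which two clusterings agree."""
    agree = 0
    total = 0
    for i, j in combinations(range(len(labels_a)), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        # Agreement: both clusterings group the pair, or both separate it.
        agree += same_a == same_b
        total += 1
    return agree / total

reference = [0, 0, 1, 1]
renamed = [1, 1, 0, 0]    # same grouping, only the cluster names differ
different = [0, 1, 0, 1]  # a genuinely different grouping

print(rand_index(reference, renamed))
print(rand_index(reference, different))
```

Since only pair memberships are compared, renaming the clusters does not change the score. In practice the index is often used to compare a clustering against known reference groups when those happen to be available.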

Mutual Information

Mutual Information measures how much two cluster assignments of the same data agree, regardless of how the cluster labels are named or permuted. More generally, it quantifies how much knowing one random variable tells you about another when the two are sampled simultaneously.
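
For two label lists, the quantity can be computed from counts as the sum over label pairs of p(a, b) * log(p(a, b) / (p(a) * p(b))). The sketch below does this with the natural logarithm and without any normalization; the function name `mutual_information` and the example labels are assumptions for illustration.

```python
import math
from collections import Counter

def mutual_information(labels_a, labels_b):
    """Mutual information (in nats) between two label assignments."""
    n = len(labels_a)
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    count_ab = Counter(zip(labels_a, labels_b))
    mi = 0.0
    for (a, b), n_ab in count_ab.items():
        # p(a, b) * log( p(a, b) / (p(a) * p(b)) ), written in terms of counts.
        mi += (n_ab / n) * math.log(n * n_ab / (count_a[a] * count_b[b]))
    return mi

reference = [0, 0, 1, 1]
renamed = [1, 1, 0, 0]      # same grouping with swapped names
unrelated = [0, 1, 0, 1]    # knowing one label says nothing about the other

print(mutual_information(reference, renamed))
print(mutual_information(reference, unrelated))
```

The raw value depends on the number and size of the clusters, so normalized or chance-adjusted variants are often preferred when comparing results across datasets.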

These are some key metrics used to evaluate the performance of unsupervised learning models. Choosing the right metric entirely depends on the problem you're trying to solve, the nature of your data, and the specific goals of your machine learning project.

Frequently Asked Questions (FAQs)

What is Unsupervised Learning?

Unsupervised learning is a type of machine learning where algorithms learn patterns from data without explicit instructions or labeled data.

How does Unsupervised Learning work?

Unsupervised learning algorithms analyze raw data, identify patterns, group similar data points, or find anomalies without prior knowledge of the output.

What are the types of Unsupervised Learning?

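The main types of unsupervised learning are Clustering (grouping similar data points), Dimensionality Reduction (reducing data attributes), Anomaly Detection (identifying outliers), and Association Rule Learning (finding items that occur together). Autoencoders are a neural network approach that supports several of these tasks.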

What are the challenges in Unsupervised Learning?

Challenges in unsupervised learning include determining the correct number of clusters, lack of labeled data for evaluation, and sensitivity to data preprocessing.

What are some practical tips for successful Unsupervised Learning?

Practical tips include thorough data preprocessing, selecting relevant features, and conducting exploratory data analysis before applying unsupervised learning techniques.