What are Recurrent Neural Networks (RNN)?

Recurrent Neural Networks (RNNs) are a type of artificial neural network architecture designed to handle sequential data.

Unlike traditional feedforward neural networks, RNNs maintain an internal state that acts as memory, which helps them recognize patterns in sequences of information. This makes them suitable for tasks such as natural language processing, speech recognition, and time series prediction.

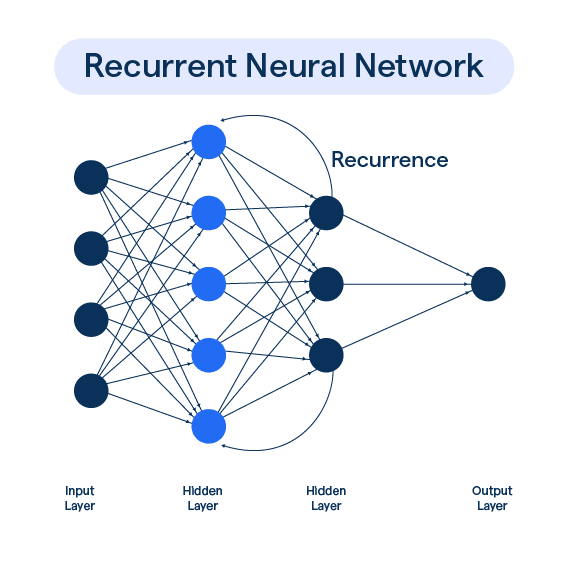

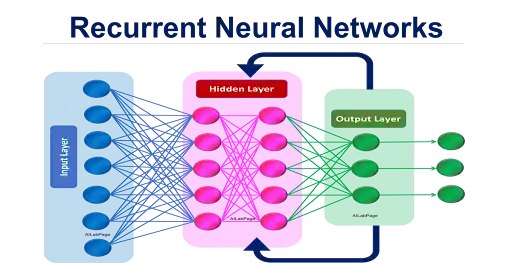

RNNs are organized into layers of interconnected nodes, or neurons. In a simple RNN, the hidden state a layer produces at one time step is fed back into the same layer as an input at the next time step.

This feedback loop lets an RNN carry internal state between time steps, so earlier inputs can influence later outputs. As a result, RNNs can capture temporal dependencies and recognize patterns across time.

How Does a Recurrent Neural Network (RNN) Work?

RNNs have a unique architecture that allows them to process sequences of data. Let's take a closer look at the essential components of an RNN and how it processes data.

Understanding the Architecture of RNN

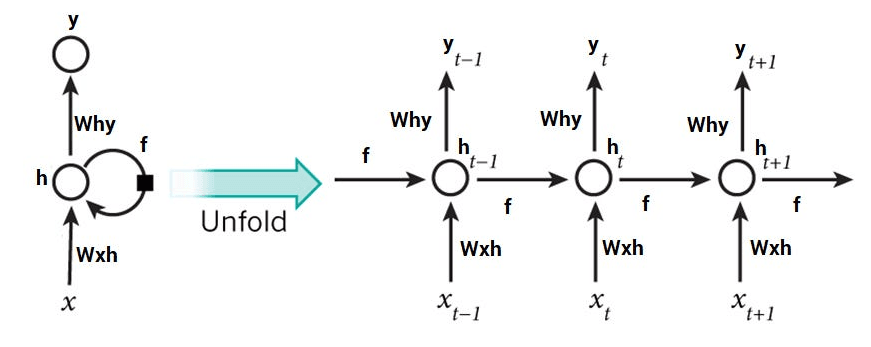

An RNN's architecture is characterized by recurrent connections, which feed the network's previous hidden state back in as input for the next time step.

This creates an internal state or memory that stores information from previous steps.
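One common way to write this recurrence is shown below. The tanh activation and the symbol names ($x_t$ for the input, $h_t$ for the hidden state, $y_t$ for the output, $W$ and $b$ for weights and biases) are assumptions chosen for illustration; the text above does not fix a notation.

```latex
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad
y_t = W_{hy}\, h_t + b_y
```

The term $W_{hh}\, h_{t-1}$ is the recurrent connection: it is the only path by which information from earlier steps reaches step $t$.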

Recurrent Connections and Memory Cells in RNN

Memory cells are at the heart of an RNN's computation.

Each cell takes the current input together with the state passed on from the previous time step, updates that state, and passes it forward. This is how the network keeps track of earlier information.

Forward Propagation in RNN

Forward propagation in an RNN computes an output at each time step. As the network processes the input sequence, its internal state is updated at every step, and that state contributes to the output at that step.
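The forward pass can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the tanh activation, the zero initial state, the function name `rnn_forward`, and all weight names and sizes are assumptions made for the example.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a simple tanh RNN over a sequence.

    Returns the output and the hidden state at every time step.
    """
    h = np.zeros(W_hh.shape[0])  # initial hidden state (assumed to be zero)
    hidden_states, outputs = [], []
    for x in xs:
        # The new state depends on the current input and the previous state.
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        hidden_states.append(h)
        outputs.append(W_hy @ h + b_y)
    return np.array(outputs), np.array(hidden_states)

# Hypothetical sizes and randomly initialized weights, for illustration only.
rng = np.random.default_rng(0)
n_in, n_hidden, n_out, seq_len = 3, 4, 2, 5
W_xh = rng.normal(scale=0.1, size=(n_hidden, n_in))
W_hh = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
W_hy = rng.normal(scale=0.1, size=(n_out, n_hidden))
b_h, b_y = np.zeros(n_hidden), np.zeros(n_out)

xs = rng.normal(size=(seq_len, n_in))
ys, hs = rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y)
print(ys.shape, hs.shape)  # (5, 2) (5, 4)
```

Note that the same weight matrices are reused at every step; only the hidden state `h` changes as the sequence is consumed.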

Training then relies on backpropagation through time (BPTT), which determines how much the state at each earlier time step contributed to the error at later steps.

Backpropagation Through Time (BPTT)

Backpropagation Through Time (BPTT) unrolls the network across the sequence and propagates the partial derivatives of the loss backward, from the last time step to the first.

The resulting gradients are used to adjust the weights of the network during the training phase.
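To make the backward pass concrete, here is a hedged NumPy sketch of BPTT for a small, bias-free tanh RNN. The setup is an assumption chosen to keep the example short: the loss is the squared error between the final hidden state and a target vector, and the names `forward`, `loss_fn`, and `bptt` are hypothetical.

```python
import numpy as np

def forward(xs, W_xh, W_hh):
    """Return hidden states h_0..h_T, where h_0 is all zeros."""
    hs = [np.zeros(W_hh.shape[0])]
    for x in xs:
        hs.append(np.tanh(W_xh @ x + W_hh @ hs[-1]))
    return hs

def loss_fn(xs, target, W_xh, W_hh):
    """Squared error between the final hidden state and the target."""
    h_last = forward(xs, W_xh, W_hh)[-1]
    return 0.5 * np.sum((h_last - target) ** 2)

def bptt(xs, target, W_xh, W_hh):
    """Gradients of the loss with respect to both weight matrices."""
    hs = forward(xs, W_xh, W_hh)
    dW_xh = np.zeros_like(W_xh)
    dW_hh = np.zeros_like(W_hh)
    dh = hs[-1] - target                 # dL/dh_T
    for t in range(len(xs), 0, -1):      # walk backward through time
        dz = dh * (1.0 - hs[t] ** 2)     # back through tanh
        dW_xh += np.outer(dz, xs[t - 1]) # same weights at every step, so sum
        dW_hh += np.outer(dz, hs[t - 1])
        dh = W_hh.T @ dz                 # pass the gradient to h_{t-1}
    return dW_xh, dW_hh

rng = np.random.default_rng(0)
xs = rng.normal(size=(6, 3))
target = rng.uniform(-0.5, 0.5, size=4)
W_xh = rng.normal(scale=0.5, size=(4, 3))
W_hh = rng.normal(scale=0.5, size=(4, 4))

dW_xh, dW_hh = bptt(xs, target, W_xh, W_hh)

# One plain gradient-descent update (the learning rate is an arbitrary choice).
learning_rate = 0.01
W_xh_new = W_xh - learning_rate * dW_xh
W_hh_new = W_hh - learning_rate * dW_hh
```

Because the weights are shared across time steps, each step's contribution is accumulated into the same gradient matrices before the update is applied.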

Vanishing and Exploding Gradient Problems in RNN

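The vanishing gradient problem arises because BPTT multiplies the gradient by the recurrent weights (and the activation's derivative) once per time step. When those factors are small, the values being pushed back shrink to almost zero, so early time steps receive almost no learning signal.

Conversely, the exploding gradient problem occurs when the recurrent weights are too large: the repeated multiplication makes the gradient grow exponentially, which can overflow and destabilize training.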
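The effect of repeated multiplication can be seen in a small experiment. The sketch below makes two simplifying assumptions: it ignores the activation function (a linear RNN), and it builds the recurrent matrix as `scale` times an orthogonal matrix so that each backward step changes the gradient norm by exactly `scale`. The function name `backprop_norms` is hypothetical.

```python
import numpy as np

def backprop_norms(scale, steps=50, size=8, seed=0):
    """Gradient norm after each backward step through a linear RNN."""
    rng = np.random.default_rng(seed)
    # QR decomposition of a random matrix gives an orthogonal matrix q.
    q, _ = np.linalg.qr(rng.normal(size=(size, size)))
    W_hh = scale * q
    grad = np.ones(size) / np.sqrt(size)  # unit-norm starting gradient
    norms = []
    for _ in range(steps):
        grad = W_hh.T @ grad              # one step back in time
        norms.append(np.linalg.norm(grad))
    return norms

small = backprop_norms(scale=0.5)
large = backprop_norms(scale=1.5)
print(f"scale 0.5 after 50 steps: {small[-1]:.2e}")  # shrinks toward zero
print(f"scale 1.5 after 50 steps: {large[-1]:.2e}")  # grows very large
```

In a real RNN the per-step factor also depends on the activation's derivative and varies by direction, but the exponential trend over many steps is the same.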

Applications of Recurrent Neural Network (RNN)

Common applications of recurrent neural networks (RNNs) include:

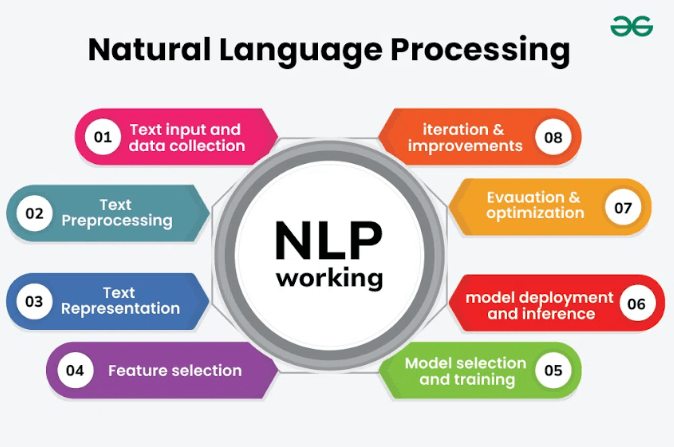

Natural Language Processing (NLP)

NLP is a field in which RNNs are used to model and interpret natural language.

It involves analyzing sentence structure, identifying parts of speech, and then deriving meaning from the sentences.

Speech Recognition

Speech recognition is an application of RNNs that involves speech-to-text conversion.

The network is trained using audio and text data, making it possible for the network to recognize spoken words and convert them to text.

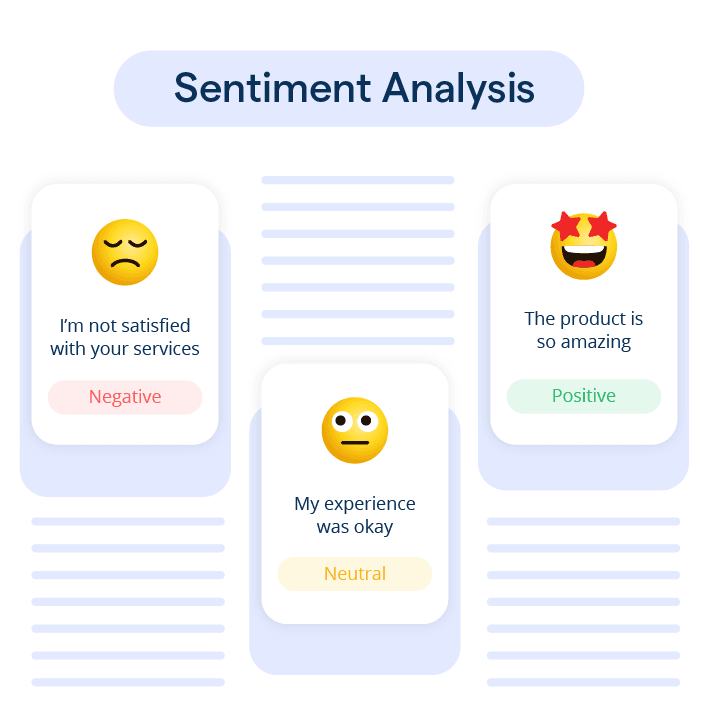

Sentiment Analysis

Sentiment analysis is an application of RNNs that analyzes text to determine whether it expresses a positive, negative, or neutral sentiment.

RNNs are particularly useful here because they read words in order, so context such as a negation ("not good") can change the final prediction.

Machine Translation

Machine translation is an application of RNNs that translates text from one language into another, in some systems in real time.

The network is exposed to a significant number of sentences in multiple languages, enabling the network to learn about grammar, sentence structure, and meaning of sentences.

Handwriting Recognition

Handwriting recognition is an application of RNNs that involves recognizing handwritten text and converting it to digital text.

RNNs are particularly useful for this application because handwriting unfolds as a sequence of strokes and characters, and RNNs can recognize complex patterns across such sequences.

Advantages and Limitations of Recurrent Neural Network (RNN)

- Advantages of RNN: One of the primary advantages of RNNs is their ability to process sequential data, making them well suited to time-series analysis, natural language processing, and speech recognition.

- Limitations of RNN: One of the primary limitations of RNNs is the vanishing gradient problem, which occurs during backpropagation when the gradient approaches zero. The exploding gradient problem, where the gradient grows without bound, also poses a challenge.

- Overfitting in RNN and Solutions: Overfitting can be a problem when training the network. Regularization methods such as dropout or weight decay, along with early stopping, can reduce it.

Types of Recurrent Neural Network (RNN)

There are different types of recurrent neural networks:

Basic Recurrent Neural Network

A basic RNN is the simplest form of a recurrent neural network, with the looped connections between neurons achieving the recurrence.

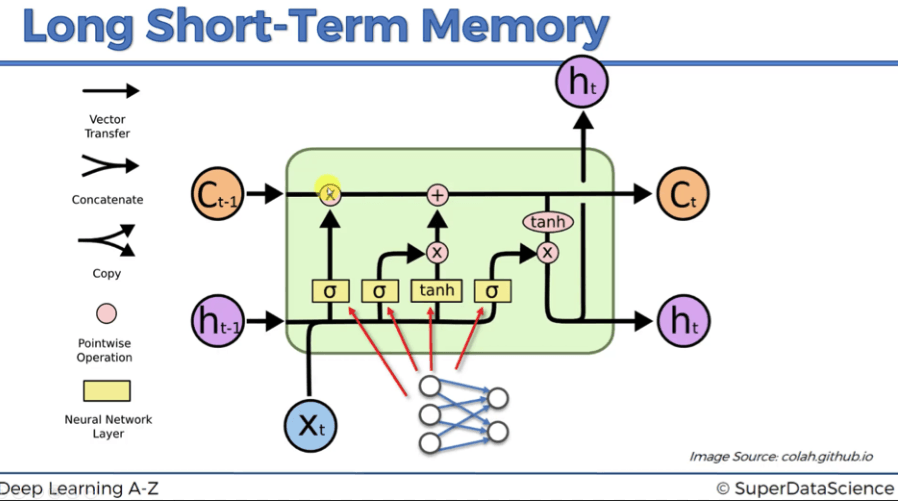

Long Short-Term Memory (LSTM) Network

An LSTM network is a more complex form of recurrent neural network that mitigates the vanishing gradient problem.

It is built around input, forget, and output gates that regulate the flow of information into, within, and out of a memory cell while the sequence is processed.
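A single LSTM time step can be sketched as follows. This follows the commonly used formulation with a separate cell state; the stacked weight layout, the gate ordering inside `W`, and the names `lstm_step` and `sigmoid` are assumptions made for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, input + hidden) and b has shape (4 * hidden,).
    The four row blocks are, in order: input gate, forget gate,
    output gate, and candidate cell values.
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0 * n:1 * n])   # input gate: how much new information to write
    f = sigmoid(z[1 * n:2 * n])   # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])   # output gate: how much of the cell to expose
    g = np.tanh(z[3 * n:4 * n])   # candidate values to write into the cell
    c = f * c_prev + i * g        # additive cell update
    h = o * np.tanh(c)
    return h, c

# Hypothetical sizes, for illustration only.
rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_in + n_hidden))
b = np.zeros(4 * n_hidden)

h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x in rng.normal(size=(5, n_in)):
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

The additive update `c = f * c_prev + i * g` is the key design choice: when the forget gate is close to 1, the cell state passes through a step almost unchanged, which gives gradients a path that does not shrink at every step.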

Gated Recurrent Unit (GRU) Network

The GRU is a simplified version of the LSTM architecture that addresses the same problem at a lower computational cost and with fewer parameters.
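For comparison, a GRU step can be sketched with two gates and no separate cell state. Conventions differ between references on whether the update gate weights the old or the new state; the version below, along with the names `gru_step` and `gated_param_count`, is one assumed choice for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W_z, W_r, W_h, b_z, b_r, b_h):
    """One GRU time step. Each W has shape (hidden, input + hidden)."""
    xh = np.concatenate([x, h_prev])
    z = sigmoid(W_z @ xh + b_z)   # update gate: how much to replace the state
    r = sigmoid(W_r @ xh + b_r)   # reset gate: how much old state feeds the candidate
    h_candidate = np.tanh(W_h @ np.concatenate([x, r * h_prev]) + b_h)
    return (1.0 - z) * h_prev + z * h_candidate

def gated_param_count(n_in, n_hidden, n_blocks):
    """Parameters in n_blocks weight matrices plus their bias vectors."""
    return n_blocks * (n_hidden * (n_in + n_hidden) + n_hidden)

n_in, n_hidden = 3, 4
rng = np.random.default_rng(0)
W_z, W_r, W_h = (rng.normal(scale=0.1, size=(n_hidden, n_in + n_hidden))
                 for _ in range(3))
b_z, b_r, b_h = (np.zeros(n_hidden) for _ in range(3))

h = np.zeros(n_hidden)
for x in rng.normal(size=(5, n_in)):
    h = gru_step(x, h, W_z, W_r, W_h, b_z, b_r, b_h)

# A GRU needs 3 weight blocks where an LSTM needs 4.
print(gated_param_count(n_in, n_hidden, 3))  # 96
print(gated_param_count(n_in, n_hidden, 4))  # 128
```

With the same input and hidden sizes, the GRU therefore has three quarters of the LSTM's recurrent-layer parameters, which is where the lower cost comes from.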

Training and Fine-Tuning Recurrent Neural Network (RNN)

- Preprocessing Data for RNN: Preprocessing data for an RNN involves converting raw data into numeric sequences of a consistent shape, such as windowed time-series data or tokenized sentences.

- Training RNN Models: Training an RNN involves providing the network with inputs, iterating over the inputs to compute an output, and using this output to optimize the network's parameters.

- Regularization Techniques for RNN: Standard regularization techniques like dropout are applied in RNNs to prevent overfitting.

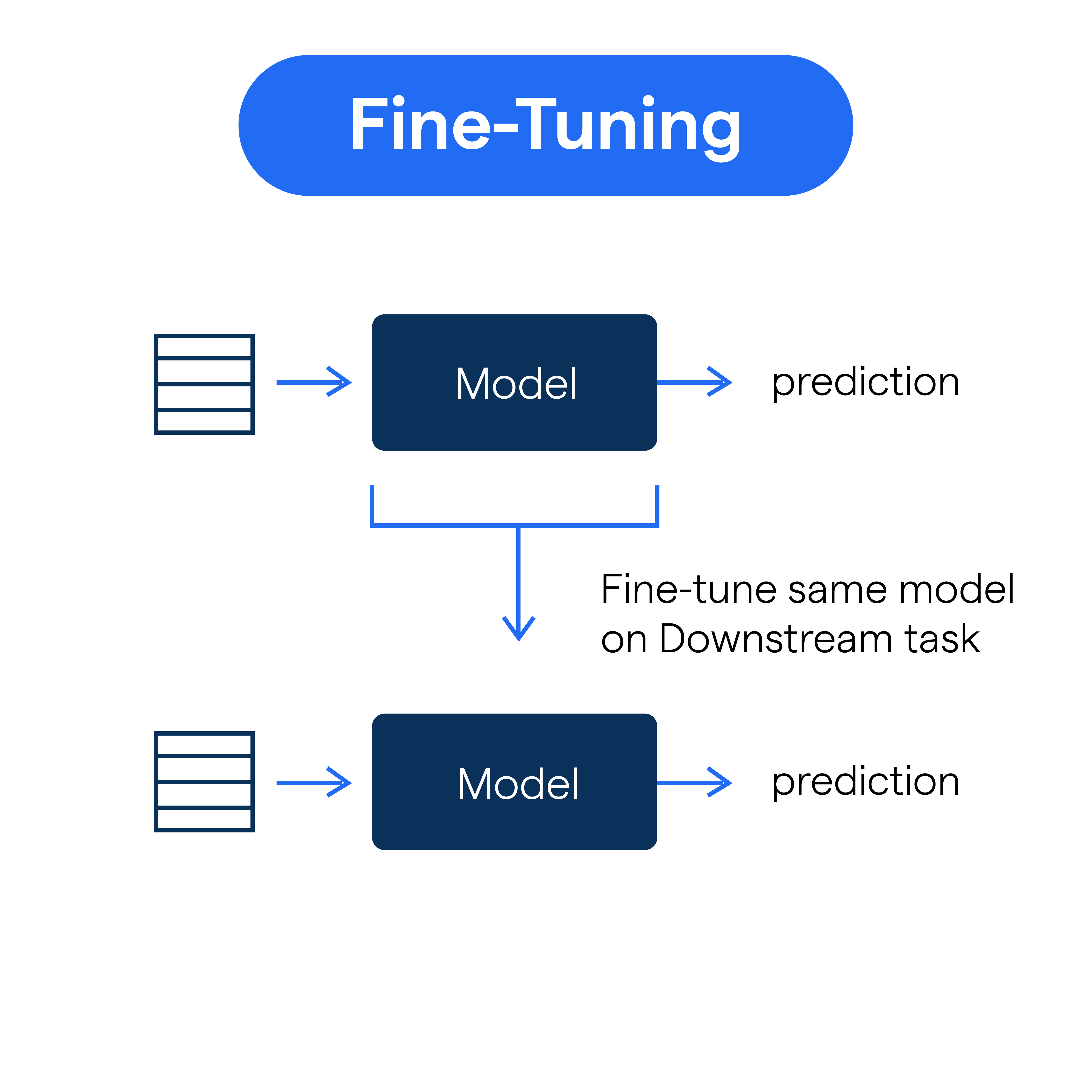

- Fine-Tuning RNN Models: Fine-tuning RNN involves modifying the network's hyperparameters to improve its performance after initial training.
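As one illustration of the preprocessing step listed above, a time series can be cut into fixed-length windows, each paired with the value that follows it. The helper name `make_windows` and the next-step prediction setup are assumptions for this sketch.

```python
import numpy as np

def make_windows(series, window):
    """Turn a 1-D series into (inputs, targets) for next-step prediction.

    Inputs have shape (samples, window, 1), the (batch, time, features)
    layout that many RNN libraries expect.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - window
    X = np.stack([series[i:i + window] for i in range(n_samples)])
    y = series[window:]
    return X[..., np.newaxis], y

series = np.arange(10)
X, y = make_windows(series, window=3)
print(X.shape, y.shape)     # (7, 3, 1) (7,)
print(X[0].ravel(), y[0])   # [0. 1. 2.] 3.0
```

Each row of `X` is a short sequence the network reads step by step, and the matching entry of `y` is the value it should predict next.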

RNN vs Other Machine Learning Algorithms

- Comparison of RNN with Feedforward Neural Networks: Both RNNs and feedforward networks are built from layers of weighted units, but an RNN processes its input one step at a time and carries state between steps, which makes it better suited to sequential data than a feedforward network.

- Comparison of RNN with Convolutional Neural Networks (CNN): CNNs are mainly used for computer vision and image processing, while the strength of RNNs is in handling sequential data, as in time-series analysis, text processing, and speech recognition.

- Comparison of RNN with Support Vector Machines (SVM): RNN is better suited for handling sequential data, while SVM models are mainly used in structured data, classification problems, and regression problems.

Challenges and Future Directions of Recurrent Neural Network (RNN)

- Challenges in RNN Training and Optimization: Training an RNN is computationally expensive and time-intensive. Time steps must be processed in order, which limits parallelism, and results are sensitive to architectural choices and hyperparameters.

- Exploration of Hybrid RNN Architectures: There are still opportunities to explore hybrid architectures involving various types of neural networks to create more sophisticated and robust systems.

- RNN and Attention Mechanisms: Attention mechanisms can improve the performance of RNNs by identifying the parts of the input sequence that are most relevant to the current output and placing more weight on them.

- Advancements in Hardware for RNN: With the growth of artificial intelligence, hardware such as graphics processing units (GPUs) and tensor processing units (TPUs) is becoming prevalent, making RNNs faster and easier to use in practical applications.
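The idea of placing more weight on relevant parts of the input, mentioned in the attention item above, can be sketched with simple dot-product attention over an RNN's hidden states. The scoring function, the name `attention`, and the toy values are assumptions for illustration; other scoring schemes exist.

```python
import numpy as np

def attention(hidden_states, query):
    """Dot-product attention over per-step hidden states.

    hidden_states: array of shape (time, hidden)
    query:         array of shape (hidden,)
    Returns the weighted summary (context) and the per-step weights.
    """
    scores = hidden_states @ query      # one relevance score per time step
    scores = scores - scores.max()      # subtract the max for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax
    context = weights @ hidden_states   # weighted average of the states
    return context, weights

# Toy hidden states for a 3-step sequence (hypothetical values).
hidden_states = np.array([[1.0, 0.0],
                          [0.0, 1.0],
                          [0.5, 0.5]])
query = np.array([2.0, 0.0])

context, weights = attention(hidden_states, query)
print(weights.argmax())  # 0
```

The first time step gets the largest weight because its hidden state is most aligned with the query, so it contributes most to the context vector.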

Thank you for reading our glossary on Recurrent Neural Network. We hope that you have gained a better understanding of RNN and its advantages, limitations, and various applications.

Frequently Asked Questions (FAQs)

How does a recurrent neural network (RNN) differ from other neural networks?

RNNs have feedback connections that allow them to process sequential data like time series or language.

This helps them capture patterns over time, making them ideal for tasks like speech recognition or text generation.

What is the basic structure of a recurrent neural network?

A basic RNN consists of an input layer, one or more recurrent hidden layers, and an output layer. Each recurrent layer maintains a hidden state, or memory, that is updated at each time step and influences the prediction made at that step. This lets the network take earlier inputs into account when handling sequential data.

What are the challenges in training recurrent neural networks?

Training RNNs can be tricky because of vanishing and exploding gradients. When the gradients become too small or too large, it becomes difficult for the network to learn.

Techniques like gradient clipping, which caps the gradient's magnitude, help with exploding gradients, and gated architectures such as LSTM and GRU help with vanishing gradients.
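Gradient clipping is simple enough to sketch directly. The version below rescales all gradients together when their combined norm exceeds a threshold; the function name `clip_by_global_norm` and the threshold value are assumptions for this example.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm
    does not exceed max_norm. Returns the new list and the original norm."""
    total_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    if total_norm > max_norm:
        scale = max_norm / total_norm
        grads = [g * scale for g in grads]
    return grads, total_norm

grads = [np.array([3.0, 4.0])]            # combined norm is 5.0
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before)   # 5.0
print(clipped[0])    # [0.6 0.8]
```

Clipping keeps the direction of the update and only limits its size, so a single oversized gradient cannot throw the weights far off course.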

How can recurrent neural networks be used in natural language processing?

RNNs can model the sequential dependencies in text, making them suitable for tasks like language translation, sentiment analysis, or text classification.

They can learn to generate text by conditioning the output on previous words.

Can recurrent neural networks handle long-term dependencies?

Standard RNNs struggle to capture long-term dependencies due to the vanishing gradient problem.

However, variants like long short-term memory (LSTM) and gated recurrent unit (GRU) have been developed to address this issue and can effectively handle long-term dependencies.