Mistakes are often seen as failures. But in machine learning, mistakes are gold. They are opportunities to improve.

In fact, a study found that over 80% of the improvements in AI systems come from refining models through error analysis (source: "The Deep Learning Revolution," by Terrence J. Sejnowski).

Machines don’t just stumble; they learn. They analyze their errors and adapt accordingly. This powerful process is called backpropagation.

Backpropagation is essential for training neural networks. It allows these networks to adjust their internal settings based on feedback from their errors.

Think of it as a way for machines to refine their thinking. Instead of simply guessing, they make precise adjustments that lead to better results.

This glossary will explain what backpropagation is and how it works. By the end, you’ll understand how this method powers modern AI.

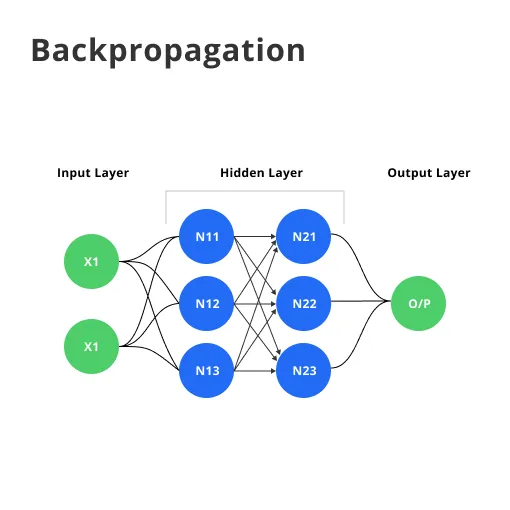

What is Backpropagation?

Backpropagation is the standard method for training neural networks. At its core, it helps the network learn by working out how much each weight contributed to the errors in its predictions, so that those weights can be adjusted.

Imagine a student taking a test. Each wrong answer reveals what they need to improve. This is similar to how backpropagation functions. It identifies where the network went wrong and guides it toward the right answers.

The process is built on the principle of supervised learning, where the model learns from labeled training data. According to a study by the Stanford University AI Lab, neural networks can achieve over 95% accuracy on specific tasks when trained effectively with backpropagation.

This makes it a cornerstone in applications ranging from image recognition to natural language processing. For instance, Google's image recognition system utilizes backpropagation to achieve its impressive accuracy rates, showcasing the method's effectiveness.

Role in Neural Networks

In a backpropagation neural network, understanding the error is essential for progress. By minimizing this error through a systematic approach, the network becomes better at predicting outcomes.

This iterative process allows the network to refine its predictions over time, ultimately leading to more accurate results.

For instance, in an image classification task, a neural network might initially misclassify a cat as a dog. The loss function measures this error, backpropagation traces it back through the network to compute how each weight contributed to it, and the network weights are then updated.

This makes the network more adept at distinguishing between the two categories in future attempts.

The power of backpropagation lies in its ability to provide feedback that directly informs how the network should adjust.

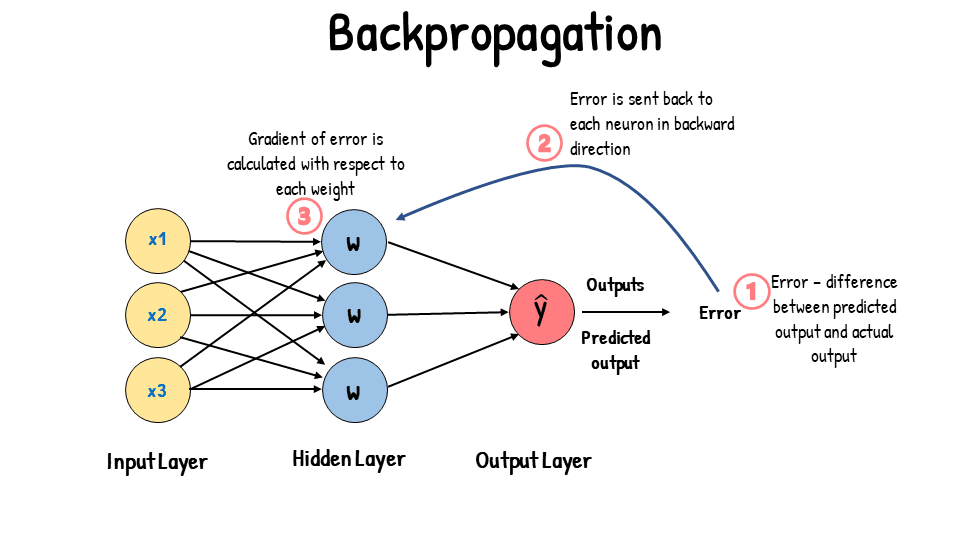

How Backpropagation Works

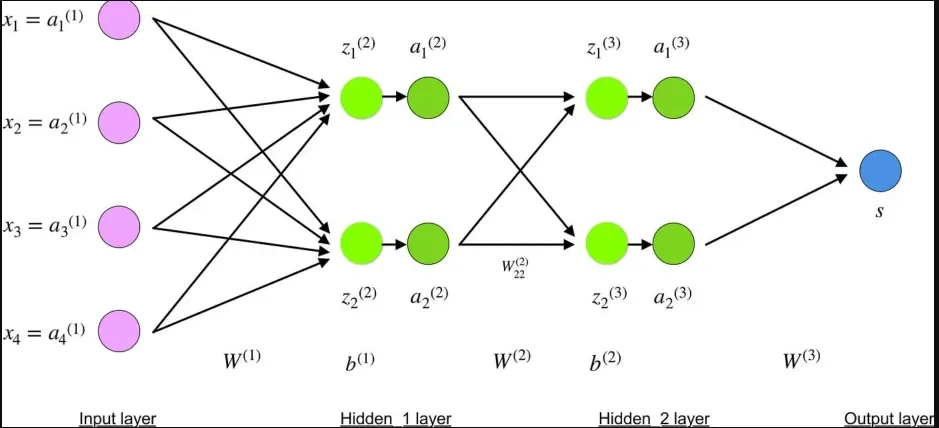

So, how does backpropagation work? It begins with a forward pass, where input data is processed through the layers of the network.

Each neuron computes a weighted sum of its inputs (usually plus a bias term) and passes it through an activation function to produce an output. The network's final output is then compared against the actual result, and the difference is used to calculate the loss.

For example, suppose the network predicts that an image is 80% likely to be a cat. If the actual label is a dog, the loss function quantifies the difference between these two values. This loss is crucial, as it informs the network about its performance.
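To put a number on this example, here is a minimal sketch that treats the task as a two-class (cat vs. dog) problem and assumes binary cross-entropy as the loss; the article does not name a specific loss for this example, and the function name is hypothetical. A confident wrong answer produces a much larger loss than a confident right one.

```python
import math

def binary_cross_entropy(p_cat: float, label_is_cat: bool) -> float:
    """Binary cross-entropy for one prediction.

    p_cat is the predicted probability that the image is a cat;
    label_is_cat is the true label (True for cat, False for dog).
    """
    eps = 1e-12  # keep the probability away from 0 and 1 so log() is defined
    p = min(max(p_cat, eps), 1 - eps)
    return -math.log(p) if label_is_cat else -math.log(1 - p)

# The example from the text: the network says "80% cat", but the image is a dog.
wrong = binary_cross_entropy(0.8, label_is_cat=False)
right = binary_cross_entropy(0.8, label_is_cat=True)
print(f"loss when the label is dog: {wrong:.4f}")  # prints 1.6094
print(f"loss when the label is cat: {right:.4f}")  # prints 0.2231
```

The larger loss for the wrong label is what produces larger gradients, and therefore larger corrections, in the backward pass.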

Key Steps

- Forward Pass: Input data flows through the network layers, resulting in a prediction. Each neuron contributes to the output based on its activation function. For example, with the sigmoid activation function, the output ranges between 0 and 1, which can represent probabilities.

- Loss Calculation: The difference between the predicted output and the actual output is measured. This difference is called the loss. Common loss functions include Mean Squared Error (MSE) for regression tasks and Cross-Entropy Loss for classification tasks. The choice of loss function can significantly impact the performance of the neural network.

- Backward Pass: The backpropagation algorithm then sends the error backward through the layers, using the chain rule to compute the gradient of the loss with respect to each weight. The gradient measures how much each weight contributed to the error: if a particular weight significantly impacted the output error, it will receive a larger adjustment than weights that had minimal impact.

- Weight Updates: Finally, weights are updated to minimize future errors, leading to improved predictions. This is often done using an optimization algorithm like Stochastic Gradient Descent (SGD) or Adam. By adjusting the weights based on the calculated gradients, the network learns incrementally.
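The four steps above can be sketched for the smallest possible case: a single sigmoid neuron with two inputs, trained on one example. This is a minimal illustration rather than a production implementation; the squared-error loss, the starting weights, the training example, and the learning rate are all assumptions chosen for readability, and the update is plain gradient descent rather than SGD over a dataset or Adam.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_step(w, b, x, y, lr):
    """One forward pass, loss calculation, backward pass, and weight update
    for a single sigmoid neuron with squared-error loss L = 0.5 * (a - y)^2."""
    # Forward pass: weighted sum of the inputs plus a bias, then the activation.
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    a = sigmoid(z)
    # Loss calculation (measured before this step's update).
    loss = 0.5 * (a - y) ** 2
    # Backward pass: chain rule, dL/dw_i = dL/da * da/dz * dz/dw_i.
    dL_da = a - y
    da_dz = a * (1.0 - a)              # derivative of the sigmoid
    delta = dL_da * da_dz              # dL/dz
    grad_w = [delta * xi for xi in x]  # dz/dw_i = x_i
    grad_b = delta                     # dz/db = 1
    # Weight update: plain gradient descent, w <- w - lr * dL/dw.
    new_w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    new_b = b - lr * grad_b
    return new_w, new_b, loss

# Hypothetical starting weights, training example, and learning rate.
w, b = [0.5, -0.3], 0.0
x, y = [1.0, 2.0], 1.0
lr = 0.5

losses = []
for _ in range(50):
    w, b, loss = train_step(w, b, x, y, lr)
    losses.append(loss)
print(f"loss at step 1: {losses[0]:.4f}, loss at step 50: {losses[-1]:.4f}")
```

Each call nudges every weight against its own gradient, so the recorded loss shrinks from one step to the next.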

Research suggests that the choice of optimizer also affects how quickly training converges. For example, optimizers with adaptive learning rates, such as Adam, scale the step size separately for each weight and can reportedly cut training time by as much as 30%, allowing models to reach high accuracy sooner than with plain SGD.

Mathematics Behind Backpropagation

The backpropagation equations are foundational to understanding how weights are updated. At its core, the algorithm uses derivatives to calculate the rate of change of the loss function with respect to each weight. These gradients are then used in the gradient descent update rule:

$$\Delta w = -\eta \, \frac{\partial L}{\partial w}$$

Here, Δw is the change in weight, η is the learning rate, and ∂L/∂w represents the gradient of the loss L with respect to the weight w. This formula allows the network to take small steps towards minimizing loss.
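As a quick worked example with made-up numbers, suppose the learning rate is $\eta = 0.1$, a weight currently has the value $w = 0.8$, and the gradient of the loss with respect to that weight is $\partial L / \partial w = 0.5$. The update rule then gives:

$$
\Delta w = -\eta \, \frac{\partial L}{\partial w} = -(0.1)(0.5) = -0.05,
\qquad
w_{\text{new}} = w + \Delta w = 0.8 - 0.05 = 0.75
$$

Because the gradient is positive, increasing $w$ would increase the loss, so the update moves $w$ down; a negative gradient would move it up.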

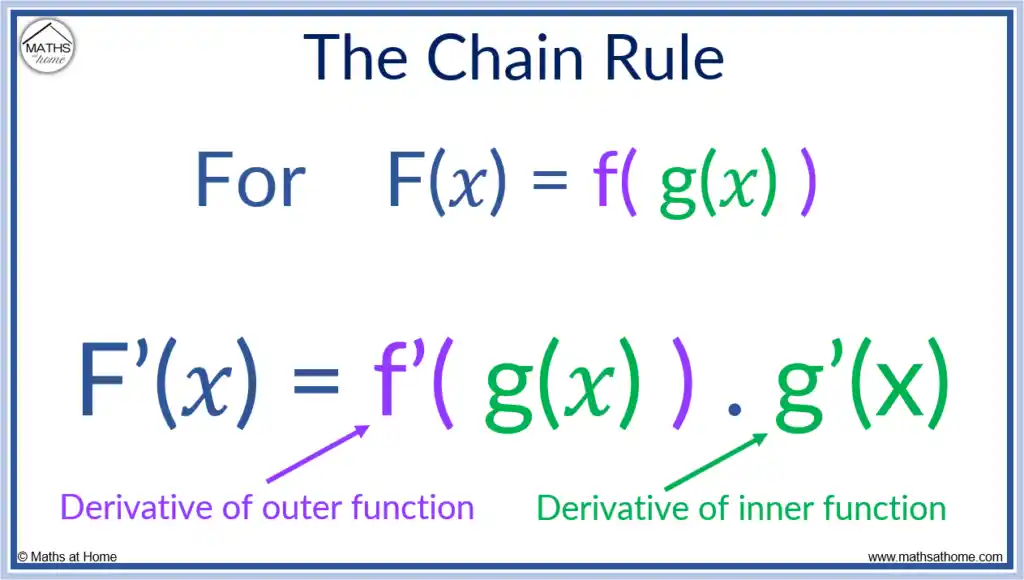

Explanation of the Chain Rule

The chain rule from calculus plays a crucial role here. It allows the algorithm to compute the gradients step-by-step as the error moves back through the layers.

Each weight's contribution to the overall error is effectively captured, enabling precise adjustments.

For instance, if we denote L as the loss, a as the activation output, and z as the weighted sum of inputs, we can express the derivatives as follows:

$$\frac{\partial L}{\partial w} = \frac{\partial L}{\partial a} \cdot \frac{\partial a}{\partial z} \cdot \frac{\partial z}{\partial w}$$

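This equation shows how a weight affects the loss through the weighted sum z and the activation a that it feeds into. In deeper networks, the same factorization is repeated layer by layer, allowing the network to backtrack and adjust accordingly.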

By systematically applying the chain rule, backpropagation ensures that every weight adjustment is based on its true impact on the output error.
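One way to convince yourself that the chain-rule product is correct is to compare it against a numerical estimate. The sketch below assumes a single sigmoid neuron with one weight, z = w·x, a = sigmoid(z), and a squared-error loss L = 0.5·(a − y)²; the input, target, and weight values are arbitrary. It multiplies the three factors ∂L/∂a, ∂a/∂z, and ∂z/∂w, then checks the result with a central finite difference.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_fn(w, x, y):
    """L = 0.5 * (a - y)^2 with a = sigmoid(z) and z = w * x."""
    a = sigmoid(w * x)
    return 0.5 * (a - y) ** 2

def grad_chain_rule(w, x, y):
    z = w * x
    a = sigmoid(z)
    dL_da = a - y            # derivative of 0.5 * (a - y)^2 with respect to a
    da_dz = a * (1.0 - a)    # derivative of the sigmoid with respect to z
    dz_dw = x                # derivative of w * x with respect to w
    return dL_da * da_dz * dz_dw

def grad_numerical(w, x, y, h=1e-6):
    # Central finite difference: an independent estimate of dL/dw.
    return (loss_fn(w + h, x, y) - loss_fn(w - h, x, y)) / (2 * h)

w, x, y = 0.7, 1.5, 0.0
analytic = grad_chain_rule(w, x, y)
numeric = grad_numerical(w, x, y)
print(f"chain rule: {analytic:.6f}, finite difference: {numeric:.6f}")
```

The two numbers should agree to several decimal places; this kind of "gradient check" is a common way to debug hand-written backward passes.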

Backpropagation in Different Neural Networks

In Convolutional Neural Networks (CNNs), backpropagation is used to learn the filters that detect features for image recognition. When a CNN misclassifies an image, backpropagation computes how each filter weight contributed to the error, and the filters are adjusted to improve future predictions.

For example, if a CNN misidentifies a cat as a dog, backpropagation will adjust the filters to better capture features like fur patterns and ear shapes that distinguish between the two animals.
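A simplified sketch of how the error reaches a CNN filter, assuming a one-dimensional input and a single three-weight filter in place of a 2D image. For illustration, the squared error is measured directly on the filter's output against hypothetical target values; in a real CNN, the gradient arriving at the filter output (`d_out` below) would come from the layers above it. Because the same filter slides across every position, each weight's gradient sums its contributions from all of those positions.

```python
def conv1d(x, w):
    """'Valid' 1D convolution as used in CNNs (technically cross-correlation):
    out[i] = sum_k w[k] * x[i + k]."""
    n_out = len(x) - len(w) + 1
    return [sum(w[k] * x[i + k] for k in range(len(w))) for i in range(n_out)]

def loss_fn(x, w, target):
    # Illustrative squared error on the feature map itself.
    out = conv1d(x, w)
    return 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))

def filter_grad(x, w, target):
    """Backward pass for the filter: dL/dw[k] = sum_i dL/dout[i] * x[i + k]."""
    out = conv1d(x, w)
    d_out = [o - t for o, t in zip(out, target)]  # dL/dout[i]
    return [sum(d_out[i] * x[i + k] for i in range(len(d_out)))
            for k in range(len(w))]

x = [1.0, 2.0, -1.0, 0.5, 3.0]   # hypothetical 1D "image"
w = [0.2, -0.4, 0.1]             # hypothetical starting filter
target = [1.0, 0.0, -1.0]        # hypothetical desired feature-map values
grad = filter_grad(x, w, target)

# One gradient-descent step on the filter weights.
lr = 0.05
w_new = [wk - lr * gk for wk, gk in zip(w, grad)]
print(f"loss before: {loss_fn(x, w, target):.4f}, "
      f"after: {loss_fn(x, w_new, target):.4f}")
```

The same principle carries over to 2D filters: the filter gradient is a correlation between the layer's input and the incoming gradient.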

CNNs have shown remarkable success, achieving up to 99% accuracy on specific image datasets, such as the CIFAR-10 dataset, thanks in large part to effective backpropagation.

This high level of accuracy is not only impressive but also critical in applications such as medical imaging, where accurate classification can lead to better diagnosis and treatment.

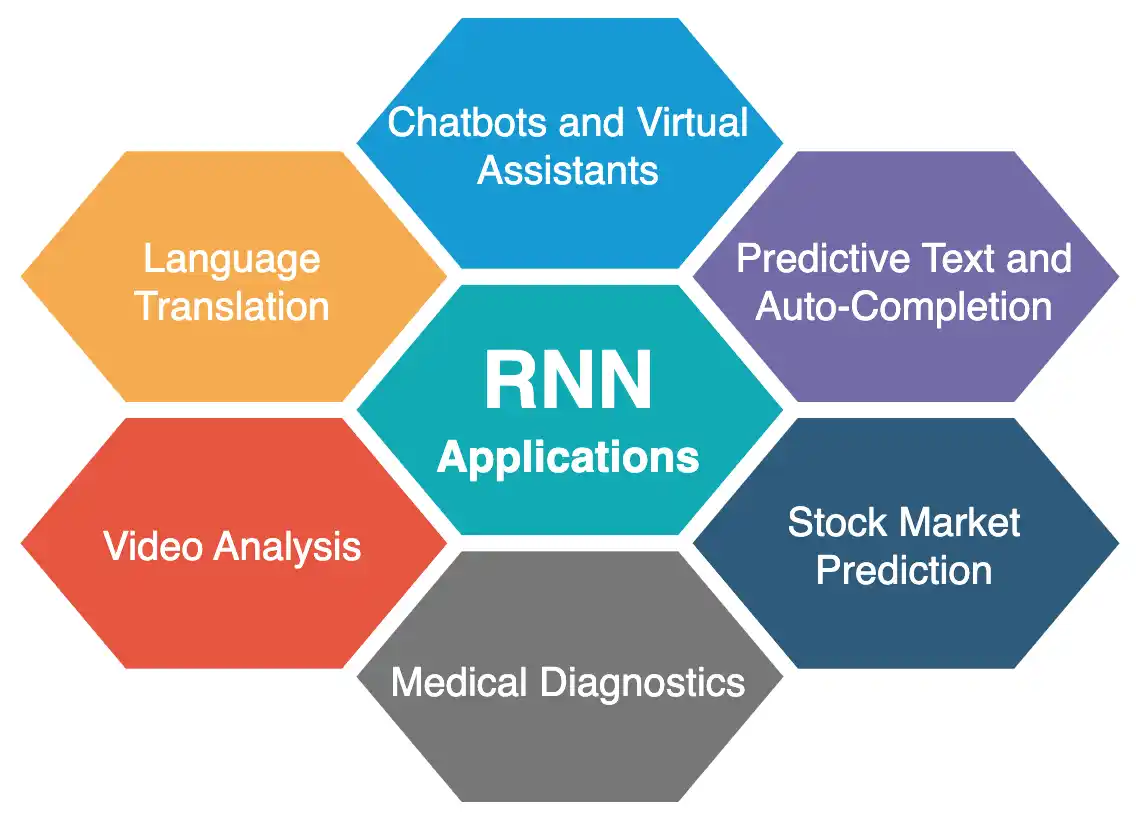

Application in RNNs

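Recurrent Neural Networks (RNNs) benefit similarly. Here, backpropagation is applied to sequences: the network is unrolled across time steps, and the error is propagated back through each step, a variant known as backpropagation through time (BPTT). When an RNN makes a mistake in predicting the next element in a sequence, it updates its weights to learn from that error.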

For example, in language modeling, if an RNN is given "The cat sat on the" and predicts "carpet" instead of "mat," backpropagation adjusts its weights, improving its ability to use context in future predictions.
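The sketch below walks through backpropagation through time on the smallest possible RNN: one hidden unit with the update h_t = tanh(w·h_{t−1} + u·x_t). The scalar weights, the input sequence, the target, and the choice to apply a squared-error loss only to the final hidden state are all illustrative assumptions. The key point is that the same weights w and u are reused at every step, so their gradients are accumulated while walking the unrolled sequence in reverse.

```python
import math

def forward(w, u, xs, h0=0.0):
    """Unroll a one-unit RNN: h_t = tanh(w * h_{t-1} + u * x_t).
    Returns the hidden states [h_0, h_1, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs

def loss(w, u, xs, y):
    # Squared error on the final hidden state only (an illustrative choice).
    return 0.5 * (forward(w, u, xs)[-1] - y) ** 2

def bptt(w, u, xs, y):
    """Backpropagation through time: visit the unrolled steps in reverse,
    adding each step's contribution to the shared weights w and u."""
    hs = forward(w, u, xs)
    dw = du = 0.0
    dh = hs[-1] - y                      # dL/dh_T
    for t in range(len(xs), 0, -1):
        dpre = dh * (1.0 - hs[t] ** 2)   # back through tanh at step t
        dw += dpre * hs[t - 1]           # w multiplies h_{t-1} at every step
        du += dpre * xs[t - 1]           # u multiplies x_t at every step
        dh = dpre * w                    # pass the gradient on to h_{t-1}
    return dw, du

w, u = 0.5, 0.8                  # hypothetical shared weights
xs, y = [1.0, -0.5, 0.25], 0.3   # hypothetical input sequence and target
dw, du = bptt(w, u, xs, y)
print(f"dL/dw = {dw:.5f}, dL/du = {du:.5f}")
```

The repeated multiplication by `w` and the tanh derivative in the backward loop is also why long sequences can suffer from shrinking gradients.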

RNNs have shown significant promise in natural language processing tasks, with some achieving over 90% accuracy in sentiment analysis, largely due to the refinements made possible through backpropagation.

This capability is particularly useful in applications like chatbots and translation services, where understanding context and sequence is essential for effective communication.

Common Challenges

Despite its effectiveness, backpropagation isn’t without challenges. One major issue is the vanishing gradient problem, where gradients become too small for effective weight updates, especially in deep networks. This can halt learning, making it difficult for the network to capture complex patterns.

To mitigate this, techniques like normalization and the use of activation functions such as ReLU (Rectified Linear Unit) have emerged.

These approaches help maintain gradient flow, allowing deeper networks to learn more effectively. For example, ReLU has a derivative of exactly 1 for positive inputs, whereas the sigmoid's derivative never exceeds 0.25, so ReLU networks are less prone to vanishing gradients and often converge more easily during training.
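The effect can be illustrated with a deliberately simplified model: a chain of one-unit layers where every weight is 1 and every pre-activation is the same value (0.5 here, an arbitrary choice). Under those assumptions, the chain rule multiplies one activation derivative per layer, so the sketch below just computes that product for increasing depth.

```python
import math

def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)           # peaks at 0.25 when z = 0

def relu_grad(z):
    return 1.0 if z > 0 else 0.0   # exactly 1 for any positive input

def backprop_factor(grad_fn, depth, z=0.5, weight=1.0):
    """Multiply the per-layer factor weight * activation'(z) `depth` times,
    mimicking how the chain rule scales the error signal as it travels back
    through a chain of one-unit layers. Using the same z and weight in every
    layer is a deliberate simplification."""
    factor = 1.0
    for _ in range(depth):
        factor *= weight * grad_fn(z)
    return factor

for depth in (1, 5, 10, 20):
    print(f"depth {depth:2d}: sigmoid {backprop_factor(sigmoid_grad, depth):.2e}, "
          f"relu {backprop_factor(relu_grad, depth):.2e}")
```

With sigmoid, the factor shrinks geometrically with depth, so early layers receive almost no gradient; with ReLU on positive inputs, it stays at 1.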

Overfitting is another common challenge. It occurs when a model fits the training data too closely, including its noise, and fails to generalize to new data. This often leads to high accuracy on training sets but poor performance on unseen data.

Techniques like dropout, regularization, and cross-validation are crucial for addressing this issue, ensuring that models trained with backpropagation can perform well in real-world scenarios.

Research indicates that using dropout can improve generalization by up to 50%, making it a popular choice in many neural network architectures.

By randomly dropping out a fraction of neurons during training, the model learns to rely on multiple pathways for making predictions, which helps prevent overfitting.
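A minimal sketch of dropout for a single layer's activations, assuming the common "inverted dropout" variant: surviving activations are scaled by 1 / (1 − p) during training so that nothing needs rescaling at inference time. The activation values, drop rate, and seed are hypothetical. The backward pass reuses the same random mask, so a dropped neuron passes back zero gradient on that step.

```python
import random

def dropout_forward(activations, p_drop, rng, training=True):
    """Inverted dropout: during training, zero each activation with
    probability p_drop and scale survivors by 1 / (1 - p_drop) so the
    expected activation is unchanged. At inference, pass values through."""
    if not training or p_drop == 0.0:
        mask = [1.0] * len(activations)
    else:
        keep = 1.0 - p_drop
        mask = [1.0 / keep if rng.random() < keep else 0.0 for _ in activations]
    return [a * m for a, m in zip(activations, mask)], mask

def dropout_backward(upstream_grad, mask):
    # Backpropagation reuses the same mask: dropped neurons pass back zero gradient.
    return [g * m for g, m in zip(upstream_grad, mask)]

rng = random.Random(0)  # fixed seed so the example is reproducible
acts = [0.5, 1.2, -0.3, 2.0, 0.8, 1.5]
out, mask = dropout_forward(acts, p_drop=0.5, rng=rng)
grads = dropout_backward([1.0] * len(acts), mask)
print("mask:", mask)
print("out: ", out)
print("grad:", grads)
```

Because a fresh mask is drawn at every training step, no single neuron can be relied on, which is what pushes the network toward multiple pathways.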

Conclusion

Backpropagation is more than just a technical term; it’s a critical process that enables neural networks to learn from mistakes.

By understanding its mechanics, including the backpropagation algorithm and its applications in CNNs and RNNs, we can appreciate its importance in AI.

As machine learning continues to evolve, backpropagation remains a cornerstone of effective learning and adaptation.

As the demand for smarter AI solutions grows, ongoing research aims to refine the backpropagation process further. Innovations in this field promise to enhance the efficiency and effectiveness of training, enabling neural networks to tackle even more complex challenges in the future.

Frequently Asked Questions (FAQs)

What is the main advantage of using backpropagation in neural networks?

The main advantage of backpropagation is its efficiency in training complex models. It computes the gradient of the loss with respect to every weight in a single backward pass, at a cost comparable to the forward pass, rather than evaluating each weight separately.

This efficiency reduces training time and makes it practical to train large networks with millions of weights.

Can you explain a simple backpropagation example?

A simple backpropagation example is a neural network predicting housing prices. When the network predicts a price, the backpropagation algorithm calculates the difference between the predicted and actual prices.

The weights are then adjusted accordingly, helping the network learn from its mistakes for future predictions.
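The housing-price example can be written out as a minimal sketch, assuming a single linear neuron (price = w × size + b), a mean-squared-error loss, and a made-up dataset whose prices were generated as 3 × size + 0.1. With only one layer, the backward pass reduces to two chain-rule derivatives, but the loop follows the same cycle of forward pass, error, backward pass, and weight update.

```python
# Hypothetical toy data: house size (in 100 m^2) and price (in $100k).
# The prices were generated as 3 * size + 0.1, so the ideal weights are known.
sizes = [0.5, 0.8, 1.0, 1.2, 1.5, 2.0]
prices = [1.6, 2.5, 3.1, 3.7, 4.6, 6.1]

w, b = 0.0, 0.0      # start from an uninformed guess
lr = 0.1             # learning rate (eta)
n = len(sizes)

for _ in range(2000):
    # Forward pass: predicted price for every house.
    preds = [w * x + b for x in sizes]
    # Difference between predicted and actual prices.
    errors = [p - y for p, y in zip(preds, prices)]
    # Backward pass: gradients of L = (1/n) * sum(error^2) with respect to w and b.
    grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, sizes))
    grad_b = 2.0 / n * sum(errors)
    # Weight update: step against the gradient.
    w -= lr * grad_w
    b -= lr * grad_b

mse = sum((w * x + b - y) ** 2 for x, y in zip(sizes, prices)) / n
print(f"w = {w:.3f}, b = {b:.3f}, mse = {mse:.2e}")
```

Running it should recover weights very close to w = 3 and b = 0.1, the values used to generate the toy data.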

How does backpropagation help with overfitting in neural networks?

Backpropagation on its own does not prevent overfitting, but it is typically combined with techniques like dropout that do.

Dropout randomly ignores certain neurons at each training step, in both the forward and backward passes, which pushes the model to generalize better. Used together with backpropagation, it helps produce a more robust model that performs well on unseen data.

What are the limitations of backpropagation in deep learning?

One limitation of backpropagation in deep learning is the vanishing gradient problem.

In very deep networks, gradients can become extremely small, making it hard for the backpropagation algorithm to update weights effectively. This issue can hinder the training of complex models like deep CNNs or RNNs.

How is backpropagation different in CNNs versus RNNs?

In CNNs, backpropagation focuses on spatial hierarchies, adjusting convolutional filters to capture features in images.

In contrast, RNN backpropagation handles sequential data, updating weights based on the context carried over from previous inputs. This distinction highlights how backpropagation adapts to the specific requirements of different network architectures.