What is Cross-Validation?

Cross-validation is a technique used in machine learning to evaluate the performance of a model. It provides a better estimate of how well the model will generalize to unseen data by splitting the data into multiple subsets.

It helps in assessing the model's ability to handle different scenarios and identify potential issues such as overfitting or underfitting.

Because the model is evaluated on several partitions of the data rather than one, the resulting estimate depends less on how any single train/test split happens to fall.

This helps in making informed decisions about model selection, hyperparameter tuning, and assessing the robustness of the chosen model.

Why is Cross-Validation Used?

Cross-validation is widely used in machine learning and statistical analysis. The main reasons are outlined below.

Model Performance Evaluation

One of the main reasons cross-validation is used is to accurately evaluate the performance of a model on unseen data, helping to choose the best model for a specific problem.

Preventing Overfitting

Cross-validation helps identify overfitting, which occurs when a model performs well on training data but poorly on new, unseen data. A large gap between training scores and validation scores across the folds is the usual warning sign.

Model Selection and Hyperparameter Tuning

Cross-validation allows us to compare different models or algorithms and fine-tune their hyperparameters under the same evaluation procedure, enabling the selection of the best-performing model for a problem.

More Reliable Estimates

By splitting data into different folds and training/testing on various subsets, cross-validation helps in obtaining more reliable estimates of a model's performance.

Data Efficiency

In situations with limited data, cross-validation can maximize the usage of available data for training and testing, increasing overall data efficiency and model reliability.

Types of Cross-Validation

There are different types of cross-validation:

K-Fold Cross-Validation

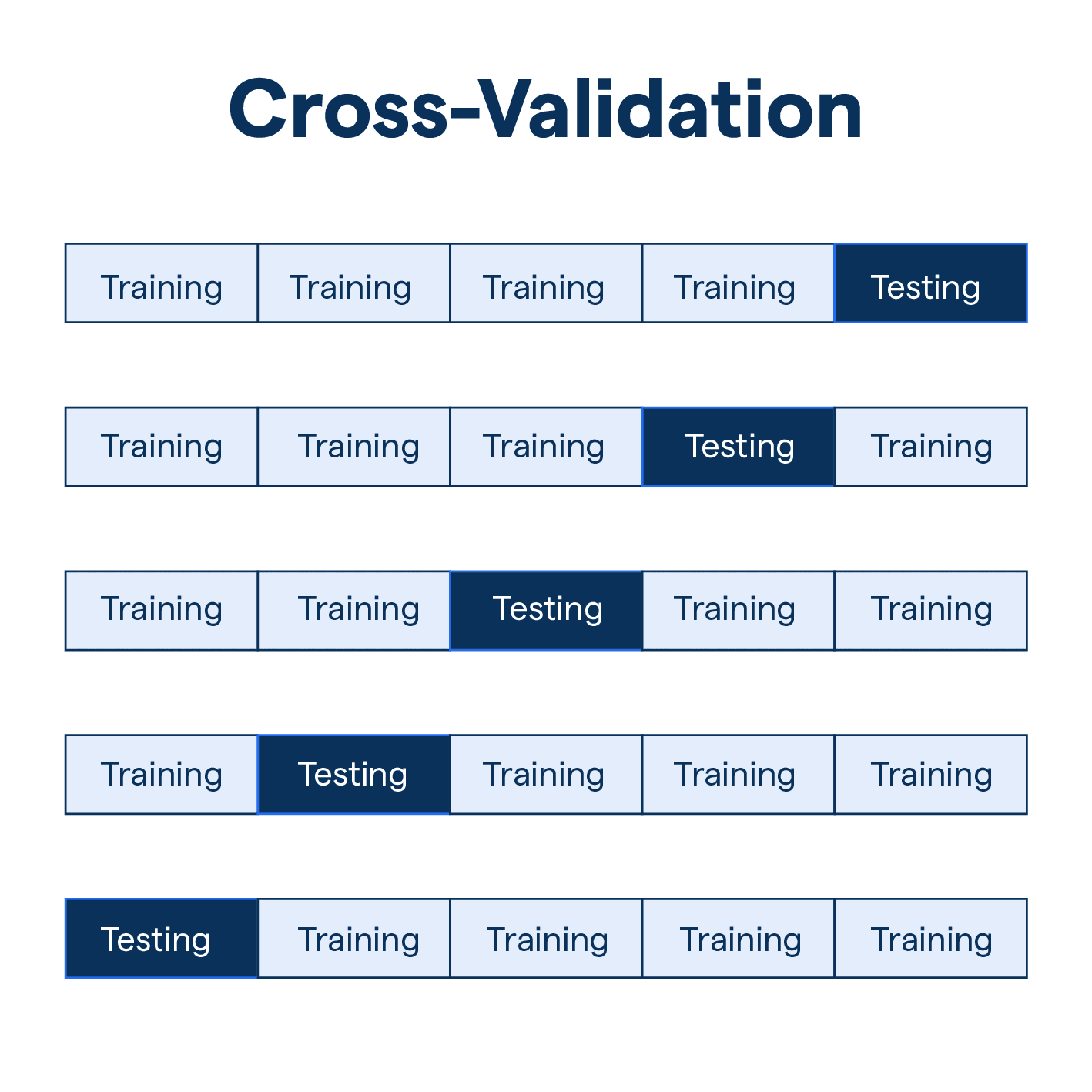

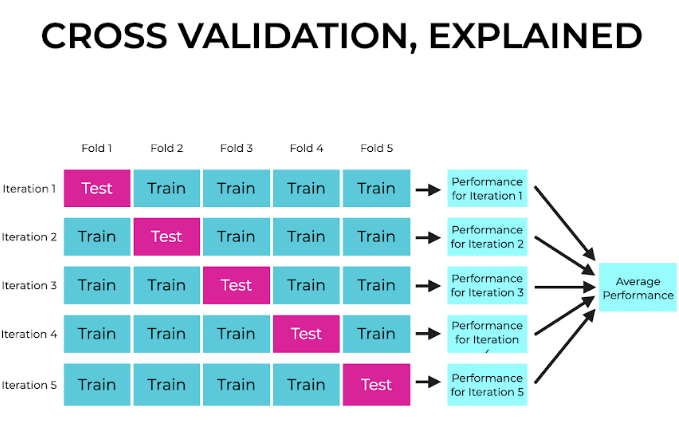

K-Fold Cross-Validation is a commonly used technique where the data is divided into 'K' folds, or partitions, of approximately equal size.

The model is trained on K-1 folds and evaluated on the remaining fold. This process is repeated K times, with each fold serving as the test set once. The performance metric is then averaged across all iterations.
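The splitting step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function name `k_fold_indices` and the choice of contiguous, unshuffled folds are assumptions made for the example.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first (n_samples % k) folds get one extra sample,
    # so fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


splits = list(k_fold_indices(n_samples=10, k=3))
for train_idx, test_idx in splits:
    print("train:", train_idx, "test:", test_idx)
```

Every index appears in exactly one test fold, which is what lets each sample serve as test data once. In practice the data is usually shuffled before splitting unless its order carries meaning.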

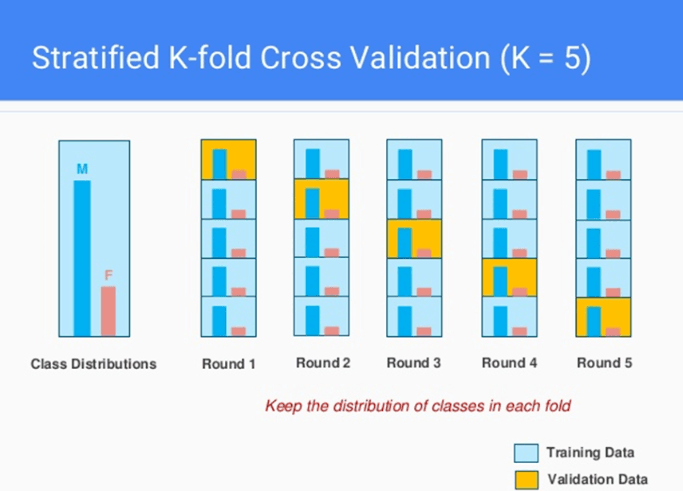

Stratified K-Fold Cross-Validation

Stratified K-Fold Cross-Validation is an extension of K-Fold Cross-Validation that ensures the distribution of class labels is preserved in each fold.

This technique is especially useful for imbalanced datasets, where the number of samples in each class differs significantly.
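One simple way to preserve class proportions is to deal the samples of each class across the folds in turn. The sketch below assumes this round-robin strategy and a hypothetical helper name, `stratified_k_fold_indices`; library implementations may assign samples differently.

```python
from collections import defaultdict


def stratified_k_fold_indices(labels, k):
    """Yield (train_idx, test_idx) pairs whose test folds roughly keep class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    # Deal each class's samples round-robin across the k folds.
    for indices in by_class.values():
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)

    for fold in folds:
        held_out = set(fold)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        yield train_idx, sorted(fold)


labels = [0] * 8 + [1] * 4  # hypothetical imbalanced labels: 8 negatives, 4 positives
strat_splits = list(stratified_k_fold_indices(labels, k=4))
for train_idx, test_idx in strat_splits:
    print("test labels:", [labels[i] for i in test_idx])
```

With these labels, each test fold ends up with the same 2:1 ratio of negatives to positives as the full dataset.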

Leave-One-Out Cross-Validation (LOOCV)

Leave-One-Out Cross-Validation is a special case of K-Fold Cross-Validation where K is equal to the number of samples in the dataset.

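In each iteration, one sample is used for testing, and the rest are used for training. Because each model is trained on nearly all of the data, LOOCV gives an almost unbiased estimate of model performance, but the estimate can have high variance, and fitting one model per sample is computationally expensive for large datasets.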
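To make the procedure concrete, the sketch below runs LOOCV with a deliberately trivial "model" that predicts the mean of the training targets. The function name `leave_one_out_errors` and the sample values are assumptions chosen for illustration.

```python
def leave_one_out_errors(values):
    """Predict each sample from the mean of all other samples; return squared errors."""
    errors = []
    for i, held_out in enumerate(values):
        training = values[:i] + values[i + 1:]
        prediction = sum(training) / len(training)  # "model": mean of the training samples
        errors.append((held_out - prediction) ** 2)
    return errors


values = [2.0, 4.0, 6.0, 8.0]  # hypothetical target values
errors = leave_one_out_errors(values)
loocv_mse = sum(errors) / len(errors)
print("iterations:", len(errors), "LOOCV MSE:", loocv_mse)
```

The number of model fits equals the number of samples, which is where the computational cost comes from.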

Shuffle-Split Cross-Validation

Shuffle-Split Cross-Validation involves randomly shuffling the dataset and splitting it into training and test sets multiple times.

This technique allows for more control over the training and testing proportions and can be useful for large datasets.
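A minimal sketch of the idea follows, assuming a hypothetical helper `shuffle_split_indices` that takes the number of splits and the test fraction as parameters.

```python
import random


def shuffle_split_indices(n_samples, n_splits, test_fraction, seed=0):
    """Yield n_splits independent random (train_idx, test_idx) partitions."""
    rng = random.Random(seed)  # fixed seed so the splits are reproducible
    n_test = max(1, round(n_samples * test_fraction))
    for _ in range(n_splits):
        indices = list(range(n_samples))
        rng.shuffle(indices)
        yield sorted(indices[n_test:]), sorted(indices[:n_test])


ss_splits = list(shuffle_split_indices(n_samples=10, n_splits=4, test_fraction=0.3))
for train_idx, test_idx in ss_splits:
    print("train:", train_idx, "test:", test_idx)
```

Unlike k-fold, the test sets are drawn independently, so they may overlap between splits and some samples may never be used for testing.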

Time Series Cross-Validation

Time Series Cross-Validation is specifically designed for time series data. It ensures that the model is evaluated on data that occurs after the training data to simulate real-world scenarios.
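One common way to respect temporal order is an expanding training window, where each test block is preceded by all earlier observations. The sketch below assumes that scheme; the name `time_series_splits` and its parameters are hypothetical.

```python
def time_series_splits(n_samples, n_splits, test_size):
    """Yield (train_idx, test_idx) pairs where every test index follows every training index."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start < 1:
        raise ValueError("not enough samples for the requested splits")
    for split in range(n_splits):
        test_start = first_test_start + split * test_size
        train_idx = list(range(0, test_start))  # all observations before the test window
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx


ts_splits = list(time_series_splits(n_samples=10, n_splits=3, test_size=2))
for train_idx, test_idx in ts_splits:
    print("train:", train_idx, "test:", test_idx)
```

The data is never shuffled here, since shuffling would let the model train on observations that occur after the ones it is tested on.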

How Does Cross-Validation Work?

Ever wondered how cross-validation works in machine learning model evaluation? Let's walk through its intriguing process:

Division of the Dataset: The initial step in cross-validation involves dividing your dataset into a certain number of 'folds', or subsets.

The most common method is k-fold cross-validation, where 'k' is typically set to 5 or 10.

Training and Validation: Each fold takes turns serving as the validation set, while the remaining folds make up the training set.

The model is trained on the training set and then validated on the validation set, producing a measure of model performance.

Repeating the Process: This process of training and validation is repeated k times, each time with a different fold serving as the validation set.

This repetition ensures that every sample is used for validation exactly once and for training k-1 times, providing a comprehensive assessment of the model's performance.

Aggregating Results: After the model has been trained and validated k times, the results from the k iterations are aggregated.

This typically involves calculating the average of the performance measure across all k iterations to give a single, averaged measure of model performance.
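The four steps above (divide, train and validate, repeat, aggregate) can be put together as follows. This is a sketch under stated assumptions: the model is a simple least-squares line, the folds are interleaved by index, and the names `fit_line` and `cross_validate` as well as the data are invented for the example.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return slope, y_bar - slope * x_bar


def cross_validate(xs, ys, k):
    """Return the validation mean squared error of the line model for each of k folds."""
    n = len(xs)
    fold_scores = []
    for fold in range(k):
        val_idx = [i for i in range(n) if i % k == fold]    # this fold validates
        train_idx = [i for i in range(n) if i % k != fold]  # the other folds train
        slope, intercept = fit_line([xs[i] for i in train_idx],
                                    [ys[i] for i in train_idx])
        mse = mean((ys[i] - (slope * xs[i] + intercept)) ** 2 for i in val_idx)
        fold_scores.append(mse)
    return fold_scores


xs = [float(i) for i in range(20)]
# Hypothetical data: a line with alternating +/-0.5 noise.
ys = [2.0 * x + 1.0 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]

fold_scores = cross_validate(xs, ys, k=5)
cv_score = mean(fold_scores)  # aggregate: average over the k iterations
print("per-fold MSE:", fold_scores)
print("cross-validated MSE:", cv_score)
```

Reporting the spread of the per-fold scores alongside the average gives a sense of how stable the estimate is.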

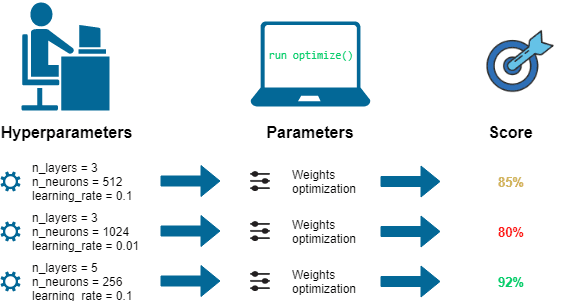

Fine-Tuning the Model: The results of cross-validation can be used to fine-tune a machine learning model. By comparing the model's performance across different configurations of hyperparameters during the cross-validation process, the model can be optimized for its task.
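As an illustration of comparing hyperparameter configurations, the sketch below scores several values of one hyperparameter with the same folds and keeps the value with the lowest average validation error. The model (a one-dimensional nearest-neighbour regressor), the candidate values, the data, and the names `knn_predict` and `cv_mse` are all assumptions made for the example.

```python
from statistics import mean


def knn_predict(train_x, train_y, query, n_neighbors):
    """Predict by averaging the targets of the n_neighbors closest training points."""
    nearest = sorted(range(len(train_x)),
                     key=lambda i: abs(train_x[i] - query))[:n_neighbors]
    return mean(train_y[i] for i in nearest)


def cv_mse(xs, ys, n_neighbors, k=5):
    """Average validation MSE over k interleaved folds for one hyperparameter value."""
    n = len(xs)
    fold_scores = []
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        val = [i for i in range(n) if i % k == fold]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        fold_scores.append(
            mean((ys[i] - knn_predict(tx, ty, xs[i], n_neighbors)) ** 2 for i in val)
        )
    return mean(fold_scores)


xs = [i / 2 for i in range(30)]
# Hypothetical data: a smooth curve with a repeating noise pattern.
ys = [x * x / 10 + (0.4 if i % 3 == 0 else -0.2) for i, x in enumerate(xs)]

candidates = [1, 3, 5, 9]  # hypothetical values of the n_neighbors hyperparameter
scores = {n: cv_mse(xs, ys, n) for n in candidates}
best_n_neighbors = min(scores, key=scores.get)
print(scores)
print("selected n_neighbors:", best_n_neighbors)
```

Because the winning score was itself used to make the selection, it tends to be optimistic; a separate held-out test set or nested cross-validation gives a fairer final estimate.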

Where Do We Implement Cross-Validation?

Cross-validation is commonly applied in the following settings:

Predictive Model Validation

Cross-validation is often implemented in predictive model validation. By splitting the dataset into training and validation sets multiple times, you can test how the model performs on unseen data, which can help you avoid overfitting.

Hyperparameter Tuning

When tuning a model's hyperparameters, cross-validation can be used to estimate their effectiveness. This ensures the parameters selected do not merely perform well on one specific subset of the data.

Comparing Different Models

To compare the predictive abilities of different modeling approaches under the same conditions, cross-validation can be implemented.

It measures the models’ performance on various data subsets, fostering a more reliable comparison.

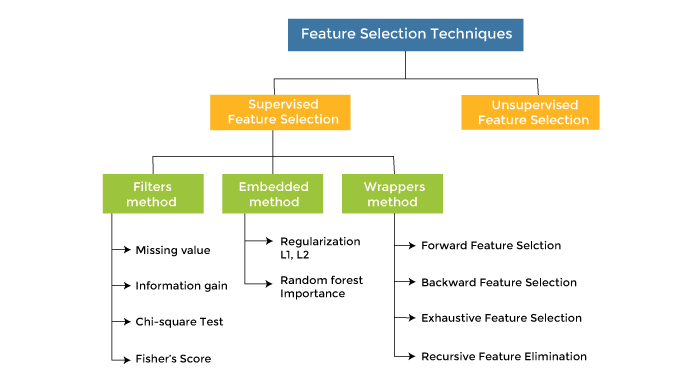

Feature Selection

In determining which features contribute most to the predictive capability of a model, one can use cross-validation.

By analyzing how the model performs without certain features, you can identify the important ones.

Assessment of Model Robustness

Cross-validation is used to test model robustness, ensuring that the model has not just memorized the training data, but can generalize well to new, unseen data.

This is vital for establishing confidence in the model's ability to make accurate predictions when deployed.

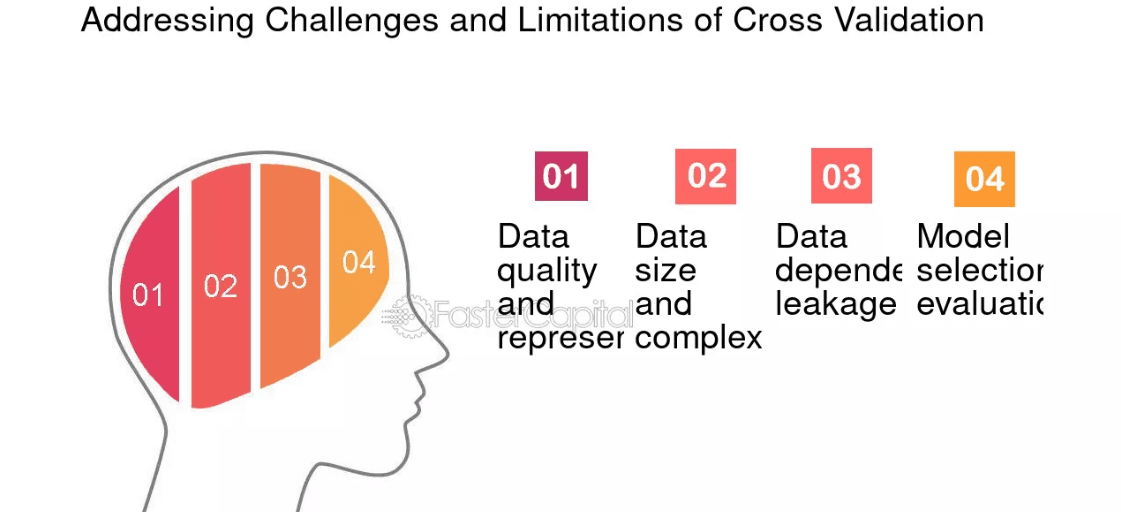

Limitations and Challenges of Cross-Validation

Cross-validation is a widely used technique for model evaluation and selection. However, it’s not without its limitations and challenges. Let's dive into some of these:

Computational Complexity

Performing cross-validation can be computationally expensive, particularly when dealing with large datasets or complex models.

Repeatedly training and testing the model over multiple folds increases the time and resource requirements, which can be a stumbling block in time-sensitive projects.

Choice of k-folds

Selecting an appropriate number of folds (k) can be challenging, as a balance needs to be struck between accuracy and efficiency.

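A larger k means each model is trained on more of the data, which tends to reduce the pessimistic bias of the estimate, but it requires more training runs and can increase the variance of the estimate. Conversely, a smaller k is cheaper to run but trains each model on less data, so the estimate may understate how well a model trained on the full dataset would perform. Values of 5 or 10 are common compromises.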

Variation in Results

Cross-validation results can vary depending on how the data is divided into folds. When partitions lead to an uneven representation of classes or patterns, model performance may suffer, creating misleading or inconsistent results.

Strategies like stratified k-fold cross-validation can help mitigate this issue but may not entirely eliminate it.

Data Leakage

Cross-validation can be susceptible to data leakage if careful attention is not paid to data preprocessing steps.

Data leakage occurs when information from the testing set is incorporated into the training set, leading to overly optimistic performance estimates. To prevent leakage, preprocessing steps such as scaling, imputation, or feature selection should be fitted on the training portion of each split only and then applied unchanged to the corresponding test portion.
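The sketch below shows leakage-free standardization inside a cross-validation loop. The helper names `fit_scaler`, `scale`, and `scaled_folds`, along with the feature values, are hypothetical and chosen only to illustrate the pattern.

```python
from statistics import mean, pstdev


def fit_scaler(values):
    """Learn standardization parameters (mean, standard deviation) from the given values only."""
    return mean(values), pstdev(values) or 1.0  # fall back to 1.0 for a constant feature


def scale(values, params):
    """Apply previously learned standardization parameters."""
    center, spread = params
    return [(v - center) / spread for v in values]


def scaled_folds(feature, k):
    """Return (params, train_scaled, val_scaled) for each of k interleaved folds."""
    results = []
    for fold in range(k):
        train = [v for i, v in enumerate(feature) if i % k != fold]
        val = [v for i, v in enumerate(feature) if i % k == fold]
        params = fit_scaler(train)  # statistics come from the training fold only
        # The validation fold is transformed with the training statistics, never fitted on.
        results.append((params, scale(train, params), scale(val, params)))
    return results


feature = [1.0, 2.0, 3.0, 4.0, 5.0, 100.0]  # hypothetical feature with one extreme value
folds = scaled_folds(feature, k=3)
for params, train_scaled, val_scaled in folds:
    print("train mean used for scaling:", params[0])
```

In the fold where the extreme value is held out, the scaler never sees it. Fitting the scaler once on the full dataset before splitting would let that value influence the statistics applied to every training fold.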

Handling Imbalanced Data

In the presence of imbalanced class distributions, cross-validation can produce biased results. The minority class samples might not be well-represented in each fold, making it difficult for the model to learn patterns associated with that class.

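To address this challenge, techniques like oversampling, undersampling, and cost-sensitive learning can be employed when performing cross-validation. Any resampling should be applied to the training folds only, so that each validation fold keeps the original class distribution and contains no duplicated samples.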
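A minimal sketch of random oversampling combined with cross-validation is shown below. It assumes binary labels and a hypothetical helper, `oversample_minority`; only the training indices are resampled, and the validation fold is left untouched.

```python
import random


def oversample_minority(indices, labels, rng):
    """Duplicate random minority-class samples until both classes are the same size."""
    by_class = {0: [], 1: []}
    for i in indices:
        by_class[labels[i]].append(i)
    minority, majority = sorted(by_class.values(), key=len)
    if not minority:
        return list(indices)  # nothing to duplicate
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return list(indices) + extra


labels = [0] * 12 + [1] * 3  # hypothetical binary labels: 12 negatives, 3 positives
rng = random.Random(0)       # fixed seed for reproducibility
k = 3

resampled_folds = []
for fold in range(k):
    val_idx = [i for i in range(len(labels)) if i % k == fold]
    train_idx = [i for i in range(len(labels)) if i % k != fold]
    balanced_train = oversample_minority(train_idx, labels, rng)  # training fold only
    resampled_folds.append((balanced_train, val_idx))

for balanced_train, val_idx in resampled_folds:
    positives = sum(labels[i] for i in balanced_train)
    print("train size:", len(balanced_train), "positives:", positives, "val:", val_idx)
```

Oversampling before splitting would place copies of the same sample on both sides of a split, which is a form of data leakage.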

Frequently Asked Questions (FAQs)

What is cross-validation and why is it important?

Cross-validation is a technique to evaluate machine learning models. It splits data into subsets for more reliable evaluation and helps in selecting the best model and avoiding overfitting.

What are the different types of cross-validation techniques?

There are several types, including k-fold, stratified k-fold, leave-one-out, shuffle-split, and time series cross-validation. Each has its own use case and benefits.

How does cross-validation work?

It involves splitting data into training and test sets, training the model on the training set, and evaluating its performance on the test set. The process is repeated for each fold or partition.

What are the main advantages of using cross-validation?

Cross-validation provides a better estimate of model performance, allows for model comparison, enables hyperparameter tuning, and helps identify overfitting or underfitting issues.

What are some limitations of cross-validation?

Cross-validation scores can be optimistic when the same folds are used both to tune a model and to report its final performance. Results may also be misleading on imbalanced datasets, and the procedure can be computationally expensive, especially with leave-one-out cross-validation. Nested cross-validation, which tunes hyperparameters in an inner loop and evaluates in an outer loop, can help mitigate the first limitation.