What is Fine-Tuning?

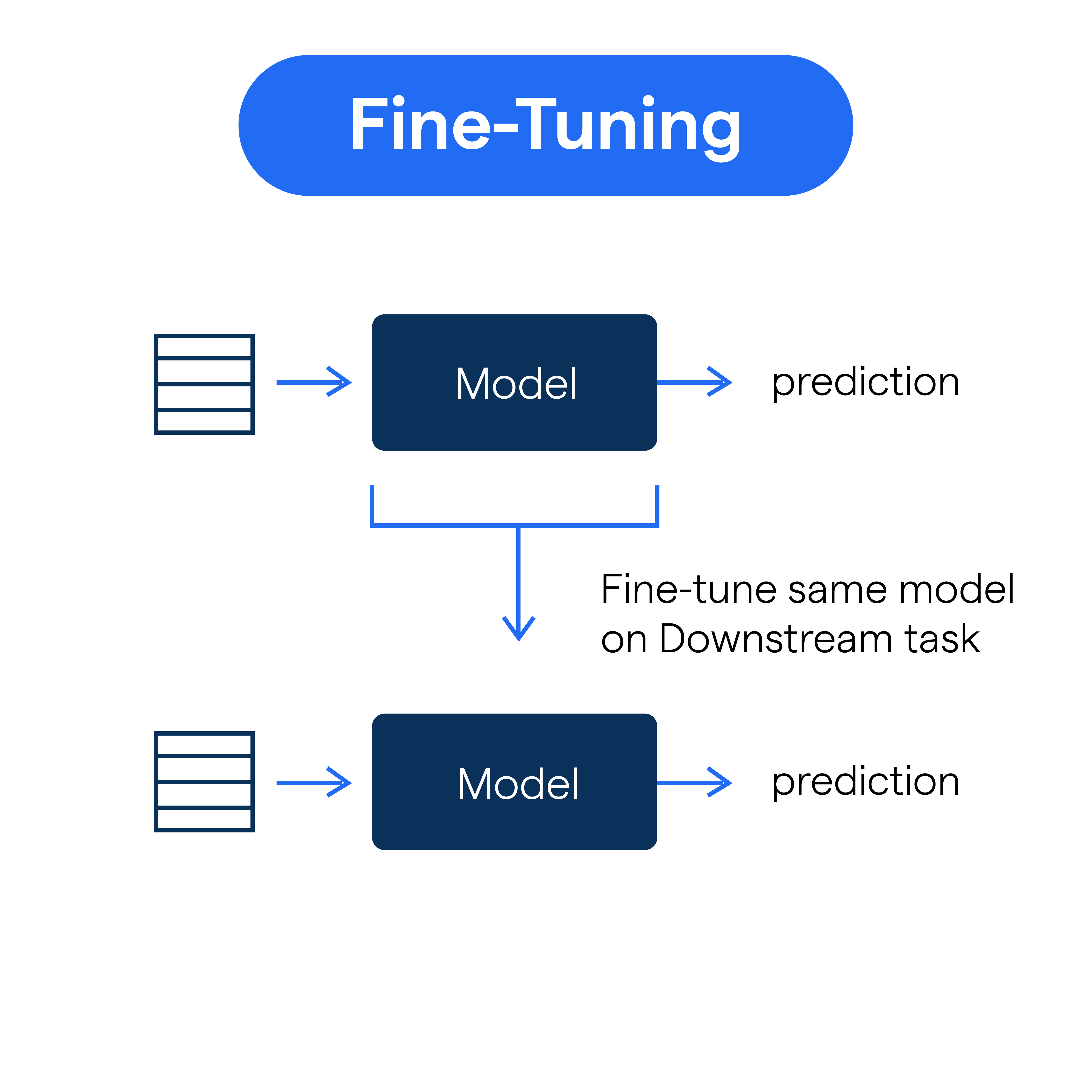

Fine-tuning is the process of adapting an existing machine learning model to a specific task so that it handles that task more precisely, hence "finely tuned."

It involves adjusting certain parameters or training aspects to enhance performance on a particular problem rather than building a new model from scratch.

How Does Tuning In Work?

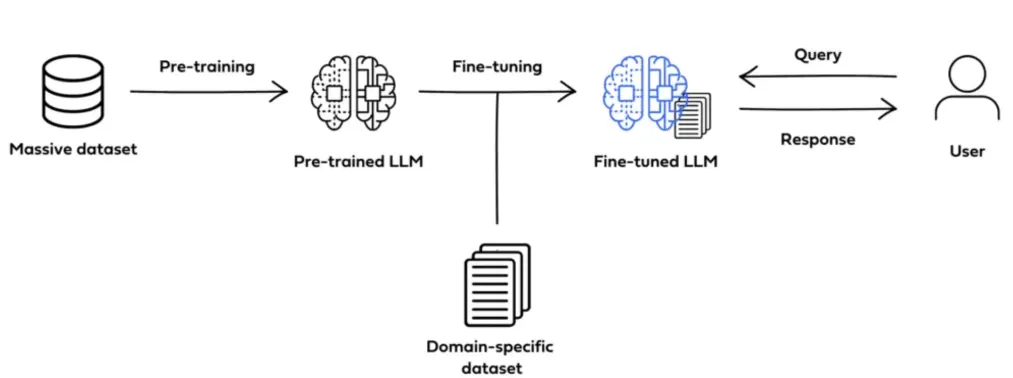

To tune a model, you start with a pre-trained model and continue training it on new, task-specific data.

This approach allows the model to become more attuned to the nuances of the new data while retaining its core abilities.
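As a rough illustration of the idea, the sketch below "pre-trains" a tiny linear model on general data and then continues training it on a handful of task-specific examples. The datasets, learning rates, and function names are all assumptions made up for this example; real fine-tuning works on far larger models, but the principle of continuing training from existing weights is the same.

```python
def train(weights, data, lr, epochs):
    """Run plain gradient descent on mean squared error for y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(weights, data):
    """Mean squared error of the model on a dataset."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# "Pre-training": a larger, general dataset that follows y = 2x.
general_data = [(k / 10, 2 * k / 10) for k in range(-20, 21)]
pretrained = train((0.0, 0.0), general_data, lr=0.1, epochs=200)

# "Fine-tuning": a small task-specific dataset that follows y = 2x + 1.
# Training starts from the pre-trained weights, not from zero.
task_data = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0), (1.5, 4.0)]
fine_tuned = train(pretrained, task_data, lr=0.05, epochs=300)

loss_before = mse(pretrained, task_data)
loss_after = mse(fine_tuned, task_data)
print(f"task loss before: {loss_before:.4f}, after: {loss_after:.6f}")
```

The pre-trained slope is already correct for the new task, so fine-tuning only has to learn the offset, which is why a few examples are enough here.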

Word Tuning and Precision

Word tuning is an example of fine-tuning applied to language models. Exposing the model to specific vocabulary or phrasing during fine-tuning lets it generate more contextually appropriate and refined responses.

Components of Fine-Tuning

When fine-tuning an LLM, the retraining targets several components or parameters of the model, such as weights and biases, to better align its response patterns with the specific task or dataset at hand. It is a bit like tuning an instrument one last time before the curtain goes up.
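Not every parameter has to change. One common variant, shown here as an assumption and not as the only approach, is to freeze most weights and biases and update only a chosen subset. The parameter names and gradient values below are hypothetical.

```python
def sgd_step(params, grads, lr, trainable):
    """Return updated parameters, changing only the names listed in `trainable`."""
    return {
        name: value - lr * grads[name] if name in trainable else value
        for name, value in params.items()
    }


# Hypothetical weights and biases of a two-part model.
params = {"layer1.weight": 0.8, "layer1.bias": 0.1,
          "head.weight": -0.3, "head.bias": 0.0}
# Hypothetical gradients computed on task-specific data.
grads = {"layer1.weight": 0.5, "layer1.bias": -0.2,
         "head.weight": 1.0, "head.bias": 0.4}

# Only the "head" parameters are tuned; "layer1" stays frozen.
updated = sgd_step(params, grads, lr=0.1,
                   trainable={"head.weight", "head.bias"})
print(updated)
```

Freezing the earlier parameters keeps the general knowledge intact and reduces the amount of computation per update.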

Why is Fine-Tuning Necessary?

Fine-tuning, including word tuning and other task-specific fine tunings, is necessary for precision, efficiency, and flexibility in machine learning applications. Here is why it matters.

Tuning In to Specific Needs

Fine-tuning in machine learning allows models to be tailored precisely to specific tasks.

By learning the patterns unique to a task's data, a model becomes more relevant and accurate for that particular application.

This process is crucial for aligning a model's capabilities with specialized requirements.

Improving Accuracy with Word Tuning

In language processing, word tuning focuses on refining how models understand and generate language.

By applying fine-tuning to word relationships, models become finely tuned to specific contexts.

This word tuning improves the model's ability to deliver accurate and context-aware outputs, making it essential for nuanced language applications.

Efficiency Through Fine-Tuning

A finely tuned model can often reach the accuracy a task needs with fewer resources, for example when a smaller fine-tuned model replaces a larger general-purpose one.

This cuts unnecessary processing, which is especially valuable for real-time applications.

The result is faster, more accurate responses, a vital trait in high-stakes fields.

Versatility with Multiple Fine Tunings

Fine-tuning enables a single model to support diverse applications by creating multiple fine tunings.

Each fine-tuned variant adapts the same base model to a different industry, such as healthcare or finance, which demonstrates how adaptable finely tuned models are.
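One way to picture several fine tunings sharing a single model is to store the base parameters once and keep only each task's adjustments next to them. The sketch below assumes that layout; the weight names, task names, and numbers are invented for illustration.

```python
# Shared parameters of the base model (hypothetical values).
BASE_WEIGHTS = {"w1": 0.5, "w2": -1.2, "w3": 0.9}

# Hypothetical per-task adjustments learned in separate fine-tuning runs.
TASK_DELTAS = {
    "healthcare": {"w2": 0.3},
    "finance": {"w1": -0.1, "w3": 0.2},
}


def weights_for(task):
    """Combine the shared base weights with one task's stored adjustments."""
    weights = dict(BASE_WEIGHTS)  # copy so the base is never mutated
    for name, delta in TASK_DELTAS.get(task, {}).items():
        weights[name] += delta
    return weights


healthcare_weights = weights_for("healthcare")
finance_weights = weights_for("finance")
unknown_weights = weights_for("retail")  # no adjustments: falls back to the base
```

Keeping the adjustments separate means adding a new industry does not disturb the variants that already exist.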

Where is Fine-Tuning Used?

Fine-tuning isn't confined to one niche—it has a wide and varied fan base. Let's see where it finds its relevance.

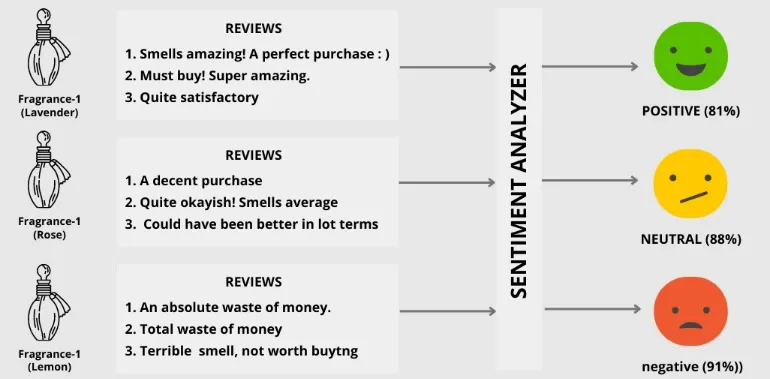

Sentiment Analysis

In sentiment analysis, fine-tuning helps LLMs break down the text and understand the sentiment behind it more accurately.

Much like catching subtle cues in a conversation, it helps the model interpret the underlying tone.

Text Classification

Fine-tuning is a great ally in adapting LLMs to accurately classify specific types of documents or content. It's just like having a librarian who knows exactly where to file each book!

Conversational AI

Fine-tuning enables conversational AI models to operate efficiently across a range of specialized tasks.

By fine-tuning on relevant conversations, developers can improve a model's accuracy, reliability, and relevance, making it highly responsive and finely tuned for targeted applications.

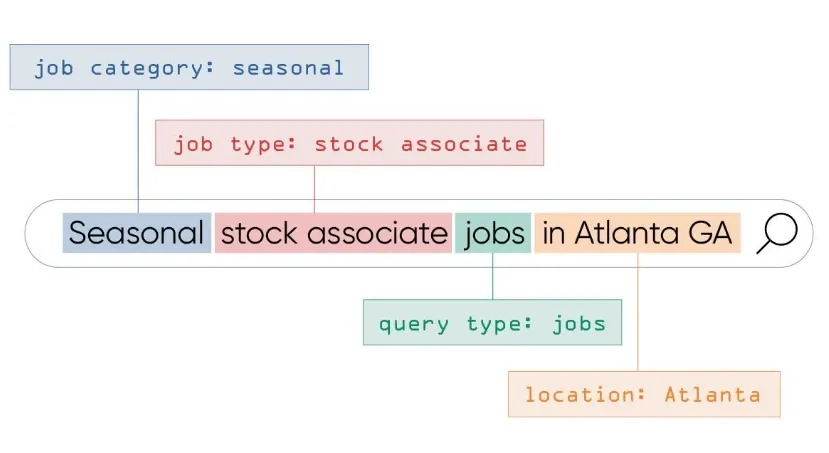

Named Entity Recognition

In tasks like named entity recognition, fine-tuning speeds up and improves the process by teaching the model the specifics of the dataset, such as the names and terms that appear in it.

Word Tuning in NLP

In NLP, word tuning is a form of fine-tuning that refines a model's understanding of context, language, and meaning.

This fine-tuning process allows a model to achieve better results in applications like translation, sentiment analysis, and text summarization, where finely tuned word associations are crucial for accuracy.

Fine-Tuning for Personalized Applications

Fine-tuning is commonly used to create personalized or industry-specific applications.

For instance, healthcare, finance, and customer service AI systems are often fine-tuned on industry-specific data to ensure they meet the needs of that field.

How Does Fine-Tuning Work?

Here's how fine-tuning plays out in practice, using a chatbot as the example:

Initialization

Fine-tuning is a crucial step in customizing chatbots like BotPenguin. It’s the process of refining a pre-existing model to suit specific needs.

Fine-tuning involves adjusting the chatbot’s responses, tone, and functionality for finely tuned communication with users. This helps create a bot that’s more responsive to user queries and better aligned with the intended brand experience.

The Process of “Tuning In”

In BotPenguin, tuning in means setting up the chatbot’s parameters to understand user inputs with higher accuracy.

Fine-tuning focuses on interpreting different forms of user language, which we can call word tuning. This ensures that even complex phrases or unique vocabulary are understood by the bot.

Achieving a Finely Tuned Chatbot

By fine-tuning the chatbot’s responses, developers can make sure it is finely tuned to answer precisely, improving customer satisfaction.

Fine-tunings allow BotPenguin to address user inquiries effectively by focusing on conversational nuances.

Benefits of Fine-Tuning with BotPenguin

A fine-tuned BotPenguin bot adapts better to dynamic customer needs. Fine-tune your bot to save time, increase engagement, and elevate user experience, making it a powerful asset in customer interactions.

Best Practices of Fine-Tuning

Fine-tuning is a process of adapting pre-trained models to specific tasks and optimizing their performance by making targeted adjustments.

Here are best practices for achieving finely tuned, high-performing models.

Start with Pre-Trained Models

When adapting a model to your specific needs, beginning with a well-chosen pre-trained model is critical.

Pre-trained models provide a foundation, as they have already undergone broad training on general datasets, allowing fine-tuning to focus on specific nuances.

Define Clear Objectives

A finely tuned model must align with specific objectives. Defining goals in advance—whether for accuracy, response time, or relevance—is essential for effective word tuning and other adjustments. With clear objectives, fine-tunings become more structured and measurable.

Use Quality Data

Fine-tuning relies heavily on the quality of data. By using accurate, representative data, fine-tuning can produce more robust, reliable models.

The goal is to have a finely tuned model that accurately represents real-world scenarios.
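A basic data-quality pass might look like the sketch below, which drops blank or unlabeled rows and removes duplicates that differ only in case or spacing. The function name and the sample rows are assumptions for this example; real pipelines usually add checks for label balance and coverage as well.

```python
def clean_examples(examples):
    """Drop blank or unlabeled rows and duplicates (ignoring case and extra spaces)."""
    seen = set()
    cleaned = []
    for text, label in examples:
        normalized = " ".join(text.lower().split())
        if not normalized or label is None:
            continue  # nothing to learn from an empty or unlabeled row
        if normalized in seen:
            continue  # repeated text would be over-weighted during training
        seen.add(normalized)
        cleaned.append((normalized, label))
    return cleaned


# Hypothetical raw support-ticket data.
raw = [
    ("Refund my order", "billing"),
    ("refund  my ORDER ", "billing"),
    ("", "other"),
    ("Where is my parcel?", None),
    ("Reset password", "account"),
]
cleaned = clean_examples(raw)
print(cleaned)
```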

Avoid Overfitting

Overfitting is a common risk during fine-tuning. To keep the model from memorizing its training set, use techniques like cross-validation to detect overfitting and dropout layers to reduce it, so the model stays well-tuned without becoming over-specific.
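Cross-validation can be sketched as splitting the fine-tuning data into k folds and holding out a different fold for validation on each run. The helper below is a minimal, hypothetical version that only produces the index splits; the training and scoring on each split are left out.

```python
def k_fold_splits(n_examples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_examples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_examples // k + (1 if i < n_examples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


splits = list(k_fold_splits(10, 3))
for train_idx, val_idx in splits:
    print("train:", train_idx, "validate:", val_idx)
```

If the score on the held-out folds is much worse than on the training folds, the model is likely overfitting.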

Iterate and Evaluate

Fine-tunings should be continually evaluated and adjusted. Fine-tune the model progressively, testing each version to measure improvements, and refining further as needed to achieve the best results.
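The evaluate-and-compare loop can be sketched as scoring every version on the same held-out examples and keeping the best one. The three "versions" below are hypothetical rule-based stand-ins for successive fine-tuned models, and the held-out examples are invented.

```python
def accuracy(predict, examples):
    """Fraction of held-out examples the model labels correctly."""
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


# Hypothetical held-out data that no version was trained on.
held_out = [
    ("great service", "positive"),
    ("terrible delay", "negative"),
    ("really great", "positive"),
    ("not great", "negative"),
]


# Stand-ins for successive fine-tuned versions of a model.
def version_1(text):
    return "positive"


def version_2(text):
    return "positive" if "great" in text else "negative"


def version_3(text):
    if "not" in text or "great" not in text:
        return "negative"
    return "positive"


versions = {"v1": version_1, "v2": version_2, "v3": version_3}
scores = {name: accuracy(model, held_out) for name, model in versions.items()}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

Using one fixed evaluation set for every version keeps the comparison fair from iteration to iteration.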

Pitfalls of Fine-Tuning

In machine learning, while fine-tuning is often essential for optimizing model performance, it also presents several pitfalls that can undermine its effectiveness.

Risk of Overfitting During Fine-Tuning

When fine-tuning a model, there’s a significant risk of overfitting to the specific training data. This occurs when a model becomes too finely tuned to the training set, capturing details that don't generalize well.

Overfitting undermines the model's performance on new, unseen data, making the finely tuned model unreliable in broader applications.

Loss of Generalization with Fine Tunings

Fine-tuning aims to improve performance, but excessive fine-tuning can cause the model to lose its generalization capability.

A finely tuned model may perform well in one domain but struggle to adapt when applied outside that context. Balancing the fine-tuning process is essential to retain adaptability across various datasets.
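A simple guard against this is to score the model on several domains before and after fine-tuning and flag any domain that got noticeably worse. The sketch below assumes the scores are already available; the domain names, numbers, and tolerance are hypothetical.

```python
def forgetting_report(before, after, tolerance=0.02):
    """Return the domains whose score dropped by more than `tolerance`."""
    return {
        domain: round(before[domain] - after[domain], 4)
        for domain in before
        if domain in after and before[domain] - after[domain] > tolerance
    }


# Hypothetical accuracy per domain, before and after fine-tuning on legal text.
before = {"general_qa": 0.81, "legal": 0.55, "medical": 0.60}
after = {"general_qa": 0.72, "legal": 0.88, "medical": 0.59}

report = forgetting_report(before, after)
print(report)
```

Here the target domain improved, but the report shows that general question answering paid for it, which is the trade-off to keep in balance.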

Increased Resource Demands in Fine-Tuning

Fine-tuning often requires extensive computational resources, especially when multiple fine tunings are applied.

Each additional round of fine-tuning adds time and cost, which can be restrictive for projects with limited resources. Efficient fine-tuning is crucial to avoid long processing times and high costs.

Model Drift Due to Continuous Word Tuning

Repeated word tuning can lead to model drift, where the model diverges from its original purpose.

Over-fine-tuning can introduce inconsistent patterns, compromising the model's accuracy and reliability over time.

Maintenance Challenges in Models

A fine-tuned model often demands ongoing maintenance to ensure consistent performance. Each fine-tuned version requires monitoring, adding complexity and cost to the model's lifecycle.

Frequently Asked Questions (FAQs)

What is the difference between fine-tuning and training a model from scratch?

Fine-tuning modifies a pre-trained model for a specific task, while training from scratch starts from randomly initialized weights.

Fine-tuning leverages knowledge the model already has, so it typically needs far less data and compute, while training from scratch must learn everything from the training data alone.

What if I don't have access to a pre-trained model?

If a pre-trained model is not available, training from scratch might be necessary. Where one is available, however, fine-tuning lets you benefit from the knowledge it gained by training on massive and diverse datasets.

Can I fine-tune a model for multiple tasks simultaneously?

In most cases, it's better to fine-tune a model for a single task to ensure optimal performance. Fine-tuning for multiple tasks simultaneously can lead to interference and decreased performance.

How do I choose the right pre-trained model for fine-tuning?

Select a pre-trained model whose architecture and training domain align closely with your task. Also, consider models that have achieved state-of-the-art results in related tasks.

How can I avoid overfitting during fine-tuning?

To avoid overfitting, use techniques like regularization, dropout, and early stopping. Also, monitor the model's performance on validation data and adjust hyperparameters accordingly.
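Early stopping, for instance, can be sketched as watching the validation loss after each epoch and halting once it has stopped improving. The function name, the `patience` parameter, and the loss values below are assumptions for illustration.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return the index of the best epoch seen before training is halted.

    Training halts once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop before the later epochs are ever run
    return best_epoch


# Hypothetical validation loss recorded after each epoch.
losses = [0.90, 0.62, 0.48, 0.45, 0.47, 0.51, 0.40]
best_epoch = early_stopping_epoch(losses)
print(best_epoch)  # 3
```

A larger `patience` tolerates more noise in the validation loss at the cost of extra training time.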