What is Regularization?

Before diving into the deep end, let's start with the basics. Regularization is a technique used to prevent overfitting in machine learning models, ensuring they perform well not just on the training data but on unseen data too.

Definition

Regularization is a process applied during the training of a model that aims to reduce the model's complexity to prevent overfitting, ensuring a more generalized, robust performance.

Purpose

The core purpose of regularization is to improve model generalization. Overfitting happens when a model learns the training data too well, including its noise and outliers, and therefore fails to perform on new, unseen data.

How It Works

Regularization works by adding a penalty term to the loss function that grows with the size of the model's parameters. This reduces the model's freedom to fit the training data and so discourages overly complex models.
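As a sketch in generic notation (the symbols here are illustrative and not tied to any particular library), the penalized training objective can be written as:

```latex
\[
J(\theta) = L(\theta) + \lambda \, \Omega(\theta)
\]
```

Here \(L(\theta)\) is the original training loss, \(\Omega(\theta)\) is the penalty on the parameters \(\theta\), and \(\lambda \ge 0\) is the regularization strength. Setting \(\lambda = 0\) recovers the unregularized model.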

Types

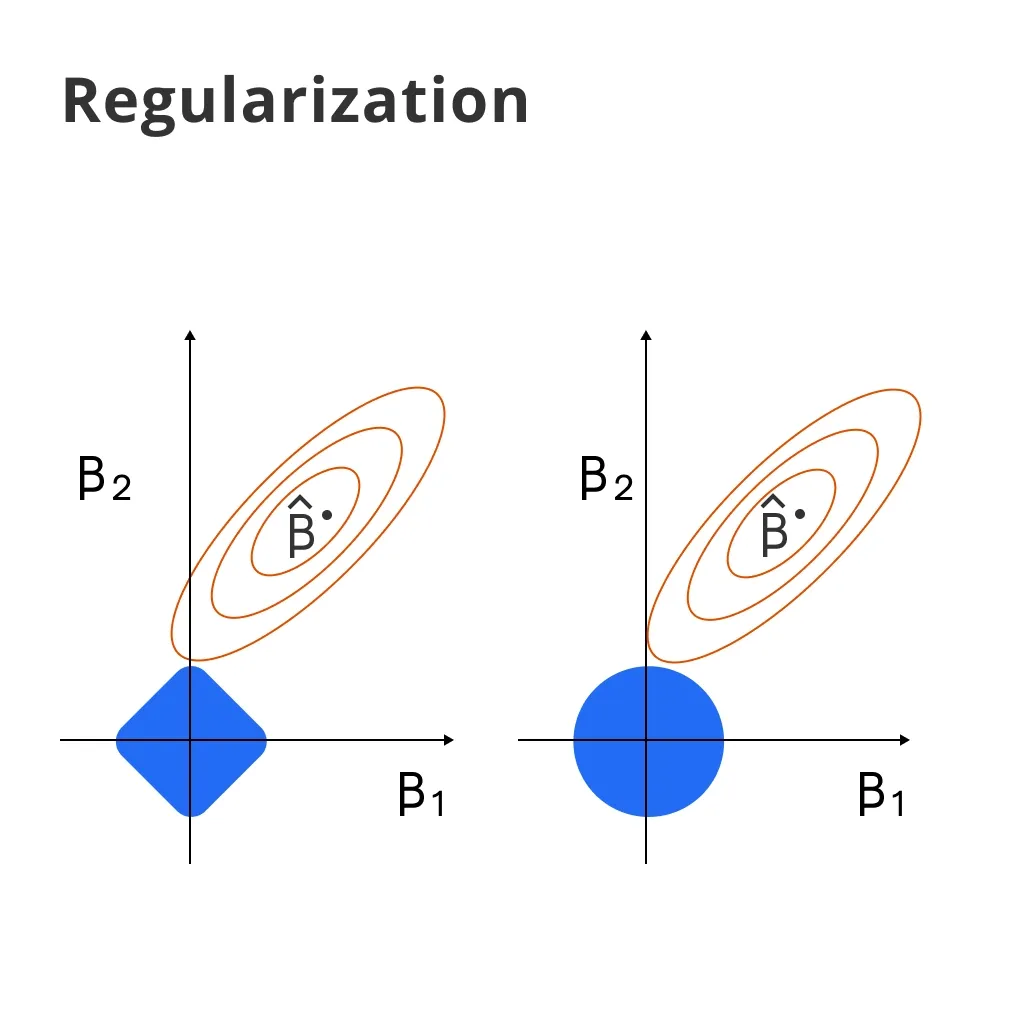

The two most common penalty-based regularization techniques in machine learning are L1 regularization (Lasso) and L2 regularization (Ridge). Each adds its penalty in its own way and serves a slightly different purpose.
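To make the difference concrete, here is a minimal pure-Python sketch of the two penalty terms. The function names and the example weights are made up for illustration; `lam` stands for the regularization strength.

```python
def l1_penalty(weights, lam):
    """L1 (Lasso) penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 (Ridge) penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


# Illustrative weights only; not taken from any real model.
weights = [0.5, -2.0, 0.0, 3.0]
print("L1 penalty:", l1_penalty(weights, lam=0.1))
print("L2 penalty:", l2_penalty(weights, lam=0.1))
```

Because L2 squares each weight, it punishes large weights much more heavily than small ones, while L1 penalizes every unit of weight equally.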

Importance

The importance of regularization lies in its ability to create models that generalize well, are less prone to overfitting, and perform reliably on unseen data.

Why is Regularization Critical?

Delving deeper, let's discuss why regularization is not just an optional tweak but a critical necessity in machine learning models.

Prevents Overfitting

The paramount reason for regularization is to avoid overfitting, ensuring that the model remains general and applicable to new data.

Enhances Model Predictive Power

By preventing overfitting, regularization enhances the model's ability to make accurate predictions on unseen data, which is the ultimate goal of any machine learning model.

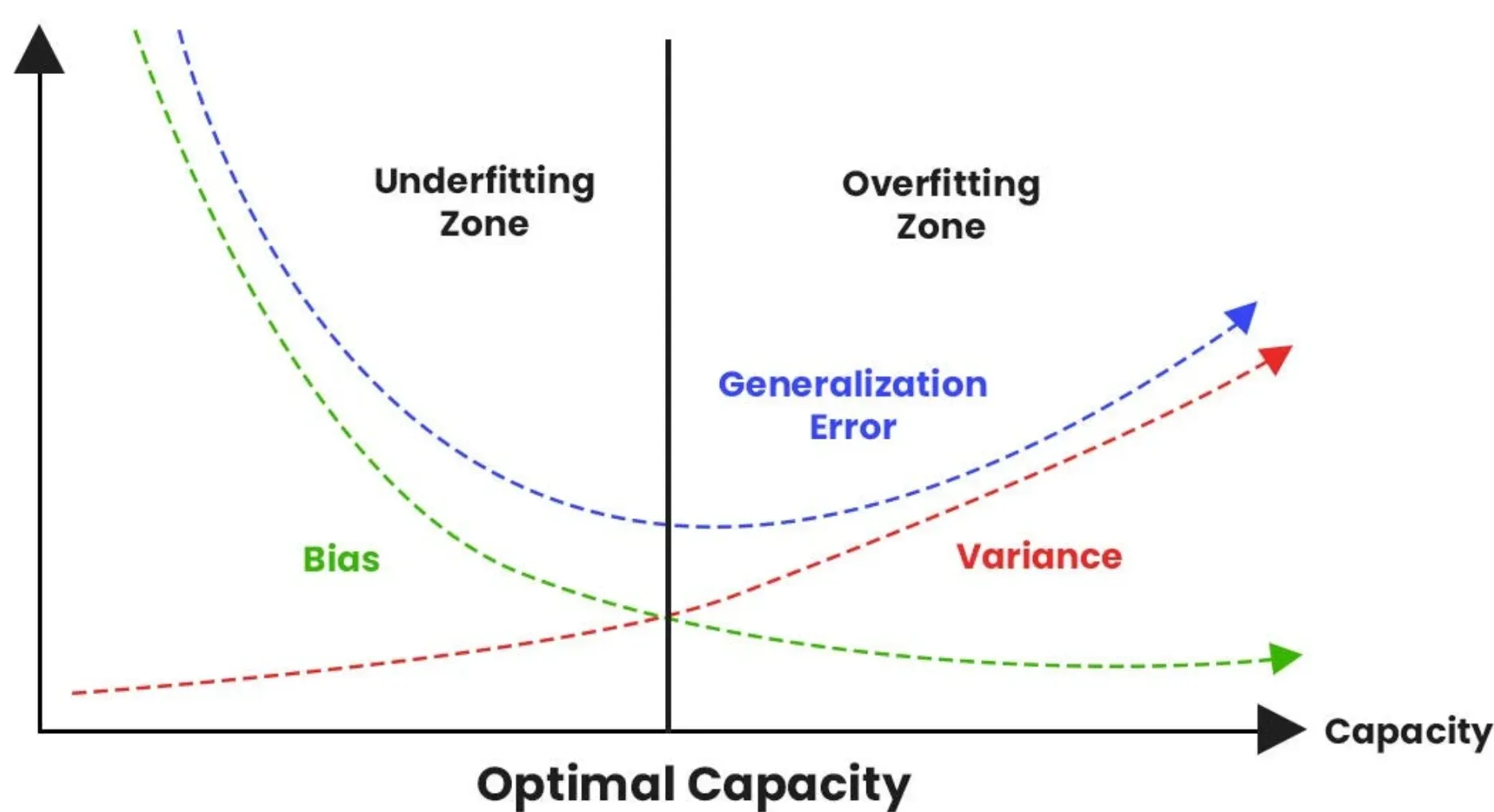

Balances Bias-Variance Tradeoff

Regularization helps manage the bias-variance tradeoff: it accepts a small increase in bias in exchange for a larger reduction in variance, which typically improves performance on unseen data.

Encourages Feature Selection

L1 regularization has the added benefit of feature selection by shrinking some coefficients to zero, effectively reducing the number of features the model considers.
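One way to see why L1 produces exact zeros is the simplified single-coefficient case. Assuming we minimize 0.5 * (w - z)**2 plus a penalty for an unpenalized estimate z, the L1 penalty lam * |w| gives the soft-thresholding rule, while the L2 penalty 0.5 * lam * w**2 only rescales. The sketch below uses hypothetical function names and made-up coefficients.

```python
def soft_threshold(z, lam):
    """L1 solution: shrink z toward zero by lam; values in [-lam, lam] become 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def l2_shrink(z, lam):
    """L2 solution: scale z down; a nonzero z never becomes exactly zero."""
    return z / (1.0 + lam)


# Illustrative unpenalized coefficients.
coefficients = [3.0, -0.4, 0.2, -1.5]
lam = 0.5
print("L1:", [soft_threshold(z, lam) for z in coefficients])
print("L2:", [l2_shrink(z, lam) for z in coefficients])
```

The small coefficients are removed entirely under L1 but merely shrunk under L2, which is the behavior that makes L1 useful for feature selection.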

Simplifies Models

Regularization simplifies models by encouraging smaller weights, which can make them easier to interpret. This is especially important in fields like healthcare or finance, where model decisions need to be explained.

When to Use Regularization?

Knowing when to apply regularization can significantly impact your model's effectiveness.

High Variance Scenarios

Whenever your model shows signs of high variance or overfitting (it performs well on training data but poorly on validation or test data), regularization should be considered.
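A rough way to check for this symptom is to compare training and validation error. The helper names and the `tolerance` threshold below are assumptions chosen for illustration; a sensible threshold depends on the metric and the problem.

```python
def generalization_gap(train_error, val_error):
    """How much worse the model does on validation data than on training data."""
    return val_error - train_error


def looks_overfit(train_error, val_error, tolerance=0.05):
    """Flag a model whose validation error exceeds training error by more
    than `tolerance` (an arbitrary, illustrative threshold)."""
    return generalization_gap(train_error, val_error) > tolerance


# Made-up error rates for two hypothetical models.
print(looks_overfit(train_error=0.02, val_error=0.20))
print(looks_overfit(train_error=0.10, val_error=0.12))
```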

Complex Datasets

For complex datasets with many features, regularization can help in simplifying the model, making sure it generalizes well.

Limited Data

In situations where the amount of data is limited, regularization can prevent the model from learning the noise in the training set.

Prior to Model Training

Regularization is a preventive measure, so it should ideally be incorporated during the model training phase, not after the model has been fully trained.

Models Prone to Overfitting

Algorithms that are inherently more complex and prone to overfitting, such as deep learning networks, greatly benefit from regularization techniques.

Who Benefits from Regularization?

Regularization isn't a one-size-fits-all solution but it has its champions who can utilize it to its full potential.

Machine Learning Practitioners

Data scientists and machine learning engineers are the primary beneficiaries, using regularization to build robust models.

Industries with Complex Data

Sectors dealing with complex and high-dimensional data, like finance, healthcare, and e-commerce, can realize improved model performance with regularization.

Academics and Researchers

Researchers in fields that utilize predictive modeling can use regularization to ensure their models are not overfitting and are generalizable.

Students Learning Machine Learning

For learners, understanding and applying regularization is a crucial step in grasping the nuances of building predictive models.

Companies Investing in AI

Organizations investing in AI-driven products benefit from regularization by ensuring their algorithms perform reliably in real-world scenarios.

Where is Regularization Applied?

Let's explore the arenas where regularization truly shines.

Predictive Modeling

In any scenario where the goal is to predict future outcomes based on historical data, regularization helps in building models that generalize well.

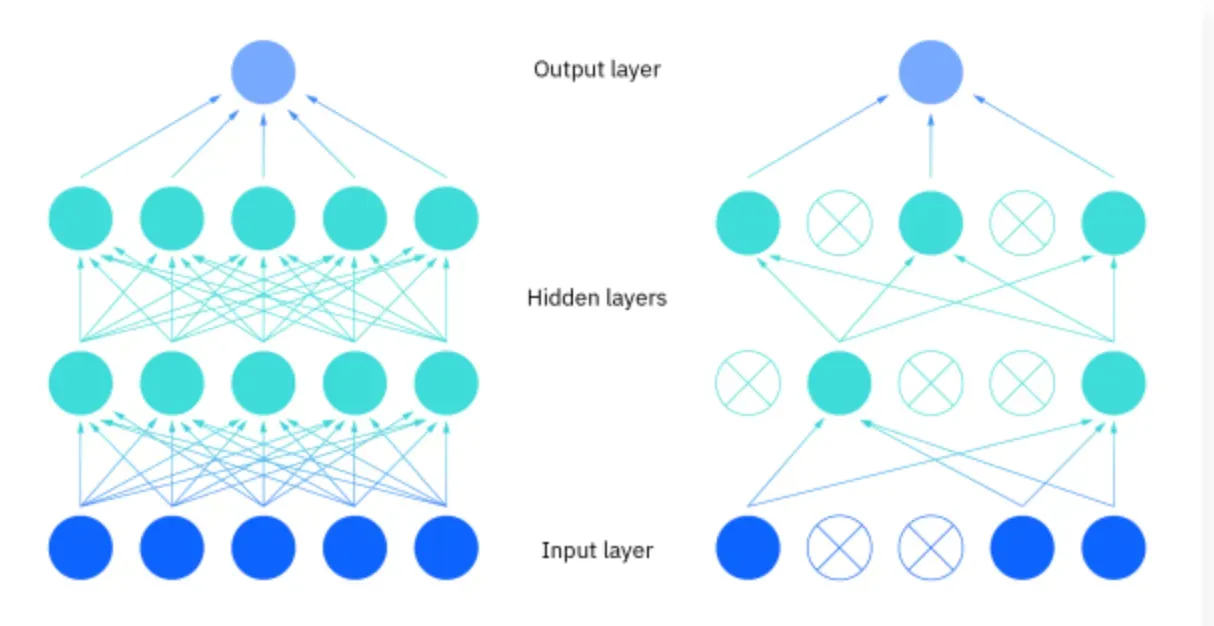

Deep Learning

Deep learning networks, known for their complexity, are at high risk of overfitting. Regularization techniques, especially dropout, are essential here.
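As a minimal sketch of the idea behind dropout, the function below implements "inverted" dropout on a plain list of activations. The inverted variant is an assumption here, since the text does not name a specific one; the function name and inputs are hypothetical. Deep learning frameworks ship their own dropout layers, which should be preferred in practice.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob and
    rescale the survivors so the expected value stays the same."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # At inference time the activations pass through unchanged.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)  # seeded only to make the run repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng))
```

Randomly silencing units during training keeps the network from relying too heavily on any single unit, which is why dropout acts as a regularizer.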

Natural Language Processing (NLP)

NLP tasks, often dealing with sparse datasets, benefit from regularization to prevent models from overfitting to the training data.

Image Recognition

Regularization techniques are critical in image recognition tasks to ensure models can generalize from their training set to new, unseen images.

Financial Analysis

In finance, where predictive models are used for risk assessment, portfolio management, etc., regularization helps in dealing with inherently noisy financial data.

How to Implement Regularization?

Implementing regularization correctly can make or break your machine learning model's performance.

Choosing the Right Technique

Understand the difference between L1, L2, and Elastic Net regularization to choose which best fits your model's needs.

Tuning Hyperparameters

Use techniques like cross-validation to find the regularization strength (commonly denoted lambda) that best balances model complexity and performance.
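The sketch below illustrates lambda selection by k-fold cross-validation on a deliberately tiny problem: a one-feature ridge regression without intercept, which has a closed-form solution. The data, candidate values, and function names are all made up for illustration; real projects would normally use a library's cross-validation utilities.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge fit for y = w * x (single feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cross_val_mse(xs, ys, lam, k=3):
    """Average validation MSE over k folds (fold i holds out every k-th point)."""
    total = 0.0
    for fold in range(k):
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k != fold]
        val = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k == fold]
        w = fit_ridge_1d([x for x, _ in train], [y for _, y in train], lam)
        total += mse(w, [x for x, _ in val], [y for _, y in val])
    return total / k


def select_lambda(xs, ys, candidates, k=3):
    """Return the candidate lambda with the lowest cross-validated MSE."""
    return min(candidates, key=lambda lam: cross_val_mse(xs, ys, lam, k))


# Made-up, roughly linear data.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
candidates = [0.0, 0.1, 1.0, 10.0]
print("selected lambda:", select_lambda(xs, ys, candidates))
```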

Feature Scaling

Before applying regularization, it's crucial to scale your features since regularization is sensitive to the scale of input features.
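The penalty treats all coefficients alike, so a feature measured in small units (which needs a large coefficient) is penalized more than the same feature in large units. Standardizing each feature to zero mean and unit variance removes that dependence. The following is a minimal sketch using only the standard library; the function name and sample values are hypothetical.

```python
from statistics import mean, pstdev


def standardize(column):
    """Rescale one feature column to zero mean and unit (population) variance."""
    mu = mean(column)
    sigma = pstdev(column)
    if sigma == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(x - mu) / sigma for x in column]


# Illustrative feature values.
incomes = [30000.0, 45000.0, 60000.0]
print(standardize(incomes))
```

In practice, the mean and standard deviation should be computed on the training set only and then reused to transform validation and test data.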

Integrating with Model Training

Regularization should be integrated as part of the model training process, not as an afterthought, to ensure it functions as intended.
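To illustrate what "part of the training process" means, the sketch below fits a one-feature linear model by gradient descent on mean squared error plus an L2 penalty lam * w**2. The penalty's gradient is added to every update step rather than applied after training. The model, learning rate, epoch count, and data are assumptions chosen to keep the example small.

```python
def train_ridge_gd(xs, ys, lam, lr=0.01, epochs=500):
    """Fit y = w * x by gradient descent on  MSE + lam * w**2."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the data-fit term (mean squared error).
        grad_loss = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Gradient of the L2 penalty, applied at every step.
        grad_penalty = 2 * lam * w
        w -= lr * (grad_loss + grad_penalty)
    return w


# Made-up data generated by y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
print("no penalty:  ", train_ridge_gd(xs, ys, lam=0.0))
print("with penalty:", train_ridge_gd(xs, ys, lam=1.0))
```

With the penalty switched on, the learned weight ends up smaller than the unpenalized one, because every step pulls it slightly toward zero.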

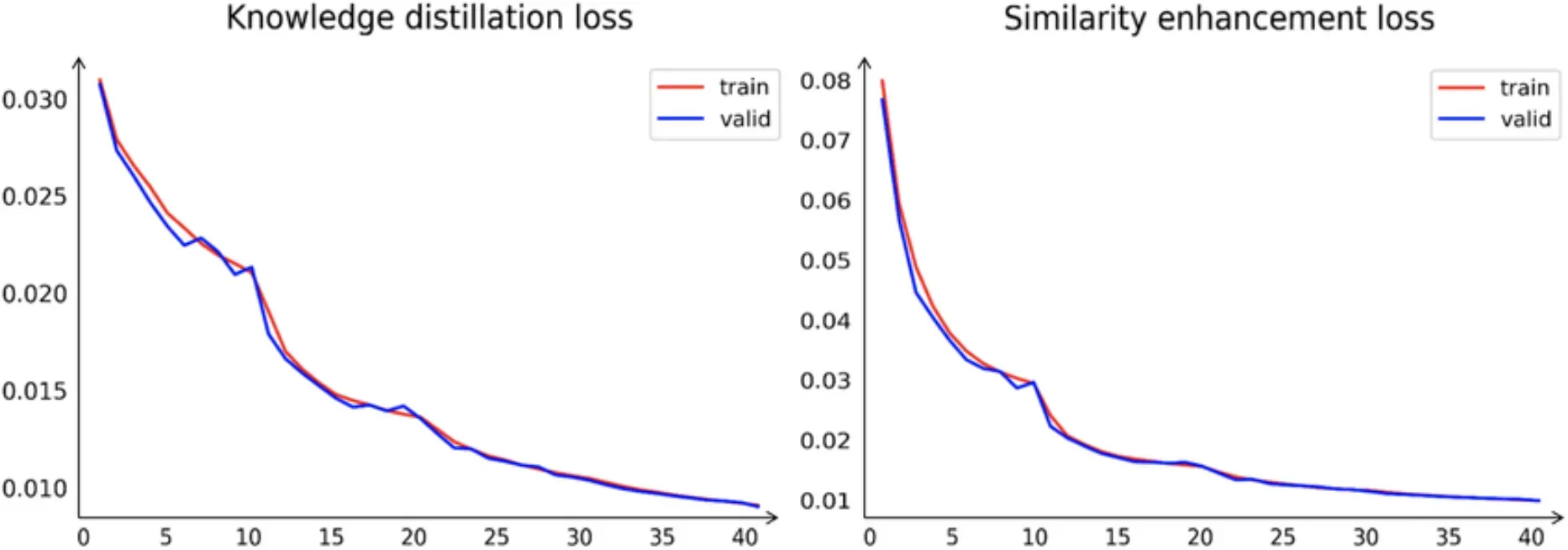

Monitoring Model Performance

Keep an eye on how regularization affects your model's performance, making adjustments as necessary based on validation data feedback.

Challenges in Regularization

Despite its usefulness, regularization comes with its set of challenges that practitioners need to be aware of.

Choosing the Right Lambda

Finding the optimal regularization strength (lambda) can be tricky and requires careful tuning.

Risk of Underfitting

Over-regularization can lead to underfitting, where the model is too simple to capture the underlying pattern in the data.
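The effect is easy to reproduce on a toy problem. Assuming a one-feature ridge regression without intercept (a simplification chosen here for its closed-form solution), the sketch below sweeps lambda and shows the weight collapsing toward zero while the training error grows. The data and lambda values are made up.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form ridge weight for y = w * x (single feature, no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def train_mse(w, xs, ys):
    """Mean squared error on the training data."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data generated by y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
for lam in [0.0, 1.0, 100.0, 10000.0]:
    w = ridge_weight(xs, ys, lam)
    print(f"lambda={lam:>8}: weight={w:.4f}, training MSE={train_mse(w, xs, ys):.4f}")
```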

Computation Cost

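Regularization can also add computational cost. The penalty term itself is usually cheap to evaluate, but tuning the regularization strength typically means training the model several times, which becomes expensive for large models.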

Balancing L1 and L2 Regularization

In Elastic Net regularization, finding the right balance between L1 and L2 regularization can be challenging.
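The balance is usually expressed as a single mixing ratio. The sketch below uses one common parameterization, with a hypothetical `l1_ratio` between 0 (pure L2) and 1 (pure L1); libraries may scale the two terms differently, so treat the exact formula as an assumption.

```python
def elastic_net_penalty(weights, lam, l1_ratio):
    """Blend of L1 and L2 penalties; l1_ratio=1 is pure L1, l1_ratio=0 is pure L2."""
    if not 0.0 <= l1_ratio <= 1.0:
        raise ValueError("l1_ratio must be between 0 and 1")
    l1 = sum(abs(w) for w in weights)
    l2 = sum(w * w for w in weights)
    return lam * (l1_ratio * l1 + (1.0 - l1_ratio) * l2)


# Illustrative weights only.
weights = [1.0, -2.0]
for ratio in [0.0, 0.5, 1.0]:
    print(ratio, elastic_net_penalty(weights, lam=1.0, l1_ratio=ratio))
```

Both `lam` and `l1_ratio` then need tuning, which is what makes Elastic Net harder to configure than either penalty alone.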

Feature Selection Bias

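L1 regularization can introduce bias in feature selection, particularly when features are correlated: it tends to keep one feature from a correlated group and shrink the others to zero, and which one survives can be somewhat arbitrary.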

Trends in Regularization

The field is constantly evolving, and staying atop trends is crucial.

AutoML and Regularization

Automated Machine Learning (AutoML) platforms are incorporating intelligent regularization techniques that automatically adjust to model needs.

Advances in Deep Learning Regularization

Regularization techniques specific to deep learning, such as dropout, have become standard practice. Batch normalization, although designed mainly to stabilize and speed up training, can also have a mild regularizing effect.

Regularization in Transfer Learning

With the rise of transfer learning, especially in deep learning, regularization plays a crucial role in adapting pre-trained models to new tasks.

Integration with Reinforcement Learning

Regularization techniques are being explored in reinforcement learning to ensure policies learned by agents generalize across different environments.

Expanding Beyond Traditional Methods

Research is ongoing into new forms of regularization that can cater to the unique needs of evolving machine learning landscapes, such as graph neural networks and federated learning.

As models become increasingly complex and data becomes ever more intricate, the principles of regularization remind us that sometimes, less is indeed more.

Approached with thoughtfulness and a clear understanding of its nuances, regularization can be the linchpin in creating models that not only perform well on paper but stand the test of real-world application.

Frequently Asked Questions (FAQs)

How does Regularization help in Machine Learning?

Regularization helps prevent overfitting in machine learning by adding a penalty term to the loss function, discouraging overly complex models, and promoting generalization.

What are the Common Types of Regularization Techniques?

The common types are L1 regularization (Lasso), L2 regularization (Ridge), and Elastic Net which is a combination of L1 and L2.

Can Regularization Improve Model Performance?

Yes, regularization can improve the performance of a machine learning model on unseen data by reducing its complexity and improving its generalization capacity.

Is Regularization relevant for all Machine Learning Algorithms?

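Not in the same form. Penalty-based regularization such as L1 and L2 is most relevant for parametric learning algorithms, where model complexity can be controlled through the model parameters. Other algorithms rely on their own complexity controls, for example limiting tree depth or pruning in decision trees.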

What is the role of the Regularization Parameter?

The regularization parameter controls the strength of the penalty term. A higher value creates a simpler model, while a lower value permits a more complex model.