What is Reinforcement Learning?

Reinforcement Learning is a type of machine learning where an agent interacts with an environment and learns to select the best actions by maximizing cumulative rewards based on positive (successful) or negative (unsuccessful) feedback from its experiences.

Why use Reinforcement Learning?

The main reasons for using Reinforcement Learning are:

- Ability to learn from interactions in complex and uncertain environments without needing explicit programming for each possible scenario

- Adaptable decision-making based on dynamic conditions

- Continuous learning and improving from sparse, delayed feedback

Who uses Reinforcement Learning?

A diverse range of professionals and researchers use Reinforcement Learning in various fields, such as:

- Robotics

- Gaming

- Finance

- Healthcare

- Supply Chain and Logistics

- Energy Management

- Telecommunications and Networking

When to Apply Reinforcement Learning?

Reinforcement Learning is most suitable when:

- The problem environment is complex and uncertain, and traditional programming methods prove ineffective

- Decisions (actions) follow a feedback loop

- Feedback is sparse, delayed, and dependent on multiple decisions

Where is Reinforcement Learning Utilized?

Reinforcement Learning is employed across multiple practical applications, including but not limited to:

- Autonomous vehicles and drones for navigation and control

- Simulation environments for training, analytics, and predicting system behavior

- Humanoid robotics or robotic manipulators in manufacturing processes

- Algorithmic trading and finance decision-making

- Personalized recommendation systems in e-commerce and media platforms

- Adaptive resource allocation and network routing in telecommunications

- Treatment plan personalization in healthcare management

Reinforcement Learning brings a paradigm shift in artificial intelligence by offering the potential to autonomously learn optimal strategies and decision-making from raw experiences in various application scenarios.

How does Reinforcement Learning work?

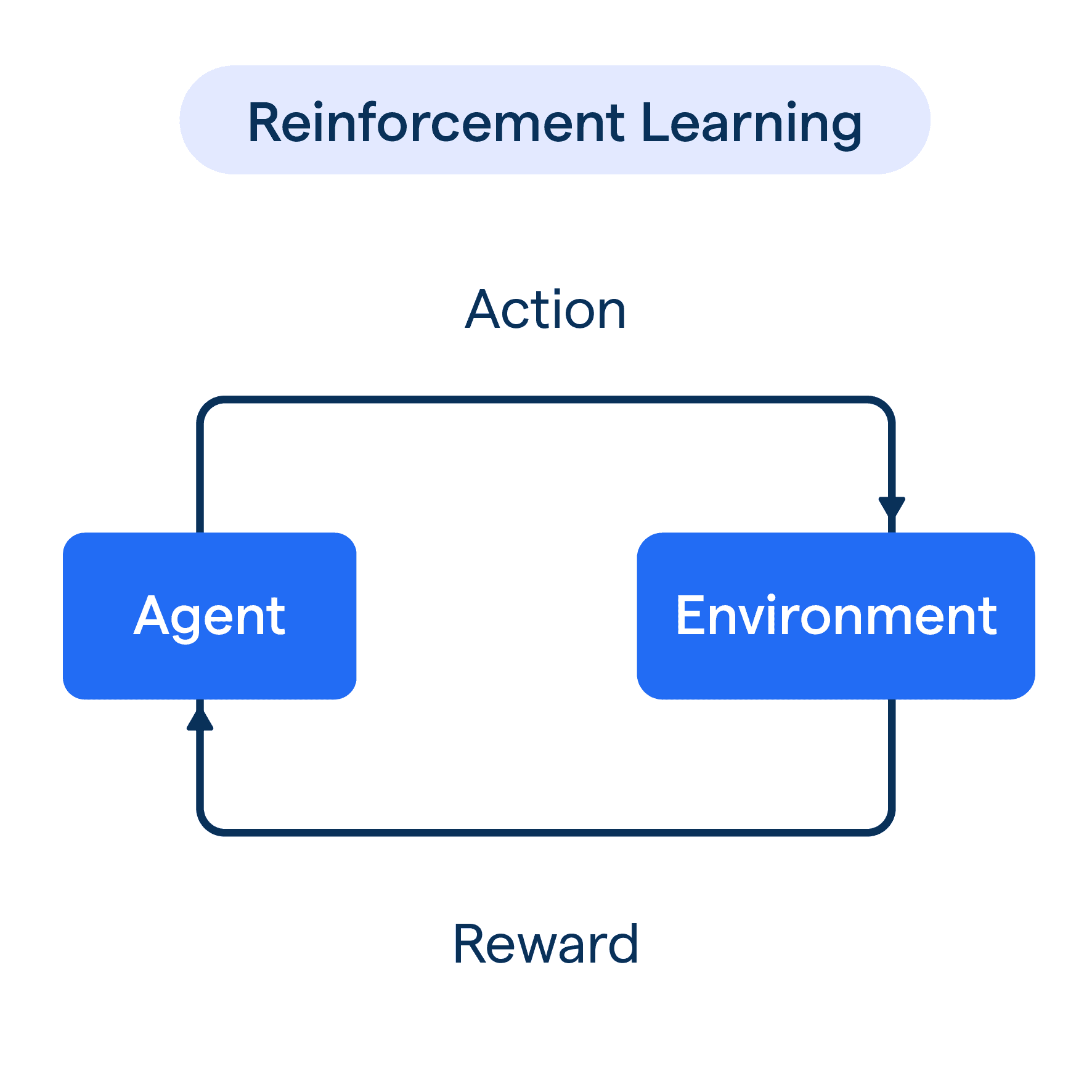

Reinforcement Learning (RL) operates on a reward-based feedback loop. The process inherently involves four main components: agent, environment, actions, and rewards.

The Agent and the Environment

In the RL paradigm, the AI model, referred to as the 'agent', interacts with an 'environment'.

The environment is what the agent operates in and could represent anything — a maze, a financial market, a game, or even simulations of real-world scenarios.

Interactions and Actions

Interactions happen when the agent takes actions, and these actions affect the environment's state. It's important to note that the same action can lead to different results depending on the current state of the environment.

Actions constitute a significant part of RL, serving as the means through which an agent interacts with the environment.

From moving left, right, forward, or backward in a maze, to buying, selling, or holding in a stock market simulation, actions are chosen strategically by the agent to maximize the return.
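The interaction loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `Corridor` environment, its `reset`/`step` methods, and the `always_right` agent are hypothetical names invented for this example.

```python
class Corridor:
    """Hypothetical 1-D environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (move left) or +1 (move right); the walls clip the move,
        # so the same action can have a different effect depending on the state
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Run one episode and return the visited (state, action, reward) steps."""
    state = env.reset()
    trajectory = []
    for _ in range(max_steps):
        action = choose_action(state)                # the agent acts
        next_state, reward, done = env.step(action)  # the environment responds
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


def always_right(state):
    """A trivial agent that ignores the state and always moves right."""
    return 1


print(len(run_episode(Corridor(), always_right)))  # 4
```

Moving left from position 0 leaves the agent where it is, while the same action from position 2 moves it to position 1, which is the state dependence noted above.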

Rewards

Every time an agent performs an action, it receives feedback from the environment in the form of a 'reward' or a 'penalty'.

Rewards are the motivating factors guiding the agent's behavior. The overarching aim of an RL agent is to maximize the cumulative reward over a set of actions or over time.
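As a rough illustration of "cumulative reward", the sketch below sums a sequence of rewards. The discount factor `gamma` is an assumption added here (the text above does not mention discounting); it is a common way to weight near-term rewards more heavily than distant ones, and `gamma=1.0` gives the plain sum.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where a reward k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future total
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [0.0, 0.0, 1.0]  # hypothetical episode: the reward arrives only at the end
print(discounted_return(rewards, gamma=1.0))  # 1.0
print(discounted_return(rewards, gamma=0.5))  # 0.25
```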

Creating Policies

Based on rewards or penalties received from its actions, an agent learns a 'policy'. A policy is a mapping from perceived states of the environment to actions to be taken.

An optimal policy is the one that allows the agent to gain maximum cumulative reward in the long run.

The agent doesn't know the consequences of its actions initially and thus explores the environment. The agent keeps track of the obtained rewards and updates its knowledge about the value of actions.

Over time, it leverages this knowledge to exploit the actions that fetched higher rewards in the past while balancing exploration and exploitation.
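One simple way to balance exploration and exploitation is an epsilon-greedy rule, sketched below on a hypothetical multi-armed bandit. The arm means, the noise level, the step count, and the value of `epsilon` are all illustrative assumptions, and epsilon-greedy is only one of several possible strategies.

```python
import random


def epsilon_greedy_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Estimate the value of each arm while mostly pulling the best-looking one."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per arm
    counts = [0] * n_arms       # number of pulls per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[arm], 0.1)  # noisy reward (assumed noise level)
        counts[arm] += 1
        # Incremental update of the running average for the pulled arm
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.1, 0.5, 0.9])
print(counts)  # the arm with the highest mean should receive most of the pulls
```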

RL Algorithms

Popular RL algorithms include Q-Learning, Deep Q Network (DQN), Policy Gradients, and Actor-Critic methods. These algorithms differ mainly in terms of how they represent the policy (either implicitly or explicitly) and the value functions.

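Q-Learning, for instance, learns an action-value function that estimates, for each state-action pair, the expected cumulative reward of taking that action and acting optimally afterwards. The policy then follows implicitly by choosing the highest-valued action in each state.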
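A minimal tabular Q-Learning sketch is shown below. The five-state corridor, the `step` function, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration; only the update rule itself is the standard Q-Learning rule.

```python
import random
from collections import defaultdict

N_STATES = 5        # hypothetical corridor: states 0..4, with the goal at state 4
ACTIONS = [-1, +1]  # move left / move right


def step(state, action):
    """Toy environment dynamics: reward 1.0 on reaching the goal, else 0.0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # q[(state, action)] -> estimated value
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                # exploit, breaking ties at random so early episodes do not stall
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-Learning update: move the estimate toward reward + gamma * max_a' Q(s', a')
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
greedy_policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(greedy_policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```

The greedy policy read off the learned table moves right in every state, which is the shortest path to the goal in this toy problem.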

DQN extends Q-Learning to high-dimensional state spaces by using a deep neural network to represent the action-value function. Policy Gradient methods, on the other hand, optimize the policy directly and do not require a value function, although many variants add one to reduce variance.

Types of Reinforcement Learning

This section gives a high-level overview of the main types of reinforcement learning, grouped by whether or not the agent learns a model of its environment.

Model-Free Reinforcement Learning

Model-free methods directly utilize data samples collected during agent-environment interactions for policy or value function estimation.

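Not relying on an explicit model of the environment keeps these methods simple and avoids errors introduced by an inaccurate model, although they typically need more interactions than model-based methods. Some common types of model-free algorithms are: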

Value-Based Methods

Value-based methods estimate state or state-action value functions, which are later used to derive optimal policies.

The most well-known value-based algorithm is Q-learning, which learns action-value functions in a model-free setting.

Policy-Based Methods

Policy-based methods directly estimate the policy - the mapping from states to actions. The aim is to discover an optimal policy by updating it based on experiences.

A popular policy-based algorithm is the REINFORCE algorithm, which uses the policy gradient method.
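The sketch below applies the REINFORCE update to a one-step problem with a softmax policy over three actions. The reward values, the learning rate, and the absence of a baseline are simplifying assumptions; a full implementation would handle multi-step episodes and usually a parameterized policy such as a neural network.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards, steps=2000, lr=0.1, seed=0):
    """REINFORCE on a one-step problem with one preference per action."""
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # policy parameters
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        ret = rewards[action]  # one-step episode, so the return is the reward
        for a in range(len(prefs)):
            # Gradient of log pi(action) with respect to prefs[a] for a softmax policy
            grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * ret * grad_log_pi
    return softmax(prefs)


probs = reinforce_bandit(rewards=[0.0, 0.5, 1.0])
print(probs)  # probability mass typically shifts toward the highest-reward action
```

Note that no value function appears anywhere: the policy parameters are adjusted directly in the direction that makes rewarded actions more likely.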

Model-Based Reinforcement Learning

Model-based methods involve learning an explicit model of the environment - a transition model (how the environment's state changes in response to selected actions) and a reward model (the reward received for each state and action). Here are two key approaches:

Planning and Control

The agent uses its learned model and an associated planning algorithm to find the optimal policy.

Planning involves running simulations using the model and choosing actions to maximize the return. One widely used planning algorithm is Monte Carlo Tree Search (MCTS).
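To make the idea of planning with a model concrete, the sketch below uses plain random rollouts, which is a much simpler technique than MCTS and is not a substitute for it. The `model` function stands in for a learned model and is hand-written here as an assumption, as are the corridor layout, rollout depth, and discount factor.

```python
import random

ACTIONS = [-1, +1]  # move left / move right
GOAL = 4            # hypothetical corridor with states 0..4


def model(state, action):
    """Stand-in for a learned model: predicts next state, reward, and termination."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def rollout_return(state, first_action, rng, depth=10, gamma=0.9):
    """Simulate one trajectory inside the model and return its discounted return."""
    total, discount, action = 0.0, 1.0, first_action
    for _ in range(depth):
        state, reward, done = model(state, action)
        total += discount * reward
        if done:
            break
        discount *= gamma
        action = rng.choice(ACTIONS)  # random actions after the first one
    return total


def plan(state, n_rollouts=200, seed=0):
    """Pick the first action whose simulated rollouts have the best average return."""
    rng = random.Random(seed)

    def average_return(action):
        returns = [rollout_return(state, action, rng) for _ in range(n_rollouts)]
        return sum(returns) / n_rollouts

    return max(ACTIONS, key=average_return)


print(plan(3))  # 1
```

From state 3, moving right reaches the goal immediately, so its simulated return is always 1.0, while any rollout that starts by moving left can earn at most 0.81. No real environment step is taken during planning; every transition comes from the model.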

Integrated Model-Free and Model-Based Learning

Some algorithms combine elements of both model-based and model-free learning. This approach can improve sample efficiency, because the agent learns from simulated experience generated by the model as well as from real experience.

One notable example is the Dyna architecture, which integrates learning, planning, and action selection in a single loop.
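A compact sketch of the Dyna idea, in its tabular Dyna-Q form, is given below. The corridor environment, the deterministic lookup-table model, and the hyperparameters are assumptions for illustration only.

```python
import random
from collections import defaultdict

ACTIONS = [-1, +1]  # move left / move right
GOAL = 4            # hypothetical corridor with states 0..4


def env_step(state, action):
    """The real environment (toy dynamics, assumed for this example)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def dyna_q(episodes=50, planning_steps=10, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)
    model = {}  # learned model: (state, action) -> (next_state, reward, done)

    def update(s, a, r, s2, done):
        target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Action selection: epsilon-greedy with random tie-breaking
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = env_step(state, action)
            update(state, action, reward, next_state, done)      # learn from real experience
            model[(state, action)] = (next_state, reward, done)  # update the model
            for _ in range(planning_steps):                      # plan with simulated experience
                s, a = rng.choice(list(model))
                s2, r, d = model[(s, a)]
                update(s, a, r, s2, d)
            state = next_state
    return q, model


q, model = dyna_q()
greedy_policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(greedy_policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```

Each real step is followed by several extra updates replayed from the model, so the value estimates spread through the table with fewer real interactions than plain Q-Learning would need.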

Applications of Reinforcement Learning

Reinforcement Learning has been applied to various fields like robotics, gaming, finance, and more. Let's check out some of the most prominent applications of Reinforcement Learning.

- Robotics: Reinforcement Learning is widely used in robotics to train agents to perform tasks such as grasping and manipulation.

- Games: Reinforcement Learning is used to train agents to play games such as Go, chess, and video games.

- Autonomous Driving: Reinforcement Learning is used in autonomous driving to train agents to make decisions about acceleration, braking, and steering.

- Finance: Reinforcement Learning is used in finance to develop trading strategies and to optimize portfolio management.

Challenges in Reinforcement Learning

Reinforcement learning is promising, but it comes with several well-known challenges. The most important ones are outlined below.

The Exploration vs Exploitation Dilemma

This challenge involves the balance between choosing actions already known to yield rewards (exploitation) and trying new actions that might yield higher ones (exploration).

Managing this trade-off is critical but often problematic in reinforcement learning.

Credit Assignment Problem

Determining which actions led to the final reward in a sequence of steps (episode) is non-trivial.

This difficulty in 'credit assignment' is a significant challenge in the learning process.

High-Dimensional Spaces

In practical applications, reinforcement learning must handle high-dimensional state-action spaces.

Traditional methods may not scale effectively, leading to complexity and computational inefficiencies.

Delayed Rewards

Delayed rewards, where an agent has to take several actions before receiving a reward, pose difficulties in assessing the value of actions. This can complicate the learning process.

Sample Efficiency

Reinforcement learning often requires a vast number of trial-and-error interactions to learn an optimal policy. Improving sample efficiency, i.e., learning more from fewer interactions, remains a challenge.

While these challenges make reinforcement learning a complex field, it's important to remember that addressing them is an active area of research, pushing the boundaries of what's possible in artificial intelligence.

Frequently Asked Questions (FAQs)

How does reinforcement learning work?

Reinforcement learning is a form of machine learning in which an agent receives rewards or penalties for the actions it takes in an environment. The agent learns by trial and error to maximize its cumulative reward.

What are some applications of reinforcement learning?

Reinforcement learning has important applications for robotics, gaming, finance, and autonomous driving, among others.

What are some challenges of reinforcement learning?

Challenges of reinforcement learning include sample inefficiency, dealing with continuous state and action spaces, and balancing exploration and exploitation.

What is deep reinforcement learning?

Deep reinforcement learning combines deep neural networks with reinforcement learning algorithms so that agents can handle complex, high-dimensional inputs such as images or text.

What are some algorithms used in reinforcement learning?

Q-Learning is a widely used model-free, value-based algorithm, while Actor-Critic methods combine value-based and policy-based learning. PPO (Proximal Policy Optimization) is a popular deep reinforcement learning algorithm.