Generative AI models are AI platforms that can generate numerous outputs based on training datasets, deep learning architecture, neural networks, and user prompts. These models are the magicians weaving their magic in the form of technology to make machines generate realistic images, create high-quality content, produce mesmerizing music, and much more.

Let us consider a practical situation to understand these Generative AI models. Imagine you are a graphic designer in need of captivating designs for promotional posters. You need not create each design from scratch. All you need to do is give your magician, the Generative AI model, a prompt instructing it to create a modern, abstract poster design with vibrant colors.

Based on their training data and deep learning architecture, Generative AI models can generate several attractive designs within seconds. You can then choose the one you feel is best and tweak it to suit your brand. Now, you are all set to use it in your campaign. This is the power of these models.

Now, let us go deeper and find out how Generative AI models work, their types, how they can transform your business, and much more. First, let us briefly look at what Generative AI models are.

What are Generative AI Models?

Generative AI models are a type of artificial intelligence that can create new images, text, audio, and more. Instead of just predicting things, they can generate brand-new creative outputs.

They learn patterns from huge amounts of data and use them to generate new outputs that follow the same patterns. Good examples include text generators, image creators, and music makers.

According to a Gartner survey, businesses using Generative AI reported, on average, a 15.8% revenue increase, 15.2% cost savings, and a 22.6% improvement in productivity.

How Do Generative AI Models Work?

Generative AI models are data-driven models with the ability to create content. These models are trained using unsupervised or semi-supervised learning methods. Hence, Generative AI models can learn by studying large amounts of data from sources like the internet, Wikipedia, books, image collections, and more.

During this learning process, they identify both the fine details and the bigger patterns. This helps them reconstruct those patterns when they generate new content, which can then appear as though it were created by a human rather than by AI.

Let us explore how Generative AI models are created using various methods and technologies.

Neural Networks

Neural network models are loosely modeled on the structure and function of the human brain. They consist of interconnected nodes, or neurons, arranged in layers that pass signals to one another.

Neural networks learn through a process called backpropagation, which fine-tunes the weights and biases of these interconnected neurons to reduce the error in the network's output.
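To make the idea of fine-tuning weights and biases concrete, here is a minimal sketch that trains a single sigmoid neuron with gradient descent. The function names, the toy dataset, and the learning rate are illustrative assumptions, not part of any particular framework.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_neuron(samples, epochs=2000, lr=0.5):
    """Fit one sigmoid neuron on (input, target) pairs using squared error."""
    w, b = 0.0, 0.0  # weight and bias start at zero (an arbitrary choice)
    for _ in range(epochs):
        for x, target in samples:
            y = sigmoid(w * x + b)        # forward pass
            error = y - target            # dLoss/dy for loss = 0.5 * (y - target)^2
            grad_z = error * y * (1 - y)  # chain rule through the sigmoid
            w -= lr * grad_z * x          # adjust the weight against its gradient
            b -= lr * grad_z              # adjust the bias against its gradient
    return w, b

# Toy data: input 0 should map to 0, input 1 should map to 1.
samples = [(0.0, 0.0), (1.0, 1.0)]
w, b = train_neuron(samples)
predictions = [sigmoid(w * x + b) for x, _ in samples]
```

In a real network the same chain rule is applied layer by layer, from the output back to the input, which is where the name backpropagation comes from.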

Deep Learning

Deep learning is a sub-field of machine learning and uses neural networks that have several hidden layers. These are called deep neural networks. They help the models understand the complex patterns and relationships in data and replicate the human brain's decision-making abilities.

This technique is used for tasks such as image recognition, speech recognition, and natural language processing.
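As a rough illustration of what "several hidden layers" means, the sketch below pushes one input through a tiny network with two hidden layers. The weights are made-up numbers chosen for readability, and the ReLU activation is one common choice assumed here.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """Run inputs through every layer, applying ReLU on the hidden layers only."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden -> 2 hidden -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.5]),
    ([[2.0, -1.0]], [0.1]),
]
output = forward([1.0, 2.0], layers)
```

Each extra hidden layer lets the network combine the features found by the previous layer, which is how deep networks capture more complex patterns.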

Training Methods of Generative AI Models

Generative AI models are trained using various methods to make them more effective. Let us find out more below.

Unsupervised Learning

As the name suggests, unsupervised learning is a machine learning method that learns from data without human-provided labels. It lets Generative AI models train themselves on unlabeled data and recognize its structures, patterns, and relationships.

You can use this method where labeled data is unavailable or scarce. It works well in areas like customer segmentation and image recognition.
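Customer segmentation is a handy way to see unsupervised learning at work. The sketch below uses a simple one-dimensional k-means loop, a classic clustering technique chosen here for illustration; the spend figures and starting centers are invented.

```python
def kmeans_1d(values, centers, iterations=10):
    """Group numbers around the nearest center, then move each center to its group mean."""
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(group) / len(group) if group else centers[i]
                   for i, group in enumerate(clusters)]
    return centers, clusters

# Hypothetical monthly spend per customer; no labels are provided.
spend = [12, 15, 14, 90, 95, 88]
centers, clusters = kmeans_1d(spend, centers=[0.0, 100.0])
```

Nobody told the algorithm which customers are low or high spenders; the two groups emerge from the data alone.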

Semi-Supervised Learning

Semi-supervised learning combines supervised and unsupervised learning. The training data contains both labeled and unlabeled examples, with the labeled portion being small. You can use this method when it is difficult to obtain enough labeled data but unlabeled data is easily available.

With the labeled data, Generative AI models can learn initial patterns and use them to predict labels for the unlabeled data.
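One simple way to picture this is self-training, where a model fitted on a few labeled points assigns provisional labels to the unlabeled ones and is then refitted. The sketch below assumes a nearest-centroid classifier and invented data; it is one of several semi-supervised approaches, not the only one.

```python
def self_train(labeled, unlabeled, rounds=3):
    """labeled: list of (value, label) pairs; unlabeled: list of values."""
    training = list(labeled)
    pseudo = []
    for _ in range(rounds):
        # Fit: compute one centroid per label from the current training set.
        groups = {}
        for value, label in training:
            groups.setdefault(label, []).append(value)
        centroids = {label: sum(vals) / len(vals) for label, vals in groups.items()}
        # Predict: give every unlabeled value the label of its nearest centroid.
        pseudo = [(v, min(centroids, key=lambda lab: abs(v - centroids[lab])))
                  for v in unlabeled]
        # Refit next round on the real labels plus the provisional ones.
        training = list(labeled) + pseudo
    return pseudo

labeled = [(1.0, "low"), (9.0, "high")]  # only two labeled examples
unlabeled = [2.0, 3.0, 8.0, 6.0]
pseudo_labels = self_train(labeled, unlabeled)
```

In practice, provisional labels are usually kept only when the model is confident about them, a filter omitted here for brevity.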

Generative AI vs LLM

When you learn about Generative AI models, you will inevitably come across Large Language Models (LLMs) too. The two concepts are related, yet they differ notably in focus, training data, and applications. Let us compare Generative AI and LLMs on these parameters.

| Parameter | LLMs | Generative AI |
| --- | --- | --- |
| Focus | Their major focus is to understand and generate human language. They can process and analyze text data, making them appropriate for natural language processing (NLP) tasks. | Generative AI is broader. It can generate content such as text, images, music, and code, and doesn't stop with language generation alone. |
| Training Data | They are trained on large text datasets from sources like the internet. They learn language patterns, grammar structure, and meaning from the data on which they are trained. | Based on their application, these models can be trained on various types of data. They learn to generate new content that resembles the existing patterns. |
| Applications | They are mainly used for text-related tasks like translation, summarization, text completion, and sentiment analysis. | Generative AI uses are not limited to text. It is used for tasks like content creation and simulation. |

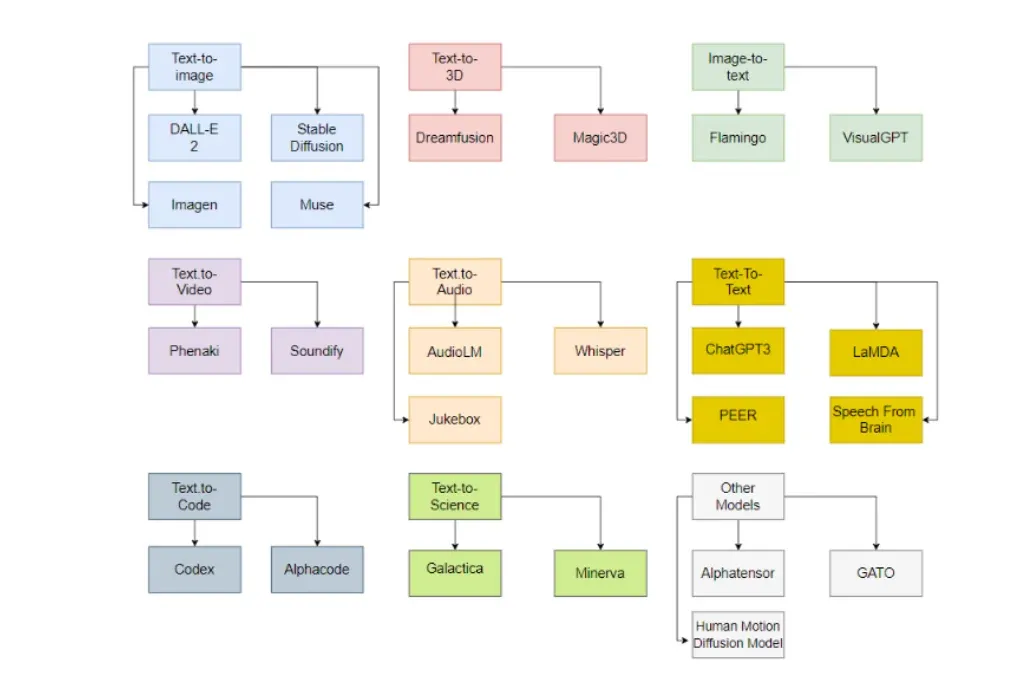

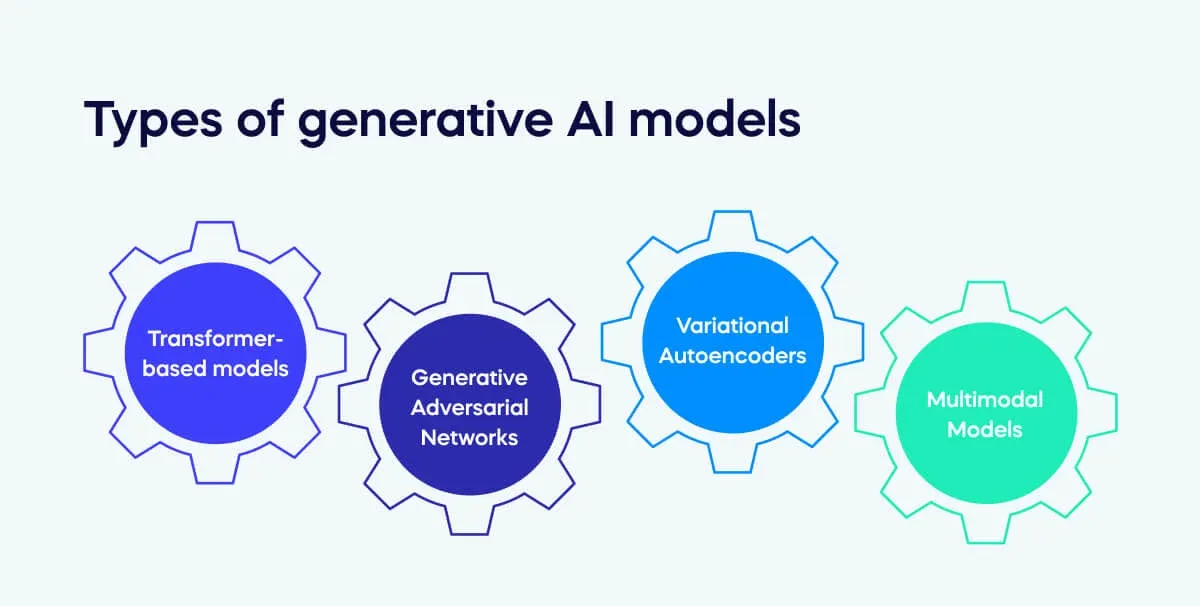

Types of Generative AI Models

There are various types of Generative AI models and each has its own strengths and applications. Let us consider a few of these common types of models below.

Variational Autoencoders (VAEs)

VAEs are generative AI models that use unsupervised learning to encode and decode data. They consist of an encoder network that maps input data to a latent space and a decoder network that reconstructs the data from the latent space. They are widely used in tasks like image generation, image reconstruction, and data compression.
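The sketch below shows two pieces commonly found between a VAE's encoder and decoder: sampling a latent vector with the reparameterization trick, and the KL term that keeps the latent space close to a standard normal distribution. These details come from the standard VAE formulation rather than from this article, and the function names are illustrative.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, where eps is standard normal noise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

# Pretend an encoder produced these values for one input (made-up numbers).
mu, log_var = [0.3, -1.2], [0.0, -0.5]
z = reparameterize(mu, log_var, random.Random(0))
penalty = kl_to_standard_normal(mu, log_var)
```

During training, the decoder would reconstruct the input from `z`, and the loss would combine the reconstruction error with `penalty`.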

Generative Adversarial Networks (GANs)

GANs are Generative AI models that follow a game-theoretic approach. They consist of a generator network that produces synthetic data and a discriminator network that distinguishes between real and generated samples.

The two networks are trained adversarially, with the generator trying to generate realistic data to fool the discriminator. GANs have shown remarkable results in various domains, including image and video generation, transfer learning, and data augmentation.
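The adversarial game can be summarized by two loss functions. This is a minimal sketch that assumes the discriminator outputs a probability that a sample is real, and it uses the widely used non-saturating generator loss; the networks themselves are left out.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring generated samples near 1."""
    return -math.log(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 for everything.
confused = discriminator_loss(d_real=0.5, d_fake=0.5)
# A generator whose samples score 0.9 is doing better than one scoring 0.1.
good_generator, poor_generator = generator_loss(0.9), generator_loss(0.1)
```

Training alternates between lowering the discriminator loss and lowering the generator loss, which is why the two networks have to stay roughly in balance.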

Autoregressive Models

Autoregressive models are sequential Generative AI models that predict the probability distribution of the next element in a sequence, conditioned on the previous elements. They generate data by sampling from the predicted distribution and iteratively generating the next element of the sequence.

Autoregressive models are commonly used for tasks like text generation, speech synthesis, and time series prediction.
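A character-level bigram model is about the smallest autoregressive model possible: the next character depends only on the previous one. The sketch below is illustrative, with a made-up training string; real models condition on much longer histories.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def next_distribution(counts, prev):
    """Probability of each possible next character, given the previous one."""
    total = sum(counts[prev].values())
    return {ch: n / total for ch, n in counts[prev].items()}

def generate(counts, start, length, rng):
    """Sample one character at a time, feeding each choice back in as context."""
    out = [start]
    for _ in range(length - 1):
        dist = next_distribution(counts, out[-1])
        if not dist:  # no observed continuation, so stop early
            break
        chars, probs = zip(*dist.items())
        out.append(rng.choices(chars, weights=probs)[0])
    return "".join(out)

counts = fit_bigram("abababac")
dist = next_distribution(counts, "a")
sample = generate(counts, "a", 6, random.Random(0))
```

Here `dist` gives "b" three times the probability of "c" after an "a", because that is what the training string contains.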

Flow-Based Models

Flow-based models are Generative AI models that transform complex data distributions into simple ones. They apply an invertible transformation to the input data, so the mapping can easily be reversed. To generate new samples, they start with random noise and apply the reverse transformation, which is fast and does not require complex optimization.

They can be used in domains like image generation, where they can generate high-quality images with controllable attributes.
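The simplest invertible transformation is a one-dimensional scale and shift. The sketch below is a toy under that assumption (real flow-based models stack many learned invertible layers), and the class and method names are hypothetical.

```python
import math
import random

class AffineFlow:
    """Toy flow: data = scale * noise + shift, with standard normal noise."""

    def __init__(self, scale, shift):
        if scale == 0:
            raise ValueError("scale must be non-zero so the transform is invertible")
        self.scale, self.shift = scale, shift

    def to_data(self, z):
        """Map simple noise to the data space (used for sampling)."""
        return self.scale * z + self.shift

    def to_noise(self, x):
        """Exact inverse: map a data point back to the simple distribution."""
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        """Change of variables: base log-density minus log|scale|."""
        z = self.to_noise(x)
        log_base = -0.5 * (z * z + math.log(2 * math.pi))
        return log_base - math.log(abs(self.scale))

flow = AffineFlow(scale=2.0, shift=3.0)
rng = random.Random(0)
new_sample = flow.to_data(rng.gauss(0.0, 1.0))  # sampling is a single pass
```

Because the inverse is exact, the same object can both generate samples and score how likely a given data point is.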

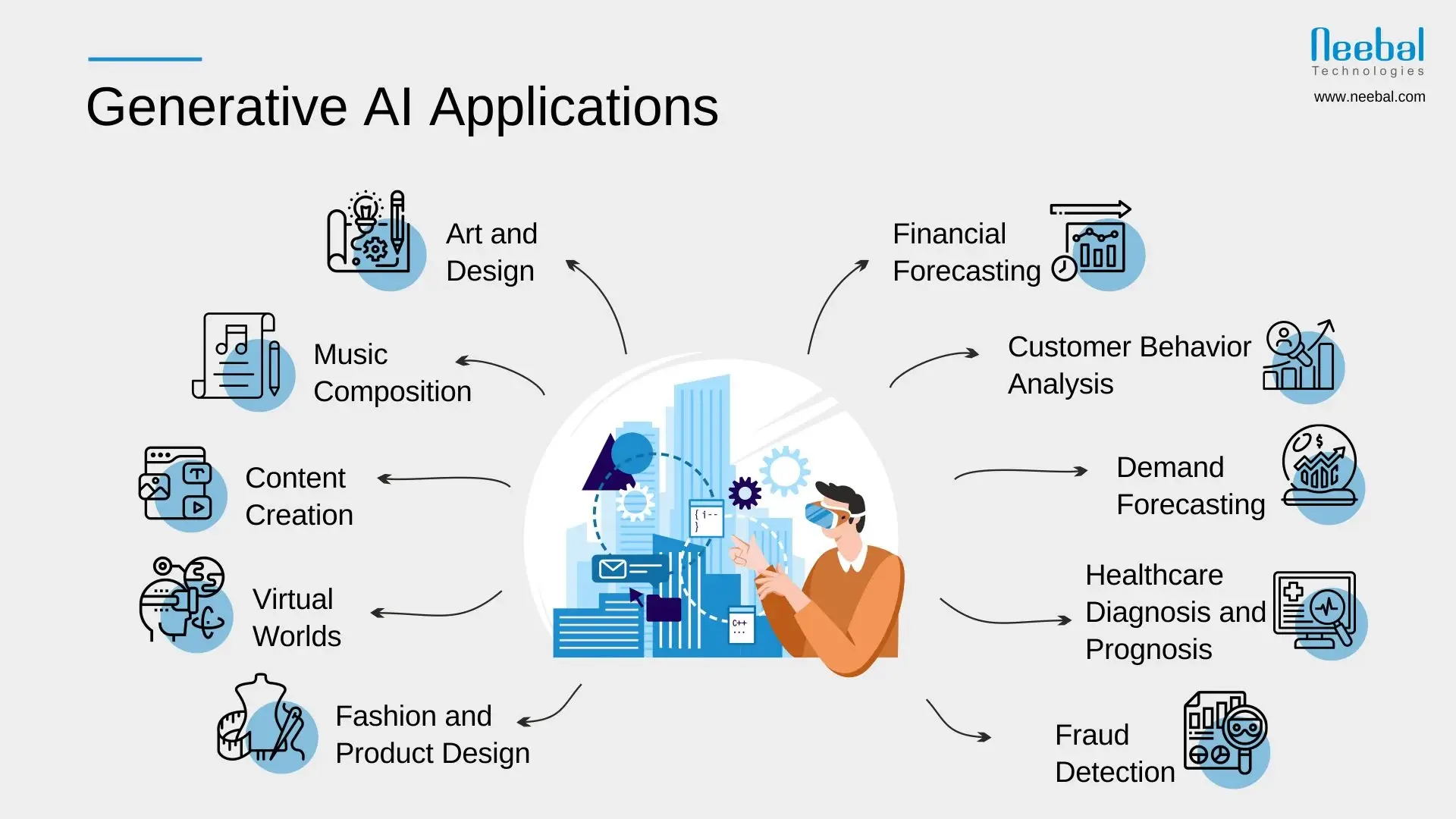

Applications of Generative AI Models

Generative AI is already in use across many businesses. In areas like computer vision, for example, Generative AI models can greatly improve data augmentation. Let us explore more areas where they can prove helpful.

Image Generation

A popular Generative AI use case is image generation. This technology is used to create synthetic images that resemble real ones.

Image generation has applications in various fields, from generating synthetic images for computer graphics and virtual reality to aiding artists and designers in generating new visual concepts.

Text Generation

Text generation using Generative AI models allows machines to create coherent and contextually relevant text content. These models have applications in chatbots, virtual assistants, content creation, and machine translation.

Taking your first step towards Generative AI-powered chatbots isn't that tough. Meet BotPenguin, the home of chatbot solutions. With all the heavy work of chatbot development already done for you, all that remains is to set up this AI chatbot for your business. It offers features like:

- Marketing Automation

- WhatsApp Automation

- Customer Support

- Lead Generation

- Facebook Automation

- Appointment Booking

Music Generation

Generative AI models have also succeeded in generating music that mimics different styles and genres. By training on a large dataset of musical compositions, models like recurrent neural networks (RNNs) or autoregressive models can generate original melodies, harmonies, and even lyrics.

Music generation has applications in entertainment, creative production, and music composition assistance tools.

Data Augmentation

Generative AI models can augment datasets by generating synthetic data similar to the original dataset.

This can help address challenges related to training data scarcity and imbalance. By generating additional samples, generative models can improve the generalization and robustness of machine learning models.

Data augmentation is particularly useful in computer vision, natural language processing, and medical imaging.
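As a rough picture of how synthetic samples can balance a dataset, the sketch below pads an under-represented class by adding small random noise to existing samples. This noise-based oversampling is a deliberately simple stand-in for a trained generative model, and the function name and numbers are hypothetical.

```python
import random

def augment_with_jitter(samples, target_size, noise_scale, rng):
    """Return the originals plus noisy copies until target_size is reached."""
    augmented = [list(row) for row in samples]
    while len(augmented) < target_size:
        base = rng.choice(samples)  # pick an existing sample to imitate
        augmented.append([x + rng.gauss(0.0, noise_scale) for x in base])
    return augmented

# Hypothetical minority class with only two feature vectors.
minority = [[1.0, 2.0], [1.5, 2.5]]
balanced = augment_with_jitter(minority, target_size=6, noise_scale=0.05,
                               rng=random.Random(0))
```

A generative model would replace the jitter step with samples drawn from a learned distribution, which can produce more varied and realistic data.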

Challenges in Generative AI Models

Generative AI models, though quite helpful, also have their own limitations. These challenges are due to the complicated nature of the tasks that the model handles, ethical impacts, and others. Let us explore them below.

Training Instability

One of the primary challenges with Generative AI models is training instability. Generative models, such as GANs, often require a delicate balance between the generator and discriminator networks to converge to an optimal solution.

However, finding this balance can take time and effort, resulting in training instability. Training instability can lead to difficulties in achieving convergence, slow training progress, and mode collapse, where the generator produces limited and repetitive outputs.

Various techniques, such as adjusting learning rates, network architectures, and regularization methods, have been developed to address training instability in generative AI.

Mode Collapse

Mode collapse is a specific manifestation of training instability in generative AI models. It occurs when the generator of a GAN fails to explore the entire distribution of the training data and produces limited variations.

In other words, the generator collapses to generate a few representative samples, disregarding the full diversity of the target distribution. Mode collapse hampers the model's ability to generate diverse and novel samples.

Researchers have proposed several techniques, such as adding diversity-promoting objectives or regularization terms, to mitigate mode collapse and encourage the generation of a more diverse range of outputs.
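On toy problems where the modes of the target distribution are known, mode collapse can be checked by counting how many modes the generated samples actually reach. The sketch below is a hypothetical diagnostic for such a setup, not a standard library function; the mode positions and radius are invented.

```python
def mode_coverage(samples, modes, radius):
    """Fraction of known modes that have at least one sample within radius."""
    covered = set()
    for sample in samples:
        nearest = min(modes, key=lambda m: abs(sample - m))
        if abs(sample - nearest) <= radius:
            covered.add(nearest)
    return len(covered) / len(modes)

modes = [0.0, 5.0, 10.0]            # the target data has three clusters
collapsed = [0.1, -0.05, 0.02, 0.08]  # generator only ever lands near 0
diverse = [0.1, 4.9, 10.2, 5.1]       # generator reaches every cluster

collapsed_score = mode_coverage(collapsed, modes, radius=0.5)
diverse_score = mode_coverage(diverse, modes, radius=0.5)
```

A score well below 1.0 suggests the generator is ignoring part of the target distribution.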

Ethical Considerations

As Generative AI models become more powerful and capable, they raise important ethical considerations. One of the key concerns is the potential for misuse and the creation of deepfakes or fake content that can spread misinformation or be used for malicious purposes.

These AI-generated fakes have the potential to deceive individuals and undermine trust.

Suggested Reading:

Understanding Generative and Discriminative Models: Which One Should You Choose?

Recent Advances and Future Prospects in Generative AI Models

As Generative AI models become more accessible and user-friendly, they can allow you to use the power of AI in various applications, positively impacting industries, innovation, and society as a whole.

OpenAI's GPT

OpenAI's GPT (Generative Pre-trained Transformer) is a language model that has garnered significant attention for its impressive capabilities in natural language understanding and generation.

GPT-3, a version of the model with a staggering 175 billion parameters, has demonstrated remarkable proficiency in tasks like text completion, language translation, and even creative writing.

This model uses unsupervised learning on a massive scale and has set new benchmarks for generative AI. GPT represents a culmination of recent advances in transformer-based architectures and has sparked enormous interest in natural language processing.

Potential Applications in Various Fields

The emergence of models like GPT-3 opens up various applications.

In industries such as customer support and chatbots, GPT-3 can provide more sophisticated and human-like interactions. It can also automate tasks like content generation, writing code, or even creating entire websites. In healthcare, GPT-3 can assist with medical diagnosis and treatment recommendations based on analyzing patient data and medical literature.

Also, GPT-3 can aid in language translation, sentiment analysis, and information retrieval, making it valuable in journalism, marketing, and research.

Impact on Industries and Society

Advancements in Generative AI, such as models like GPT-3, can potentially transform industries and society. By automating tasks that previously required human effort, these models can enhance productivity and streamline workflows.

They can transform content creation, freeing up time for creatives and enabling the generation of personalized and tailored content at scale. The impact extends to customer service, where GPT-3 can provide more efficient and satisfying interactions.

However, the widespread adoption of such models also raises concerns about job displacement and the need to reevaluate workforce skills and job market dynamics.

Conclusion

Generative AI models are transforming the way businesses operate and optimize their processes. As these technologies continue to advance, you can expect more innovations in this field. Very soon, you may find Generative AI assisting with or automating many tasks across industries.

However, it is very important that you develop these systems responsibly and also ensure that they are trained with fair data, follow ethical standards, and are used safely.

When you take the necessary precautions to avoid potential risks, implementing Generative AI models can open new doors of opportunity and allow you to enjoy their benefits.

Frequently Asked Questions (FAQs)

Are Generative AI models reliable?

The reliability of Generative AI models depends largely on their training data. Hence, you have to train these models on accurate, unbiased, and trusted sources with verifiable data. This way, they are more likely to generate outputs with accurate information.

How can Generative AI models improve customer experience?

Generative AI models in customer service can provide personalized support to users. They can also draw insights from datasets to help you understand the market, so that you can fine-tune your products or services.

What are the applications of Generative AI models?

Generative AI models find applications in areas like image synthesis, text generation, music composition, and even drug discovery. They can be used to generate realistic images, create virtual characters, assist in content creation, and support creative tasks in multiple domains.

What is the difference between Generative AI models and Discriminative AI models?

Generative AI models focus on generating new data, while discriminative models focus on classification tasks by distinguishing among existing data. Generative models learn to capture the underlying distribution, whereas discriminative models learn to distinguish between different classes or categories.

What are the challenges faced in training generative AI models?

Training generative AI models can be challenging due to issues like training instability and mode collapse. These challenges can impact convergence, slow down training progress, and lead to the generation of limited and repetitive outputs.

How can Generative AI models be used responsibly?

To ensure the responsible use of Generative AI models, ethical considerations should be taken into account. Guidelines and regulations can be implemented to address concerns related to deepfakes, privacy, bias, and content integrity, promoting transparency, fairness, and accountability in their development and deployment.