A key to advancing machine learning is understanding the complex relationship between data and models. In this blog, we'll explore two types of models: Generative and Discriminative models.

Generative models create realistic new examples by capturing underlying patterns. We'll take a closer look at how generative adversarial networks generate faces and how variational autoencoders compose music.

In contrast, Discriminative models focus on classification through learned boundaries. We'll examine how logistic regression performs sentiment analysis and how convolutional neural networks recognize images.

By comparing techniques like modeling joint distributions versus conditional probabilities, you will gain valuable insights into applying the right model for tasks like anomaly detection, data augmentation, and more.

Whether interested in vision, language, or other domains, this blog delivers an accessible overview of generative and discriminative methods and their wide-ranging applications.

So, let’s get started with generative models.

What are Generative Models?

In machine learning, a generative model is one that builds a statistical model of a dataset's underlying distribution.

A generative model learns patterns from a dataset and tries to create a new sample with similar characteristics.

A generative model is a system that tries to understand the data and create a probability distribution that matches the dataset's patterns. The idea behind a generative model is that it can learn to create new data points similar to the original dataset.

Examples of Generative Models

Generative models capture patterns in data to generate realistic new examples. This section explores some commonly used generative models.

Here are examples of widely applied generative models.

Gaussian Mixture Models (GMMs): A Gaussian Mixture Model represents the density function of a given dataset as a mixture of several Gaussian distributions. In simpler terms, a GMM can identify the distribution of a set of continuous data and then mimic it.

Hidden Markov Models (HMMs): A Hidden Markov Model is a probabilistic framework that can model time series data. HMMs are used to predict the next observation in a sequence based on the hidden states of the process.

Variational Autoencoders (VAEs): A Variational Autoencoder is a type of neural network that can learn the underlying distribution of the input data and generate new data points. A VAE does this by mapping input data to a latent space, which is used to reconstruct the input or generate new data points.

Generative Adversarial Networks (GANs): Generative Adversarial Networks (GANs) use two neural networks: a generator creates data, and a discriminator checks if it's real. The generator refines its output to fool the discriminator.
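To make the idea of "mimicking a distribution" concrete, here is a minimal sketch of sampling from a Gaussian mixture. The component weights, means, and standard deviations are made up for illustration, and the helper names `gmm_density` and `gmm_sample` are hypothetical. A real GMM would learn these parameters from data, typically with the EM algorithm.

```python
import math
import random

# Hypothetical two-component 1-D mixture: (weight, mean, standard deviation).
COMPONENTS = [(0.3, -2.0, 0.5), (0.7, 3.0, 1.0)]


def gmm_density(x, components=COMPONENTS):
    """Mixture density: weighted sum of the Gaussian densities at x."""
    total = 0.0
    for weight, mean, std in components:
        z = (x - mean) / std
        total += weight * math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))
    return total


def gmm_sample(rng, components=COMPONENTS):
    """Draw one point: pick a component by weight, then sample from it."""
    weights = [w for w, _, _ in components]
    _, mean, std = rng.choices(components, weights=weights, k=1)[0]
    return rng.gauss(mean, std)


rng = random.Random(0)
samples = [gmm_sample(rng) for _ in range(5000)]
share_left = sum(s < 0.5 for s in samples) / len(samples)
print(f"share of samples from the left-hand cluster: {share_left:.2f}")
print(f"density at x=3.0: {gmm_density(3.0):.3f}")
```

The share of samples falling in the left-hand cluster should land close to that component's weight, which is what it means for generated data to follow the modeled distribution.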

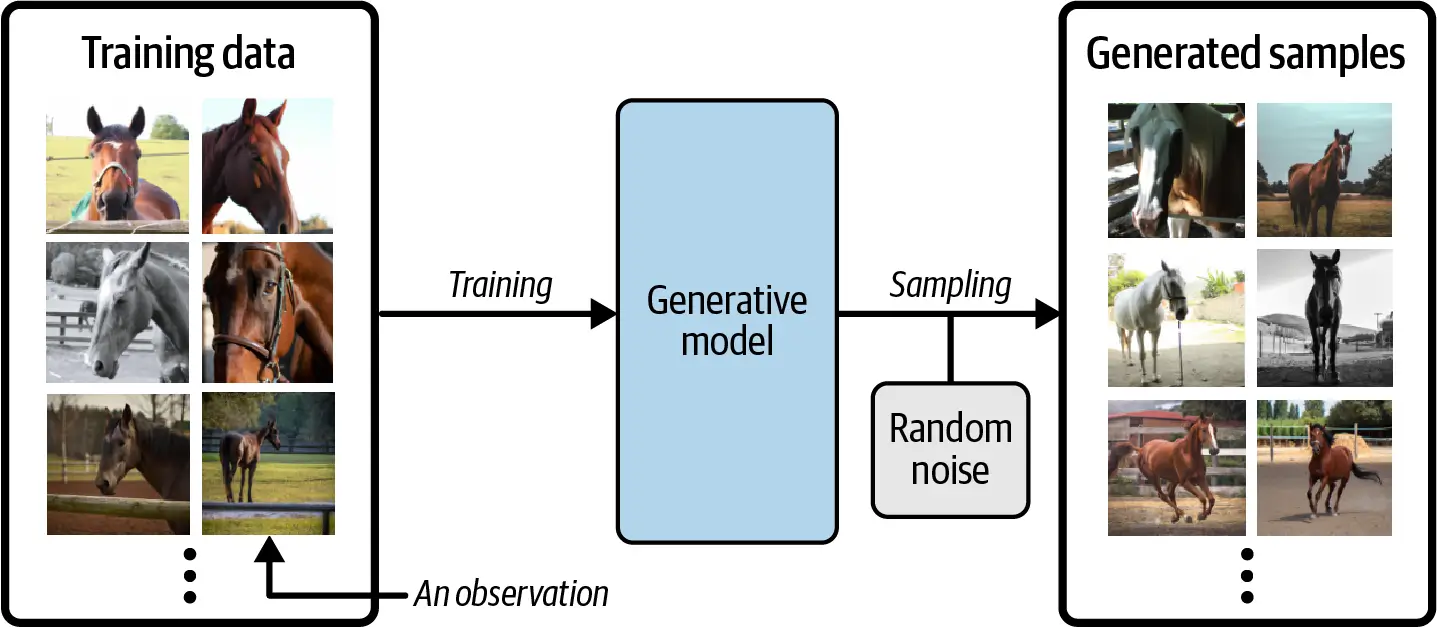

How do Generative Models Work?

Generative models work on learning the underlying probability distribution of a given dataset.

They aim to understand the patterns and structures in the data and then generate new samples that capture the same distribution.

The fundamental idea behind generative models is to create a model that can generate new data points statistically similar to the original dataset.

To do this, generative models use techniques such as density estimation, latent variable modeling, and probabilistic graphical models.

Training and Inference Process of Generative Models

The training process of generative models involves learning the parameters that describe the underlying probability distribution of the dataset. This typically requires a large amount of training data, which often does not need to be labeled.

The model optimizes its parameters iteratively during training to maximize the probability of producing the observed data.

In practice, this is usually framed as minimizing a loss function, such as the negative log-likelihood, that measures the discrepancy between the model's distribution and the original data.

The generative model can be applied to inference or sample generation after training. Inference involves using the learned model to make predictions or estimate the probability of unseen data. The model can generate new samples by randomly sampling from the learned distribution.
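As a rough illustration of this train-then-sample loop, the sketch below fits the simplest possible generative model, a single Gaussian, by maximum likelihood. The dataset is synthetic, and the function names `fit_gaussian` and `log_likelihood` are hypothetical. Deep generative models follow the same outline but use iterative optimization instead of a closed-form fit.

```python
import math
import random
import statistics


def fit_gaussian(data):
    """Maximum-likelihood estimates of the mean and standard deviation."""
    mean = statistics.fmean(data)
    std = statistics.pstdev(data, mu=mean)  # the population form is the MLE
    return mean, std


def log_likelihood(data, mean, std):
    """Sum of Gaussian log-densities of the data under (mean, std)."""
    const = -0.5 * math.log(2 * math.pi) - math.log(std)
    return sum(const - 0.5 * ((x - mean) / std) ** 2 for x in data)


rng = random.Random(42)
# Synthetic, unlabeled "observed" dataset (an assumption for this sketch).
observed = [rng.gauss(10.0, 2.0) for _ in range(2000)]

mean, std = fit_gaussian(observed)                       # training
new_samples = [rng.gauss(mean, std) for _ in range(5)]   # sample generation
fitted_ll = log_likelihood(observed, mean, std)          # inference: scoring data
shifted_ll = log_likelihood(observed, mean + 1.0, std)   # deliberately worse fit

print(f"fitted mean={mean:.2f}, std={std:.2f}")
print(f"log-likelihood at fit: {fitted_ll:.1f}, with shifted mean: {shifted_ll:.1f}")
```

The fitted parameters give a higher log-likelihood than the shifted ones, which is the sense in which training "maximizes the probability of the observed data".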

Applications of Generative Models

Generative models have found applications in various domains where the ability to generate new data is valuable. Some areas where generative models excel include:

Image Generation: Generative models can generate realistic images, such as generating new faces or creating artwork.

Text Generation: Generative models can generate new text that resembles human-written content, which can be helpful in natural language processing tasks.

Anomaly Detection: Generative models can detect anomalies in data by identifying samples that deviate significantly from the learned distribution.

Data Augmentation: Generative models can generate additional training examples, helping improve the performance of other machine learning models.
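The anomaly detection use case can be sketched in a few lines: fit a density to data considered normal, then flag points whose density falls below a threshold. The sensor readings are synthetic, the three-standard-deviation cutoff is an arbitrary choice for illustration, and `log_density` and `is_anomaly` are hypothetical helper names.

```python
import math
import random
import statistics

rng = random.Random(7)
# Synthetic readings treated as "normal" behaviour (an assumption).
normal_data = [rng.gauss(50.0, 5.0) for _ in range(1000)]

# Learn the distribution of normal data (a single Gaussian here).
mean = statistics.fmean(normal_data)
std = statistics.pstdev(normal_data)


def log_density(x):
    """Log of the fitted Gaussian density at x."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std * math.sqrt(2 * math.pi))


# Threshold: the log-density of a point 3 standard deviations from the mean.
threshold = log_density(mean + 3 * std)


def is_anomaly(x):
    """Flag points that the learned distribution considers very unlikely."""
    return log_density(x) < threshold


for reading in (52.0, 47.5, 90.0):
    print(reading, "anomaly" if is_anomaly(reading) else "normal")
```

In a real system the density model would usually be richer than one Gaussian, and the threshold would be tuned on held-out data.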

That covers generative models. Next, let's explore discriminative models.

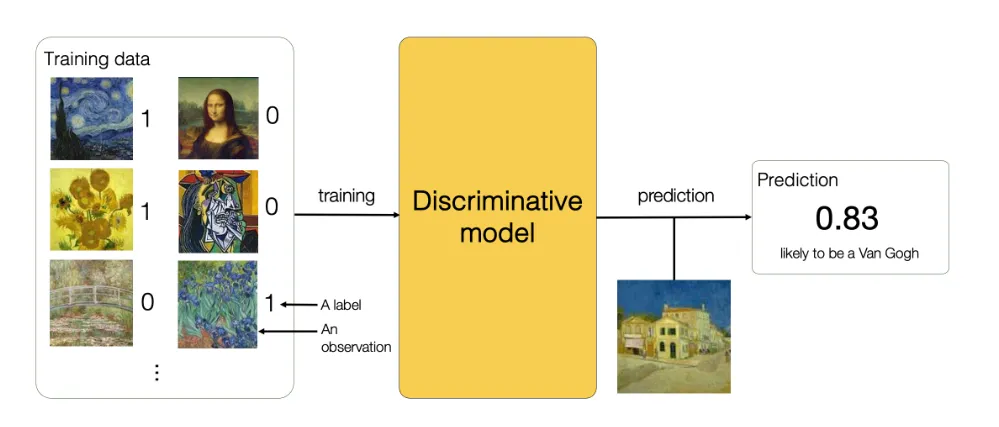

What are Discriminative Models?

Discriminative models are machine learning models that learn a direct mapping from input variables to output labels without modeling the underlying probability distribution of the inputs.

These models focus on classifying or discriminating between classes or categories based on the available input features.

Unlike generative models that model the joint distribution of inputs and outputs, discriminative models directly model the conditional probability of the output given the input.

They learn to find the decision boundary that separates different classes or categories in the input space.

Examples of Discriminative Models

Discriminative models, such as Logistic Regression, Support Vector Machines (SVM), and Neural Networks, excel in diverse tasks like classification and sequence analysis. Let’s learn more about them.

Logistic Regression: Logistic regression is a discriminative model that estimates the probability of a binary outcome from the input features. It is frequently used for classification tasks.

Support Vector Machines (SVM): SVM is another popular discriminative model that finds an optimal hyperplane to separate different classes in high-dimensional spaces. It is natively a binary classifier and is commonly extended to multi-class problems through schemes such as one-vs-rest.

Artificial Neural Networks (ANN): ANNs are flexible models composed of interconnected layers of artificial neurons. When trained to predict labels, they act as discriminative models capable of learning complex mappings between input and output variables.

Convolutional Neural Networks (CNN): CNNs are specialized deep learning models commonly used for image classification tasks. They extract hierarchical features from input images for discriminative classification.

Recurrent Neural Networks (RNN): RNNs process sequential data, such as time series or natural language. Used as discriminative models, they capture temporal dependencies and are widely applied to tasks like language translation and speech recognition.

How do Discriminative Models Work?

Discriminative models work by learning the direct mapping between input variables and output labels.

Unlike generative models that model the joint distribution of inputs and outputs, discriminative models focus on modeling the conditional probability of the output given the input.

Underlying Principles

Discriminative models learn to find the decision boundary that separates different classes or categories in the input space.

They observe the input features and their corresponding labels and use this information to estimate the probability of a specific output label given the input.

The models use various mathematical techniques and algorithms to optimize the decision boundary based on the training data. The goal of this optimization is to reduce the error between the predicted and actual outputs by adjusting the model's parameters.

Training and Inference Process

The training process of discriminative models involves feeding the model with labeled training data.

The model iteratively updates its parameters to minimize the difference between the predicted output and the true output labels. This process is often achieved through optimization algorithms like gradient descent.

Once the model is trained, it can be used for inference or prediction. During inference, the model takes unseen or test data as input and calculates the probability of each possible output label.

The label with the highest probability is then assigned as the predicted output.
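The training and inference steps above can be sketched with a one-feature logistic regression trained by plain gradient descent. This is a minimal illustration, not a production implementation: the data is synthetic, the learning rate and epoch count are arbitrary, and the names `train_logistic` and `predict` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.1, epochs=200):
    """Fit weight w and bias b by gradient descent on the mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(x, w, b):
    """Return (predicted label, probability of class 1)."""
    p1 = sigmoid(w * x + b)
    return (1 if p1 >= 0.5 else 0), p1


rng = random.Random(1)
# Synthetic labeled data: class 0 centred at -2, class 1 centred at +2.
xs = [rng.gauss(-2.0, 1.0) for _ in range(100)] + [rng.gauss(2.0, 1.0) for _ in range(100)]
ys = [0] * 100 + [1] * 100

w, b = train_logistic(xs, ys)
accuracy = sum(predict(x, w, b)[0] == y for x, y in zip(xs, ys)) / len(xs)
print(f"w={w:.2f}, b={b:.2f}, training accuracy={accuracy:.2f}")
print(predict(3.0, w, b), predict(-3.0, w, b))
```

Note that the model never learns what the inputs look like. It only learns where to draw the boundary between the two classes, which is the defining trait of a discriminative approach.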

Applications of Discriminative Models

Discriminative models have a wide range of applications across various domains. Let's explore some of the use cases where these models excel.

Natural Language Processing

Discriminative models may be used in natural language processing tasks like text classification and sentiment analysis to predict the category or sentiment of a given text. They can assist with email spam detection, article classification, and customer feedback analysis.

Computer Vision

Convolutional neural networks (CNNs), among other discriminative models, are extensively employed in computer vision for tasks such as object detection, image segmentation, and image classification. They can recognize people, identify objects in photos, and find abnormalities in medical images.

Speech Recognition

Discriminative models, particularly recurrent neural networks (RNNs), are employed in speech recognition systems. They can convert spoken words into written text, enabling voice-controlled applications and transcription services.

Financial Analysis

In finance, discriminative models are helpful for credit scoring, fraud detection, and stock market prediction. They can help identify potential defaulters, detect suspicious transactions, and make investment recommendations.

Now that we have looked at both types of models, let's compare generative and discriminative models and weigh the pros and cons of each.

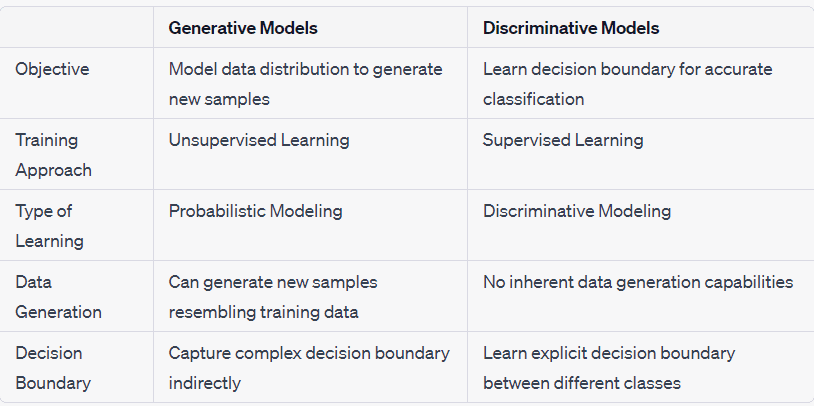

Comparison of Generative and Discriminative Models

When it comes to machine learning models, two popular approaches are generative models and discriminative models.

Let's explore the differences between these two models and see the pros and cons of each. Additionally, we'll discuss how to choose the right model for specific tasks and scenarios.

Differences between Generative and Discriminative Models

The goal of generative models is to model the joint probability distribution of input features and output labels. They can produce new samples and capture the distribution of the underlying data.

Discriminative models, on the other hand, only model the conditional probability of the output given the input. They directly model the decision boundary between classes or categories.
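In symbols, with $x$ for the input features and $y$ for the label (notation introduced here for illustration), a generative model learns the joint distribution and can recover a classifier from it through Bayes' rule:

$$
p(x, y) = p(x \mid y)\, p(y), \qquad
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}
$$

A discriminative model skips the joint distribution and parameterizes $p(y \mid x)$ directly. Binary logistic regression, for example, assumes

$$
p(y = 1 \mid x) = \sigma(w^\top x + b), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

where $w$ and $b$ are the learned weights and bias.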

Pros and Cons of Generative Models

Generative models have several advantages and disadvantages worth considering.

Pros of Generative Models

Generative models provide a complete probabilistic data model. They can generate new samples from the learned distribution.

They can estimate missing or unobserved variables using the learned joint distribution.

Generative models can handle missing or incomplete data since they model the entire distribution.

Cons of Generative Models

Learning the joint distribution can be challenging, especially in high-dimensional or complex data.

Generative models often require more training data compared to discriminative models.

They can be computationally expensive, as they involve modeling the entire joint distribution.

Pros and Cons of Discriminative Models

Discriminative models, too, have their own set of advantages and disadvantages.

Pros of Discriminative Models

Discriminative models focus directly on classification or discrimination between different classes or categories.

They can be simpler and computationally more efficient compared to generative models.

Discriminative models are more flexible in terms of handling complex high-dimensional data.

Cons of Discriminative Models

Discriminative models do not model the joint distribution or provide insights into the underlying data distribution.

They can be sensitive to outliers and noisy data, affecting the decision boundary and classification accuracy.

Discriminative models may perform poorly when training data is inadequate or imbalanced.

Choosing the Right Model

Choosing the right model depends on the specific task and the available data. Here are some guidelines to consider:

When precise classification or differentiation between distinct classes is the primary goal, and a sufficient amount of labeled data is accessible, discriminative models like logistic regression, support vector machines, or neural networks may be suitable options.

If understanding the underlying probability distribution and generating new samples is crucial, generative models like Gaussian mixture models or hidden Markov models can be more suitable.

When dealing with missing data or the need to estimate unobserved variables, generative models offer an advantage.

For tasks like image classification, computer vision, or natural language processing, where accurate classification is the primary objective, discriminative models like convolutional neural networks or recurrent neural networks are often preferred.

Conclusion

This overview explored the fundamental differences between generative and discriminative approaches in machine learning.

Generative models capture underlying distributions to generate new data samples, while discriminative models directly map inputs to outputs for classification tasks.

Both have valuable applications, from generating images and text to detecting anomalies and diagnosing diseases.

The right choice depends on your goals and available data. Whether your interests involve vision, language, finance, or other domains, applying these techniques can help advance your work.

With a solid understanding of these powerful modeling strategies, you can select the best approach to solve your unique machine-learning challenges.

Frequently Asked Questions (FAQs)

Is GPT generative or discriminative?

GPT (Generative Pre-trained Transformer) is primarily a generative model that generates text based on patterns it has learned from vast amounts of data. It predicts the next word in a sequence, producing coherent and contextually relevant text, making it an exemplar of generative models.
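The next-word idea can be illustrated with a toy bigram model. This is a deliberate simplification and not how GPT works internally: GPT uses a transformer network conditioned on long contexts, while this sketch only looks at the previous word. The corpus and the names `train_bigrams` and `generate` are made up for the example.

```python
import random
from collections import defaultdict

# Tiny made-up corpus; a real language model trains on vastly more text.
CORPUS = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."


def train_bigrams(text):
    """Record, for each word, the words observed immediately after it."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, rng):
    """Build a sequence by repeatedly sampling a next word given the last one."""
    words = [start]
    for _ in range(length - 1):
        options = followers.get(words[-1])
        if not options:  # no observed continuation: stop early
            break
        words.append(rng.choice(options))
    return " ".join(words)


followers = train_bigrams(CORPUS)
print(generate(followers, "the", 8, random.Random(3)))
```

Because frequent continuations appear more often in each list, sampling uniformly from the list reproduces the word-pair frequencies seen in the corpus.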

What are the key differences between generative and discriminative models?

Generative AI models capture the joint probability distribution of input features and labels, enabling the generation of new data. In contrast, discriminative models focus solely on the conditional probability of the labels given the input features, emphasizing classification tasks. This contrast underlies their diverse applications and training methodologies.

In what ways do generative and discriminative models complement each other in machine learning tasks?

Generative AI models can be used to generate synthetic data that helps augment the training set, which in turn improves the performance of discriminative models. Furthermore, the outputs of generative AI models can serve as inputs to discriminative models, enabling a symbiotic relationship between the two models.

What are some of the recent advancements in the field of generative and discriminative models?

Recent advancements include the development of more sophisticated generative adversarial networks (GANs) that produce higher quality outputs, as well as integrating deep learning techniques in discriminative models, leading to improved accuracy and robustness in various classification tasks.