What is Deep Learning?

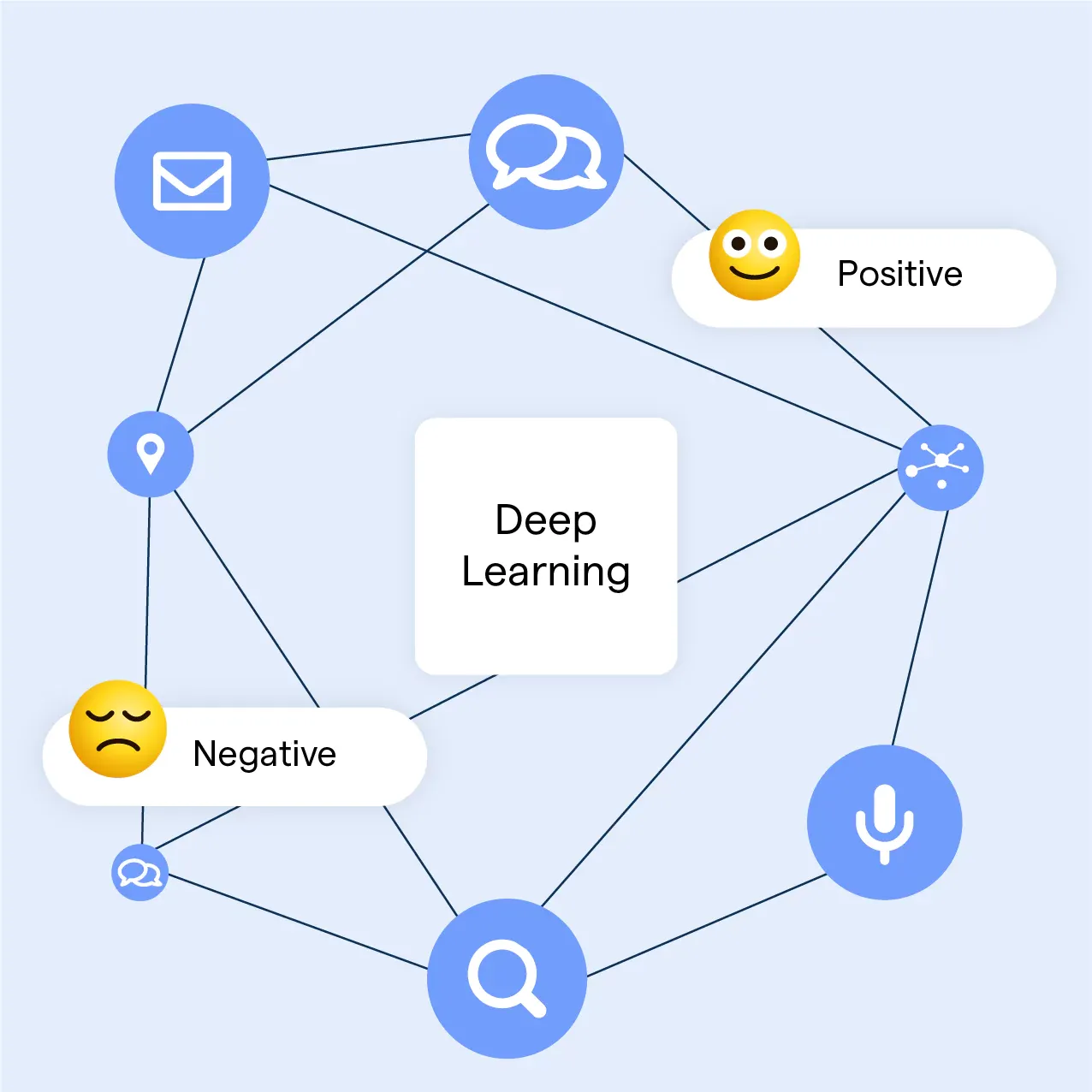

Deep learning is a subfield of machine learning that focuses on artificial neural networks with multiple layers, known as deep neural networks.

These networks can learn complex patterns and representations from large amounts of data, enabling them to perform tasks such as image recognition, natural language processing, and game playing.

Deep learning has gained popularity due to its ability to achieve high accuracy in tasks that were previously considered challenging for machines.

It has been widely applied across various industries, including healthcare, finance, and autonomous vehicles, driving innovation and improving efficiency.

Applications of Deep Learning

Deep Learning is like a Swiss Army knife, with a wide range of applications, including:

- Image and speech recognition

- Natural language processing and translation

- Recommendation systems

- Medical diagnosis and drug discovery

- Fraud detection and cybersecurity

- Robotics and autonomous vehicles

Why Do We Need Deep Learning?

Enhanced Pattern Recognition

Deep learning algorithms excel at recognizing patterns in data. From detecting faces in images to understanding speech, these algorithms identify underlying structures and make sense of complex datasets.

Handling Unstructured Data

Traditional algorithms often struggle with unstructured data like text, images, or audio. Deep learning models, however, can effectively process and analyze this type of data, making them invaluable in numerous applications.

Improved Prediction and Decision-Making

By leveraging large datasets, deep learning models are capable of making highly accurate predictions. This ability is crucial in areas like financial forecasting, healthcare diagnostics, and supply chain management.

Scalability with Data Volume

Deep learning models tend to become more effective as the volume of data increases. Where many traditional algorithms plateau once they have seen enough examples, deep networks with sufficient capacity often keep improving, which makes them well suited to big data applications.

Customization and Personalization

Deep learning supports customization and personalization by learning from user data. This is essential for creating tailored experiences, such as personalized content recommendations in streaming services or targeted advertising.

How Does Deep Learning Work?

Artificial Neural Networks

At the heart of deep learning are artificial neural networks (ANNs), which are loosely inspired by the human brain's structure and function. ANNs are made up of interconnected nodes or neurons that process and transmit information. Each neuron receives input from other neurons, computes a weighted sum of those inputs plus a bias, applies a mathematical function (called an activation function) to the result, and passes the output to the next layer of neurons.
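To make the idea of a neuron concrete, here is a minimal sketch in plain Python. It assumes a sigmoid activation, and the input values, weights, and bias are made up purely for illustration; a real network would learn its weights from data.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # z = 0.5 - 0.5 + 0.0 = 0.0, so the sigmoid returns 0.5
```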

Layers in Deep Learning

Deep learning models are like onions - they have layers! These layers of neurons can be grouped into three main types:

- Input Layer: This is where the model receives the raw data, such as images, text, or sound.

- Hidden Layers: These layers perform complex transformations on the input data to extract meaningful features and patterns.

- Output Layer: This layer produces the final result, such as a classification or a prediction.
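The three layer types above can be sketched as a tiny forward pass. This is an illustrative toy, not a trained model: the layer sizes, the weights, and the helper names `dense`, `relu`, and `identity` are all assumptions made for this example.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

# Input layer: the raw feature vector (3 features).
x = [1.0, 2.0, 3.0]

# Hidden layer: 2 neurons with ReLU (weights are hand-picked, not learned).
hidden = dense(x, [[0.5, 0.0, -0.5], [1.0, 1.0, 1.0]], [0.0, -1.0], relu)

# Output layer: 1 neuron producing the final prediction.
y = dense(hidden, [[2.0, 0.5]], [0.1], identity)

print(hidden)  # [0.0, 5.0]
print(y)       # [2.6]
```

Stacking more `dense` calls between the input and the output is what makes a network "deep".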

Activation Functions

Activation functions are like the bouncers of the neural network world. They're mathematical functions that determine the output of a neuron based on its input. They introduce non-linearity into the model, allowing it to learn complex patterns and relationships in the data. Common activation functions include the sigmoid and ReLU (Rectified Linear Unit) functions, along with softmax, which is typically applied at the output layer to turn raw scores into probabilities.
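For reference, the three activation functions named here can be written in a few lines of plain Python. This is a sketch for intuition; frameworks ship their own optimized versions. The max-subtraction in `softmax` is a common numerical-stability trick, added here as a design choice rather than something the definition requires.

```python
import math

def sigmoid(z):
    """Maps any real number to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive values through and clips negatives to zero."""
    return max(0.0, z)

def softmax(scores):
    """Turns a list of scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))         # 0.5
print(relu(-3.0))           # 0.0
print(softmax([0.0, 0.0]))  # [0.5, 0.5]
```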

Forward and Backward Propagation

Deep learning models learn by adjusting the weights of the connections between neurons. This is done through a process called forward and backward propagation. During forward propagation, the input data is passed through the network to produce an output. The model then compares its output to the true target value, calculating an error or loss. Backward propagation (backpropagation) then works backward through the network, computing how much each weight contributed to that error, that is, the gradient of the loss with respect to each weight. An optimization technique called gradient descent uses these gradients to nudge the weights in the direction that reduces the error.
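The training loop can be sketched on the simplest possible "network": one neuron with one weight, one bias, and no activation. With no hidden layers, the backward pass collapses to a single chain-rule step, but the forward, loss, gradient, update rhythm is the same one deep networks follow. The toy data, learning rate, and step count are assumptions chosen for illustration.

```python
# Toy data generated from y = 2x + 1 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0        # untrained starting weights
learning_rate = 0.05

for step in range(2000):
    # Forward propagation: predictions and mean squared error.
    preds = [w * x + b for x in xs]
    errors = [p - y for p, y in zip(preds, ys)]
    loss = sum(e * e for e in errors) / len(xs)

    # Backward propagation: gradient of the loss w.r.t. each parameter.
    grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = sum(2 * e for e in errors) / len(xs)

    # Gradient descent: step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # close to the true values 2 and 1
```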

Types of Deep Learning Models

Supervised Learning

In supervised learning, the model is like a student with a knowledgeable teacher. It's trained on a labeled dataset, where each input is associated with a corresponding target output. The model learns to map inputs to outputs by minimizing the error between its predictions and the true target values. Examples of supervised learning tasks include image classification, speech recognition, and regression.
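As a rough sketch of the supervised setup, the snippet below trains a one-feature logistic-regression classifier on a tiny labeled dataset. The data, learning rate, and number of iterations are invented for the example; the point is only that every input arrives paired with its target label.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Labeled dataset (made up): each input comes with its target class.
inputs = [-2.0, -1.0, 1.0, 2.0]
labels = [0, 0, 1, 1]

w, b = 0.0, 0.0
learning_rate = 0.5

for _ in range(200):
    probs = [sigmoid(w * x + b) for x in inputs]
    # For cross-entropy loss, the gradient per example is (p - y).
    grad_w = sum((p - y) * x for p, y, x in zip(probs, labels, inputs)) / len(inputs)
    grad_b = sum(p - y for p, y in zip(probs, labels)) / len(inputs)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in inputs]
print(predictions)  # [0, 0, 1, 1], matching the labels on this toy set
```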

Unsupervised Learning

Unsupervised learning is like a curious explorer, venturing into the unknown. The model is trained on an unlabeled dataset, where the goal is to discover underlying patterns or structures in the data. The model learns to identify similarities and differences between data points, often through techniques such as clustering or dimensionality reduction. Examples of unsupervised learning tasks include anomaly detection, topic modeling, and clustering-based image segmentation.
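Clustering is the easiest of these techniques to show in code. The sketch below uses k-means, a classic (non-deep) clustering algorithm, swapped in here because it fits in a few lines. The points and starting centroids are assumptions for the example. Notice that no labels appear anywhere.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points; `centroids` holds the starting guesses."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[dists.index(min(dists))].append(p)

        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append((
                    sum(p[0] for p in cluster) / len(cluster),
                    sum(p[1] for p in cluster) / len(cluster),
                ))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        centroids = new_centroids
    return centroids, clusters

# Unlabeled data: two visually obvious groups (made up for illustration).
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(clusters[0])  # the three points near (1, 1)
print(clusters[1])  # the three points near (8, 8)
```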

Reinforcement Learning

In reinforcement learning, the model is like a video game character, learning to make decisions by interacting with an environment. The model receives feedback in the form of rewards or penalties, and its goal is to maximize the cumulative reward over time. This type of learning is particularly useful for training agents in tasks such as game playing, robotics, and resource allocation.
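Here is a small sketch of that reward loop using tabular Q-learning, a classic reinforcement learning algorithm that stores its estimates in a table instead of a neural network. Everything about the setup is an assumption for illustration: a five-cell corridor with the reward at the right end, a purely random exploration policy, and hand-picked values for the learning rate and discount factor.

```python
import random

N_STATES = 5              # cells 0..4 on a line; cell 4 is the goal
ACTIONS = (-1, +1)        # move left or move right
ALPHA, GAMMA = 0.5, 0.9   # learning rate and discount factor (illustrative)

def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

rng = random.Random(0)    # fixed seed so the run is repeatable
q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]

for episode in range(500):
    state, done = 0, False
    while not done:
        a = rng.randrange(2)  # explore uniformly at random
        next_state, reward, done = step(state, ACTIONS[a])
        # Q-learning update: move the estimate toward reward + discounted future value.
        target = reward + (0.0 if done else GAMMA * max(q[next_state]))
        q[state][a] += ALPHA * (target - q[state][a])
        state = next_state

# Greedy policy read off the learned table (the goal cell needs no action).
policy = ["left" if qs[0] > qs[1] else "right" for qs in q[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

Deep reinforcement learning replaces the table `q` with a neural network so the same idea scales to environments with far too many states to enumerate.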

Transfer Learning

Transfer learning is like the ultimate life hack for deep learning. It allows a model trained on one task to be fine-tuned for a different, but related task. This can significantly reduce the amount of training data and computational resources required for the new task. Transfer learning is commonly used in tasks such as image recognition, where a model trained on a large dataset (e.g., ImageNet) can be fine-tuned to recognize specific objects or scenes.

Common Deep Learning Architectures

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are like the superstars of the deep learning world, designed for processing grid-like data, such as images. CNNs use convolutional layers to scan the input data for local patterns, such as edges, textures, and shapes. These patterns are then combined in higher layers to form more complex features, such as objects and scenes. CNNs have been highly successful in tasks such as image classification, object detection, and segmentation.
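To see what "scanning for local patterns" means, here is a minimal sketch of the sliding-window operation inside a convolutional layer, written without padding or stride options. The image and the vertical-edge kernel are hand-made for this example; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: the edge lights up in the middle column
```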

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are like the time travelers of deep learning, designed for processing sequences of data, such as time series or natural language. RNNs have connections that loop back on themselves, allowing them to maintain a hidden state that can capture information from previous time steps. This makes them well-suited for tasks such as language modeling, text generation, and speech recognition. However, RNNs can suffer from issues such as vanishing or exploding gradients, which can make them difficult to train.
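The looping connection can be sketched with a single recurrent unit. This is a simplified illustration: real RNN layers use vectors and weight matrices, and the weights below are hand-picked rather than learned. The function name `rnn_forward` and its parameters are hypothetical.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a one-unit RNN over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Only the first time step carries a signal; later inputs are zero.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(states)
```

The first input still echoes in the later hidden states, but more faintly at each step. That fading is a small-scale picture of why plain RNNs struggle to carry information across long sequences.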

Long Short-Term Memory (LSTM) Networks

Long Short-Term Memory (LSTM) networks are like the upgraded version of RNNs, designed to mitigate the vanishing gradient problem. LSTMs use a special gating mechanism that allows them to learn long-range dependencies and selectively remember or forget information over time. This makes them particularly effective for tasks such as machine translation, sentiment analysis, and video analysis.
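The gating mechanism can be sketched for a single LSTM unit using the standard forget, input, candidate, and output gates. The parameter values below are hand-picked assumptions that force the cell to "remember": the forget gate is held almost fully open and the input gate almost fully shut. A trained LSTM would learn when to open and close its gates.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM. `params` maps gate name -> (w_x, w_h, b)."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much old memory to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much memory to expose
    c = f * c_prev + i * g             # updated cell state (the long-term memory)
    h = o * math.tanh(c)               # updated hidden state
    return h, c

# Hypothetical parameters: keep memory (forget ~1), write nothing (input ~0).
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

h, c = 0.0, 1.0  # start with a value already stored in the cell
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)

print(c)  # still close to 1.0 after 50 steps
```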

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are like the artists of the deep learning world, able to generate new data samples that resemble a given dataset. GANs consist of two neural networks, a generator and a discriminator, that are trained together in a process called adversarial training. The generator learns to create realistic data samples, while the discriminator learns to distinguish between real and generated samples. GANs have been used to generate realistic images, music, and even 3D models.

Deep Learning Frameworks

TensorFlow

TensorFlow is like the popular kid in the deep learning framework world, developed by Google. It provides a flexible and efficient platform for building and deploying machine learning models, with support for a wide range of neural network architectures. TensorFlow also offers tools for visualization, debugging, and distributed training.

PyTorch

PyTorch is like the cool, artsy friend in the deep learning framework world, developed by Facebook's AI research lab (now part of Meta). It is known for its dynamic computational graph and strong support for research and experimentation. PyTorch provides an intuitive interface for building and training neural networks, as well as a large ecosystem of libraries and tools.

Keras

Keras is like the friendly, easy-going neighbor in the deep learning library world. It provides a simple and user-friendly interface for building and training neural networks. Keras began as a standalone library that could run on backends such as TensorFlow, Theano, or Microsoft Cognitive Toolkit. It is now bundled with TensorFlow, and Keras 3 can also run on JAX and PyTorch. Keras is popular among beginners and researchers for its ease of use and flexibility.

Caffe

Caffe is like the speed demon of the deep learning framework world, developed by the Berkeley Vision and Learning Center. It is particularly popular in the computer vision community for its speed and efficiency in training and deploying convolutional neural networks. Caffe provides a wide range of pre-trained models and supports fine-tuning for specific tasks.

Deep Learning Hardware

Graphics Processing Units (GPUs)

Graphics Processing Units (GPUs) are like the workhorses of deep learning, specialized hardware devices designed for rendering graphics and performing parallel computations. GPUs have become essential for the large-scale matrix operations required in training neural networks. NVIDIA is the leading provider of GPUs for deep learning, with their CUDA platform and cuDNN library providing optimized support for popular frameworks such as TensorFlow and PyTorch.

Tensor Processing Units (TPUs)

Tensor Processing Units (TPUs) are like the custom-tailored suit of deep learning hardware, designed by Google specifically for accelerating deep learning tasks. TPUs are optimized for the low-precision matrix arithmetic that dominates neural network training and inference, and they can outperform GPUs on some workloads. TPUs are available on Google Cloud Platform and can be used with TensorFlow, as well as with JAX and PyTorch.

Cloud-based Deep Learning Platforms

Many cloud providers offer deep learning platforms that provide access to powerful hardware and software resources for training and deploying neural networks. These platforms can save time and reduce the complexity of setting up and managing your own deep learning infrastructure. Popular deep learning platforms include Google Cloud's Vertex AI (the successor to AI Platform), Amazon SageMaker, and Microsoft Azure Machine Learning.

Deep Learning Challenges

Handling Large Datasets

Deep learning often requires massive datasets for training, which poses challenges in terms of storage, processing power, and memory. Additionally, curating and labeling such datasets can be time-consuming and costly. For smaller organizations without substantial resources, this can create barriers to implementing effective deep learning systems.

Requiring High Computational Power

The computational power required for deep learning is immense. Training deep neural networks involves processing a huge number of parameters, which can take a long time even on powerful machines. This not only increases the costs associated with hardware but also the energy consumption, making it less environmentally friendly.

Lack of Interpretability

It’s often difficult to understand how deep learning models arrive at specific decisions or predictions. This lack of interpretability, or the “black box” nature of deep learning, is a significant challenge, especially in sectors where explainability is crucial, like healthcare or finance. Stakeholders may be hesitant to trust or adopt models they do not understand.

Handling Unstructured Data

Although deep learning handles unstructured data better than most traditional approaches, working with it is still demanding. Unstructured data, like text or images, doesn't fit neatly into traditional database structures. Before a model can learn from it, the data usually has to be cleaned, labeled, and converted into numeric form (for example, tokenized text or normalized pixel arrays), and that preparation can be complex and time-consuming.

Vulnerability to Adversarial Attacks

Deep learning models are susceptible to adversarial attacks where the input data is perturbed slightly to produce incorrect outputs. Such vulnerabilities can have serious consequences, particularly in security-sensitive applications. Researchers are continuously working on developing techniques to make deep learning models more robust against these kinds of attacks.
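A well-known example of such an attack is the fast gradient sign method (FGSM), sketched below against a tiny linear classifier. The weights and the input are hypothetical stand-ins for a trained model and a real sample. In this three-feature toy the perturbation size `epsilon` has to be fairly large to flip the prediction; with image-sized inputs, far smaller changes per feature can be enough.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights standing in for a trained logistic-regression model.
weights = [2.0, -3.0, 1.0]
bias = 0.0

def predict(x):
    """Probability that x belongs to class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, label, epsilon):
    """Shift every feature by +/- epsilon in the direction that raises the loss."""
    p = predict(x)
    # For cross-entropy loss, d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

x = [0.3, 0.1, 0.2]
adversarial = fgsm(x, label=1, epsilon=0.2)

print(predict(x) > 0.5)            # True: the original is classified as class 1
print(predict(adversarial) > 0.5)  # False: the perturbed copy flips to class 0
```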

Frequently Asked Questions

What is Deep Learning?

Deep Learning is a subset of machine learning that utilizes neural networks with multiple layers to learn complex patterns and make decisions from data.

What are Neural Networks?

Neural Networks are computational models inspired by the human brain. They consist of layers of neurons that can learn to recognize patterns in data.

What is the difference between Deep Learning and Machine Learning?

Machine Learning is a broader concept for algorithms that learn from data. Deep Learning is a subset of Machine Learning using deep neural networks.

What is Backpropagation in Deep Learning?

Backpropagation is an algorithm used in training neural networks. It calculates the gradient of the loss function with respect to each weight, and an optimizer such as gradient descent then uses those gradients to adjust the weights for better predictions.

How is Deep Learning used in real-world applications?

Deep Learning is used in various applications like image and speech recognition, natural language processing, autonomous vehicles, and medical diagnosis among others.