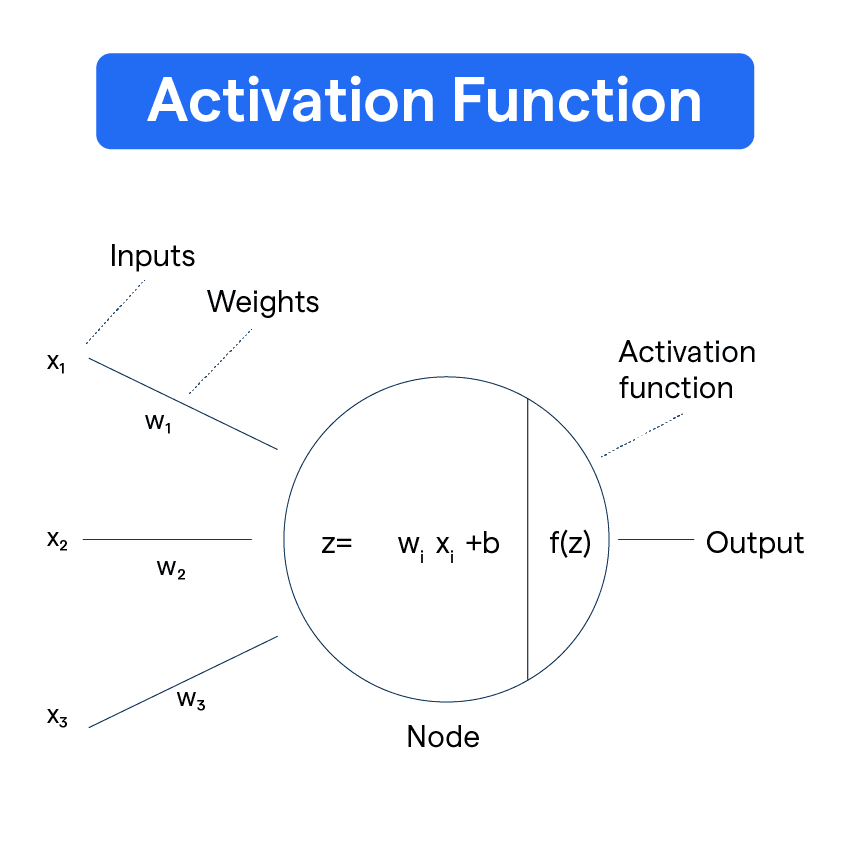

What is an Activation Function?

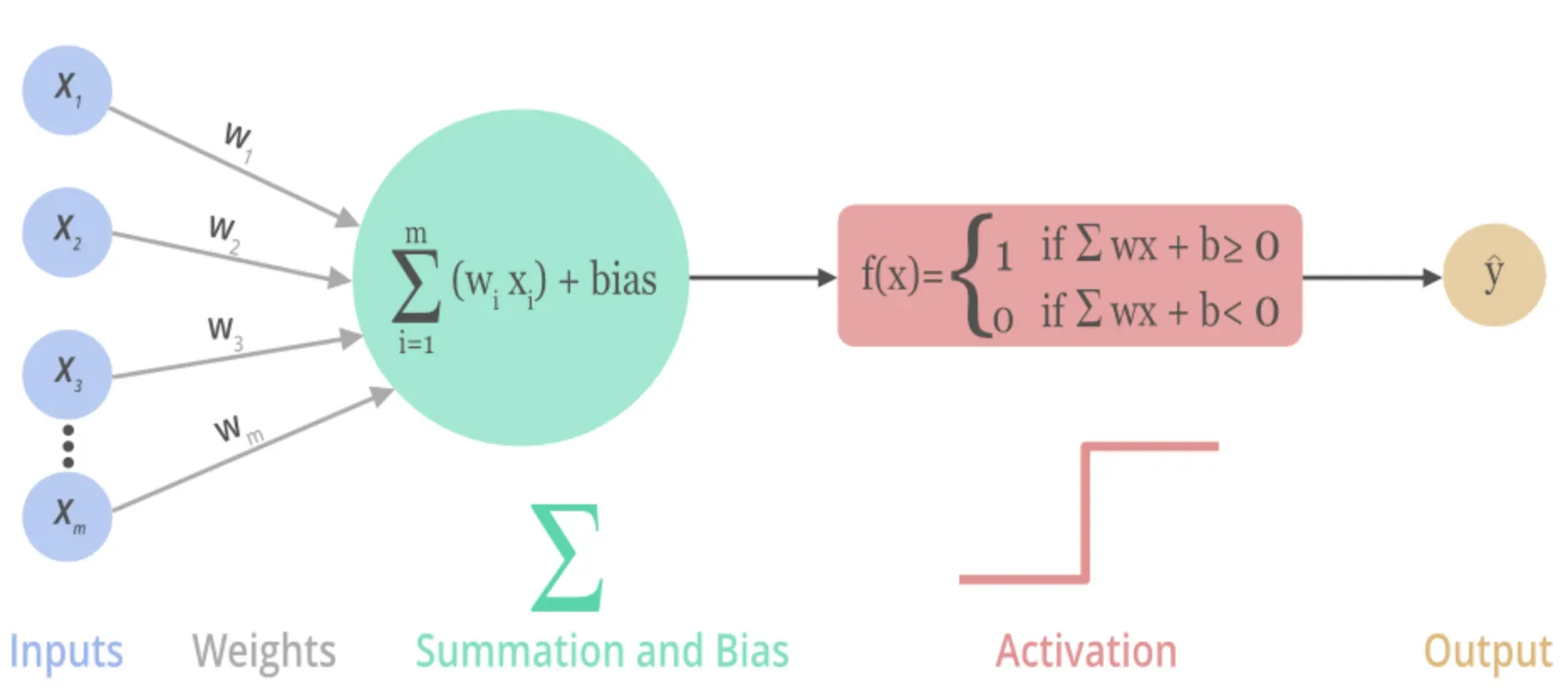

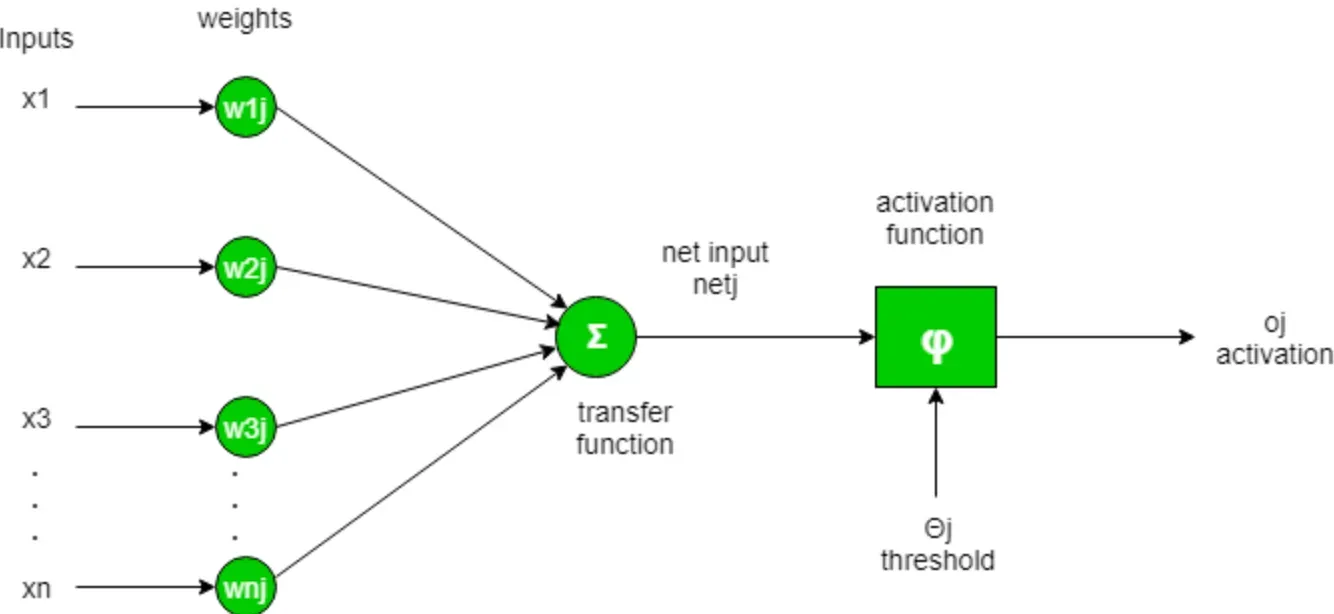

Activation functions are a core building block of neural networks. Each one transforms a neuron's weighted input into an output that is passed on to the next layer. They are a crucial part of the learning process, because they turn a layer's raw numeric results into signals the rest of the network can act on.

In essence, an activation function is a mathematical function that determines the output of an artificial neuron, or more generally of any node in a neural network, given its input.

Why is it Used?

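Activation functions are used to introduce non-linearity into the output of a neuron. Without them, every layer would compute only a linear transformation, and a stack of linear layers collapses into a single linear one, so the network could learn only linear relationships between its features and its outputs.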
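To make the point concrete, here is a minimal NumPy sketch. The weight matrices `W1` and `W2` and the input `x` are made-up values chosen only for illustration; they do not come from any real model.

```python
import numpy as np

# Hypothetical weights for two tiny layers, and one input vector.
W1 = np.array([[1.0, -2.0],
               [0.5,  1.0]])
W2 = np.array([[1.0, 1.0]])
x = np.array([1.0, 1.0])

# Two layers with no activation function in between...
stacked = W2 @ (W1 @ x)
# ...give the same result as one linear layer with weights W2 @ W1.
collapsed = (W2 @ W1) @ x
linear_only_matches = np.allclose(stacked, collapsed)


def relu(z):
    """ReLU non-linearity: keeps positive values, zeroes out the rest."""
    return np.maximum(0.0, z)


# With a non-linearity between the layers, the output is no longer
# the one produced by the single collapsed matrix.
with_activation = W2 @ relu(W1 @ x)

print(stacked, collapsed, with_activation)
```

Here the first layer produces one negative value, which ReLU sets to zero, so the second layer sees a different signal than it would in the purely linear stack.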

Contextual Importance

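Adding activation functions to a neural network is what lets it model complex patterns. Because these functions are differentiable (at least almost everywhere), gradients can flow back through them during training and steer the weight updates that make the machine 'learn.'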

Common Types of Activation Functions

There is a variety of activation functions at your disposal. Each has unique traits that make it suited to specific scenarios.

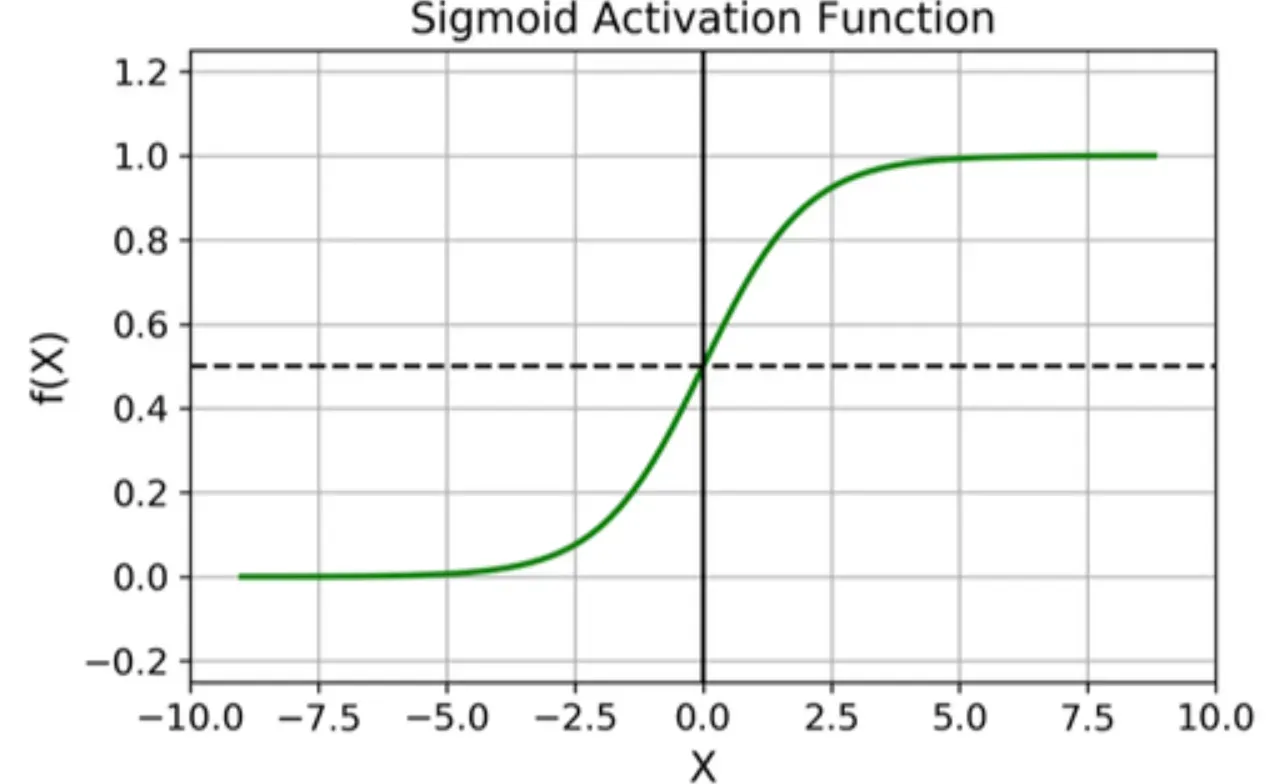

Sigmoid Function

This is an S-shaped function that maps any real-valued number to a value between 0 and 1. It is smooth and easy to differentiate, and because its output can be read as a probability, it is a common choice for the output layer in binary classification problems.
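As a rough sketch, the sigmoid can be written as sigmoid(x) = 1 / (1 + e^(-x)), and its derivative as sigmoid(x) * (1 - sigmoid(x)). The function names below are illustrative, and this naive form is not hardened against overflow for very large negative inputs.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid: maps any real number into the open interval (0, 1)."""
    x = np.asarray(x, dtype=float)
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_derivative(x):
    """Derivative of the sigmoid, expressed through the sigmoid itself."""
    s = sigmoid(x)
    return s * (1.0 - s)


print(sigmoid(0.0))             # 0.5
print(sigmoid_derivative(0.0))  # 0.25
print(sigmoid([-5.0, 5.0]))     # values close to 0 and close to 1
```

The derivative is largest at 0 and shrinks toward zero for large positive or negative inputs, which is why sigmoid units can learn slowly once they saturate.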

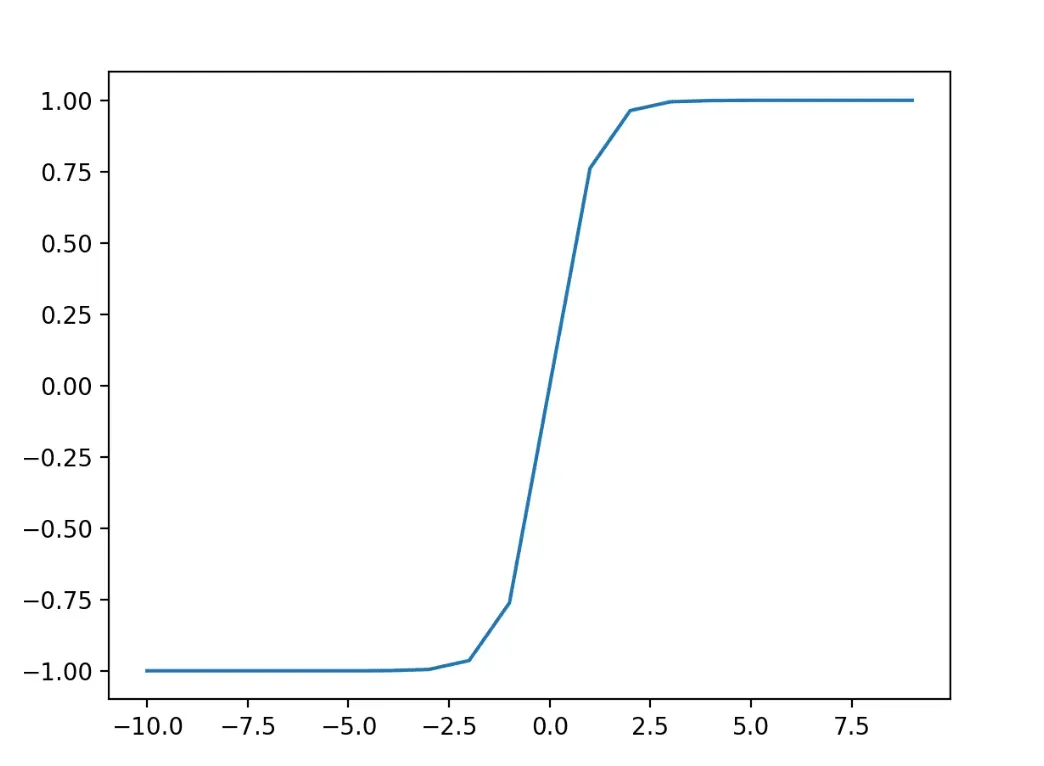

Hyperbolic Tangent Function (Tanh)

The Tanh function is similar in shape to the sigmoid function, but it maps real-valued numbers to values between -1 and 1. Its output is zero-centered, which tends to make learning easier for the layers that follow.
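A short sketch of Tanh and its derivative, using NumPy's built-in `np.tanh`. It also checks the identity tanh(x) = 2 * sigmoid(2x) - 1, which shows that Tanh is a rescaled and shifted sigmoid. The helper names are illustrative.

```python
import numpy as np


def tanh(x):
    """Hyperbolic tangent: maps any real number into (-1, 1)."""
    return np.tanh(x)


def tanh_derivative(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - np.tanh(x) ** 2


def sigmoid(x):
    """Logistic sigmoid, included only to show its relation to tanh."""
    return 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))


xs = np.linspace(-3.0, 3.0, 7)

# Tanh is a rescaled, shifted sigmoid.
same_shape = np.allclose(tanh(xs), 2.0 * sigmoid(2.0 * xs) - 1.0)

# Zero-centered: symmetric inputs give outputs that cancel out.
zero_centered = np.allclose(tanh(xs) + tanh(-xs), 0.0)

print(same_shape, zero_centered)
```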

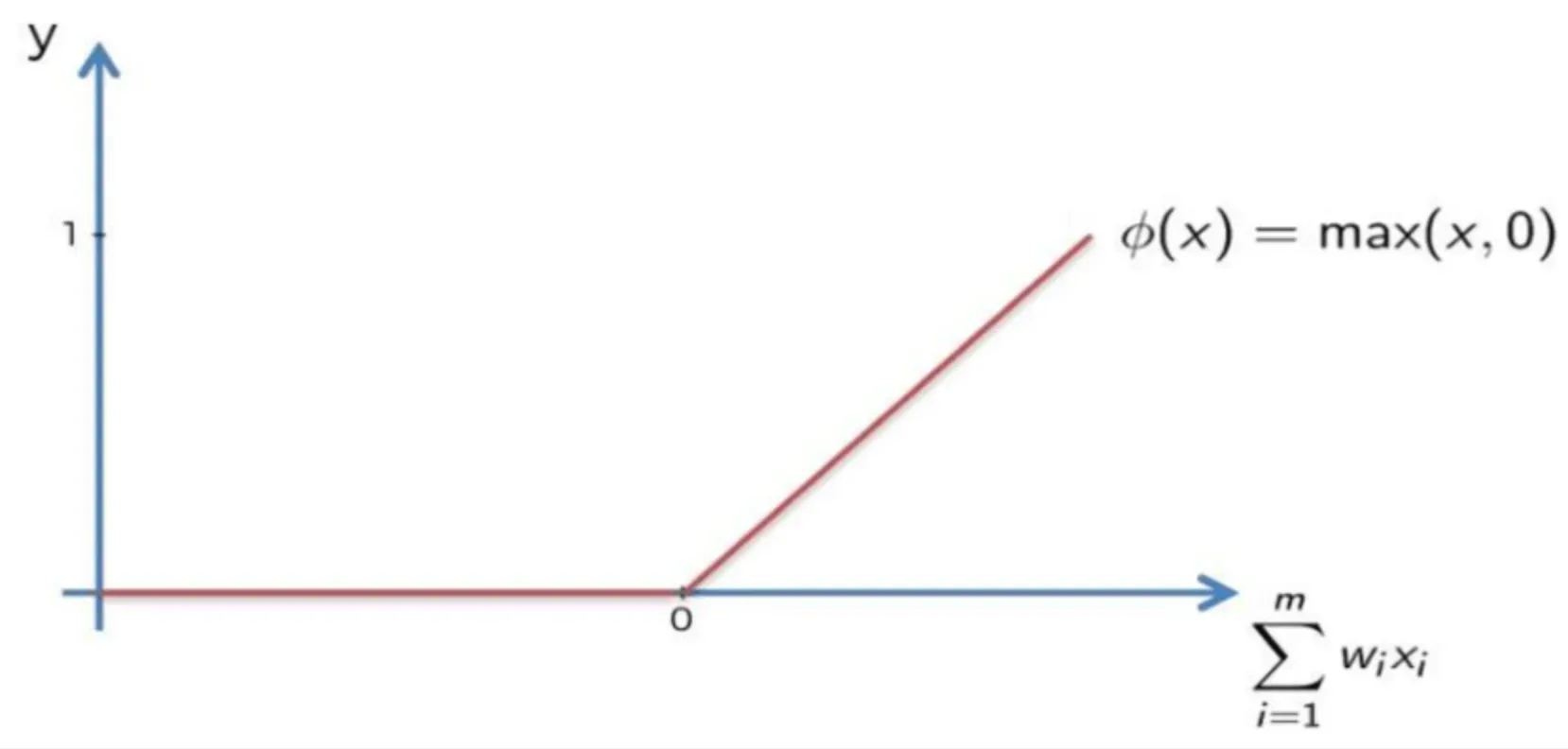

Rectified Linear Unit (ReLU)

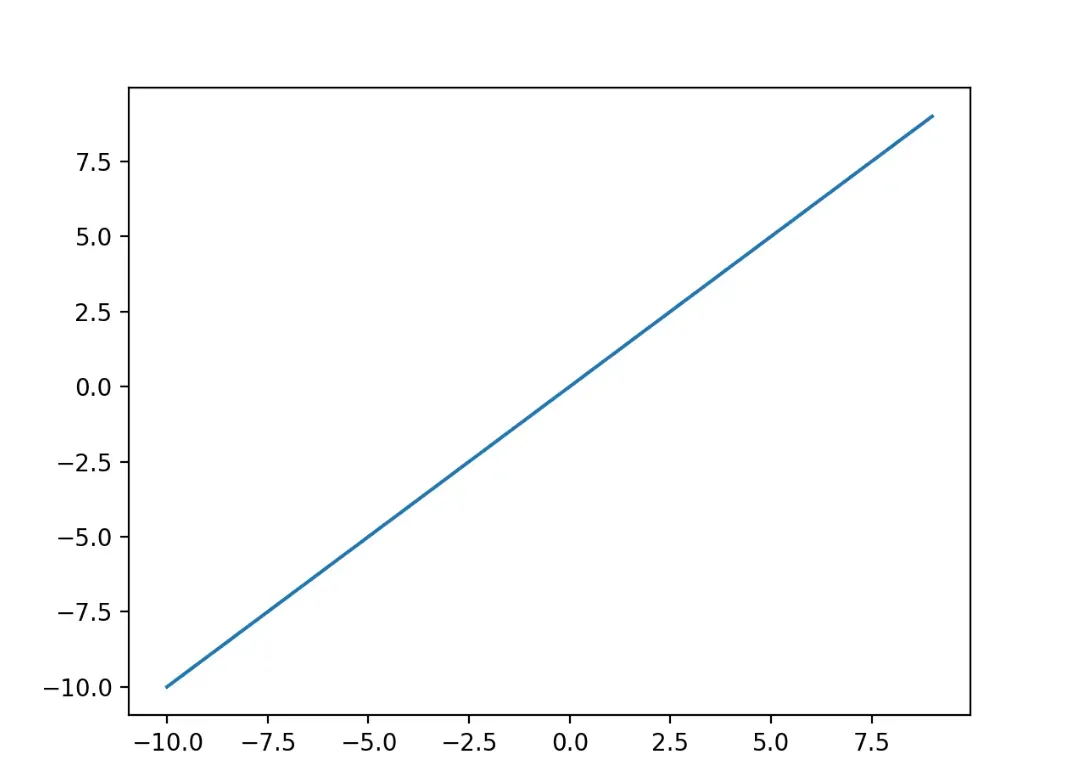

ReLU is one of the most widely used activation functions due to its simplicity and computational efficiency. It outputs x if x is positive and 0 otherwise.
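In code, ReLU amounts to max(0, x). The sketch below also includes a gradient helper. The derivative is not defined at exactly 0, so returning 0 there is a convention assumed here, not a rule.

```python
import numpy as np


def relu(x):
    """ReLU: returns x where x is positive and 0 elsewhere."""
    return np.maximum(0.0, x)


def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise.

    The value at x == 0 is a convention (0 is assumed here).
    """
    return (np.asarray(x) > 0).astype(float)


inputs = np.array([-2.0, 0.0, 3.0])
print(relu(inputs))       # [0. 0. 3.]
print(relu_grad(inputs))  # [0. 0. 1.]
```

Because the function involves only a comparison, it is cheap to compute compared with functions that need an exponential.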

Leaky ReLU

Just like ReLU, this function outputs x if x is positive. But, unlike ReLU, if x is negative it yields a small negative value (x scaled by a small slope such as 0.01) instead of 0. This keeps the gradient non-zero for negative inputs.
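A minimal sketch of Leaky ReLU follows. The slope parameter is called `alpha` here and defaults to 0.01. Both the name and the default are assumptions for illustration, since the right value depends on the model.

```python
import numpy as np


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for positive inputs, alpha * x for the rest."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, alpha * x)


def leaky_relu_grad(x, alpha=0.01):
    """Gradient of Leaky ReLU: 1 for positive inputs, alpha otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, alpha)


inputs = np.array([-2.0, 0.0, 3.0])
print(leaky_relu(inputs))
print(leaky_relu_grad(inputs))
```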

Selecting the Right Activation Function

Activation functions aren't one-size-fits-all. The choice of an activation function depends on several factors, including the complexity of the task at hand and the nature of input and output data.

Complexity of the Task

More complex tasks may call for different activation functions in different parts of the network, for example one function in the hidden layers and another in the output layer.

Nature of Input and Output Data

The type of input and output data also dictates the choice of activation function. For example, the sigmoid function is a popular choice for the output layer in binary classification tasks, because its output lies between 0 and 1.
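The sketch below illustrates the binary classification case. The `logits` array holds made-up raw outputs from a hypothetical final layer, and the 0.5 decision threshold is a common default assumed here, not a requirement.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid: squashes raw scores into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(z, dtype=float)))


# Hypothetical raw scores produced by the last layer for three samples.
logits = np.array([-2.0, 0.3, 4.0])

# The sigmoid turns each score into a value that can be read as P(class = 1).
probs = sigmoid(logits)

# Assumed decision rule: predict class 1 when the probability is at least 0.5.
labels = (probs >= 0.5).astype(int)

print(probs)
print(labels)  # [0 1 1]
```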

Computation Restrictions

Sometimes, the computation resources available might dictate the choice of an activation function. Simpler functions are often less computationally taxing and may be a good fit for systems with limited resources.

Implementing Activation Functions in Machine Learning

Activation functions form a major part of designing and implementing successful machine learning models.

In Deep Learning Models

Deep learning neural networks apply an activation function in each layer: in the hidden layers to transform the signal passed from one layer to the next, and in the output layer to put the final result into the form the task needs.

In Non-Linear Data Point Models

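When working with non-linear data points, activation functions are what let a neural network shape a non-linear output. Other algorithms, such as decision trees, can also handle non-linear data, but they do so through their own structure and not through activation functions.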

Other Machine Learning Models

Broadly, the same idea appears outside deep networks as well. Logistic regression, for example, passes a linear score through the sigmoid function to turn it into a probability.

Optimizing Activation Functions

You can get more out of activation functions in your machine learning models with these strategies:

- Experiment with different activation functions to see which works best with your dataset

- Monitor the function's impact on processing speed and resources

- Revisit your choice of functions as research and tooling advance
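One way to run the kind of experiment described in the first strategy is sketched below: the same small network is trained several times on a toy XOR dataset, changing only the hidden-layer activation. Everything here is an assumption made for illustration, including the names `ACTIVATIONS` and `final_loss`, the network size, the learning rate, and the number of steps. On a real dataset you would compare on held-out validation data, not on the training loss.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Each entry pairs an activation with its derivative w.r.t. the pre-activation.
ACTIVATIONS = {
    "sigmoid": (_sigmoid, lambda z: _sigmoid(z) * (1.0 - _sigmoid(z))),
    "tanh": (np.tanh, lambda z: 1.0 - np.tanh(z) ** 2),
    "relu": (lambda z: np.maximum(0.0, z), lambda z: (z > 0).astype(float)),
    "leaky_relu": (lambda z: np.where(z > 0, z, 0.01 * z),
                   lambda z: np.where(z > 0, 1.0, 0.01)),
}


def final_loss(name, X, y, hidden=8, lr=0.1, steps=2000, seed=0):
    """Train a one-hidden-layer network and return its final training loss."""
    act, act_grad = ACTIVATIONS[name]
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    n = len(X)

    for _ in range(steps):
        # Forward pass: hidden layer uses the chosen activation,
        # the output layer uses a sigmoid for binary classification.
        z1 = X @ W1 + b1
        h = act(z1)
        p = _sigmoid(h @ W2 + b2)

        # Backward pass for mean binary cross-entropy.
        d_out = (p - y) / n
        d_h = (d_out @ W2.T) * act_grad(z1)

        # Plain gradient descent updates.
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0)

    p = _sigmoid(act(X @ W1 + b1) @ W2 + b2)
    eps = 1e-12  # avoids log(0)
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


# Toy XOR dataset: not linearly separable, so the hidden activation matters.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

results = {name: final_loss(name, X, y) for name in ACTIVATIONS}
for name, loss in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name:>10}: final loss {loss:.4f}")
```

The ranking this prints depends on the seed and hyperparameters, so treat it as a starting point for comparison and not as a verdict on any one function.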

Frequently Asked Questions (FAQs)

What is an activation function in machine learning?

An activation function in machine learning is a mathematical function used to determine the output of a neural network. It introduces non-linearity into the output of a neuron, which allows for learning beyond simple, linear relationships.

Why do we need an activation function?

Activation functions are crucial because they determine how strongly a neuron is activated for a given input. Many of them also bound the output, for example to between 0 and 1 (sigmoid) or between -1 and 1 (Tanh), while others, such as ReLU, leave positive values unbounded.

What are the different types of activation functions?

There are several types of activation functions, including Sigmoid, Hyperbolic tangent (Tanh), Rectified Linear Unit (ReLU), and Leaky ReLU, among others.

How to choose the right activation function?

Choosing the right activation function depends on the problem at hand, the nature of the input and output data, the complexity of the task, and the computational resources available.

What factors should be considered while implementing activation functions in a neural network?

It's important to consider the complexity of the machine learning task, the type of input and output, the structure of the neural network, and the computational efficiency of the function.