What is a Decision Tree?

A decision tree is a visual representation of decision-making processes. It resembles a flowchart with nodes and branches, where each node represents a decision or an event and each branch represents an outcome or a possible path.

Decision trees are widely used in various fields, such as data mining, machine learning, and operations research.

How do Decision Trees work?

Decision trees work by recursively splitting the dataset based on different attributes or features to create subgroups that are as pure as possible in terms of the target variable.

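At each decision node, a split criterion measures how well each candidate attribute (and, for numerical attributes, each candidate threshold) separates the data. The tree then picks the split with the highest information gain or impurity reduction. Common criteria are Gini impurity or entropy for classification and variance (mean squared error) reduction for regression. The choice is greedy: the chosen split is the best one for that node, not necessarily the best for the tree as a whole.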
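
To make the split criterion concrete, here is a minimal Python sketch of two common impurity measures, Gini impurity and entropy, and of information gain computed from them. The function names are illustrative and not taken from any particular library.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: sum of p * log2(1 / p) over the classes."""
    n = len(labels)
    return sum((c / n) * log2(n / c) for c in Counter(labels).values())


def information_gain(parent, left, right, impurity=entropy):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    children = len(left) / n * impurity(left) + len(right) / n * impurity(right)
    return impurity(parent) - children


labels = ["spam", "spam", "not spam", "not spam"]
print(gini(labels))     # 0.5
print(entropy(labels))  # 1.0
print(information_gain(labels, ["spam", "spam"], ["not spam", "not spam"]))  # 1.0
```

A split that separates the two classes perfectly removes all impurity, so its information gain equals the parent's entropy.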

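This process repeats on each resulting subset until a stopping criterion is met, for example when the tree reaches a maximum depth, a node becomes pure, or a node has too few samples to split further.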
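
The recursive procedure can be sketched in a few dozen lines of Python. This is a simplified illustration, not a production algorithm: it uses Gini impurity, tries only observed feature values as thresholds (real CART implementations usually use midpoints), and the parameter names `max_depth` and `min_samples_split` are assumptions chosen for readability.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split_rows(rows, labels, feature, threshold):
    """Partition samples into those with row[feature] <= threshold and the rest."""
    left = [(r, y) for r, y in zip(rows, labels) if r[feature] <= threshold]
    right = [(r, y) for r, y in zip(rows, labels) if r[feature] > threshold]
    return left, right


def best_split(rows, labels):
    """Return the (feature, threshold) pair with the lowest weighted Gini, or None."""
    best, best_score = None, gini(labels)  # a split must beat the parent's impurity
    n = len(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left, right = split_rows(rows, labels, f, t)
            if not left or not right:
                continue
            score = sum(len(s) / n * gini([y for _, y in s]) for s in (left, right))
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3, min_samples_split=2):
    """Recursively split until a stopping criterion is met; leaves hold the majority label."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping criteria: maximum depth reached, node is pure, or too few samples.
    if depth >= max_depth or len(set(labels)) == 1 or len(labels) < min_samples_split:
        return majority
    split = best_split(rows, labels)
    if split is None:  # no split reduces impurity
        return majority
    f, t = split
    left, right = split_rows(rows, labels, f, t)

    def grow(side):
        return build_tree([r for r, _ in side], [y for _, y in side],
                          depth + 1, max_depth, min_samples_split)

    return {"feature": f, "threshold": t, "left": grow(left), "right": grow(right)}


def predict(tree, row):
    """Walk from the root to a leaf and return the leaf's label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


tree = build_tree([[1], [2], [3], [4]], ["no", "no", "yes", "yes"])
print(tree)  # {'feature': 0, 'threshold': 2, 'left': 'no', 'right': 'yes'}
print(predict(tree, [3.5]))  # yes
```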

Classification and Regression Trees

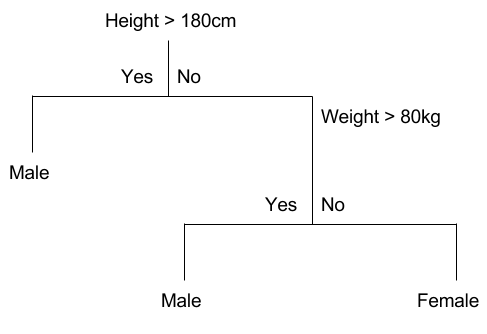

Classification trees are used to predict categorical or discrete outcomes, such as "yes" or "no" answers or class labels like "spam" or "not spam."

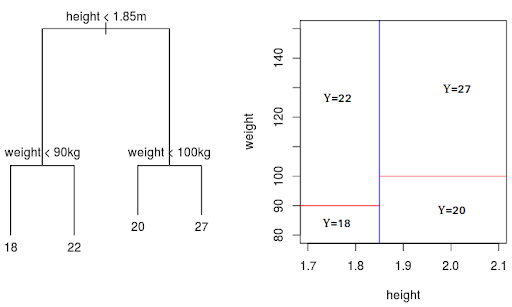

On the other hand, regression trees are used to predict continuous or numerical outcomes, such as predicting a person's income based on their education level, work experience, and other factors.
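
The practical difference shows up in what a leaf predicts. In a minimal sketch, assuming the common convention of majority vote for classification and the mean for regression, the two leaf rules look like this (the income figures are made up for illustration):

```python
from collections import Counter
from statistics import mean


def classification_leaf(labels):
    """A classification leaf predicts the most common class among its samples."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(targets):
    """A regression leaf predicts the mean target value of its samples."""
    return mean(targets)


print(classification_leaf(["spam", "not spam", "spam"]))  # spam
print(regression_leaf([42_000, 48_000, 51_000]))  # 47000
```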

When to use Classification Trees?

Classification trees are particularly useful when the target variable is categorical and you want to assign new observations to classes based on patterns learned from labeled examples. They are commonly used in areas such as spam detection, customer segmentation, and disease diagnosis.

When to use Regression Trees?

Regression trees are useful in situations where you need to predict a continuous outcome or estimate a numerical value. They are often used in areas such as predicting house prices, forecasting sales growth, and estimating product demand.

Components of a Decision Tree

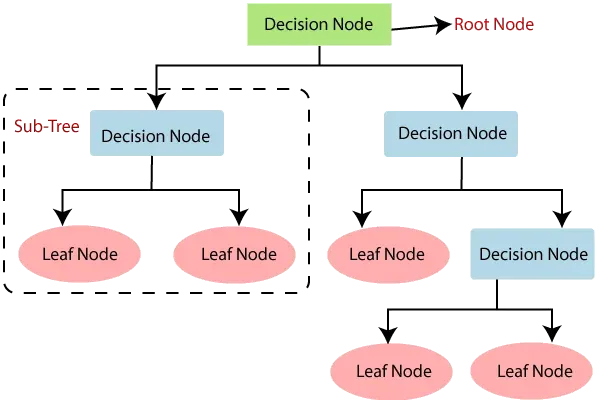

A decision tree is a flowchart-like structure used to make decisions or predictions. It consists of the following key components:

The Decision Node

The decision node in a decision tree represents a question or criterion used to split the dataset into smaller subsets.

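Each decision node tests the attribute or feature that the split criterion identified as most informative at that point, and together the decision nodes guide the decision-making process from the root toward a final outcome.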

The Event Sequence Node

The event sequence node represents a specific outcome or event that occurs based on the decision made at the decision node.

It corresponds to the branch the tree follows based on the answer to the question posed at the decision node. A decision node can have several event sequence nodes, one for each possible outcome of its test.

The Consequences Node

The consequences node, also called a leaf or terminal node, represents the final outcome of following a specific path through the tree. It holds the prediction or classification for input data whose attributes lead down that path.

In a classification tree, the consequences node holds a class label; in a regression tree, it holds a numerical value.
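
One way to model these components in code is sketched below, assuming binary threshold tests. The class names `DecisionNode` and `Leaf` are hypothetical: the decision node stores the test, its `left` and `right` fields are the two outcome branches (the event sequences), and the leaf is the consequences node. The loan example is invented for illustration.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    """Consequences node: holds the final prediction (class label or number)."""
    value: Union[str, float]


@dataclass
class DecisionNode:
    """Decision node: tests `feature <= threshold`; the two branches are the outcomes."""
    feature: str
    threshold: float
    left: "Node"   # outcome when the test is true
    right: "Node"  # outcome when the test is false


Node = Union[Leaf, DecisionNode]


def predict(node: Node, sample: dict) -> Union[str, float]:
    """Follow one branch per decision node until a consequences (leaf) node is reached."""
    while isinstance(node, DecisionNode):
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.value


# Hypothetical tree: approve a loan only for applicants over 30 earning more than 50,000.
tree = DecisionNode(
    "age", 30,
    left=Leaf("no"),
    right=DecisionNode("income", 50_000, left=Leaf("no"), right=Leaf("yes")),
)
print(predict(tree, {"age": 45, "income": 80_000}))  # yes
```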

Benefits of using Decision Trees

Ease of Interpretation

One of the major advantages of decision trees is their ease of interpretation.

Decision trees provide a clear visual representation of the decision-making process, making them easily understandable even for non-technical users.

The flowchart-like structure allows users to trace the decision path and understand how the final outcome is reached.
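
Tracing the decision path is straightforward to automate. The sketch below uses a hypothetical hand-built tree stored as nested dictionaries and returns both the prediction and the conditions checked on the way to it.

```python
def explain(tree, sample):
    """Return the prediction and the list of conditions checked along the path."""
    path = []
    while "leaf" not in tree:
        feature, threshold = tree["feature"], tree["threshold"]
        if sample[feature] <= threshold:
            path.append(f"{feature} <= {threshold}")
            tree = tree["left"]
        else:
            path.append(f"{feature} > {threshold}")
            tree = tree["right"]
    return tree["leaf"], path


# Hypothetical hand-built tree for illustration.
tree = {
    "feature": "age", "threshold": 30,
    "left": {"leaf": "no"},
    "right": {
        "feature": "income", "threshold": 50000,
        "left": {"leaf": "no"},
        "right": {"leaf": "yes"},
    },
}

prediction, path = explain(tree, {"age": 45, "income": 80000})
print(prediction, path)  # yes ['age > 30', 'income > 50000']
```

For trees trained with scikit-learn, `sklearn.tree.export_text` prints the rules of a fitted tree in a similar text form.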

Ease of Preparation

Decision trees require less data preparation than many other machine learning algorithms.

Depending on the implementation, they can handle missing values and work with a mix of categorical and numerical data without extensive preprocessing. They also need no feature scaling or normalization, because each split compares a feature against a threshold, and the resulting partition depends only on the ordering of the values, not on their scale.
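
A small experiment illustrates why scaling is unnecessary. This sketch, with hypothetical helper names and made-up data, finds the best Gini threshold for a feature before and after dividing it by 1,000: the threshold value changes, but the set of samples sent to each branch does not.

```python
def best_threshold(values, labels):
    """Threshold on one numeric feature that minimizes weighted Gini impurity."""
    def gini(ys):
        n = len(ys)
        return 1.0 - sum((ys.count(c) / n) ** 2 for c in set(ys))

    best_t, best_score = None, float("inf")
    for t in sorted(set(values))[:-1]:  # the largest value would leave the right side empty
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t


def partition(values, t):
    """Indices of samples sent to the left branch."""
    return [i for i, v in enumerate(values) if v <= t]


incomes = [18_000, 25_000, 40_000, 52_000, 61_000, 90_000]
labels = ["no", "no", "no", "yes", "yes", "yes"]
scaled = [v / 1000 for v in incomes]  # e.g. income in thousands

t_raw = best_threshold(incomes, labels)
t_scaled = best_threshold(scaled, labels)
print(t_raw, t_scaled)  # 40000 40.0
print(partition(incomes, t_raw) == partition(scaled, t_scaled))  # True
```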

Less Data Cleaning Required

Because splits depend only on the ordering of feature values, decision trees are relatively robust to outliers in the input features and can tolerate some noisy or imperfect data. They can also cope with irrelevant or redundant features, since the split criterion tends to select the most informative attributes and pass over the rest.
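
The automatic feature selection can be seen by comparing the best achievable Gini reduction for an informative feature and for a noise feature. The data and helper names below are hypothetical; the split criterion simply prefers whichever feature reduces impurity the most.

```python
from collections import Counter


def gini(ys):
    """Gini impurity of a list of class labels."""
    n = len(ys)
    return 1.0 - sum((c / n) ** 2 for c in Counter(ys).values())


def best_gain(values, labels):
    """Largest Gini reduction achievable with a single threshold on one feature."""
    parent = gini(labels)
    best = 0.0
    for t in sorted(set(values))[:-1]:
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        child = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        best = max(best, parent - child)
    return best


# Hypothetical data: "informative" tracks the label, "noise" does not.
features = {
    "informative": [1, 2, 3, 4, 5, 6, 7, 8],
    "noise":       [3, 8, 1, 6, 2, 7, 4, 5],
}
labels = ["no", "no", "no", "no", "yes", "yes", "yes", "yes"]

gains = {name: best_gain(vals, labels) for name, vals in features.items()}
chosen = max(gains, key=gains.get)
print(chosen)  # informative
```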

This saves time and effort in data cleaning and feature selection, allowing users to quickly build models.

Applications of Decision Trees

Decision trees are often used in business settings to assess potential growth opportunities.

When trained on data about past performance and market conditions, decision trees can help identify potential customers, target demographics, and effective marketing strategies to support business growth.

Using demographic data to find prospective clients

Decision trees can be utilized to analyze demographic data to identify prospective clients or target specific customer segments.

By considering attributes such as age, income, location, and buying preferences, decision trees can provide insights for targeted marketing campaigns and personalized recommendations.

Decision Trees as support tools in various fields

Decision trees find applications in various domains such as healthcare, finance, and manufacturing. In healthcare, they can be used for disease diagnosis and treatment recommendations.

In finance, decision trees can assist in credit scoring and fraud detection. In manufacturing, they can help identify the process conditions associated with defects, supporting process optimization and quality control.

Frequently Asked Questions (FAQs)

How can decision trees handle missing values in the dataset?

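Depending on the algorithm, decision trees handle missing values in different ways. CART can use surrogate splits: backup splits on other features that closely mimic the primary split, used to route samples whose primary value is missing. C4.5 distributes such samples across all branches with fractional weights. Other implementations learn which branch missing values should follow, or expect the values to be imputed before training.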
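
A simplified surrogate split can be sketched as follows. Given a primary split on one feature, the code searches for the threshold on a backup feature whose left/right assignment agrees most often with the primary split, then uses it when the primary value is missing. The function names and data are hypothetical, and real implementations such as CART typically rank several surrogates rather than keeping just one.

```python
def surrogate_threshold(primary_vals, primary_t, backup_vals):
    """Threshold on a backup feature whose split best mimics the primary split.

    Only rows where both features are present are used. Returns (threshold, agreement).
    """
    pairs = [(p, b) for p, b in zip(primary_vals, backup_vals)
             if p is not None and b is not None]
    goes_left = [p <= primary_t for p, _ in pairs]
    best_t, best_agree = None, -1.0
    for t in sorted({b for _, b in pairs}):
        agree = sum((b <= t) == gl for (_, b), gl in zip(pairs, goes_left)) / len(pairs)
        if agree > best_agree:
            best_t, best_agree = t, agree
    return best_t, best_agree


def route(sample, primary, primary_t, backup, backup_t):
    """Send a sample left or right, falling back to the surrogate if the primary value is missing."""
    if sample[primary] is not None:
        return "left" if sample[primary] <= primary_t else "right"
    return "left" if sample[backup] <= backup_t else "right"


# Hypothetical data: income (in thousands) has a missing value; age is correlated with it.
income = [20, 30, None, 70, 80, 90]
age = [22, 25, 40, 45, 50, 60]

backup_t, agreement = surrogate_threshold(income, 50, age)
print(backup_t, agreement)  # 25 1.0
print(route({"income": None, "age": 40}, "income", 50, "age", backup_t))  # right
```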

Can decision trees handle both categorical and numerical features?

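In principle, yes. Numerical features are split with thresholds (for example, age <= 30), and categorical features with tests on category membership (for example, city is Paris). Whether categorical features can be used without transformation depends on the implementation: some, such as scikit-learn's DecisionTreeClassifier, expect numerical input, so categorical features must be encoded first.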
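
The same split criterion can score both kinds of test. In this sketch, with hypothetical helper names and made-up data, a numerical feature is split with a threshold and a categorical feature with a category-subset test, and both partitions are scored by weighted Gini impurity.

```python
from collections import Counter


def gini(ys):
    """Gini impurity of a list of class labels."""
    n = len(ys)
    return 1.0 - sum((c / n) ** 2 for c in Counter(ys).values())


def split_score(values, labels, test):
    """Weighted Gini impurity of the partition produced by the boolean `test`."""
    left = [y for v, y in zip(values, labels) if test(v)]
    right = [y for v, y in zip(values, labels) if not test(v)]
    n = len(labels)
    return sum(len(side) / n * gini(side) for side in (left, right) if side)


labels = ["yes", "yes", "no", "no"]
ages = [25, 32, 47, 51]                      # numerical feature
cities = ["Paris", "Paris", "Lyon", "Nice"]  # categorical feature

numeric = split_score(ages, labels, lambda v: v <= 32)                    # threshold test
categorical = split_score(cities, labels, lambda v: v in {"Lyon", "Nice"})  # subset test
print(numeric, categorical)  # 0.0 0.0
```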

What is pruning in decision tree algorithms?

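Pruning is a technique that reduces the size of a decision tree by removing branches or nodes that add little predictive value, which helps prevent overfitting and improves generalization to unseen data. Pre-pruning stops growth early (for example, by limiting depth), while post-pruning grows the full tree and then cuts it back.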
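
Reduced-error pruning is one simple post-pruning method; another common one is cost-complexity pruning, which scikit-learn exposes through the `ccp_alpha` parameter. The sketch below is a minimal reduced-error version under stated assumptions: trees are nested dictionaries, every internal node stores its majority training label under a hypothetical `"majority"` key, and a subtree is replaced by a leaf whenever the leaf makes no more errors on a held-out validation set. Ties favor the simpler tree.

```python
def predict(node, x):
    """Walk from `node` down to a leaf and return its label."""
    while "leaf" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]


def prune(node, X_val, y_val):
    """Reduced-error pruning, bottom-up. Returns a new tree; the input is not modified.

    A subtree is replaced by a leaf holding its "majority" label when the leaf
    makes no more errors than the subtree on the validation samples that reach it.
    """
    if "leaf" in node:
        return node
    go_left = [x[node["feature"]] <= node["threshold"] for x in X_val]
    left_X = [x for x, g in zip(X_val, go_left) if g]
    left_y = [y for y, g in zip(y_val, go_left) if g]
    right_X = [x for x, g in zip(X_val, go_left) if not g]
    right_y = [y for y, g in zip(y_val, go_left) if not g]
    pruned = {**node,
              "left": prune(node["left"], left_X, left_y),
              "right": prune(node["right"], right_X, right_y)}
    subtree_errors = sum(predict(pruned, x) != y for x, y in zip(X_val, y_val))
    leaf_errors = sum(pruned["majority"] != y for y in y_val)
    return {"leaf": pruned["majority"]} if leaf_errors <= subtree_errors else pruned


# Hypothetical tree whose inner split (x <= 7) fits a single noisy training sample.
tree = {
    "feature": 0, "threshold": 5, "majority": "no",
    "left": {"leaf": "no"},
    "right": {"feature": 0, "threshold": 7, "majority": "yes",
              "left": {"leaf": "no"}, "right": {"leaf": "yes"}},
}
X_val = [[2], [4], [6], [8], [9]]
y_val = ["no", "no", "yes", "yes", "yes"]
print(prune(tree, X_val, y_val))
# {'feature': 0, 'threshold': 5, 'majority': 'no', 'left': {'leaf': 'no'}, 'right': {'leaf': 'yes'}}
```

The inner split is removed because collapsing it fixes a validation error, while the root split survives because replacing it with a single leaf would add errors.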

How do decision trees handle imbalanced datasets?

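Decision trees do not correct for class imbalance on their own, but they are commonly combined with techniques such as class weighting, upsampling the minority class, or downsampling the majority class, so that minority classes carry more weight during training.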
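
Class weighting can be sketched by computing one weight per class and letting each sample count with its class weight when impurity is measured. The formula below, n_samples / (n_classes * class_count), is the one scikit-learn documents for `class_weight="balanced"`; the helper names and the fraud data are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight per class = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_gini(labels, weights):
    """Gini impurity where each sample counts with its class weight."""
    totals = Counter()
    for y in labels:
        totals[y] += weights[y]
    total = sum(totals.values())
    return 1.0 - sum((w / total) ** 2 for w in totals.values())


labels = ["ok"] * 8 + ["fraud"] * 2
weights = balanced_class_weights(labels)
print(weights)  # {'ok': 0.625, 'fraud': 2.5}
print(weighted_gini(labels, weights))  # 0.5
```

With balanced weights, the 8:2 node is scored as if both classes were equally represented, so splits that isolate the minority class become more attractive.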

Can decision trees handle continuous target variables in regression tasks?

Yes, decision trees can handle continuous target variables in regression tasks, providing predictions or estimates based on the average value of the target variable within the leaf nodes.
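
A regression split can be sketched by choosing the threshold that minimizes the total squared error of the two children, with each child leaf predicting its mean. This is a minimal single-feature illustration with hypothetical names and made-up data, and it assumes the feature has at least two distinct values.

```python
from statistics import mean


def sse(ys):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not ys:
        return 0.0
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)


def best_regression_split(xs, ys):
    """Threshold on x that minimizes the children's total SSE.

    Returns (threshold, left_mean, right_mean); each leaf predicts its mean.
    Assumes xs contains at least two distinct values.
    """
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, t, mean(left), mean(right))
    return best[1:]


years_experience = [1, 2, 3, 10, 11, 12]
income = [30.0, 32.0, 34.0, 70.0, 72.0, 74.0]  # hypothetical, in thousands
print(best_regression_split(years_experience, income))  # (3, 32.0, 72.0)
```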