What is Pruning?

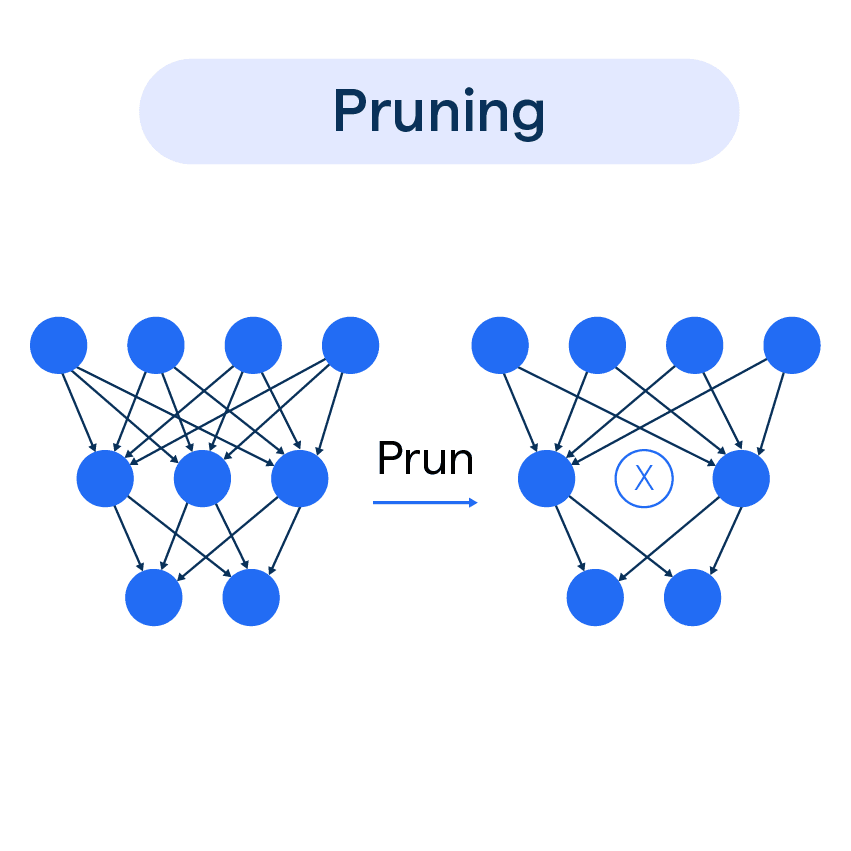

Pruning is a machine learning technique for making models smaller and more efficient. It removes unnecessary components, such as weights or units, with little or no loss in accuracy. Eliminating these less important components reduces the model's complexity, which makes the model cheaper to run and easier to deploy.

Why Pruning?

Pruning is an important technique in machine learning for several reasons. Firstly, it reduces the size of models, making them more compact and efficient. This can be particularly useful for deploying models on resource-constrained devices or in situations where memory and storage are limited.

Secondly, pruning can improve model interpretability. Removing unnecessary components makes the underlying structure of the model more transparent and easier to analyze. This can help in understanding the decision-making process of complex models like neural networks.

Types of Pruning

Pruning can be performed using different approaches, depending on the specific requirements of the model and the desired outcome. Let's take a look at some common types of pruning.

Pre-pruning

Pre-pruning, also called early stopping, halts the growth of a decision tree before it reaches its full size, which helps prevent overfitting.
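As a minimal sketch of pre-pruning, the example below uses scikit-learn's `DecisionTreeClassifier` and stops growth with the `max_depth` and `min_samples_leaf` limits. The dataset and the specific limit values are assumptions chosen only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Unconstrained tree: grows until the leaves are pure.
full_tree = DecisionTreeClassifier(random_state=0).fit(X, y)

# Pre-pruned tree: growth is halted by a depth limit and a minimum leaf size.
pre_pruned = DecisionTreeClassifier(
    max_depth=3, min_samples_leaf=5, random_state=0
).fit(X, y)

# Compare the size of the two trees.
print("full tree nodes:", full_tree.tree_.node_count)
print("pre-pruned nodes:", pre_pruned.tree_.node_count)
```

In practice the limits would be tuned on validation data rather than fixed in advance.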

Post-pruning

Post-pruning entails building the full decision tree and then selectively trimming it to improve generalization and reduce model complexity.

Ensemble Pruning

Ensemble pruning targets ensemble learning models by refining and selecting optimal base learners to maximize accuracy and model efficiency.

Weight Pruning

Weight pruning, applied to neural networks, eliminates weak connections or weights below a threshold, simplifying the network and reducing computational complexity.
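A minimal NumPy sketch of threshold-based weight pruning is shown below. The function name `prune_by_threshold`, the example matrix, and the threshold of 0.1 are all hypothetical choices for illustration.

```python
import numpy as np

def prune_by_threshold(weights, threshold):
    """Zero out weights whose absolute value is below `threshold`."""
    mask = np.abs(weights) >= threshold   # True where a connection is kept
    return weights * mask, mask

# A small example weight matrix (values chosen for illustration).
W = np.array([[0.8, -0.02, 0.3],
              [0.01, -0.6, 0.05]])

pruned_W, mask = prune_by_threshold(W, threshold=0.1)
sparsity = 1.0 - mask.mean()              # fraction of weights removed

print(pruned_W)
print("sparsity:", sparsity)
```

The mask is returned alongside the pruned weights so that it can be reapplied if the model is fine-tuned afterwards.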

Neuron Pruning

Neuron pruning focuses on removing entire neurons, or even entire layers, that contribute little to the output, streamlining the neural network while retaining its performance.
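The sketch below illustrates neuron pruning for a network with one hidden layer. Scoring each hidden unit by the norm of its outgoing weights is an assumption made here for simplicity; other importance measures, such as activation statistics, are also possible. The function name `prune_hidden_units` is hypothetical.

```python
import numpy as np

def prune_hidden_units(W1, b1, W2, n_remove):
    """Drop the `n_remove` hidden units with the smallest outgoing-weight norm.

    W1: (inputs, hidden), b1: (hidden,), W2: (hidden, outputs).
    """
    scores = np.linalg.norm(W2, axis=1)              # one score per hidden unit
    keep = np.sort(np.argsort(scores)[n_remove:])    # indices of surviving units
    # Removing a unit deletes its column in W1, its bias, and its row in W2.
    return W1[:, keep], b1[keep], W2[keep, :]

rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 6))
b1 = rng.normal(size=6)
W2 = np.ones((6, 2))
W2[[1, 4], :] = 1e-3     # units 1 and 4 barely influence the output

W1_p, b1_p, W2_p = prune_hidden_units(W1, b1, W2, n_remove=2)
print(W1_p.shape, b1_p.shape, W2_p.shape)
```

Unlike weight pruning, this produces smaller dense matrices, so the savings do not depend on sparse-matrix support.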

How does Pruning Work?

In this section, we'll cover the key idea behind pruning in machine learning models.

Removing Redundant Connections

Pruning works by removing connections between neurons in a neural network that have little impact on the model's accuracy. This process identifies and eliminates redundant or non-critical connections.

Improving Efficiency

By removing unnecessary connections, pruning reduces the size of the model and eliminates superfluous parameters. This decreases storage requirements, can reduce overfitting, and can speed up inference when the hardware or runtime is able to exploit the resulting sparsity.

Iterative Approach

Pruning is done iteratively by removing a small percentage of low-importance connections, retraining the model, and evaluating performance after each round. This maintains accuracy while incrementally compressing the model.
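The prune, retrain, and evaluate loop can be sketched on a toy problem. The example below assumes a linear model trained by gradient descent on synthetic data in which only three of ten weights matter; the data, the learning rate, and the choice of removing two weights per round are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.5, 0.0, 0.0, 0.0, 0.0, 0.0])
y = X @ true_w + 0.01 * rng.normal(size=200)

def train(w, mask, steps=200, lr=0.05):
    """Gradient descent on mean squared error; pruned weights stay at zero."""
    for _ in range(steps):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w = (w - lr * grad) * mask
    return w

def mse(w):
    return float(np.mean((X @ w - y) ** 2))

mask = np.ones(10)
w = train(np.zeros(10), mask)

for round_ in range(3):
    # Prune the 2 smallest-magnitude surviving weights.
    alive = np.flatnonzero(mask)
    drop = alive[np.argsort(np.abs(w[alive]))[:2]]
    mask[drop] = 0.0
    # Retrain the remaining weights, then evaluate.
    w = train(w, mask)
    print("round", round_, "weights left:", int(mask.sum()), "mse:", mse(w))
```

A real pipeline would evaluate on held-out data after each round and stop once the error exceeds an acceptable limit.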

Tradeoff Between Accuracy and Efficiency

The degree of pruning involves a tradeoff between model accuracy and efficiency. More aggressive pruning leads to higher efficiency but can impact accuracy if taken too far. Careful tuning is required to balance the two.

When to Prune?

Deciding when to perform pruning is an important consideration. Pruning can be done during the training process (during iterative optimization) or post-training. The optimal timing for pruning depends on factors such as the model complexity, dataset size, and computational resources available.

- Pruning during training allows for continuous refinement of the model and can potentially lead to more efficient convergence. However, it requires additional computational resources during the training process.

- Pruning post-training can be performed on pre-trained models and offers flexibility in terms of choosing the pruning threshold and fine-tuning strategies. It is suitable when computational resources are limited or when the training process is time-sensitive.

Pruning Algorithms

Several pruning algorithms have been developed to automate the pruning of decision trees and neural networks. Here are a few popular ones:

Reduced Error Pruning

This algorithm works bottom-up on a fully grown decision tree: it replaces a subtree with a leaf whenever doing so does not increase the error on a held-out validation set. Pruning stops when any further removal would increase the validation error.
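A minimal sketch of reduced error pruning follows. It assumes a hypothetical tree format made of nested dictionaries, where each internal node stores a `feature`, a `threshold`, the `majority` training label at that node, and `left` and `right` children, and each leaf stores only a `label`.

```python
def predict(node, x):
    """Follow the splits until a leaf is reached."""
    while "label" not in node:
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["label"]

def errors(node, rows):
    """Number of misclassified (features, label) pairs."""
    return sum(predict(node, x) != y for x, y in rows)

def reduced_error_prune(node, val_rows):
    """Bottom-up: replace a subtree with a leaf if validation errors do not rise.

    Note: child links of `node` are updated in place.
    """
    if "label" in node:
        return node
    f, t = node["feature"], node["threshold"]
    node["left"] = reduced_error_prune(
        node["left"], [(x, y) for x, y in val_rows if x[f] <= t])
    node["right"] = reduced_error_prune(
        node["right"], [(x, y) for x, y in val_rows if x[f] > t])
    leaf = {"label": node["majority"]}
    return leaf if errors(leaf, val_rows) <= errors(node, val_rows) else node

# Hand-built tree whose lower-right split does not hold up on validation data.
tree = {
    "feature": 0, "threshold": 5, "majority": 0,
    "left": {"label": 0},
    "right": {
        "feature": 1, "threshold": 2, "majority": 1,
        "left": {"label": 1},
        "right": {"label": 0},
    },
}
val_rows = [([1, 0], 0), ([2, 9], 0), ([3, 1], 0),
            ([7, 1], 1), ([8, 3], 1), ([9, 4], 1), ([6, 1], 1)]

pruned = reduced_error_prune(tree, val_rows)
print(pruned)
```

Ties are resolved in favor of the leaf, so the simpler tree is preferred whenever the validation error is unchanged.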

Cost Complexity Pruning

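Also known as weakest link pruning, this method adds a penalty to the tree's error that is proportional to its number of leaves, weighted by a complexity parameter α. It repeatedly removes the subtree whose removal increases the error the least per leaf removed, producing a sequence of progressively smaller trees from which the best one is chosen, typically by cross-validation.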
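scikit-learn exposes cost complexity pruning through the `ccp_alpha` parameter and the `cost_complexity_pruning_path` method of `DecisionTreeClassifier`. The sketch below assumes the breast cancer dataset and a single train/validation split purely for illustration; cross-validation would be a more robust way to select the final tree.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=0)

# Alpha values at which successive subtrees are pruned away.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(
    X_train, y_train
)

# Fit one tree per alpha; larger alphas give smaller trees.
trees = [
    DecisionTreeClassifier(random_state=0, ccp_alpha=alpha).fit(X_train, y_train)
    for alpha in path.ccp_alphas
]

# Keep the tree that scores best on the validation split.
best = max(trees, key=lambda t: t.score(X_val, y_val))
print("nodes in full tree:", trees[0].tree_.node_count)
print("nodes in selected tree:", best.tree_.node_count)
```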

Minimum Description Length Pruning

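This approach balances the trade-off between tree simplicity and fit to the training data. It prefers the tree that gives the shortest combined description of the tree itself and of the training examples it misclassifies, pruning nodes that do not justify their own description cost.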

Optimal Brain Damage and Optimal Brain Surgeon

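These algorithms, mainly used for pruning neural networks, use second-order (Hessian) information about the loss to estimate how much the error would increase if a weight were removed, and then remove the weights with the smallest estimated impact. Optimal Brain Damage approximates the Hessian by its diagonal, while Optimal Brain Surgeon uses the full inverse Hessian and also adjusts the remaining weights.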
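As a rough sketch of the Optimal Brain Damage idea, the saliency of weight k is estimated as half the k-th diagonal Hessian entry times the squared weight. The example below assumes a linear model with loss ||Xw - y||² / (2n), for which the Hessian XᵀX / n is known exactly, and assumes `w` holds already trained weights; both are simplifications made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Feature 2 has a much larger scale than the others.
X = rng.normal(size=(500, 4)) * np.array([1.0, 1.0, 10.0, 1.0])
w = np.array([2.0, 0.05, 0.1, -0.3])     # assumed to be the trained weights

# Diagonal of the Hessian H = X^T X / n for the loss ||Xw - y||^2 / (2n).
H_diag = np.mean(X ** 2, axis=0)

# OBD saliency: estimated loss increase if the weight is set to zero.
saliency = 0.5 * H_diag * w ** 2

prune_order = np.argsort(saliency)       # least salient weight first
magnitude_order = np.argsort(np.abs(w))  # what plain magnitude pruning would do

print("OBD order:      ", prune_order)
print("magnitude order:", magnitude_order)
```

The two orderings differ here because the small weight on the large-scale feature still has a large effect on the loss, which a purely magnitude-based criterion cannot see.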

Magnitude Based Pruning

In neural networks, this technique removes weights whose absolute value falls below a certain threshold, on the assumption that small weights contribute little to the output.
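In practice the threshold is often derived from a target sparsity rather than set by hand. The sketch below assumes a global variant that ranks the weights of all layers together; the function name `global_magnitude_masks` and the example layers are hypothetical.

```python
import numpy as np

def global_magnitude_masks(layers, sparsity):
    """Return one keep-mask per layer so that about `sparsity` of all weights go.

    Weights tied with the threshold are also removed, so slightly more than
    the requested fraction can be pruned when there are ties.
    """
    all_magnitudes = np.concatenate([np.abs(w).ravel() for w in layers])
    k = int(sparsity * all_magnitudes.size)        # number of weights to remove
    if k == 0:
        return [np.ones_like(w, dtype=bool) for w in layers]
    threshold = np.sort(all_magnitudes)[k - 1]     # k-th smallest magnitude
    return [np.abs(w) > threshold for w in layers]

layers = [
    np.array([[0.9, -0.1], [0.05, 0.4]]),
    np.array([0.02, -0.7, 0.3, 0.01]),
]
masks = global_magnitude_masks(layers, sparsity=0.5)
pruned_layers = [w * m for w, m in zip(layers, masks)]

for m in masks:
    print(m)
```

Ranking globally lets layers with many small weights be pruned more heavily than layers whose weights are mostly large.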

Evaluation Measures for Pruning

When evaluating the impact of pruning, several metrics can be considered:

Model Accuracy

Model accuracy measures the ratio of correct predictions to the total predictions made.

F1 Score

F1 score balances precision and recall, offering a comprehensive evaluation of model performance, especially in cases with imbalanced data.

Confusion Matrix

A confusion matrix helps visualize the classification performance of a model by showing correct and incorrect predictions in a table.
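Accuracy, F1 score, and the confusion matrix can all be derived from the same four counts. The sketch below assumes binary labels with 1 as the positive class and uses made-up predictions for a dense model and a pruned model to show how the two scores can diverge on imbalanced data.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn

def accuracy_and_f1(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0   # F1 = 2TP / (2TP + FP + FN)
    return accuracy, f1

# Imbalanced toy data: only 2 of 10 examples are positive.
y_true       = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
dense_preds  = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]   # hypothetical unpruned model
pruned_preds = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]   # hypothetical pruned model

print("dense: ", accuracy_and_f1(y_true, dense_preds))
print("pruned:", accuracy_and_f1(y_true, pruned_preds))
```

Missing a single positive example lowers accuracy only slightly but lowers F1 much more, which is why both are worth checking after pruning.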

Feature Importance

Feature importance highlights the significance of individual features in contributing to the model's predictions, aiding the pruning process.

Model Complexity

Evaluating model complexity can help identify areas to reduce overfitting and improve generalization through pruning.
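For a neural network, one simple proxy for complexity is the number of nonzero parameters. The helper below is a hypothetical sketch that assumes the model's weights are available as a list of NumPy arrays.

```python
import numpy as np

def complexity_report(layers):
    """Count total and nonzero parameters and derive the overall sparsity."""
    total = sum(w.size for w in layers)
    nonzero = sum(int(np.count_nonzero(w)) for w in layers)
    return {"total": total, "nonzero": nonzero, "sparsity": 1 - nonzero / total}

# Example: weights of a small pruned model (values chosen for illustration).
layers = [
    np.array([[0.0, 1.5], [0.0, 0.0]]),
    np.array([2.0, 0.0, -1.0]),
]
report = complexity_report(layers)
print(report)
```

For decision trees, the number of nodes or leaves plays the same role.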

Application of Pruning in Machine Learning

Pruning finds applications in various machine learning domains. Some common examples include:

Reducing Overfitting

Pruning helps reduce overfitting, as it trims network connections or tree branches that have minimal impact on prediction accuracy, ultimately yielding a more generalized model.

Accelerating Training Time

By removing less influential model components, pruning can shorten later training or fine-tuning rounds, since fewer parameters remain to be updated. The actual speedup depends on whether the hardware and software can take advantage of the sparsity.

Enhancing Model Interpretability

Pruned models can become easier to interpret due to their reduced complexity. Simplified models facilitate better understanding and interpretation of learning patterns and decision-making processes in machine learning algorithms.

Resource Optimization

Pruning helps minimize resource consumption, like memory and computation demands, by eliminating extraneous elements. This is particularly valuable when deploying models on resource-constrained environments and embedded systems.

Implementing Post-Training Pruning

Post-training pruning is an approach where the model is first fully trained and then selectively pruned for optimization. It enables developers to identify and remove unnecessary connections while preserving the model's effectiveness.

Challenges and Limitations of Pruning

While pruning offers many benefits, it also has some challenges and limitations:

Loss of Important Information

Selective removal of nodes or weights while pruning carries the risk of losing potentially valuable information, which may lead to reduced model performance or inaccurate predictions.

Selection of an Appropriate Threshold

Determining an optimal threshold for pruning is critical, as it dictates the balance between model complexity and performance. Finding the right threshold can often be challenging and closely depends on the specific problem domain.

Dependency on the Initial Network Structure

The success of pruning relies heavily on the initial network architecture. A suboptimal design can obstruct the pruning process and make it difficult to achieve desired improvements in model efficiency and performance.

Impact on Model Generalization

Improper pruning can compromise a model's generalization: pruning too aggressively can cause underfitting, while pruning too little leaves overfitting in place. Striking the right balance is crucial for maintaining model performance on unseen data.

Computational Cost and Time

Certain pruning methods are computationally intensive, for example because they require repeated retraining, and the longer training times can offset the benefits gained by reducing the model's complexity.

Frequently Asked Questions (FAQs)

What is pruning in machine learning?

Pruning is a technique for making machine learning models smaller and more efficient by removing unnecessary components like weights or units with little or no loss in accuracy.

How does pruning work?

Pruning involves assigning importance scores to different components and then removing less important weights or units. The model is then fine-tuned to maintain performance and accuracy.

What are the benefits of pruning?

Pruning reduces the size of models, making them more efficient and easier to deploy. It can also improve interpretability, reduce overfitting, and speed up inference.

When is the best time to perform pruning?

Pruning can be done either during the training process or post-training. The optimal timing for pruning depends on factors such as the model complexity, dataset size, and computational resources available.

What are some pruning algorithms?

Popular pruning algorithms include the Optimal Brain Surgeon (OBS), Optimal Brain Damage (OBD), Iterative Magnitude Pruning (IMP), and Connection Pruning.