What are Deep Neural Networks?

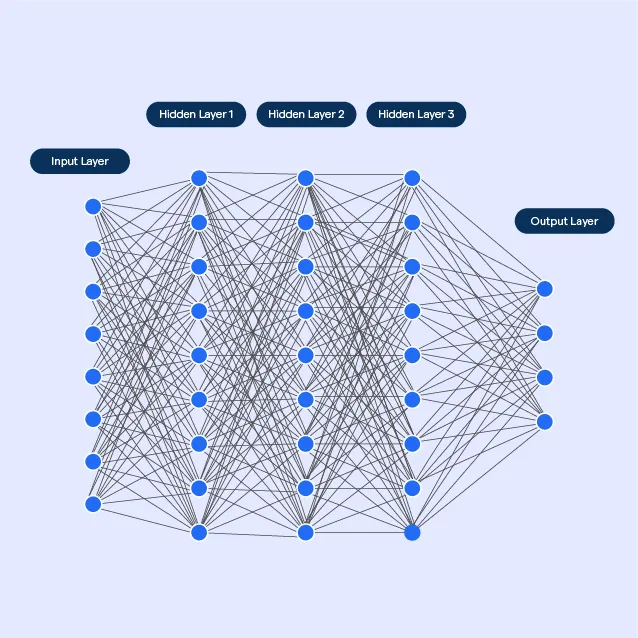

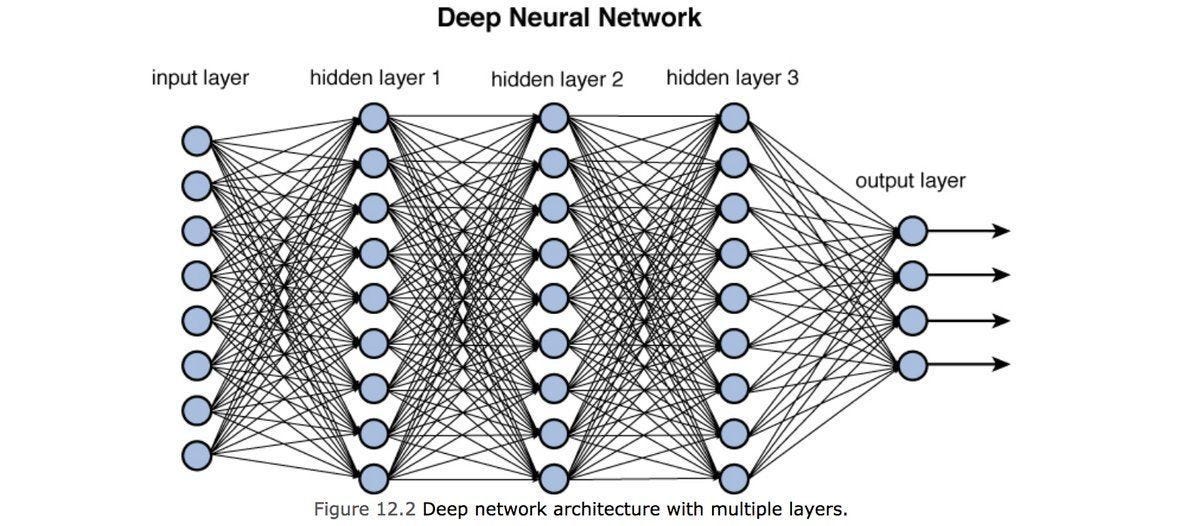

Deep neural networks (DNNs) are a type of machine learning model loosely inspired by the structure of the human brain.

These networks consist of multiple layers of interconnected nodes or "neurons" that work together to process complex information.

Each layer in the network performs a specific mathematical operation on the input data, and the output of one layer serves as the input for the next layer.

By combining and refining information from multiple layers, deep neural networks can identify patterns and relationships in data and make accurate predictions or classifications.

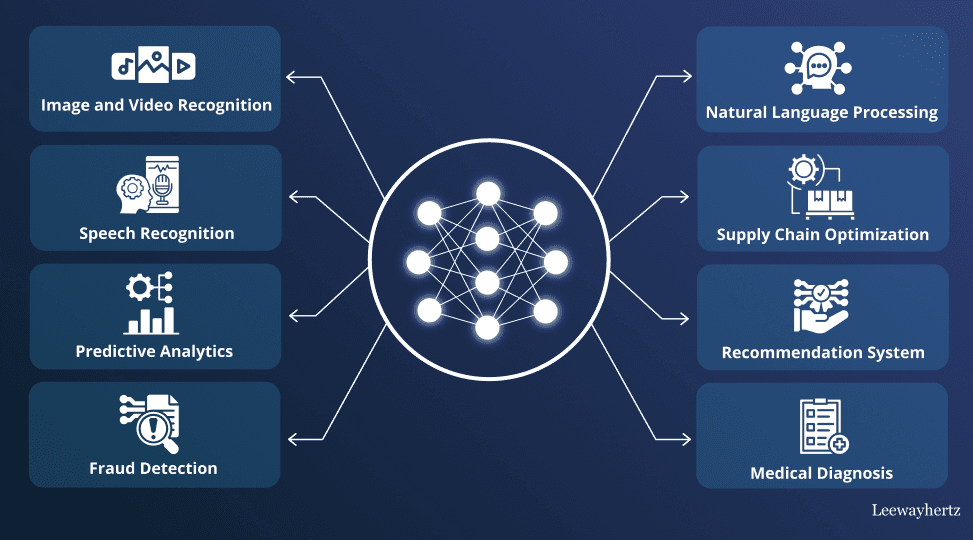

They are used in various applications, such as image and speech recognition, natural language processing, and autonomous vehicles.

History of Deep Neural Networks

DNNs have a long history that dates back to the 1940s, when the first mathematical model of an artificial neuron was proposed. Interest grew in the 1980s and 1990s, when backpropagation made it practical to train multi-layer networks, and deep networks became widely adopted in the 2010s as computer hardware, datasets, and algorithms improved.

Since then, DNNs have revolutionized many fields and led to significant breakthroughs in AI research.

Why are Deep Neural Networks important?

DNNs are essential because they can automate tasks previously thought impossible or difficult for computers to perform.

For example, DNNs can recognize faces, translate between languages, forecast financial time series, and help diagnose diseases from medical data.

Moreover, DNNs can learn from large amounts of data and improve their performance over time, making them well suited to applications that require continuous learning and adaptation.

Key Concepts in Deep Neural Networks

The key concepts in deep neural networks are:

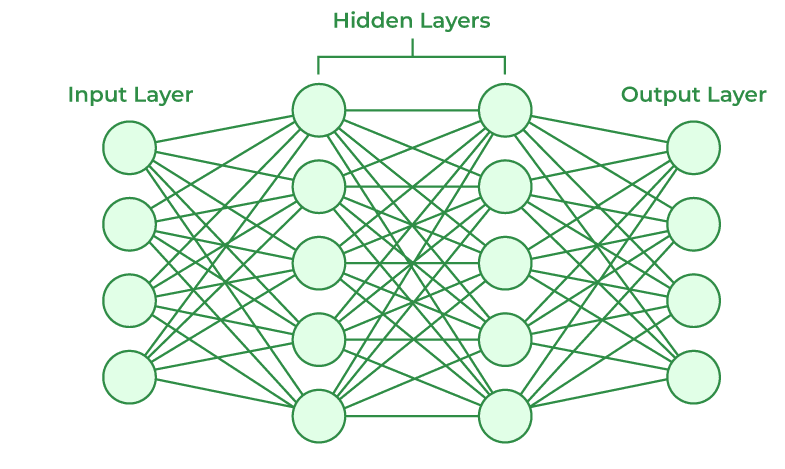

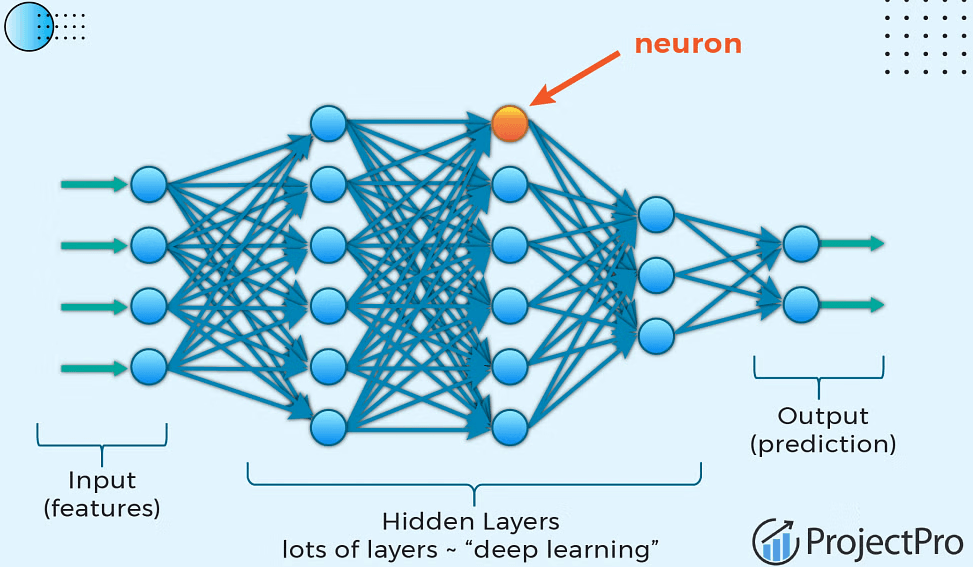

Artificial Neural Networks (ANNs)

These are computational models inspired by the structure and function of biological neurons. ANNs consist of an input layer, one or more hidden layers, and an output layer.

Each neuron in the network receives inputs, performs a weighted sum of these inputs, applies an activation function to the aggregate, and outputs a signal to the next layer.
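The computation a single neuron performs can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names, the choice of sigmoid as the activation, the bias term, and all numeric values are assumptions made for the example.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer_output(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical weights and inputs, chosen only for illustration.
hidden = layer_output([0.5, -1.0], [[0.1, 0.2], [-0.3, 0.4]], [0.0, 0.1])
output = neuron_output(hidden, [0.7, -0.5], 0.05)  # output neuron reads the hidden layer
```

Stacking calls like this, with each layer reading the previous layer's outputs, is all that a forward pass through a small fully connected network amounts to.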

Backpropagation

Backpropagation is a learning algorithm used to adjust the neurons' weights in a DNN to minimize the difference between the predicted and actual outputs.

Backpropagation propagates the error backward from the output layer to the input layer and updates the weights using gradient descent.
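To make the backward pass concrete, here is a small sketch for the simplest possible "deep" network: one input, one hidden neuron, and one output neuron. The architecture, the squared-error loss, the sigmoid activations, the omitted biases, and every name and number are assumptions chosen to keep the chain rule visible.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w1, w2, x):
    """Input -> hidden neuron -> output neuron (biases omitted for brevity)."""
    h = sigmoid(w1 * x)
    y = sigmoid(w2 * h)
    return h, y

def loss_fn(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def gradients(w1, w2, x, target):
    """Apply the chain rule from the output back toward the input."""
    h, y = forward(w1, w2, x)
    delta_out = (y - target) * y * (1.0 - y)       # error signal at the output neuron
    grad_w2 = delta_out * h
    delta_hidden = delta_out * w2 * h * (1.0 - h)  # error propagated back to the hidden neuron
    grad_w1 = delta_hidden * x
    return grad_w1, grad_w2

def train(w1, w2, x, target, lr=0.5, steps=500):
    """Plain gradient descent: step each weight against its gradient."""
    losses = []
    for _ in range(steps):
        losses.append(loss_fn(w1, w2, x, target))
        g1, g2 = gradients(w1, w2, x, target)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2, losses

# Hypothetical starting weights and a single made-up training example.
w1, w2, losses = train(w1=0.5, w2=-0.5, x=1.0, target=0.9)
```

Real frameworks compute these gradients automatically for networks with millions of weights, but the pattern is the same: a forward pass, an error signal at the output, and repeated application of the chain rule layer by layer.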

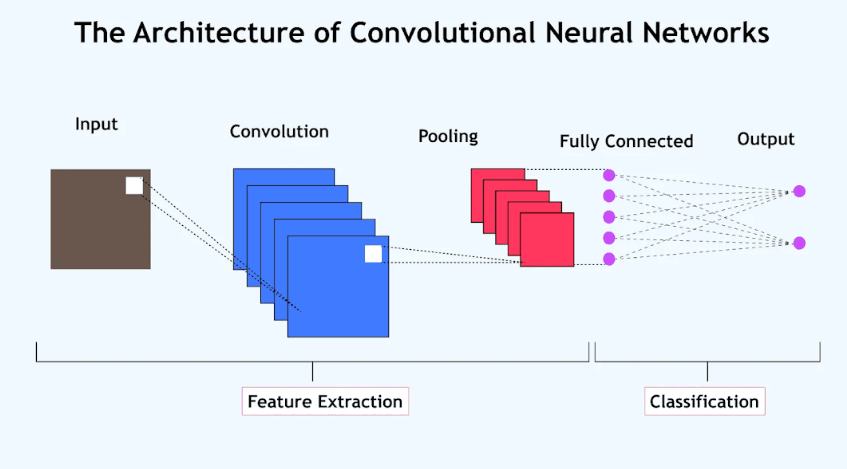

Convolutional Neural Networks (CNNs)

CNNs are a type of DNN particularly suited to image and video recognition tasks. CNNs use convolution operations to extract local features from input images.

Each convolution is typically followed by an activation function and a pooling step that reduces the spatial dimensions, and fully connected layers at the end of the network classify the images.
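The convolution, activation, and pooling steps can be illustrated on a tiny grayscale image using nested lists. This is a sketch under simplifying assumptions: a single channel, no padding, a stride of one, and a hand-written edge-detecting kernel rather than a learned one. All function names are made up for the example.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation most CNN libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu_map(feature_map):
    """Apply ReLU to every value in the feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# A 5x5 image that is dark on the left and bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # hand-picked kernel that responds to dark-to-bright edges

features = max_pool2d(relu_map(conv2d(image, vertical_edge)))
# features is a 2x2 map with its largest values in the column where the edge sits
```

In a trained CNN the kernel values are learned by backpropagation, and many kernels run in parallel to produce many feature maps.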

Recurrent Neural Networks (RNNs)

RNNs are a type of DNN designed to handle sequential data such as speech, text, and time series.

RNNs use feedback connections to pass information from one time step to the next. Plain RNNs often struggle to learn long-term dependencies in the data, so many designs add gated memory cells such as Long Short-Term Memory (LSTM) units.
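The feedback connection can be shown with a single recurrent unit whose hidden state is fed back in at every step. This is a minimal sketch of a plain (non-LSTM) recurrent cell; the tanh activation, the scalar weights, and the input sequence are assumptions for illustration only.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# A single "pulse" followed by silence: later states are still nonzero
# because the hidden state remembers the earlier input.
states = run_rnn([1.0, 0.0, 0.0])
```

The remembered signal fades with every step in this example, which hints at why plain recurrent units have trouble with long sequences and why gated cells such as LSTM units are common.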

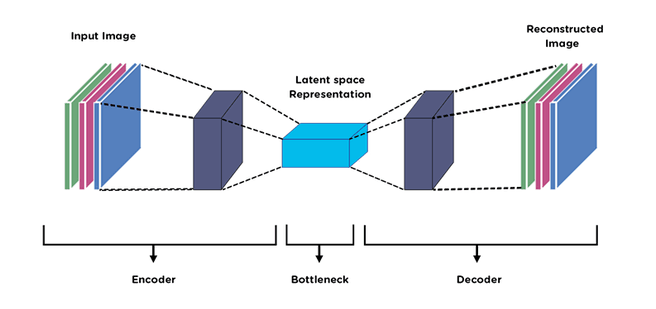

Autoencoders

Autoencoders are a type of unsupervised DNN that can learn a compressed representation of input data.

Autoencoders consist of an encoder that maps the input data to a lower-dimensional representation and a decoder that maps that representation back to the input space. Autoencoders can be used for data compression, denoising, and anomaly detection.
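The encoder and decoder idea, and its use for anomaly detection, can be sketched with a deliberately tiny linear example. Note the assumptions: the weights below are hand-picked rather than learned, the model compresses two inputs into a single code value, and the names are hypothetical. A real autoencoder would learn its weights by minimizing reconstruction error on training data.

```python
import math

S = 1.0 / math.sqrt(2.0)
ENCODER_WEIGHTS = [S, S]  # 2 inputs -> 1 code value (hand-picked, not trained)
DECODER_WEIGHTS = [S, S]  # 1 code value -> 2 outputs

def encode(x):
    """Compress the input to a single number."""
    return sum(w * xi for w, xi in zip(ENCODER_WEIGHTS, x))

def decode(code):
    """Expand the code back to the input space."""
    return [w * code for w in DECODER_WEIGHTS]

def reconstruction_error(x):
    """Squared distance between the input and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

typical = reconstruction_error([2.0, 2.0])   # matches the pattern the code can represent
unusual = reconstruction_error([2.0, -2.0])  # does not match, so it reconstructs poorly
```

Inputs that resemble what the model can represent come back almost unchanged, while unusual inputs come back distorted. A large reconstruction error is therefore a simple anomaly signal.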

Transfer Learning

Transfer learning is a technique that involves reusing the pre-trained weights of a DNN on a new, related task.

Transfer learning can save time and resources compared to training a DNN from scratch and can improve the performance of the DNN on the new task.
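One common way to apply transfer learning is to freeze the pre-trained layers and train only a new output layer. The sketch below imitates that with a toy setup: `pretrained_features` stands in for a frozen, already-trained layer, and only the new "head" weights are updated. The function names, the frozen weights, the target task, and the training settings are all assumptions for illustration.

```python
def pretrained_features(x):
    """Hypothetical frozen layer: its weights (1.0 and -1.0) are never updated."""
    return [max(0.0, 1.0 * x), max(0.0, -1.0 * x)]

def predict(head, x):
    """New output layer applied on top of the frozen features."""
    return sum(w * f for w, f in zip(head, pretrained_features(x)))

def fine_tune_head(data, lr=0.05, epochs=200):
    """Train only the new output weights with full-batch gradient descent on MSE."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        grads = [0.0, 0.0]
        for x, y in data:
            error = predict(head, x) - y
            for i, f in enumerate(pretrained_features(x)):
                grads[i] += 2.0 * error * f / len(data)
        head = [w - lr * g for w, g in zip(head, grads)]
    return head

# New task, made up for illustration: y = 2 * |x|
data = [(x, 2.0 * abs(x)) for x in (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)]
head = fine_tune_head(data)  # both head weights end up close to 2.0
```

Because the reused features already capture useful structure, the new task only needs two weights to be learned, which is the source of the time and data savings.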

Deep Neural Network Architecture

Input Layer

The input layer of a DNN is responsible for receiving the raw input data and passing it to the first hidden layer.

The input layer can have one or more neurons, depending on the size and dimensionality of the input data.

Hidden Layers

The hidden layers of a DNN are responsible for processing the input data and extracting relevant features for the task.

The number and size of the hidden layers can vary depending on the complexity of the task and the amount of available data.

Output Layer

The output layer of a DNN is responsible for producing the network's final output, which can be a scalar, a vector, or a sequence.

The activation function of the output layer depends on the task. Common choices include softmax for multi-class classification, sigmoid for binary classification, and a linear (identity) output for regression.

Activation Functions

Activation functions are used in the neurons of a DNN to introduce nonlinearity into the network and enable it to learn complex patterns.

Popular activation functions include ReLU, sigmoid, tanh, and softmax.
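For reference, the four functions named above can be written directly from their standard definitions. This is a plain-Python sketch for scalars and short lists; production libraries provide vectorized versions.

```python
import math

def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Map any real number into (-1, 1)."""
    return math.tanh(z)

def softmax(zs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # the largest score gets the largest probability
```

ReLU, sigmoid, and tanh act on one value at a time, while softmax acts on a whole vector of scores, which is why it is usually reserved for the output layer of a classifier.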

Regularization Techniques

Regularization techniques are used in DNNs to prevent overfitting, which occurs when the network memorizes the training data instead of learning general patterns.

Popular regularization techniques include dropout, batch normalization, and weight decay.

Dropout

Dropout is a regularization technique that randomly drops out some of the neurons in a DNN during training, which forces the remaining neurons to learn more robust features and reduces the risk of overfitting.
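A common way to implement this is "inverted" dropout, sketched below. The scaling of the surviving activations is a detail of this particular variant and is an assumption here, as are the function name, the drop probability, and the fixed random seed.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob during
    training and scale the survivors so the expected value is unchanged."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)  # dropout is switched off at inference time
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the example is repeatable
hidden = [0.2, 0.9, 0.4, 0.7]
train_out = dropout(hidden, drop_prob=0.5, rng=rng)                  # some values zeroed, the rest doubled
eval_out = dropout(hidden, drop_prob=0.5, rng=rng, training=False)   # unchanged
```

Because a different random subset of neurons is silenced on every training step, no single neuron can rely on a specific partner always being present.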

Batch Normalization

Batch normalization is a normalization technique that normalizes the activations of the neurons in a DNN to have zero mean and unit variance.

Batch normalization can improve the training speed and stability of the network.
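The normalization step for one neuron's activations across a batch can be sketched as follows. The learnable scale `gamma`, shift `beta`, and small constant `eps` are standard parts of the technique, but their default values here are illustrative assumptions. This sketch covers only the training-time computation; at inference time, implementations typically use running averages of the mean and variance instead.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch to zero mean and unit variance,
    then apply a scale (gamma) and shift (beta)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

# Activations of a single neuron for a batch of four examples (made-up numbers).
normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

The `eps` term prevents division by zero when every value in the batch is identical.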

Deep Neural Network Training

The main steps and components of deep neural network training are:

Data Preprocessing

Data preprocessing is a crucial step in DNN training that involves cleaning, transforming, and normalizing the input data to improve the performance and convergence of the network.

Standard preprocessing techniques include normalization, standardization, and data augmentation.
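Two of these techniques, min-max normalization and standardization, are simple enough to sketch for a single feature column. The function names and sample values are illustrative. In practice the statistics are computed on the training set only and then reused for validation and test data.

```python
import math

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to rescale
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift and scale values to zero mean and unit standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0.0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

ages = [10.0, 20.0, 30.0]           # made-up feature column
scaled = min_max_normalize(ages)    # [0.0, 0.5, 1.0]
centered = standardize(ages)        # middle value maps to 0.0
```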

Loss Functions

Loss functions are used in DNNs to measure the difference between the predicted and actual outputs of the network.

The choice of loss function depends on the task. Commonly used loss functions include mean squared error for regression, cross-entropy for multi-class classification, and binary cross-entropy for two-class problems.
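These loss functions follow directly from their textbook definitions, sketched below in plain Python. The function names and the clipping constant `eps`, which guards against taking the logarithm of zero, are assumptions for this example.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference, typically used for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Average negative log-likelihood for labels in {0, 1}."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_cross_entropy(true_index, probs, eps=1e-12):
    """Negative log of the probability assigned to the correct class."""
    return -math.log(max(probs[true_index], eps))

mse = mean_squared_error([1.0, 2.0], [1.0, 4.0])          # 2.0
bce = binary_cross_entropy([1, 0], [0.9, 0.2])            # small, since predictions are good
cce = categorical_cross_entropy(0, [0.7, 0.2, 0.1])       # -log(0.7)
```

All three return zero (or very nearly zero) for a perfect prediction and grow as predictions move away from the true values.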

Optimizers

Optimizers are used in DNNs to adjust the weights of the neurons during training to minimize the loss function.

Popular optimizers include stochastic gradient descent (SGD), Adam, and Adagrad.

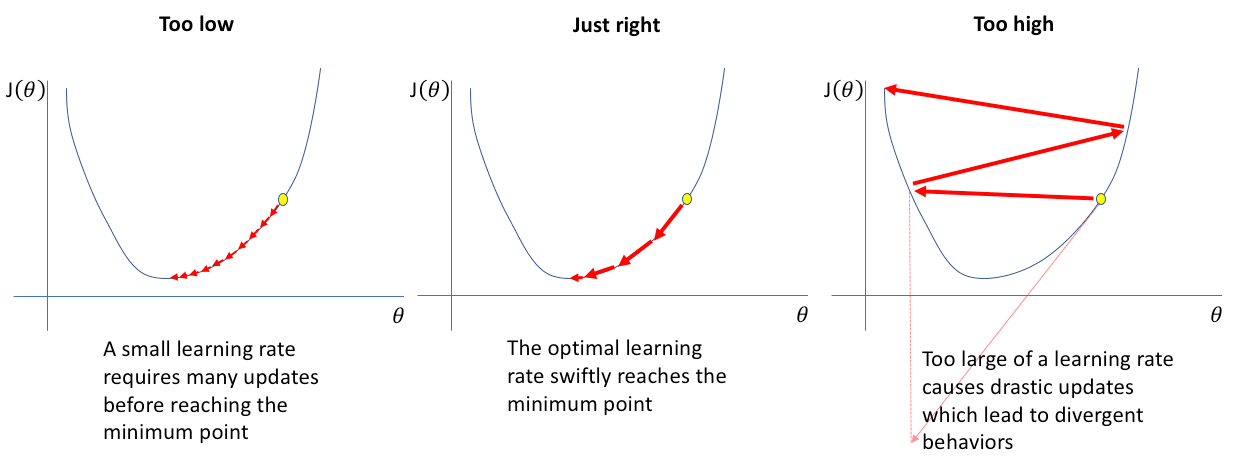

Learning Rate

The learning rate is a hyperparameter of DNNs that controls the step size of the weight updates during training.

The learning rate can significantly affect the training speed and convergence of the network and should be tuned carefully.
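The effect of the learning rate is easy to see on a toy problem: minimizing f(w) = w², whose gradient is 2w and whose minimum is at w = 0. The function, the starting point, and the three learning rates below are chosen purely for illustration.

```python
def minimize_quadratic(lr, steps=50, w=1.0):
    """Run gradient descent on f(w) = w**2 and return the final w."""
    for _ in range(steps):
        grad = 2.0 * w      # derivative of w**2
        w = w - lr * grad   # step against the gradient, scaled by the learning rate
    return w

too_small = minimize_quadratic(lr=0.001)  # barely moves from the starting point
reasonable = minimize_quadratic(lr=0.1)   # ends very close to the minimum at 0
too_large = minimize_quadratic(lr=1.1)    # overshoots further on every step and diverges
```

Real loss surfaces are far more complicated, but the same three regimes appear: slow progress, steady convergence, and divergence.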

Mini-batch Gradient Descent

Mini-batch gradient descent is a variant of gradient descent that updates the network's weights using a small batch of training examples at a time instead of the entire training dataset.

Mini-batch GD can speed up the training of DNNs and improve the network's generalization.
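The batching loop can be sketched by fitting a one-parameter model, y = w·x, with mini-batch updates. The dataset, batch size, learning rate, and function names are assumptions for the example. The data is generated from a known slope of 3.0 so the result is easy to check.

```python
import random

def minibatches(data, batch_size, rng):
    """Shuffle the data, then yield it in consecutive chunks."""
    shuffled = list(data)
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]

def fit_slope(data, batch_size=4, lr=0.05, epochs=50, seed=0):
    """Fit y = w * x by updating w once per mini-batch."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(epochs):
        for batch in minibatches(data, batch_size, rng):
            # Gradient of mean squared error over this batch only.
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return w

# Noise-free toy data with true slope 3.0.
data = [(x / 10, 3.0 * x / 10) for x in range(1, 21)]
w = fit_slope(data)  # ends very close to 3.0
```

With 20 examples and a batch size of 4, the weight is updated five times per pass over the data rather than once, which is where the speed-up comes from.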

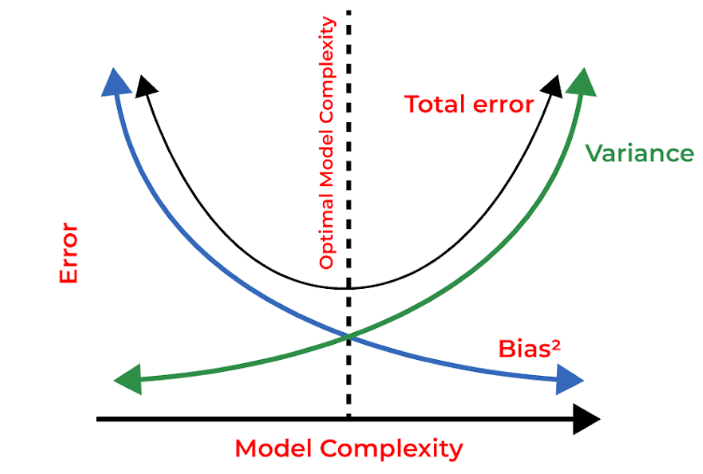

Overfitting and Underfitting

Overfitting and underfitting are common problems in DNN training. Overfitting occurs when the network memorizes the training data, and underfitting occurs when it fails to learn the underlying patterns.

Techniques such as regularization, early stopping, and model selection help prevent overfitting, while a larger model or longer training can help with underfitting.
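Early stopping, one of the techniques mentioned above, can be sketched as a rule applied to the validation loss measured after each epoch. The function name, the `patience` parameter, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch with the best validation loss, stopping
    once the loss has failed to improve for `patience` epochs in a row."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss is rising: likely overfitting
    return best_epoch

# Made-up validation losses: improving at first, then getting worse.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
best = early_stopping_epoch(val_losses)  # epoch 3 has the lowest validation loss
```

In a real training loop the model weights from the best epoch would be saved and restored once training stops.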

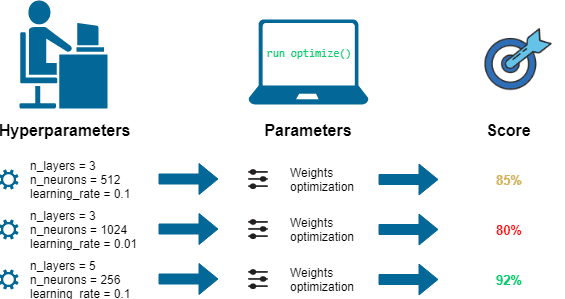

Hyperparameter Tuning

Hyperparameter tuning is the process of finding the best settings for a DNN's hyperparameters, such as the number of hidden layers, the learning rate, and the regularization strength.

Hyperparameter tuning can significantly improve the performance and convergence of the network.
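Grid search is one of the simplest tuning strategies: try every combination from a fixed set of candidate values and keep the best. In the sketch below, `toy_validation_loss` is a hypothetical stand-in for "train a network with these settings and measure its validation loss". The grid values and function names are likewise assumptions.

```python
import itertools

def grid_search(evaluate, grid):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_validation_loss(learning_rate, hidden_layers):
    """Hypothetical stand-in for training a model and measuring validation loss."""
    return (learning_rate - 0.01) ** 2 * 1000 + abs(hidden_layers - 3) * 0.1

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "hidden_layers": [1, 2, 3, 4],
}
best_params, best_score = grid_search(toy_validation_loss, grid)
```

Grid search becomes expensive as the number of hyperparameters grows, since every added hyperparameter multiplies the number of combinations to train.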

Applications of Deep Neural Networks

Computer Vision

DNNs have achieved remarkable success in computer vision tasks such as object recognition, face recognition, image segmentation, and object detection.

DNN-based computer vision systems are used in various applications, such as autonomous vehicles, surveillance systems, and medical imaging.

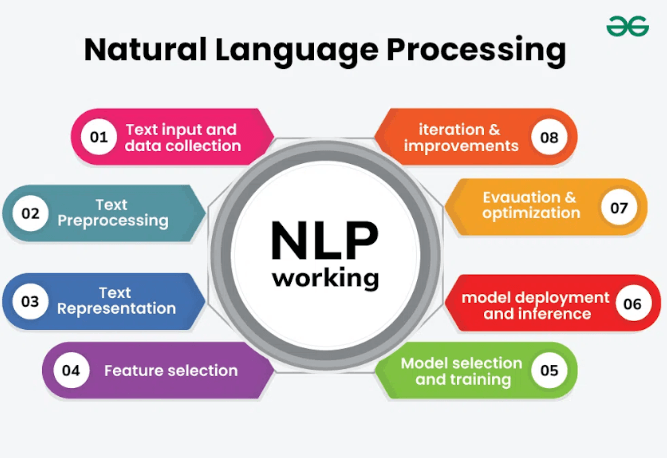

Natural Language Processing

DNNs have also shown great promise in natural language processing (NLP) tasks such as language translation, sentiment analysis, and text generation.

DNN-based NLP systems are used in various applications such as virtual assistants, chatbots, and language learning.

Speech Recognition

DNNs have revolutionized the field of speech recognition by enabling accurate and robust speech-to-text transcription.

DNN-based speech recognition systems are used in various applications such as dictation, voice search, and voice-enabled assistants.

Recommender Systems

DNNs can also be used to build powerful recommender systems that can provide personalized recommendations to users based on their preferences and behavior.

DNN-based recommender systems are used in various applications such as e-commerce, music streaming, and social media.

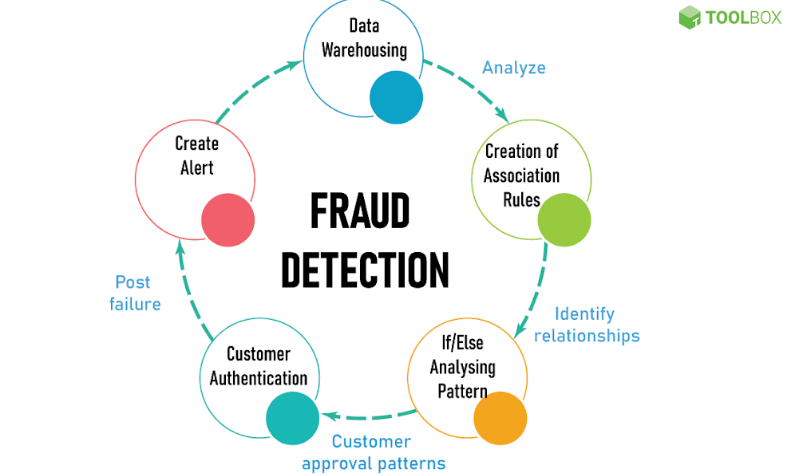

Fraud Detection

DNNs can also detect and prevent fraud in various industries like finance, insurance, and e-commerce.

DNN-based fraud detection systems can analyze large volumes of data to identify patterns and anomalies indicative of fraudulent activity.

Robotics

DNNs are increasingly used in robotics applications such as object manipulation, motion planning, and autonomous navigation.

DNN-based robotic systems can perceive their environment and make intelligent decisions based on the input data.

Gaming

DNNs can also be used to build intelligent agents to play games such as chess, Go, and video games.

DNN-based game-playing agents can learn from experience and improve their performance over time.

Healthcare

DNNs are used in various healthcare applications such as disease diagnosis, medical imaging, and drug discovery.

DNN-based healthcare systems can analyze large amounts of medical data and support clinicians in making accurate and timely diagnoses and treatment decisions.

Challenges in Deep Neural Networks

The challenges in deep neural networks are:

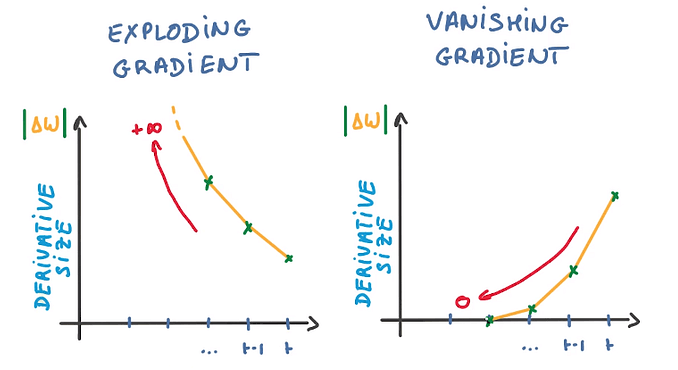

Vanishing and Exploding Gradients

Vanishing and exploding gradients occur when the gradients computed during backpropagation shrink toward zero or grow uncontrollably as they are propagated backward through many layers. In the first case the early layers barely learn, and in the second the weight updates become unstable.

These problems can hinder the convergence and stability of the network and call for careful weight initialization, normalization, and techniques such as gradient clipping.
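One way to see why gradients vanish or explode in deep networks: during backpropagation the gradient is multiplied by one factor per layer, roughly the layer's weight times the slope of its activation function. The sketch below simply multiplies a constant per-layer factor many times. The factors and depth are illustrative assumptions; real networks have a different factor at every layer.

```python
def gradient_scale(per_layer_factor, depth):
    """Multiply the same per-layer factor once for each layer the gradient passes through."""
    scale = 1.0
    for _ in range(depth):
        scale *= per_layer_factor
    return scale

# The slope of a sigmoid is at most 0.25, so with weights near 1 the gradient
# shrinks by at least a factor of four per layer.
vanishing = gradient_scale(0.25, depth=20)  # far below one trillionth of the original
exploding = gradient_scale(1.5, depth=20)   # thousands of times the original
```

Factors only slightly below or above 1 are enough to cause trouble once the network is deep, which is why initialization schemes aim to keep this per-layer factor close to 1.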

Overfitting

Overfitting is a common problem in DNN training that occurs when the network memorizes the training data instead of learning the underlying patterns.

Overfitting can lead to poor generalization and requires various regularization techniques, such as dropout and early stopping, to be addressed.

Adversarial Attacks

Adversarial attacks are a type of cyberattack that targets DNNs by introducing subtle perturbations to the input data that can cause the network to misclassify the data.

Such attacks are a significant concern in security-sensitive applications such as autonomous vehicles and defense systems.
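The idea of a small, targeted perturbation can be illustrated on a linear classifier, in the style of sign-gradient attacks such as the fast gradient sign method. This is a toy sketch: the model is a single linear score rather than a deep network, and the weights, input, and `epsilon` are made-up values.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """Class 1 if the score is positive, otherwise class 0."""
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def sign_gradient_attack(weights, x, epsilon):
    """Shift each input by at most epsilon in the direction that lowers the score.
    For a linear model, the gradient of the score with respect to x is the weights."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights, bias = [1.0, -2.0, 0.5], 0.0   # hypothetical trained classifier
x = [0.3, 0.1, 0.2]                     # classified as 1
x_adv = sign_gradient_attack(weights, x, epsilon=0.1)  # classified as 0
```

No input value changes by more than 0.1, yet the predicted class flips. In high-dimensional inputs such as images, many tiny changes of this kind can add up while remaining invisible to a person.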

Interpretability and Explainability

DNNs are often criticized for being black boxes that are difficult to interpret and explain. This lack of interpretability and explainability can hinder the adoption and trustworthiness of DNN-based systems in various domains such as healthcare and finance.

Future of Deep Neural Networks

- Advancements in Deep Learning: Deep learning is a rapidly evolving field that keeps pushing the boundaries of what is possible with DNNs. Advances in deep learning are expected to lead to more powerful and versatile DNNs that can learn from even larger and more complex datasets.

- Neuromorphic Computing: This is a computing paradigm inspired by the structure and function of biological neurons. Neuromorphic computing is expected to lead to more efficient and robust DNNs that can operate in real-time and low-power environments.

- Explainable AI: Explainable AI is a research field that aims to make DNNs more transparent and interpretable by providing explanations of their decisions and behaviors. Explainable AI is expected to increase the trustworthiness and reliability of DNN-based systems in various domains.

- Quantum Computing: This is a computing paradigm based on the principles of quantum mechanics. Quantum computing may eventually lead to faster and more powerful DNNs that can tackle problems currently intractable for classical computers.

Limitations of Deep Neural Networks

Despite their impressive performance and potential, DNNs have limitations, such as high computational and energy requirements, dependence on large amounts of data, and a limited ability to reason and abstract.

Overcoming these limitations will require new approaches and techniques.

Frequently Asked Questions (FAQs)

What are Deep Neural Networks (DNNs)?

Deep Neural Networks are advanced artificial neural networks with multiple hidden layers, enabling complex data representation and improved pattern recognition.

What is the main difference between shallow and deep neural networks?

Shallow neural networks have only one or a few hidden layers, while deep neural networks contain many, which increases their learning capacity and lets them represent more complex data.

How do Deep Neural Networks learn?

DNNs learn by adjusting weights and biases through backpropagation and optimization algorithms, using training data to minimize prediction errors and improve performance.

What are some popular applications of Deep Neural Networks?

Popular DNN applications include image recognition, natural language processing, speech recognition, and game playing, enhancing AI capabilities across various industries.

What are the limitations of Deep Neural Networks?

DNN limitations include overfitting, high computational requirements, difficulty in training, and the need for large datasets, posing challenges for certain applications.