What is a Loss Function?

A loss function, also known as a cost function or error function, is a method used in machine learning to measure how well a model is performing.

It quantifies the difference between the model's predicted outputs and the actual outputs.

Significance of Loss Function

Loss functions are an essential component in machine learning and artificial intelligence, guiding the learning process of the model. They provide a way to quantify the error of a model so that it can be minimized during training.

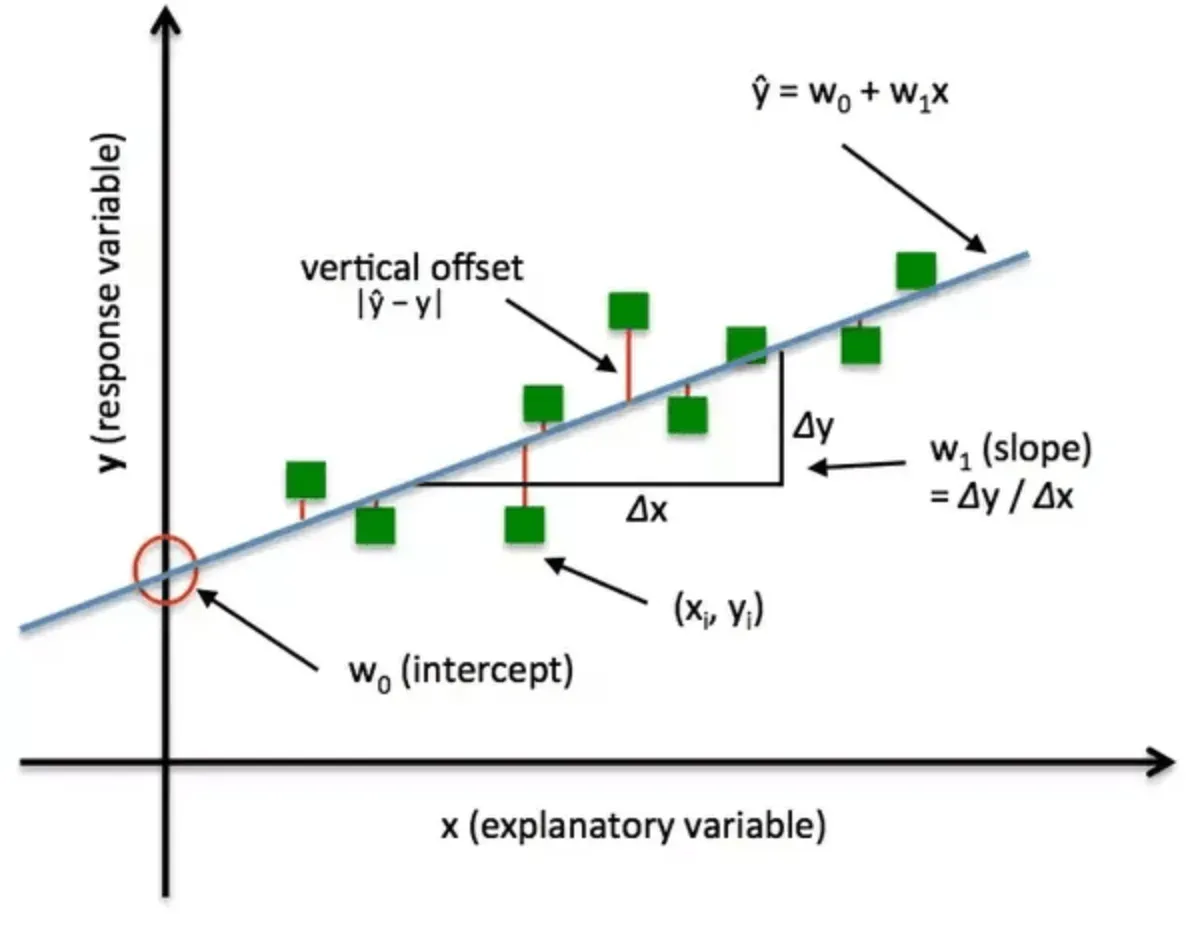

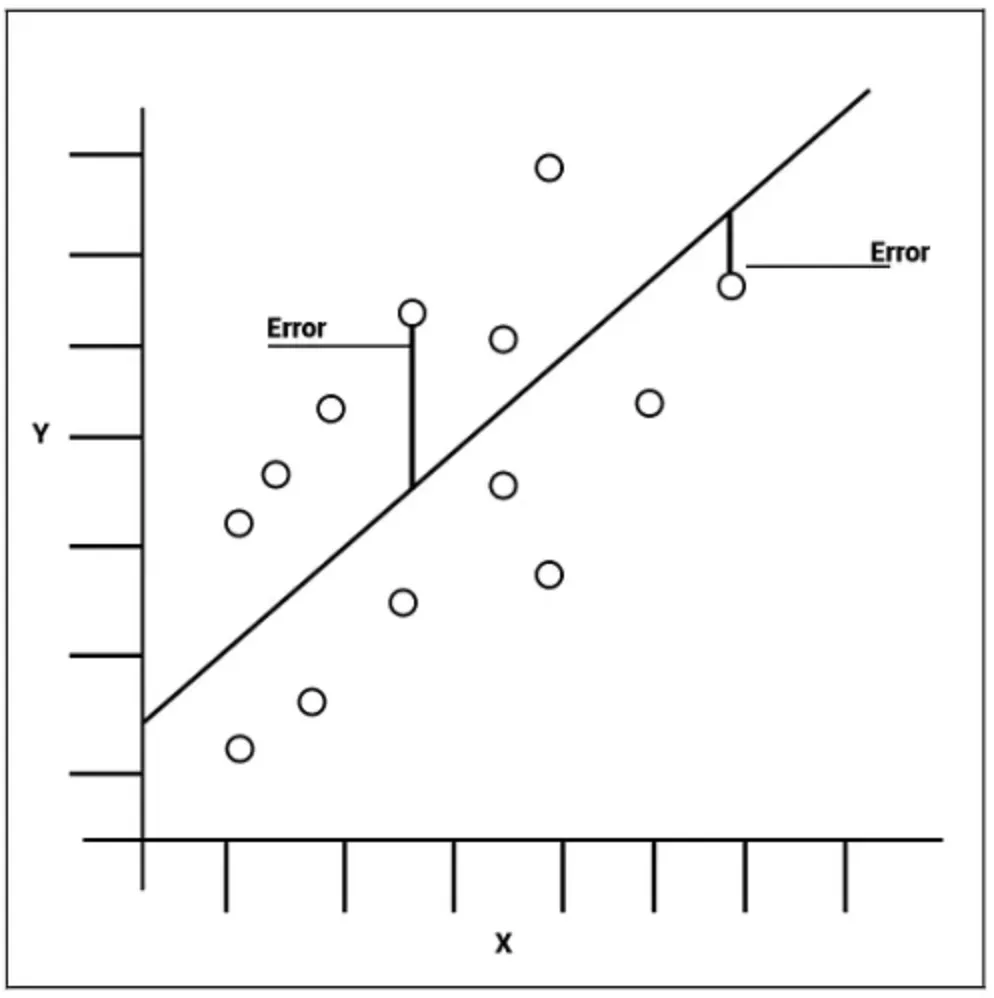

The loss function works by comparing the target variable (actual outcome) with the predicted variable. The "loss" indicates how 'off' the predictions are from the actual results.

The goal of training is to find the parameters that minimize the loss. This optimization is often carried out by an algorithm such as gradient descent.

Key Components of Loss Function

- Predicted Value: The predicted value is the output of the model based on input data. It is determined by the model's current parameters.

- Actual Value: The actual value is the true outcome that we were trying to predict. It is also often known as the ground truth or target variable.

- Loss Value: The loss value is the output of the loss function when comparing the predicted value to the actual value. It indicates the amount of 'error' in the prediction.

- Parameters: The parameters of a machine learning model are the parts that get adjusted during training to improve the predictions. The loss function guides this adjustment.
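The four components above can be seen together in a minimal sketch. The one-parameter model `predict` and the squared-error loss are assumptions chosen for illustration, not part of any particular library.

```python
# Hypothetical one-parameter model: prediction = weight * x
def predict(weight, x):
    return weight * x

# Squared error is used here only as an example loss.
def squared_error(predicted, actual):
    return (predicted - actual) ** 2

weight = 2.0           # parameter: the value training would adjust
x, actual = 3.0, 9.0   # input and actual (ground-truth) value

predicted = predict(weight, x)           # predicted value: 2.0 * 3.0 = 6.0
loss = squared_error(predicted, actual)  # loss value: (6.0 - 9.0)^2 = 9.0
print(predicted, loss)
```

Changing `weight` to 3.0 would make the prediction match the actual value and bring the loss to zero, which is the adjustment training aims for.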

Types of Loss Functions

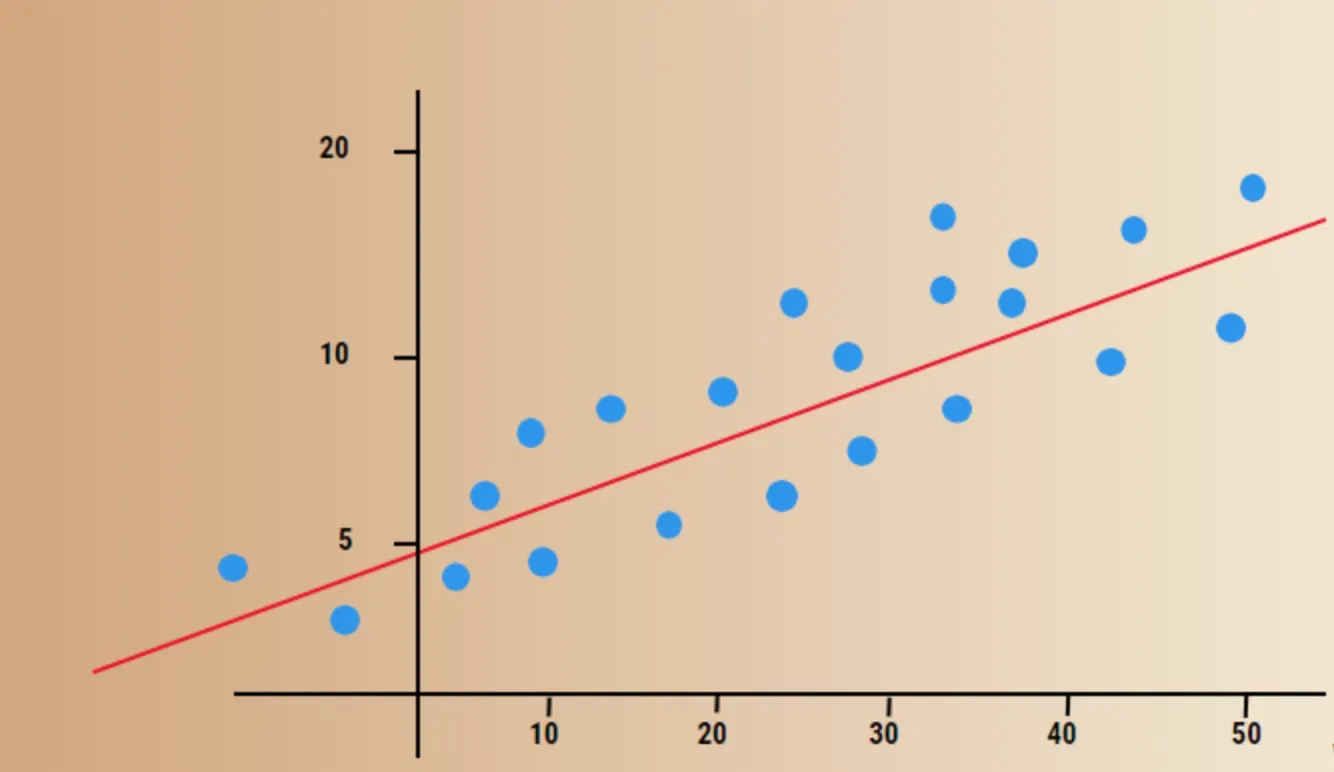

Mean Squared Error

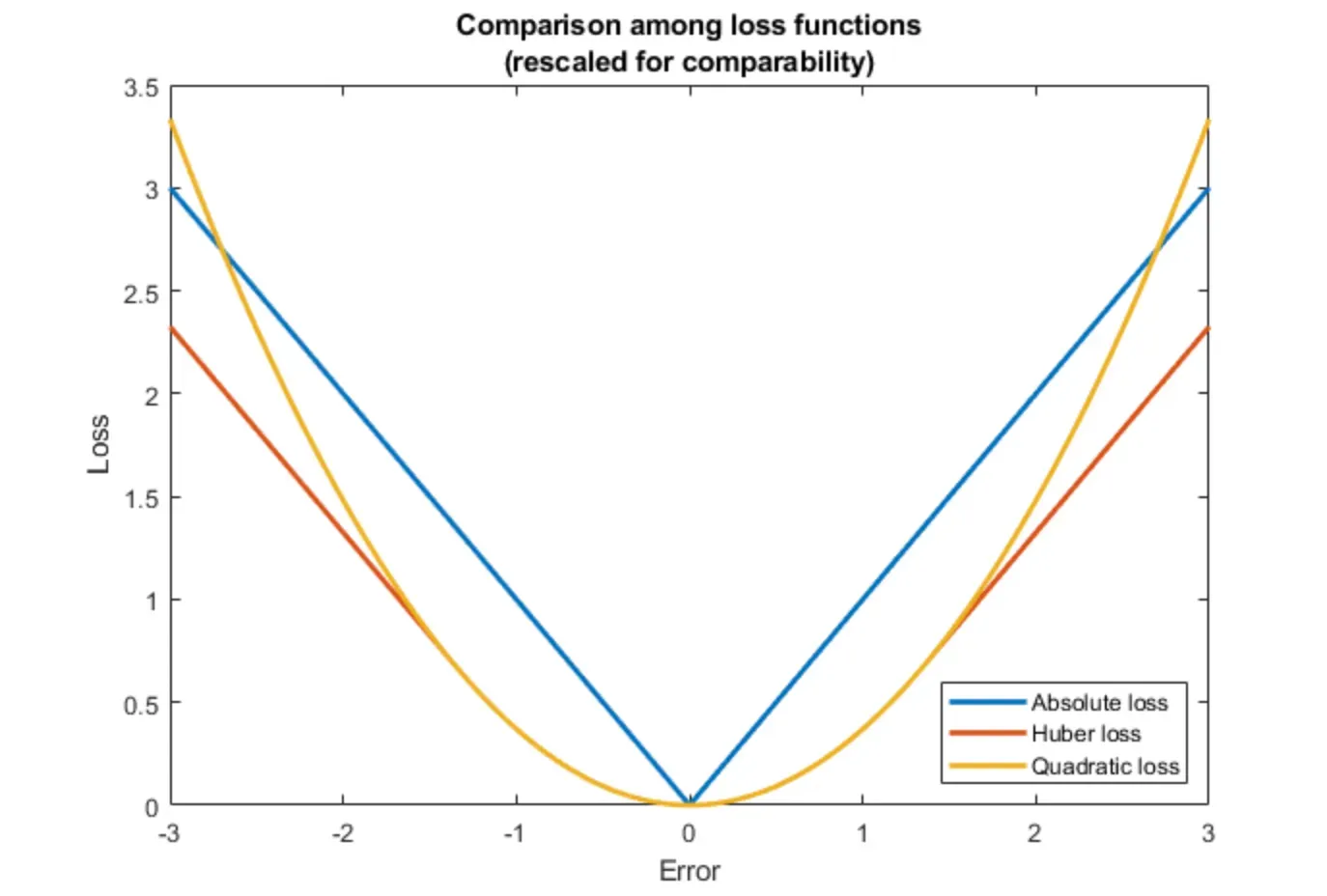

Mean Squared Error (MSE) is a common loss function for regression problems. It computes the average of the squared differences between predicted and actual values, and training attempts to minimize this average.
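As a rough illustration, MSE can be written in a few lines of plain Python. The function name `mean_squared_error` and the sample values are assumptions made for this sketch.

```python
def mean_squared_error(predicted, actual):
    """Average of squared differences between predictions and targets."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    squared_diffs = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return sum(squared_diffs) / len(squared_diffs)

# Illustrative values only
predicted = [2.5, 0.0, 2.0, 8.0]
actual = [3.0, -0.5, 2.0, 7.0]

# Squared differences: 0.25, 0.25, 0.0, 1.0 -> mean = 1.5 / 4
print(mean_squared_error(predicted, actual))  # 0.375
```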

Cross Entropy Loss

Cross entropy loss is used in classification problems, both binary and multi-class. It computes the negative logarithm of the probability the model assigns to the actual class label, so minimizing it pushes the model to assign high probability to the correct class.

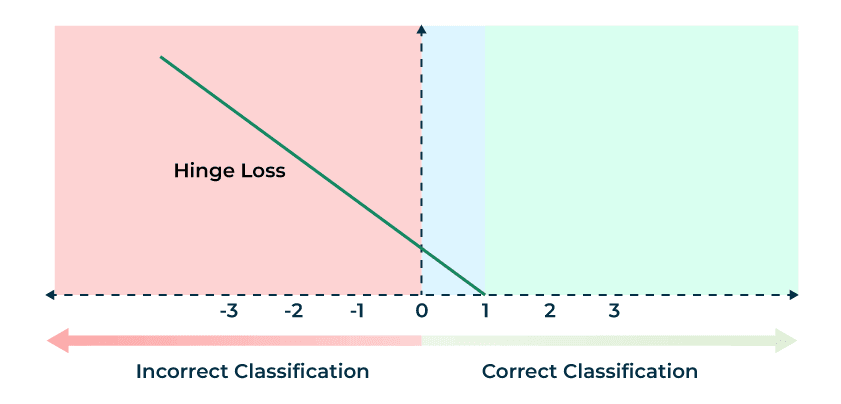
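A minimal sketch of the binary case follows, assuming labels of 0 or 1 and predicted probabilities for class 1. The function name and the clipping constant `eps` are choices made for this example.

```python
import math

def binary_cross_entropy(probabilities, labels, eps=1e-12):
    """Mean negative log-likelihood for labels in {0, 1}."""
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

labels = [1, 0]
confident_right = binary_cross_entropy([0.9, 0.1], labels)  # small loss
confident_wrong = binary_cross_entropy([0.1, 0.9], labels)  # large loss
print(confident_right, confident_wrong)
```

Confident but wrong predictions are penalized far more heavily than confident and correct ones, which is the behavior the logarithm provides.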

Hinge Loss

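Hinge loss is typically used in Support Vector Machines (SVM) and some types of neural networks. It is designed for max-margin classification: instances that are correctly classified with a sufficient margin incur zero loss, while misclassified instances and those inside the margin are penalized linearly.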

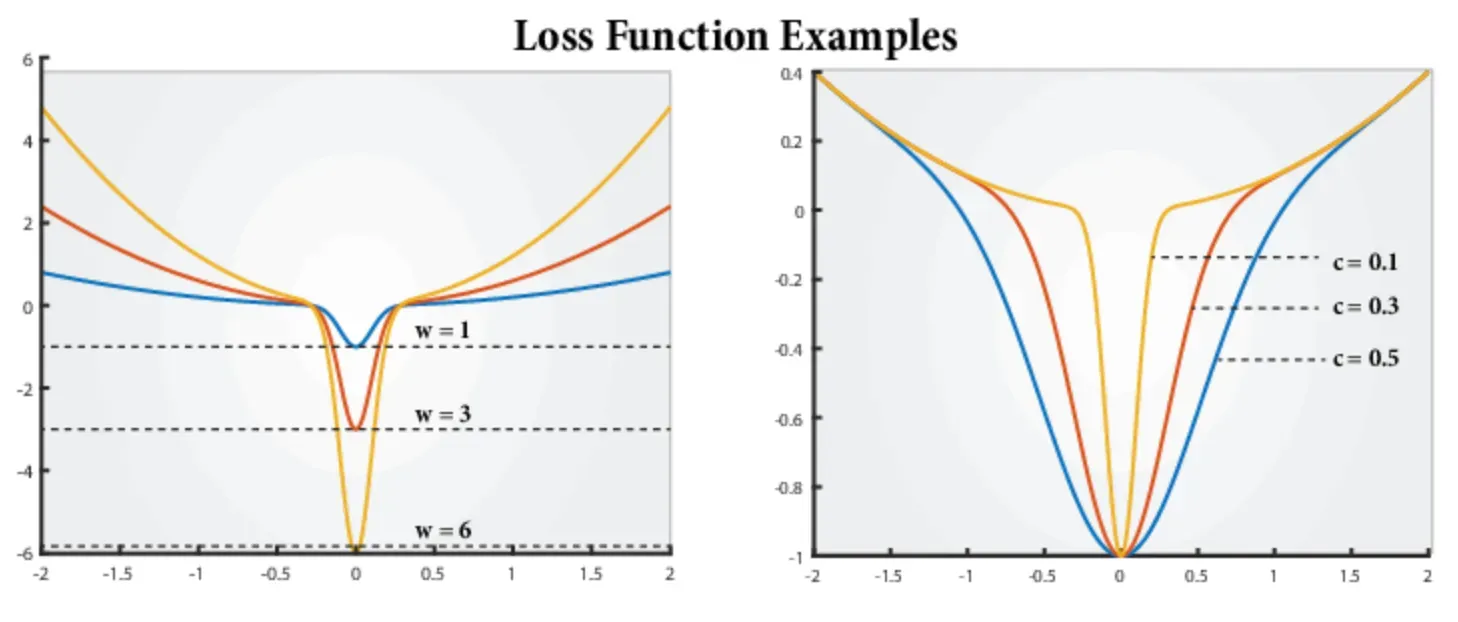
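The sketch below shows one common form of hinge loss, assuming labels encoded as -1 or +1 and raw (unbounded) model scores. The names and sample values are illustrative.

```python
def hinge_loss(scores, labels):
    """Mean hinge loss; labels are -1 or +1, scores are raw model outputs."""
    per_example = [max(0.0, 1.0 - y * s) for s, y in zip(scores, labels)]
    return sum(per_example) / len(per_example)

scores = [2.0, 0.5, -1.0]
labels = [1, 1, 1]

# Per-example losses:
#   score  2.0 -> max(0, 1 - 2.0) = 0.0  (correct, outside the margin)
#   score  0.5 -> max(0, 1 - 0.5) = 0.5  (correct, but inside the margin)
#   score -1.0 -> max(0, 1 + 1.0) = 2.0  (misclassified)
print(hinge_loss(scores, labels))
```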

Log-Cosh Loss

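Log-Cosh loss is the logarithm of the hyperbolic cosine of the prediction error and is used primarily in regression problems. It behaves approximately like squared error for small errors and like absolute error for large ones, so it acts as a smoothed version of Mean Absolute Error that is less sensitive to outliers than Mean Squared Error.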
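A possible implementation is sketched below. It uses the identity log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2) to avoid overflow for large errors; the function names are assumptions for this example.

```python
import math

def log_cosh(x):
    """Numerically stable log(cosh(x))."""
    ax = abs(x)
    return ax + math.log1p(math.exp(-2.0 * ax)) - math.log(2.0)

def log_cosh_loss(predicted, actual):
    """Mean log-cosh of the prediction errors."""
    errors = [p - a for p, a in zip(predicted, actual)]
    return sum(log_cosh(e) for e in errors) / len(errors)

# Small error: close to e^2 / 2. Large error: close to |e| - log(2).
print(log_cosh(0.1), 0.1 ** 2 / 2)
print(log_cosh(10.0), 10.0 - math.log(2.0))
```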

Loss Function in Supervised Learning

Regression Loss Functions

Loss functions for regression tasks, such as Mean Squared Error or Mean Absolute Error, are used to measure the difference between continuous numeric values.

Classification Loss Functions

In classification tasks, loss functions like Cross Entropy or Hinge Loss are used to quantify how far the model's predicted class probabilities or scores are from the actual class labels.

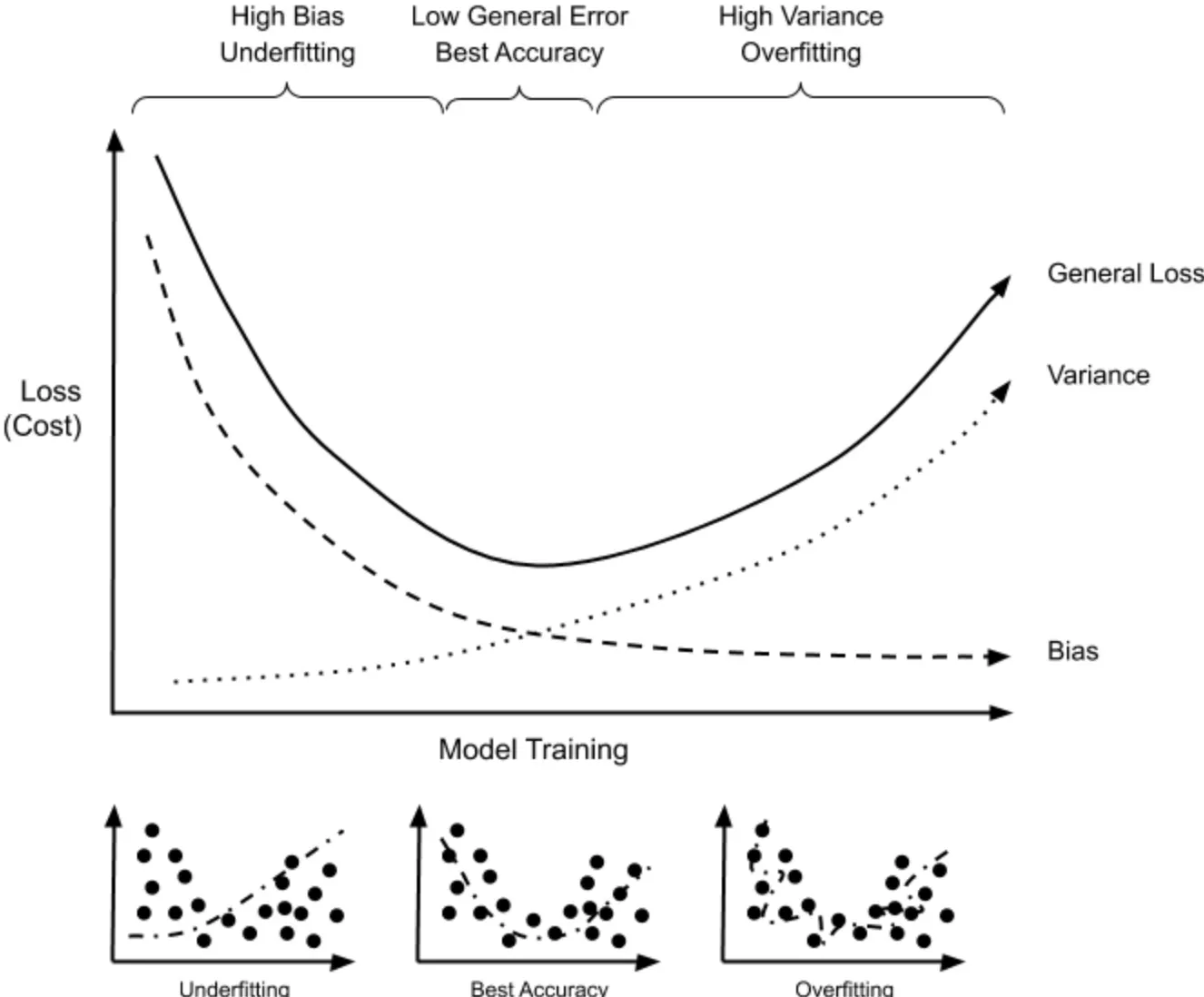

Importance of Correct Loss Function

Choosing a loss function that matches the problem type (classification or regression) is crucial to achieving good results in supervised learning.

Impact of Loss Function on Model Performance

The type of loss function used can greatly impact a model's performance. Different functions will punish certain types of errors differently, affecting the model's predictions.
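To make the point concrete, the sketch below compares Mean Squared Error and Mean Absolute Error on two hypothetical sets of predictions. The data is made up for illustration: one set is slightly off everywhere, the other is exact except for a single large error.

```python
def mse(predicted, actual):
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

def mae(predicted, actual):
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)

actual = [1.0, 2.0, 3.0, 4.0]
evenly_off = [1.5, 2.5, 3.5, 4.5]    # every prediction off by 0.5
one_big_miss = [1.0, 2.0, 3.0, 6.0]  # one prediction off by 2.0

print(mae(evenly_off, actual), mae(one_big_miss, actual))  # 0.5 0.5
print(mse(evenly_off, actual), mse(one_big_miss, actual))  # 0.25 1.0
```

Both sets have the same MAE, but MSE rates the single large miss four times worse, so a model trained with MSE is pushed harder to avoid large individual errors.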

Loss Function vs Objective Function

Understanding Objective Function

The objective function is a broader concept. It is the function that a machine learning model tries to optimize. A loss function can be a part of an objective function.

Difference between Loss Function and Objective Function

While the terms are often used interchangeably, a loss function quantifies the error between predicted and actual values, while an objective function is what the model seeks to optimize during training.

Relation between Loss Function and Objective Function

The loss function is a component of the objective function. The objective function typically aggregates the losses of individual examples, for instance by averaging them over the training data, into a single value that serves as a global measure of model performance.

Regularization in Objective Function

Regularization terms are added to the loss function to make up an objective function. These terms help prevent overfitting by penalizing complexity.
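One common way to build such an objective is to add an L2 penalty on the weights to the data loss. The sketch below assumes MSE as the loss and a hypothetical regularization strength `lam`; both are illustrative choices.

```python
def mse(predicted, actual):
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

def l2_penalty(weights):
    """Sum of squared weights; larger weights mean a larger penalty."""
    return sum(w * w for w in weights)

def objective(predicted, actual, weights, lam=0.1):
    """Objective = data loss + lam * complexity penalty."""
    return mse(predicted, actual) + lam * l2_penalty(weights)

predicted, actual = [1.0, 2.0], [1.0, 4.0]  # MSE = (0 + 4) / 2 = 2.0
weights = [3.0, -4.0]                       # L2 penalty = 9 + 16 = 25.0

print(objective(predicted, actual, weights))  # 2.0 + 0.1 * 25.0
```

With `lam=0.0` the objective reduces to the plain loss, which shows how the loss function sits inside the objective function.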

Effect of Loss Function on Optimization

Role of Loss Function in Gradient Descent

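The loss function is central to optimization algorithms like gradient descent. The gradient of the loss with respect to the parameters points in the direction of steepest increase, so each step moves the parameters in the opposite direction to approach a point of minimal loss.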
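A minimal gradient descent loop might look like the following. It assumes a one-parameter model `y = w * x`, an MSE loss, and made-up data generated with `w = 2`; the learning rate and step count are arbitrary choices for this sketch.

```python
def mse_gradient(w, xs, ys):
    """Derivative of mean((w*x - y)^2) with respect to w."""
    n = len(xs)
    return (2.0 / n) * sum((w * x - y) * x for x, y in zip(xs, ys))

def gradient_descent(xs, ys, learning_rate=0.05, steps=200, w=0.0):
    for _ in range(steps):
        # Step against the gradient to reduce the loss.
        w -= learning_rate * mse_gradient(w, xs, ys)
    return w

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2 * x

w = gradient_descent(xs, ys)
print(w)  # approaches 2.0
```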

Evaluation of Model

The value of the loss function is used to evaluate and compare different models, or different parameter settings within a model.

Iterative Optimization

Machine learning and AI models often use iterative optimization strategies. The training process involves repeatedly adjusting the model's parameters to minimize the loss function.

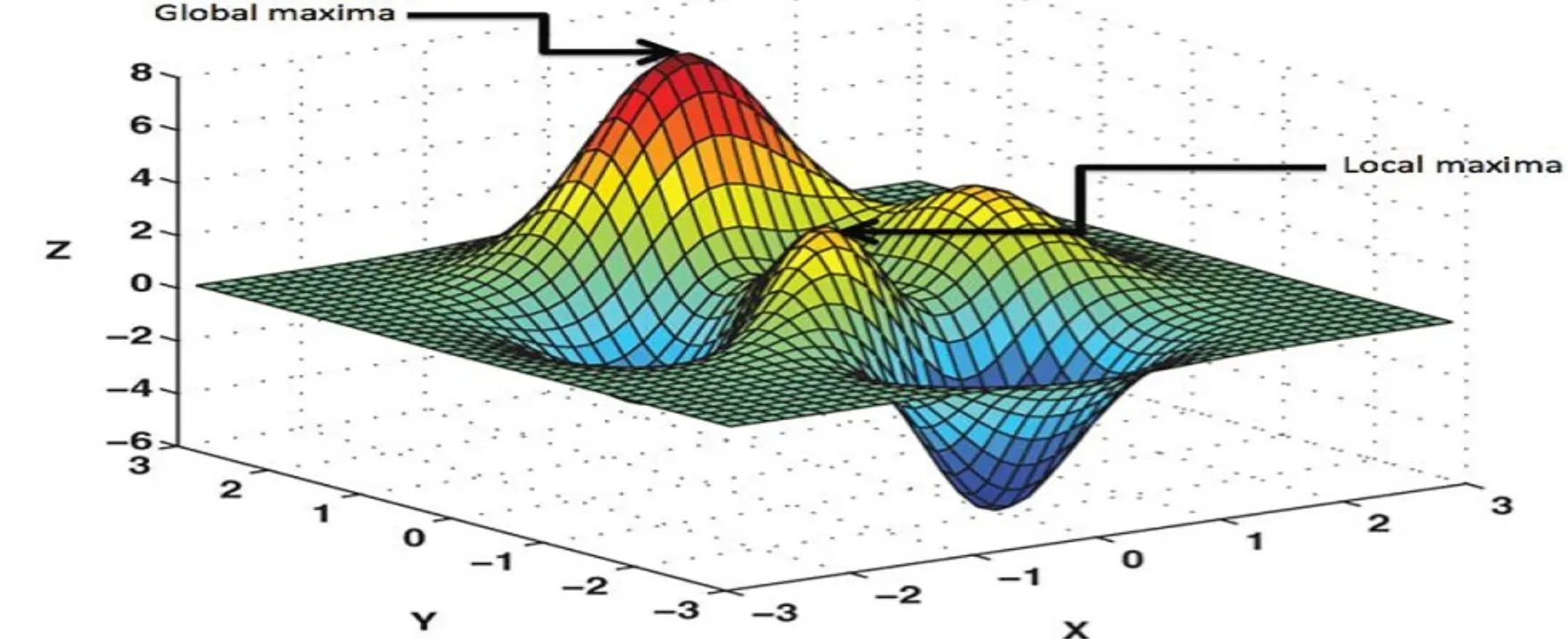

Challenges in Optimization

The shape of the loss function creates several challenges during optimization, including high-dimensional parameter spaces, local minima, and noisy loss landscapes.

Loss Function in Deep Learning

Backpropagation and Loss Function

In deep learning, the loss function plays a crucial role in backpropagation, the mechanism that computes the gradient of the loss with respect to each weight of a neural network so that an optimizer can update the weights.
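The idea can be sketched by hand for a tiny network. The example below assumes a hypothetical model `y = w2 * tanh(w1 * x)` with a squared-error loss, applies the chain rule backwards from the loss, and takes one gradient step; real frameworks automate these derivative computations.

```python
import math

def forward_backward(w1, w2, x, target):
    # Forward pass
    h = math.tanh(w1 * x)       # hidden activation
    y = w2 * h                  # network output
    loss = (y - target) ** 2    # squared-error loss

    # Backward pass: chain rule, starting from the loss
    dloss_dy = 2.0 * (y - target)
    dloss_dw2 = dloss_dy * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * (1.0 - h * h) * x  # d tanh(z)/dz = 1 - tanh(z)^2
    return loss, dloss_dw1, dloss_dw2

w1, w2, learning_rate = 0.5, -0.3, 0.1  # arbitrary starting values
loss_before, g1, g2 = forward_backward(w1, w2, x=1.0, target=1.0)

# One gradient step using the backpropagated gradients
w1 -= learning_rate * g1
w2 -= learning_rate * g2
loss_after, _, _ = forward_backward(w1, w2, x=1.0, target=1.0)

print(loss_before, loss_after)  # the loss decreases after the update
```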

Differentiation of Loss Function

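In deep learning, the loss function needs to be differentiable, at least almost everywhere, with respect to the model's outputs, so that its gradient can be computed. This is important for gradient-based optimization methods. Losses with isolated kinks, such as hinge loss, are handled in practice with subgradients.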
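A simple way to see what differentiability provides is to compare an analytic derivative with a finite-difference estimate. The sketch below does this for a squared-error loss; the helper names and the step size `h` are assumptions for illustration.

```python
def squared_error(predicted, actual):
    return (predicted - actual) ** 2

def squared_error_grad(predicted, actual):
    """Analytic derivative of the loss with respect to the prediction."""
    return 2.0 * (predicted - actual)

def numerical_grad(loss_fn, predicted, actual, h=1e-6):
    """Central finite-difference estimate of the same derivative."""
    upper = loss_fn(predicted + h, actual)
    lower = loss_fn(predicted - h, actual)
    return (upper - lower) / (2.0 * h)

predicted, actual = 3.0, 1.0
analytic = squared_error_grad(predicted, actual)          # 2 * (3 - 1) = 4.0
numeric = numerical_grad(squared_error, predicted, actual)
print(analytic, numeric)  # the two values should agree closely
```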

Optimization of Deep Neural Networks

The choice and behavior of the loss function can significantly affect the performance and optimization dynamics of deep neural networks.

Learning Rate and Loss Function

The learning rate, which controls how much the parameters are updated in response to the estimated error, interacts closely with the loss function. A learning rate that is too large can cause training to diverge, while one that is too small makes learning slow.
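The effect can be illustrated on a toy loss. The sketch below assumes the loss `w^2` (gradient `2 * w`) and runs a fixed number of gradient descent steps with three arbitrary learning rates.

```python
def run_gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize the toy loss w^2, whose gradient is 2 * w."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2.0 * w
    return w

too_large = run_gradient_descent(1.1)    # each step multiplies w by -1.2
reasonable = run_gradient_descent(0.1)   # each step multiplies w by 0.8
too_small = run_gradient_descent(0.001)  # each step multiplies w by 0.998

print(too_large)   # magnitude grows: training diverges
print(reasonable)  # close to the minimum at 0
print(too_small)   # barely moved from the starting value 1.0
```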

Frequently Asked Questions (FAQs)

What is a loss function and why is it important?

A loss function is a method used in machine learning to measure how well a model's predictions align with actual outcomes. It's important as it guides the model's learning process and helps to optimize the model parameters.

How does a loss function work?

A loss function works by comparing the predicted value outputted by the model with the actual value. The difference, also known as the 'error', is quantified by the loss function.

What are the common types of loss function?

Common types of loss function include Mean Squared Error (MSE), used for regression problems, and Cross Entropy Loss, used for classification problems.

What is the difference between a loss function and objective function?

While often used interchangeably, the loss function measures the model's error, while the objective function is what the model seeks to optimize during training. The loss function is usually a part of the objective function.

How does a loss function impact the performance of a machine learning or AI model?

The type and implementation of the loss function can greatly influence a model's performance. It determines how the model's errors are penalized during optimization, which in turn is reflected in the quality of its predictions.