What is GPT (Generative Pre-Trained Transformer)?

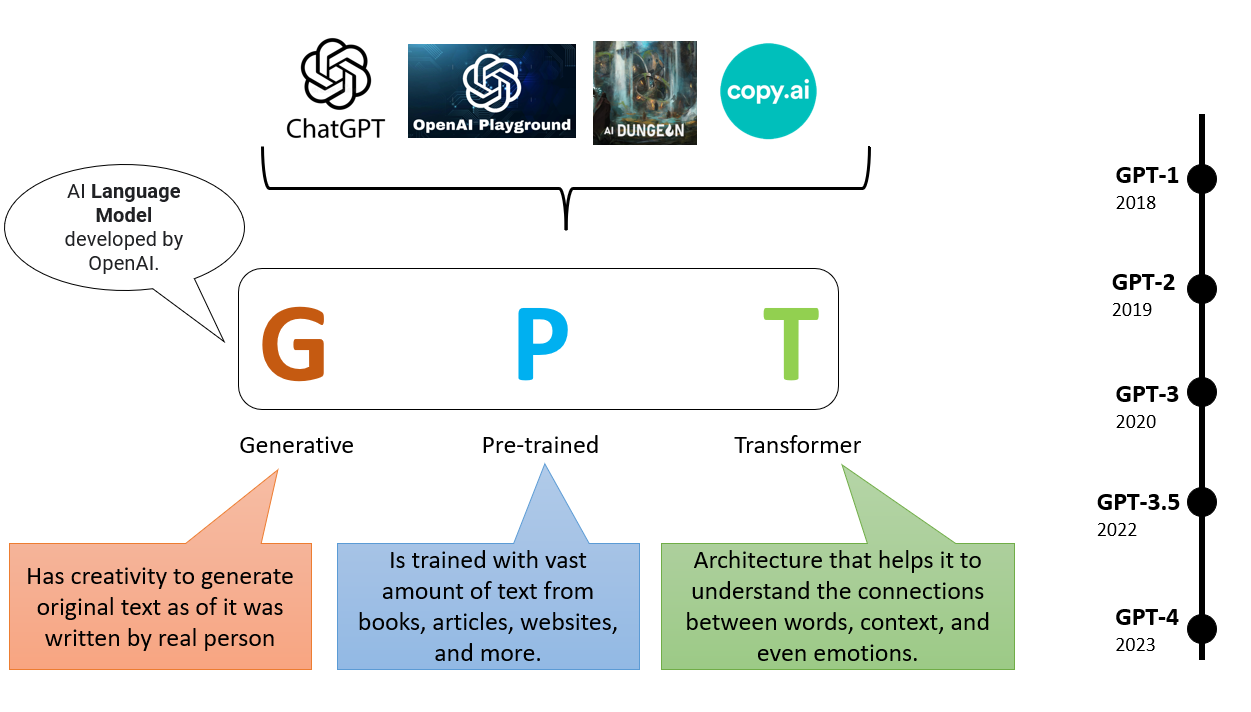

Generative Pre-Trained Transformer (GPT) is a type of AI language model that uses machine learning to generate human-like text. It can create paragraphs that seem like they were written by a human, and it does this by predicting the next word in a sequence. Think of it like autocomplete for your sentences, but much smarter.

Basic Principles behind GPT

GPT uses something called a Transformer architecture. It's built to understand context in a text sequence, so it knows the difference between, say, apple the fruit and Apple the company. Pretty cool, right?

Key Components of GPT

The key components of GPT are the following:

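- Transformer Architecture: Uses stacked layers of self-attention to process all tokens of the input in parallel during training, which improves efficiency and scalability. GPT uses a decoder-only Transformer: the original Transformer pairs an encoder that reads the input with a decoder that generates output, but GPT keeps only the decoder stack, and each token can attend only to the tokens that come before it.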

- Tokenization: Breaks text into smaller units called tokens (often word fragments rather than whole words), which the model processes as a sequence of numeric IDs.

- Pre-training: Trains on vast amounts of text data to learn language patterns, grammar, and facts.

- Fine-tuning: Adjusts the model on specific datasets to improve performance on particular tasks or domains.

- Contextual Understanding: Captures context over long text sequences, allowing for coherent and contextually relevant responses.

- Text Generation Capability: Produces human-like text based on given prompts, making it useful for various applications.

- Multimodal Inputs (GPT-4): Processes and integrates information from multiple input types, like text and images.
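GPT models tokenize text with byte-pair encoding (BPE), which builds a vocabulary of subword pieces by repeatedly merging the most frequent adjacent pair of symbols. The sketch below is a simplified, hypothetical illustration of that merge-learning step only: it works on characters instead of bytes, splits words on spaces, and the function names (`learn_bpe` and its helpers) are our own, not any tokenizer library's API.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words and return the most common one."""
    pairs = Counter()
    for symbols in words:
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += 1
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` inside each word with one merged symbol."""
    merged = []
    for symbols in words:
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged.append(out)
    return merged


def learn_bpe(corpus, num_merges):
    """Start from single characters and greedily learn `num_merges` merge rules."""
    words = [list(w) for w in corpus.split()]
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:  # every word is already a single symbol
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


merges, tokens = learn_bpe("low lower lowest low", num_merges=2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
print(tokens)  # [['low'], ['low', 'e', 'r'], ['low', 'e', 's', 't'], ['low']]
```

On this tiny corpus the frequent stem `low` becomes a single token after two merges, while the rarer endings stay split into smaller pieces, which is the behavior that lets a subword vocabulary cover unseen words.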

Who Developed GPT?

In this section, you’ll learn who developed GPT.

The Role of OpenAI in the Development of GPT

GPT was developed by OpenAI, an artificial intelligence research lab. Its researchers are like the wizards behind this clever tech, constantly innovating and pushing the boundaries of what AI can do.

Key Contributors in the Development of GPT

The team behind GPT is filled with some of the brightest minds in AI, including researchers like Alec Radford and Ilya Sutskever. They've truly revolutionized the world of machine learning and natural language processing.

When Was GPT Developed and Its Evolution Over Time?

The evolution of GPT is as follows:

The Chronology of GPT Development

The journey began with GPT-1 in 2018, which was quickly succeeded by the more advanced GPT-2 in 2019 and GPT-3 in 2020. Now we're at GPT-4, released in 2023, and it's safe to say the future looks promising!

The Evolution from GPT-1 to GPT-4

With each iteration, GPT has grown larger and more capable. GPT-1 had about 117 million parameters, GPT-2 had 1.5 billion, and GPT-3 jumped to a whopping 175 billion. OpenAI has not publicly disclosed GPT-4's parameter count.

Why was GPT Developed?

Here are the reasons behind the development of GPT.

Goals and Objectives of GPT

OpenAI developed GPT with a simple goal in mind: to create an AI that can understand and generate human-like text. They wanted to enhance the capabilities of machine learning and bring us one step closer to truly intelligent AI.

Use Cases that GPT was Designed to Address

GPT is incredibly versatile. It's used in applications ranging from chatbots and customer service to content creation and even video game dialogue. If there's a need for smart, automated text, GPT is likely part of the solution.

Where is GPT Used?

Applications of GPT in Different Industries

GPT has found its way into a plethora of industries, including healthcare, where it is being explored to support tasks such as patient diagnosis; finance, where it helps provide real-time customer support; and education, where it aids in personalized learning.

Examples of GPT Implementations in Real-World Scenarios

Think about the chatbot that helps you with online shopping, or the AI assistant you chat with on your phone. Many of these are built on GPT or similar models, tirelessly working to make our lives easier.

How Does GPT Work?

GPT might seem like magic, but it's all science, we promise. Let's see how it functions.

Overview of GPT Architecture

GPT is built around a Transformer-based architecture. It's trained on large amounts of text data, learning to predict the next word in a sequence and in turn, generating cohesive, human-like text.
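The next-word objective described above can be written as a short worked equation. Using our own notation (not from the original article), for a token sequence $x_1, \dots, x_T$ and model parameters $\theta$, the model factors the probability of a whole text into one next-token prediction per position, and pre-training adjusts $\theta$ to minimize the negative log-likelihood of each actual next token:

```latex
p_\theta(x_1, \dots, x_T) = \prod_{t=1}^{T} p_\theta(x_t \mid x_1, \dots, x_{t-1})

\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_1, \dots, x_{t-1})
```

A low loss means the model assigns high probability to the word that actually came next, which is exactly the skill it later uses to generate text.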

Understanding the Transformer Model in GPT

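The Transformer is the heart of GPT. Its self-attention layers let each token weigh how relevant every earlier token in the sequence is, so the model can draw on the full preceding context rather than just the last few words. This is what enables GPT to generate accurate and contextually appropriate responses.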
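Here is a minimal sketch of the core computation, single-head scaled dot-product attention with a causal mask, in plain Python. It assumes the query, key, and value vectors are already given; a real GPT layer computes them from the input with learned weight matrices, runs many heads in parallel, and adds feed-forward layers and residual connections, all omitted here. Instead of filling masked scores with negative infinity, the loop simply skips future positions, which has the same effect.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t may attend only to positions 0..t, which is what lets a
    decoder-only model like GPT be trained to predict the next token.
    Returns the output vectors and the attention weights for each position.
    """
    d = len(queries[0])
    outputs, weights = [], []
    for t, q in enumerate(queries):
        # Scores for visible positions only (earlier tokens and t itself).
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w[s] * values[s][j] for s in range(t + 1))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs, weights


# Three toy 2-dimensional token vectors, reused as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = causal_self_attention(x, x, x)
for t, row in enumerate(w):
    print(t, [round(v, 3) for v in row])
```

Because the first position can see only itself, its attention weights are `[1.0]` and its output equals its own value vector; the last position spreads its attention over all three tokens.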

Process of Text Generation in GPT

GPT starts by reading the input text, then it uses what it has learned during training to predict the next word, and the next, and so on, until it has a complete response.
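The predict-append-repeat loop can be sketched with a deliberately tiny stand-in for the trained model. In this hypothetical example a bigram table (which word most often follows which) plays the role of GPT's learned predictor. Unlike GPT, it looks only at the last word rather than the whole context, and it always picks the single most likely word (greedy decoding), whereas real systems often sample from the predicted distribution instead.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Toy stand-in for a trained model: count which word follows which."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_tokens=5):
    """Greedy autoregressive decoding: predict the next word, append it, repeat."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:
            break  # the toy model never saw this word followed by anything
        next_token = candidates.most_common(1)[0][0]
        tokens.append(next_token)
    return " ".join(tokens)


corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(generate(model, "the", max_new_tokens=3))
```

Starting from `the`, the loop picks `cat` (seen twice after `the`), then `sat`, then `on`, each time feeding its own output back in as the new context.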

Training and Fine-Tuning Process in GPT

GPT is trained on vast amounts of text data. Then, it's fine-tuned on a specific task, like translation or summarization, using a smaller, task-specific dataset.

Limitations and Challenges of GPT

As amazing as GPT is, it's not without its limitations.

Accuracy and Reliability of GPT Outputs

While GPT can generate impressive text, it sometimes makes mistakes, like generating incorrect or nonsensical information. Remember, it's an AI, not a human.

Ethical and Bias Concerns with GPT

GPT learns from data available on the internet, which can sometimes include biased or inappropriate content. It's a complex issue that researchers are actively working to address.

GPT and Other Language Models

GPT is just one of many language models out there. Let's see how it compares.

Comparing GPT with Other Transformer-Based Models (BERT, T5)

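While GPT, BERT, and T5 all use the Transformer architecture, they use different parts of it. BERT uses only the encoder and reads text in both directions at once, which suits tasks like question-answering and classification. T5 uses the full encoder-decoder design and frames every task as text-to-text. GPT uses only the decoder and reads left to right, which is why it excels at text generation.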
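One concrete way to see this design difference is the attention mask each model uses. The sketch below uses a hypothetical helper, not any library's API, to print which positions each token may look at: GPT's decoder uses a causal mask, BERT's encoder uses a full bidirectional one, and T5 combines both, with a bidirectional encoder feeding a causal decoder.

```python
def attention_mask(n, causal):
    """Return an n x n grid where 1 means the row position may attend to the column position.

    causal=True  -> GPT-style decoder: each token sees itself and earlier tokens.
    causal=False -> BERT-style encoder: every token sees the whole sequence.
    """
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]


for name, causal in [("GPT (causal)", True), ("BERT (bidirectional)", False)]:
    print(name)
    for row in attention_mask(4, causal):
        print(" ".join(map(str, row)))
```

The lower-triangular pattern is what makes GPT a natural left-to-right generator, while BERT's all-ones mask lets it use context on both sides of a word.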

GPT vs LSTM-Based Models

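GPT and LSTM-based models like ELMo both model language, but they do so in different ways. An LSTM reads text one token at a time and carries a running memory (its hidden state) forward, which makes training hard to parallelize and can weaken information from far back in the sequence. GPT's self-attention looks at all earlier tokens directly, which helps it track long-range context and makes it especially strong at generating text.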

GPT in AI Research

The cutting-edge GPT is not just a tool, but also a subject of intense research.

GPT's Influence on Recent AI Research Trends

GPT has greatly influenced AI research, with many studies focusing on understanding its inner workings or finding ways to improve its performance and minimize its limitations.

Role of GPT in Advancing AI

GPT's ability to generate human-like text has significantly advanced the field of AI. It has set a new benchmark for language models and inspired countless innovations.

Frequently Asked Questions (FAQs)

What is a Transformer in GPT?

A Transformer is a neural network architecture built around self-attention. GPT uses it to understand context and generate human-like text.

What are some real-world uses of GPT?

GPT is used in chatbots, content creation, digital assistants, video game dialogue, and much more.

What are the limitations of GPT?

GPT can sometimes generate inaccurate information and it can also unintentionally propagate biases found in its training data.

How is GPT different from other language models like BERT or LSTM?

GPT is specifically designed for text generation, while BERT is often used for tasks like question-answering and LSTMs are excellent for sequence prediction.