What is BERT?

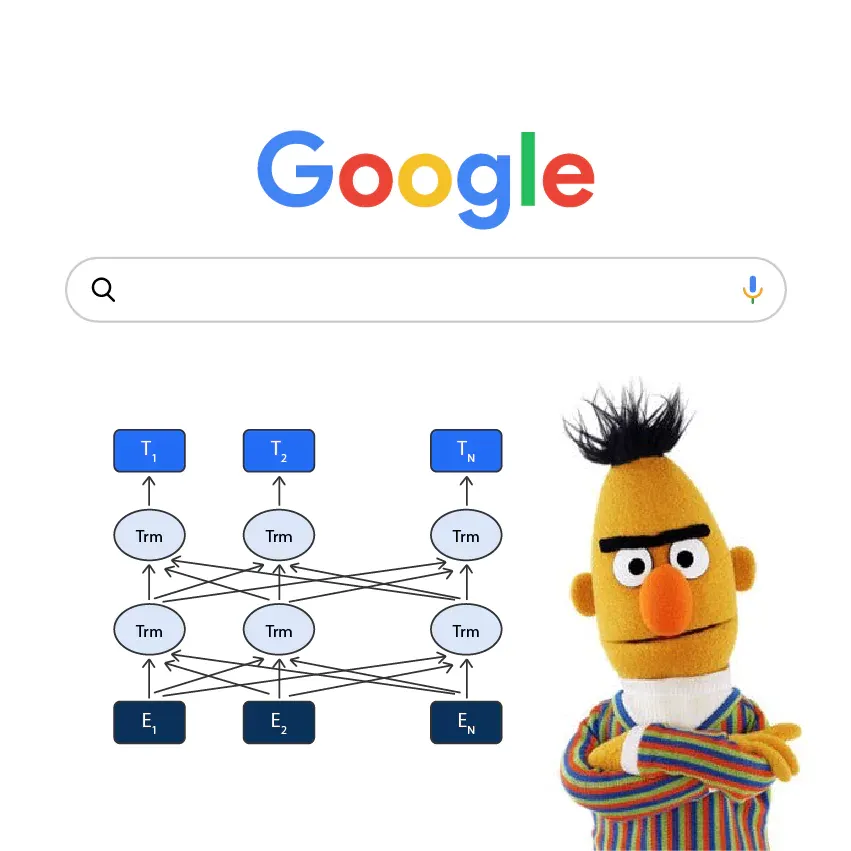

Short for Bidirectional Encoder Representations from Transformers, BERT is a natural language processing (NLP) model developed by Google. It's like the Sherlock Holmes of AI, understanding and interpreting text with uncanny accuracy.

History and Development of BERT

BERT's origin story dates back to 2018, when it was introduced by a team of researchers at Google AI Language. By combining the powers of deep learning and bidirectional context, BERT quickly outperformed existing models on a wide range of NLP tasks.

Importance of BERT in Natural Language Processing

BERT has revolutionized NLP by enabling machines to better understand the nuances of human language, such as context, sentiment, and syntax. It's like a decoder ring for AI, unlocking the secrets of language.

Understanding BERT's Architecture

At the heart of modern NLP lies BERT, a model built on the Transformer architecture. BERT uses only the encoder side of the Transformer and relies on self-attention to capture the contextual meaning of each word.

The Transformer Model

Behind BERT's impressive abilities lies the Transformer model. Introduced in 2017, the Transformer is an attention-based neural network architecture. It eschews traditional recurrent and convolutional layers in favor of a more efficient, self-attentive design that can process all the words in a sequence in parallel.

Encoder and Decoder Structure

The original Transformer is composed of an encoder and a decoder. The encoder analyzes input text, while the decoder generates output text. BERT, however, uses only the encoder part of the Transformer, as it focuses on understanding text rather than generating it.

Self-Attention Mechanism

One of BERT's most important tools is the self-attention mechanism. It lets BERT weigh how relevant every other word in a sentence is to the word it is currently processing, so each word's representation reflects its context on both the left and the right.
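To make the idea of weighing words concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not BERT's actual implementation: a real BERT layer first passes each token through learned query, key, and value projections and runs several attention heads in parallel, whereas this sketch reuses the same made-up vectors for all three roles. The function names and numbers are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over one sequence.

    Each argument holds one vector (a list of floats) per token.
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        # New representation: attention-weighted average of the value vectors.
        mixed = [sum(w_j * v[d] for w_j, v in zip(w, values))
                 for d in range(len(values[0]))]
        weights.append(w)
        outputs.append(mixed)
    return outputs, weights

# Toy 2-dimensional vectors for three tokens (made-up numbers).
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(vectors, vectors, vectors)
```

Each row of `weights` sums to 1, and tokens whose vectors point in similar directions receive larger weights. Because every token attends to every other token, context flows in from both sides of the sentence.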

BERT's Pre-training Objectives

BERT is pre-trained on two objectives that teach it to predict words from their context and to understand relationships between sentences. Together they give BERT the context awareness it later applies to downstream tasks. Here are BERT's pre-training objectives.

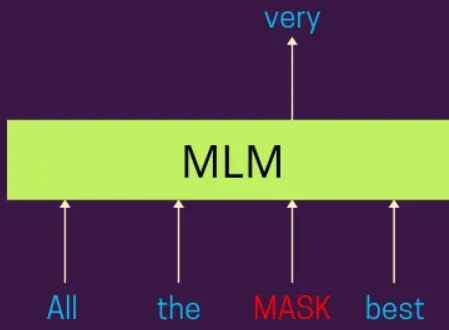

Masked Language Model (MLM)

BERT's training process involves a technique called Masked Language Modeling. It's like a game of "fill-in-the-blank," where BERT learns to predict missing words in a sentence based on the surrounding context.
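The fill-in-the-blank setup can be sketched in a few lines of Python. This is a simplified illustration, not BERT's training code: it works on whole words rather than WordPiece sub-tokens, and `mask_tokens` and its parameters are hypothetical names. The proportions follow the original BERT paper, which selects about 15% of tokens and replaces 80% of those with `[MASK]`, 10% with a random token, and leaves 10% unchanged.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Build one masked-language-model training example.

    Returns (inputs, labels). labels[i] holds the original token at
    positions the model must predict and None everywhere else.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model is scored on this position
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # usually hide the word
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # sometimes swap in a random word
            else:
                inputs.append(tok)                # sometimes leave it unchanged
        else:
            inputs.append(tok)
            labels.append(None)  # not a prediction target
    return inputs, labels

sentence = "the cat sat on the mat".split()
toy_vocab = ["dog", "ran", "hat", "under"]  # made-up vocabulary
masked_inputs, targets = mask_tokens(sentence, toy_vocab, mask_prob=0.3)
```

During pre-training, the model sees `masked_inputs` and is penalized only at positions where `targets` is not `None`. Since it can look at words on both sides of each blank, it learns bidirectional context.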

Next Sentence Prediction (NSP)

BERT also trains on Next Sentence Prediction, a task that involves predicting whether the second of two sentences actually follows the first in the original text. This helps BERT understand relationships between sentences, turning it into a master of context.
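As a rough sketch of how such training pairs could be assembled, the Python below builds labeled sentence pairs from a small corpus. It assumes the recipe from the original BERT paper, where about half the pairs use the true next sentence and half use a random sentence from elsewhere in the corpus. The function names and the toy documents are hypothetical.

```python
import random

def make_nsp_pairs(documents, seed=0):
    """Create (sentence_a, sentence_b, label) triples.

    documents: at least two documents, each a list of sentences.
    label 1 means sentence_b really follows sentence_a; label 0 means
    sentence_b was drawn at random from a different document.
    """
    rng = random.Random(seed)
    pairs = []
    for doc_idx, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            if rng.random() < 0.5:
                pairs.append((doc[i], doc[i + 1], 1))
            else:
                others = [d for j, d in enumerate(documents) if j != doc_idx]
                pairs.append((doc[i], rng.choice(rng.choice(others)), 0))
    return pairs

def format_pair(sentence_a, sentence_b):
    """Join a pair using BERT's [CLS] and [SEP] special tokens."""
    return f"[CLS] {sentence_a} [SEP] {sentence_b} [SEP]"

docs = [
    ["I opened the fridge.", "It was empty.", "So I went shopping."],
    ["The match started late.", "Rain had flooded the pitch."],
]
nsp_pairs = make_nsp_pairs(docs)
```

The model reads the formatted pair and uses the representation at the `[CLS]` position to classify it as a true continuation or not.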

BERT Variants and Model Sizes

BERT and its variants have reshaped NLP through increasingly refined Transformer models. In this section, discover how BERT and its evolving counterparts improve the ability to understand human language.

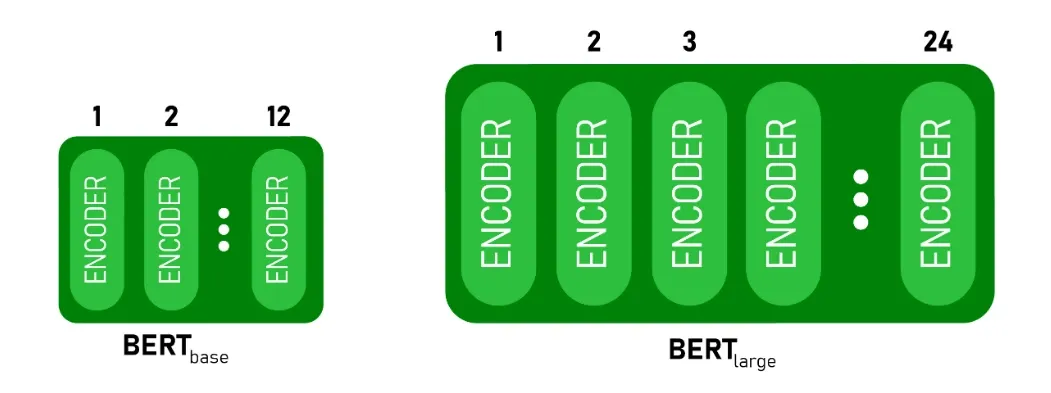

BERT Base and BERT Large

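BERT comes in two main sizes: BERT Base and BERT Large. BERT Base is the standard model, with 12 Transformer encoder layers, a hidden size of 768, and about 110 million parameters. BERT Large is its bigger, more powerful sibling, with 24 layers, a hidden size of 1,024, and about 340 million parameters.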
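To get a feel for how much bigger BERT Large is, the sketch below estimates parameter counts from the layer configuration. It is a back-of-the-envelope calculation under stated assumptions: the layer counts and hidden sizes (12 layers and 768 for Base, 24 layers and 1,024 for Large) come from the original BERT paper, while the vocabulary size of 30,522 and the 512-position limit are those of the English uncased release. The function name is hypothetical.

```python
def bert_param_count(layers, hidden, vocab=30522, max_pos=512, type_vocab=2):
    """Estimate the number of weights in a BERT encoder."""
    ffn = 4 * hidden  # feed-forward inner size is 4x the hidden size
    # Token, position, and segment embeddings plus one LayerNorm (scale and bias).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Query, key, value, and output projections (weights and biases) plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden
    # Two dense layers (weights and biases) plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + 2 * hidden
    # Final dense layer applied to the [CLS] position.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler

base_params = bert_param_count(layers=12, hidden=768)
large_params = bert_param_count(layers=24, hidden=1024)
print(f"BERT Base:  ~{base_params / 1e6:.0f}M parameters")
print(f"BERT Large: ~{large_params / 1e6:.0f}M parameters")
```

The estimates land close to the commonly quoted figures of roughly 110 million and 340 million parameters, and show that Large is about three times the size of Base.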

DistilBERT, RoBERTa, and ALBERT

BERT's success has spawned a whole family of variants, including DistilBERT (a lighter, faster version), RoBERTa (an optimized model with longer training), and ALBERT (a parameter-reduced version).

It's like a BERT family reunion!

How BERT Improves Search Engine Optimization (SEO)

BERT is changing SEO by improving how search engines understand context and intent. Let's look in detail at how BERT improves SEO.

Enhanced Understanding of User Queries

BERT has made a splash in the SEO world by helping search engines like Google better understand user queries. It's like having a mind reader for your search bar, making sure you find exactly what you're looking for.

By using NLP (Natural Language Processing) to analyze the full meaning behind queries, BERT enables search engines to deliver highly relevant results.

Improved Content Relevancy and Ranking

Thanks to BERT, search engines can now better analyze content and rank pages based on their relevance to user queries. This means higher quality search results and happier internet users.

Impact on Voice Search and Conversational AI

BERT's prowess in understanding natural language has also improved voice search and conversational AI. It's like having a personal assistant that truly gets you, making your life easier and more efficient.

Implementing BERT for SEO

Working with BERT in mind means aligning your content with the way search engines now interpret user intent. In this section, discover how that can improve your content's accuracy and engagement.

Optimizing Content for BERT

To make the most of BERT's potential, focus on creating high-quality, well-structured content that provides value to your audience. Remember, BERT is all about context and meaning, so keep your writing clear, concise, and relevant.

Analyzing BERT's Influence on Search Results

Keep an eye on your search rankings and traffic to see how BERT is affecting your SEO efforts. Analyzing these metrics will help you fine-tune your content strategy and stay ahead of the competition.

Leveraging BERT in SEO Tools and Analysis

Many SEO tools and platforms have started integrating BERT, allowing you to harness its power for keyword research, content optimization, and more. Because BERT helps decode the nuances of search queries, these tools can judge keyword relevance by meaning rather than by exact matches. It's like having a BERT-powered toolbox at your disposal.

Applications of BERT in NLP Tasks

BERT has redefined NLP by using the Transformer encoder for deeper language understanding. Developed by Google, BERT has valuable applications across NLP tasks, such as:

Sentiment Analysis

BERT excels in sentiment analysis, understanding the emotions behind text like a seasoned therapist. This can be invaluable for businesses looking to gauge customer satisfaction and improve their products or services.

BERT can also power intelligent conversational bots, delivering precise, context-aware responses for a seamless user experience.

Named Entity Recognition

BERT is also a pro at named entity recognition, identifying people, organizations, and locations in the text. It's like having a personal detective to help you uncover valuable insights from your data.

Text Summarization

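BERT's ability to grasp context and meaning makes it a strong candidate for text summarization. Because it is an encoder-only model, it is typically used for extractive summarization, where it scores and selects the most important sentences, or paired with a decoder when new summary text must be generated. Either way, it helps condense lengthy documents into shorter, more digestible summaries, saving you time and mental energy.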

Limitations and Challenges of BERT

While BERT has advanced NLP, it also faces hurdles that affect its effectiveness in various applications. Here are the main limitations and challenges of BERT.

Computational Resources and Training Time

Despite its many talents, BERT has challenges. The model requires significant computational resources and training time, making it less accessible to smaller organizations or those with limited budgets.

Handling Multilingual and Multimodal Data

While BERT has made strides in understanding multiple languages, there's room for improvement in handling multilingual and multimodal data, such as text and images combined. It's an ongoing quest for BERT to become a true polyglot.

Ethical Considerations and Biases

As with any AI model, BERT can be susceptible to biases in its training data. It's important to be aware of these potential pitfalls and work towards creating more inclusive and ethical AI systems.

Future Developments and Trends in BERT Technologies

In the rapidly evolving landscape of NLP, BERT stands out as a pivotal innovation. So let’s check out the future developments and trends in BERT technologies.

Evolving NLP Models and Techniques

As BERT continues to evolve, we can expect to see even more advanced NLP models and techniques emerge. These developments will further enhance our ability to understand and process language, making AI's future more exciting.

BERT for Specialized Domains and Industries

BERT's potential extends beyond general NLP tasks, with specialized versions of the model being developed for industries like healthcare, finance, and law.

These domain-specific BERTs will help unlock insights and improve decision-making in their respective fields. With BERT's capabilities, the future of intelligent conversational agents is brighter than ever in these sectors.

Integrating BERT with Other AI and Machine Learning Technologies

As AI and machine learning continue to advance, we can expect to see BERT integrated with other cutting-edge technologies, such as computer vision and reinforcement learning.

This fusion of AI disciplines will usher in a new era of innovation and possibilities.

Frequently Asked Questions (FAQs)

How was BERT developed, and why is it significant?

Developed in 2018 by Google AI, BERT processes text using bidirectional context. This allows it to outperform earlier NLP models in understanding language nuances, making it a milestone in NLP.

What are the key components of BERT's architecture?

BERT relies on the Transformer model, specifically its encoder. Within each encoder layer, self-attention weighs the significance of words in context. Unlike the original Transformer, BERT uses only the encoder, because it focuses on understanding text rather than generating it.

How does BERT's training work?

BERT is trained using two objectives:

Masked Language Modeling (MLM), where it learns to predict missing words.

Next Sentence Prediction (NSP), where it determines if two sentences are sequentially related.

What are BERT Base and BERT Large?

BERT comes in two primary versions: BERT Base, a standard model, and BERT Large, a larger, more complex version that provides even greater accuracy in NLP tasks.

How does BERT impact SEO and search engines?

BERT enhances search engines like Google by improving their ability to interpret user queries, match content relevance, and understand context, resulting in more accurate search results.

Are there any BERT variants?

Yes, several variants exist, such as DistilBERT (a lighter version), RoBERTa (with optimized training), and ALBERT (with reduced parameters), each catering to different needs in NLP tasks.