What is Masked Language Modeling?

Masked language modeling (MLM) is a training technique used in natural language processing (NLP). It helps models learn how human language works. Imagine reading a sentence with some words hidden and having to guess them. That is the task MLM sets: it takes a sentence, hides some of its words, and asks the model to predict the hidden words from the ones that remain. Training on this task teaches a model to use context and meaning.

How Does Masked Language Modeling Work?

This section explains how masked language modeling works.

Fundamental Principles and Techniques

Masked language modeling relies on a few key ideas:

- Context Understanding: The model learns the context of words in a sentence. Predicting masked words helps it learn how words relate to each other.

- Data Training: Large amounts of text data are used. The model learns patterns, grammar, and word meanings from this data.

- Iteration: The process repeats many times. The model keeps improving its predictions by learning from its mistakes.

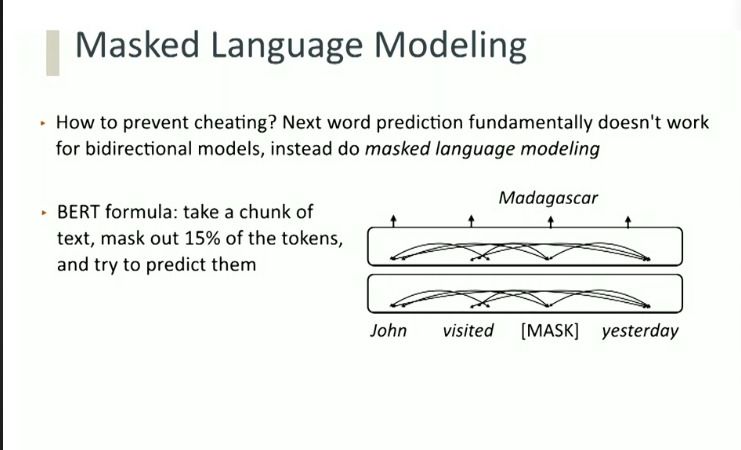

Step-by-Step Process of Masked Language Modeling

Here's how masked language modeling works, step by step:

- Text Input: The process starts with a sentence or a passage.

- Masking Words: Random words in the text are hidden or "masked." For example, in the sentence "The cat sat on the mat," the word "cat" might be masked like this: "The [MASK] sat on the mat."

- Model Prediction: The model tries to predict the masked words based on the surrounding words. It uses the context to guess what the hidden word might be.

- Comparison: The model's guess is compared to the actual word. If the guess is wrong, the model adjusts its understanding.

- Learning: This process repeats with many different sentences and masked words. Over time, the model gets better at predicting masked words, improving its understanding of the language.
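The steps above can be sketched with a deliberately tiny stand-in for a real model. This is an illustration only: instead of a neural network, the hypothetical `train_toy_mlm` function simply counts which word appears between each pair of neighboring words, and it masks every position in turn rather than sampling positions at random so that the result is reproducible. The function names and the `<s>` / `</s>` boundary markers are assumptions made for this sketch.

```python
from collections import Counter, defaultdict

def neighbors(tokens, i):
    """Return the words immediately left and right of position i."""
    left = tokens[i - 1] if i > 0 else "<s>"
    right = tokens[i + 1] if i < len(tokens) - 1 else "</s>"
    return left, right

def train_toy_mlm(sentences):
    """Hide each word in turn and record which word filled that context."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, hidden_word in enumerate(tokens):
            # The "model" only sees the neighbors, never the hidden word itself.
            counts[neighbors(tokens, i)][hidden_word] += 1
    return counts

def predict_masked(counts, masked_sentence):
    """Guess the word behind the single [MASK] token, or None if the context is unseen."""
    tokens = masked_sentence.split()
    i = tokens.index("[MASK]")
    candidates = counts.get(neighbors(tokens, i))
    return candidates.most_common(1)[0][0] if candidates else None

corpus = [
    "The cat sat on the mat",
    "The cat sat on the sofa",
    "A dog slept on the rug",
]
model = train_toy_mlm(corpus)
print(predict_masked(model, "The [MASK] sat on the mat"))  # cat
```

A real masked language model replaces the counting table with a neural network that looks at the whole sentence, but the loop of hiding, guessing, and checking against the true word is the same.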

Common Algorithms and Models of Masked Language Modeling

The following models are trained with masked language modeling or a closely related objective:

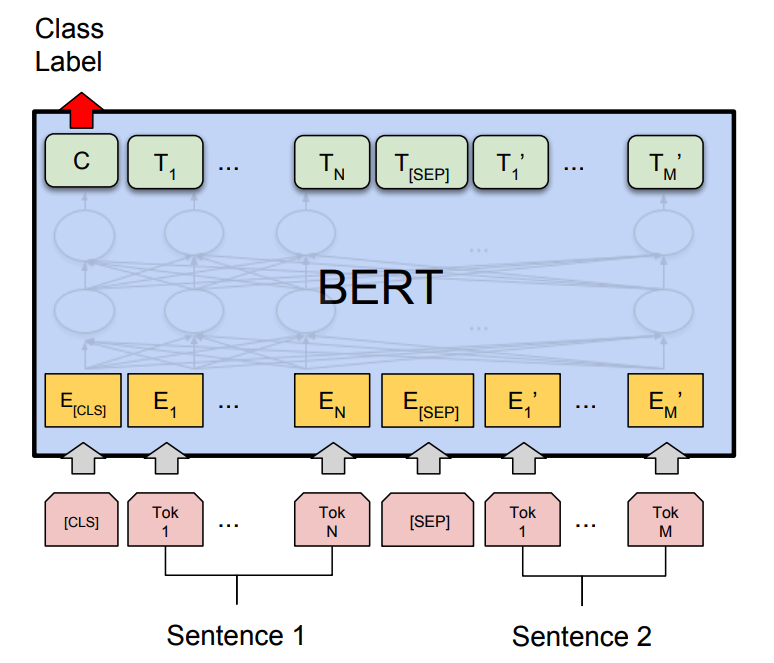

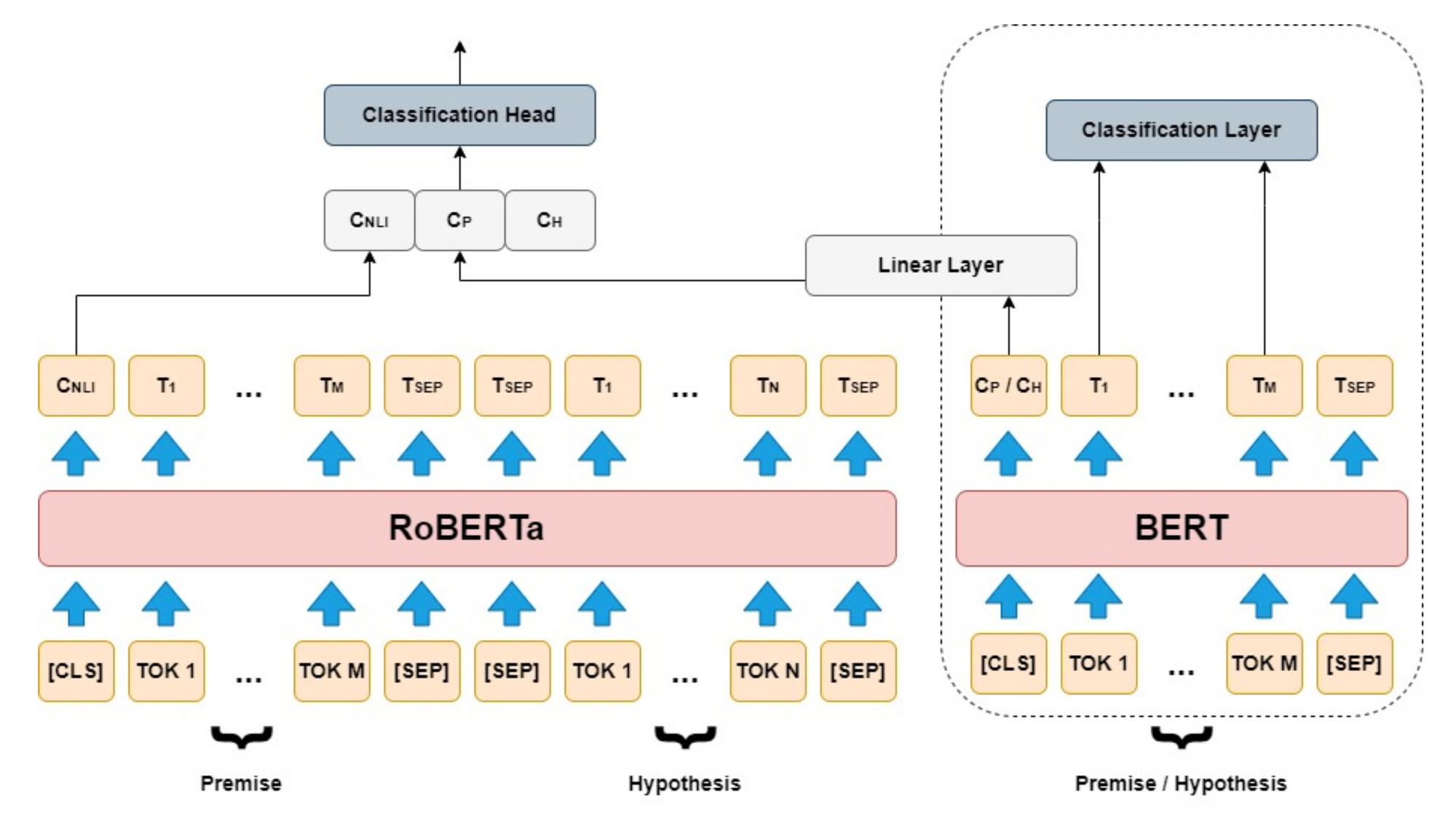

BERT (Bidirectional Encoder Representations from Transformers)

- Bidirectional Learning: BERT uses context from both sides of a word at once (the words to its left and the words to its right) rather than reading in a single direction. This helps it understand the context better.

- Masked LM: BERT is trained using masked language modeling. It masks random words and tries to predict them.

- Applications: Used for tasks like question answering and text classification.

RoBERTa (Robustly Optimized BERT Pretraining Approach)

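- Improved Training: RoBERTa keeps BERT's architecture but changes the training recipe. It uses more data, larger batches, and longer training, applies dynamic masking (a new mask pattern each time a sequence is seen), and drops the next-sentence prediction objective.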

- Performance: This results in better performance on many language tasks.

- Techniques: Like BERT, it uses masked language modeling to understand context.

DistilBERT

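- Simplified Model: DistilBERT is a smaller, faster version of BERT, produced by knowledge distillation: a compact "student" model learns to imitate a full-size BERT "teacher."

- Efficiency: It maintains most of BERT's performance but is more efficient to use.

- Training: Combines masked language modeling with a distillation loss that pushes the student's predictions toward the teacher's.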

Other Models

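- XLNet: Uses permutation language modeling, an autoregressive objective that predicts tokens in a randomly sampled order. This lets it capture context from both sides of a word without inserting [MASK] tokens.

- ALBERT: Focuses on reducing model size while maintaining performance. It shares parameters across layers and factorizes the embedding matrix, and it is trained with masked language modeling like BERT.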

Key Components of Masked Language Modeling

This section covers the key components of masked language modeling.

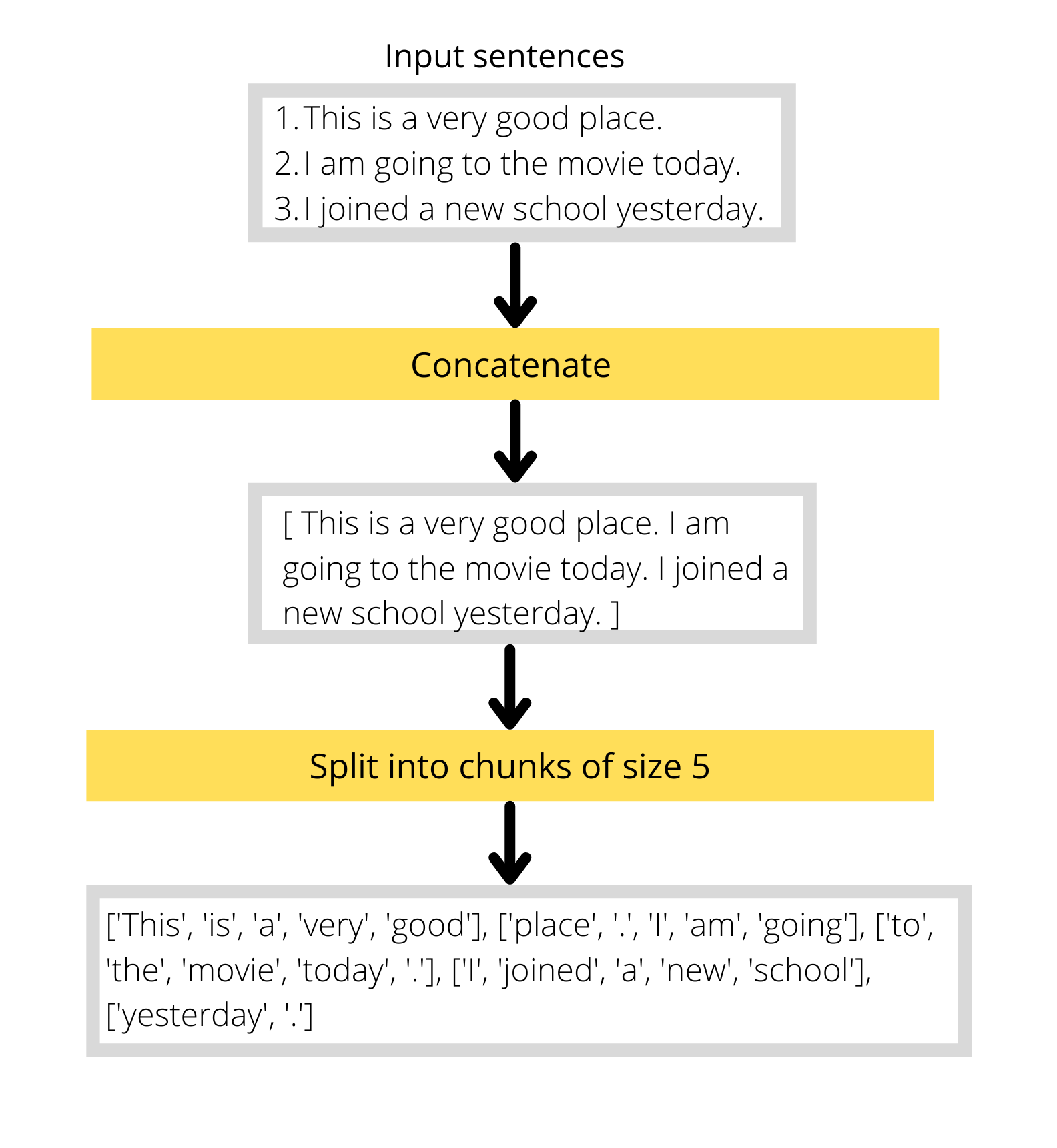

Data Preprocessing

Data preprocessing involves the following steps:

- Text Collection: Gather large amounts of text data. The more diverse, the better.

- Tokenization: Split the text into smaller units called tokens. These can be words or subwords.

- Masking: Randomly hide some tokens. For example, in the sentence "The dog barked loudly," "dog" might be masked, resulting in "The [MASK] barked loudly."
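As a rough sketch of the tokenization and masking steps, the snippet below follows the recipe published with BERT: about 15% of tokens are selected for prediction, and of those, 80% become `[MASK]`, 10% are swapped for a random token, and 10% are left unchanged. The whitespace tokenizer, the tiny vocabulary, and the `mask_tokens` helper are simplifications assumed for illustration; production systems use subword tokenizers and library-provided data collators.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (inputs, labels). labels[i] is None where no prediction is required."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token here
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)                # 10%: keep the token unchanged
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels

tokens = "the dog barked loudly".split()  # whitespace tokenization, a simplification
vocab = sorted(set(tokens))
# mask_prob is raised to 0.5 only so that a four-token example is likely to show a mask.
inputs, labels = mask_tokens(tokens, vocab, mask_prob=0.5, seed=1)
print(inputs)
print(labels)
```

Keeping some selected tokens unmasked or randomly replaced means the model cannot rely on `[MASK]` always marking the positions it will be scored on.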

Model Training

Model training proceeds as follows:

- Input: Feed the masked text into the model.

- Prediction: The model predicts the masked tokens based on the context. For example, it might predict "[MASK]" as "dog" in the sentence "The [MASK] barked loudly."

- Comparison: Compare the model's prediction to the actual token. If it's wrong, the model adjusts its parameters (weights) to reduce the error.

- Iteration: Repeat this process with many sentences. The model gets better with each iteration.
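The training loop can be illustrated with a minimal sketch in plain Python. It assumes a very simple stand-in model: one row of scores (logits) per context, where the context is just the pair of neighboring words. Real masked language models use Transformer networks and automatic differentiation, so the `train` function, the learning rate, and the example data here are hypothetical and only show the predict, compare, and adjust cycle.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(examples, vocab, epochs=20, lr=0.5):
    """examples: list of (context, target_word). Returns (weights, mean loss per epoch)."""
    index = {word: i for i, word in enumerate(vocab)}
    weights = {}  # context -> list of logits, one per vocabulary word
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for context, target in examples:
            logits = weights.setdefault(context, [0.0] * len(vocab))
            probs = softmax(logits)                # prediction
            t = index[target]
            total_loss += -math.log(probs[t])      # comparison: cross-entropy loss
            # Adjustment: the gradient of the loss w.r.t. the logits is probs - one_hot(target).
            for i in range(len(vocab)):
                grad = probs[i] - (1.0 if i == t else 0.0)
                logits[i] -= lr * grad
        history.append(total_loss / len(examples))
    return weights, history

vocab = ["cat", "dog", "mat"]
examples = [(("The", "barked"), "dog"), (("The", "meowed"), "cat")]
weights, history = train(examples, vocab)
print(history[0], history[-1])  # mean loss in the first and last epoch
```

The loss recorded for the last epoch is lower than for the first, which is the "gets better with each iteration" behavior described above.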

Evaluation Metrics

Common evaluation metrics include:

- Accuracy: Measures how often the model's predictions are correct. Higher accuracy means better performance.

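- Loss: Usually cross-entropy, which measures how much probability the model assigned to the actual token at each masked position. Lower loss indicates better performance.

- Perplexity: The exponential of the average cross-entropy loss. It is traditionally defined for next-word prediction; for a masked model it is computed over the masked tokens. Lower perplexity means the model is less uncertain about the hidden words.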
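The three metrics can be computed together, as in the sketch below. It assumes the model's output for each masked position is available as a dictionary mapping candidate words to probabilities; that format, the `evaluate` function, and the example numbers are hypothetical. Perplexity is taken here as the exponential of the average cross-entropy loss over the masked positions.

```python
import math

def evaluate(predictions, targets):
    """predictions: one {word: probability} dict per masked position.
    targets: the true word at each of those positions."""
    correct = 0
    total_nll = 0.0
    for probs, target in zip(predictions, targets):
        best_guess = max(probs, key=probs.get)
        correct += best_guess == target
        # A small floor avoids log(0) when the true word received no probability.
        total_nll += -math.log(max(probs.get(target, 0.0), 1e-12))
    n = len(targets)
    loss = total_nll / n
    return {"accuracy": correct / n, "loss": loss, "perplexity": math.exp(loss)}

predictions = [
    {"dog": 0.8, "cat": 0.2},   # true word "dog": top guess is right
    {"mat": 0.25, "rug": 0.75}, # true word "mat": top guess is wrong
]
metrics = evaluate(predictions, ["dog", "mat"])
print(metrics)
```

Accuracy only checks the top guess, while loss and perplexity also reward the model for putting some probability on the right word even when its top guess is wrong.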

What is Causal Language Modeling (CLM)?

Causal Language Modeling, also known as autoregressive language modeling, predicts the next word in a sequence based on the previous words. This method generates text by conditioning on the context provided by preceding words. Common models using CLM include the GPT (Generative Pre-trained Transformer) series. CLM operates sequentially, which aligns well with natural language generation tasks but is limited in capturing bidirectional context.
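To make the sequential setup concrete, here is a small sketch of how training examples for causal language modeling could be built from one sentence. The `causal_examples` helper is hypothetical and uses whitespace tokenization for simplicity; each target word is paired only with the words that come before it.

```python
def causal_examples(tokens):
    """Pair every token (after the first) with the tokens that precede it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

for context, target in causal_examples("The cat sat on the mat".split()):
    print(context, "->", target)
```

Every example looks only backward, which is why this setup matches left-to-right text generation.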

Difference Between Causal Language Modeling and Masked Language Modeling

Causal Language Modeling is particularly suited for tasks like text generation and completion, where sequential prediction is key. MLM, with its bidirectional context understanding, excels in tasks like text classification and question answering.

Causal language modeling focuses on predicting future words, whereas MLM's ability to fill in the blanks makes it robust for comprehension-based applications. The two approaches are complementary, each bringing its own strengths to different NLP tasks.
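The practical difference comes down to which words the model may look at when predicting a given position. The sketch below is a simplified illustration: `visible_context` is a hypothetical helper, not part of any library, and real models enforce this rule through attention masks rather than list slicing.

```python
def visible_context(tokens, position, mode):
    """Return the tokens a model may use to predict tokens[position]."""
    if mode == "causal":
        return tokens[:position]                          # left context only
    if mode == "masked":
        return tokens[:position] + tokens[position + 1:]  # left and right context
    raise ValueError(f"unknown mode: {mode!r}")

tokens = "The cat sat on the mat".split()
print(visible_context(tokens, 2, "causal"))  # ['The', 'cat']
print(visible_context(tokens, 2, "masked"))  # ['The', 'cat', 'on', 'the', 'mat']
```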

Frequently Asked Questions (FAQs)

How does MLM help in natural language processing?

MLM helps NLP by enabling models to learn context, improve language understanding, and make more accurate predictions about missing text.

Which models use masked language modeling?

Popular models like BERT and its variants utilize masked language modeling for pre-training on large corpora of text.

Is masked language modeling supervised or unsupervised learning?

MLM is usually described as self-supervised learning, a form of unsupervised learning. The model trains on unlabeled text, and the hidden tokens themselves serve as the labels, so no manual annotation is needed.

Can masked language modeling improve machine translation?

Yes, MLM can enhance machine translation by providing a deeper understanding of linguistic context and improving the encoder representations.

What differentiates BERT from traditional language models?

BERT differs by using MLM and next-sentence prediction for pre-training, enabling it to capture bidirectional context better than traditional unidirectional language models.