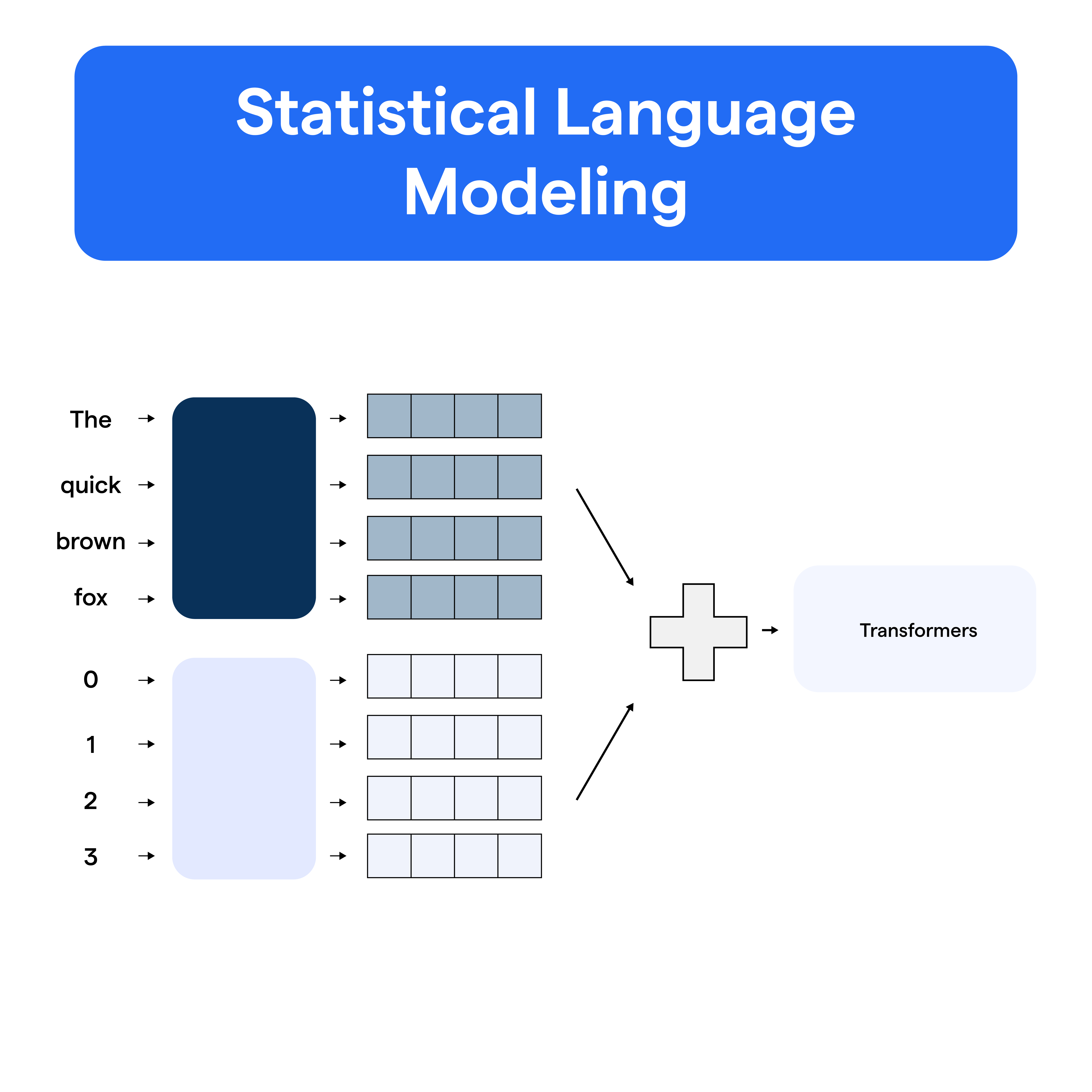

What is Statistical Language Modeling?

Statistical Language Modeling refers to the development of probabilistic models for predicting a sequence of words. These models calculate the likelihood for a sentence or a sequence of words to occur within a language, assigning probabilities to sentences and sequences based on their use and occurrence in the training data.
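One common way to write this down is the chain rule of probability. The notation is an assumption made here for illustration: $w_i$ is the $i$-th word and $n$ is the length of the sequence.

$$
P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})
$$

Each factor is the probability of the next word given everything that came before it, so a model that can predict the next word can also score a whole sentence.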

The Purpose of SLM

The purpose of SLM is to understand and quantify the characteristics of language that enable humans to communicate, generate, and comprehend different sequences of words. It is used extensively in Natural Language Processing (NLP) tasks like speech recognition, machine translation, and named entity recognition.

The Power of SLM

SLM gives machines a quantitative handle on the complexity of human language. By estimating which word sequences are likely, probabilistic models let systems predict, generate, and interpret human-like language, which underpins successful human-machine interaction. Without SLM, machines would be limited to rigid, mechanical responses, with no sense of which phrasing is natural.

The Evolution of SLM

SLM's roots date back to the 20th century, beginning with simple Markov chain models and leading up to today's rapid advances in NLP driven by deep learning. The rise of machine learning methods, improved computational capabilities, and the wealth of digital text data available have spurred the development of sophisticated SLMs.

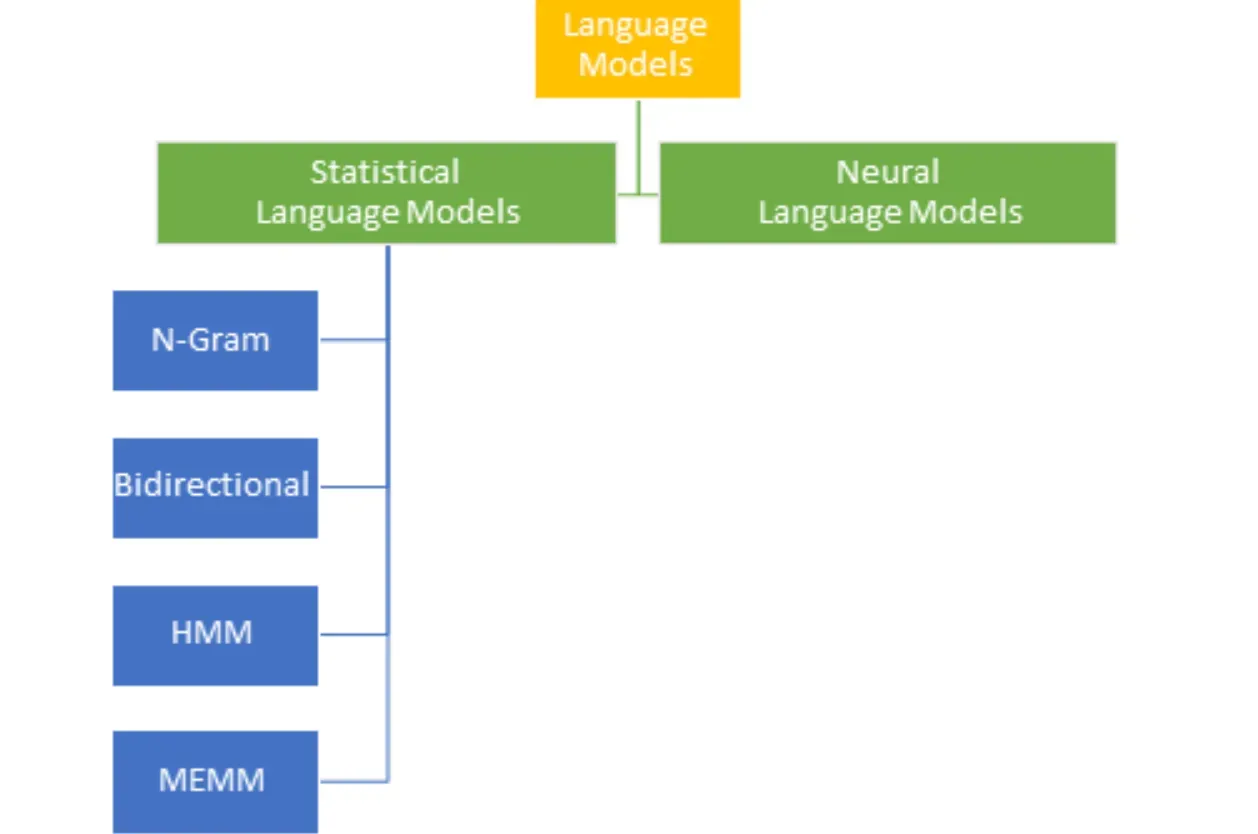

Types of Statistical Language Models

SLMs have evolved over time, with different types demonstrating varied degrees of success and efficiency.

N-gram Models

N-gram models form the foundation of traditional SLM. They assume that the probability of a word depends only on a fixed number of preceding words (n − 1 of them). For example, in a bigram model (n=2), the likelihood of encountering the word ‘ball’ depends only on the single word immediately before ‘ball’.
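To make the idea of a fixed context concrete, here is a minimal sketch that extracts bigrams from a toy sentence and counts them. The helper name `ngrams` and the example sentence are made up for illustration.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

# Toy sentence, chosen only for illustration.
tokens = "the boy kicked the ball and the ball rolled".split()

# For n=2, each tuple pairs a word with the single word before it.
bigram_counts = Counter(ngrams(tokens, 2))

# How often 'ball' appears right after 'the' in this toy text.
print(bigram_counts[("the", "ball")])
```

A bigram model only ever looks at these pairs, so anything earlier in the sentence has no influence on the prediction.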

Continuous Space Language Models

Continuous space models broke the shackles that limited language models to discrete word representation by introducing continuous vector space representations. This helps these models capture much more intricate linguistic features, such as semantic and syntactic relationships between words.
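As a rough illustration of what a continuous representation buys you, the sketch below compares words by the cosine similarity of their vectors. The three-dimensional vectors are hand-picked for the example and are not learned from data; real models learn vectors with many more dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical, hand-made word vectors (illustration only, not trained).
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.85, 0.82, 0.15],
    "car": [0.1, 0.05, 0.9],
}

sim_cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
sim_cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])
print(sim_cat_dog > sim_cat_car)
```

With discrete word IDs, 'cat' and 'dog' are simply different symbols. With vectors, the model can treat them as close neighbours and share what it has learned about one with the other.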

Neural Network-based Language Models

Neural language models, with their powerful ability to learn and capture abstract features from given data, have brought to the table a new level of precision and performance. These models find word relationships in more abstract ways and break free from many constraints of traditional models.

Cache Models

Cache models capitalize on the temporal aspect of language: things recently spoken about are likely to be spoken about again soon, marking a shift from traditional static language models. This helps in accurately predicting words for more repetitive contexts.
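One simple way to realize this idea is to interpolate a static model with a distribution built from recently seen words. The sketch below assumes a unigram static model, and the function name, the weight `lam`, and the toy probabilities are all hypothetical.

```python
from collections import Counter

def cache_interpolated_prob(word, static_probs, history, cache_size=100, lam=0.8):
    """P(word) = lam * P_static(word) + (1 - lam) * P_cache(word).

    P_cache is the relative frequency of `word` among the last
    `cache_size` words of `history`.
    """
    recent = history[-cache_size:]
    if not recent:
        # Nothing in the cache yet: fall back to the static model alone.
        return static_probs.get(word, 0.0)
    cache_prob = Counter(recent)[word] / len(recent)
    return lam * static_probs.get(word, 0.0) + (1 - lam) * cache_prob

# Toy static unigram probabilities (made up for illustration).
static_probs = {"the": 0.5, "cat": 0.1, "dog": 0.1, "sat": 0.3}
history = ["the", "cat", "sat", "the", "cat"]

p_cat = cache_interpolated_prob("cat", static_probs, history)
p_dog = cache_interpolated_prob("dog", static_probs, history)
print(p_cat, p_dog)
```

'cat' and 'dog' start with the same static probability, but 'cat' was mentioned recently, so the cache raises its probability while 'dog' drops slightly.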

The Process of Constructing an SLM

Constructing an SLM follows a sequence of steps, each of which lays the groundwork for the next.

Data Collection

The initial step involves gathering an extensive amount of language data that forms the training ground for your SLM. The choice of data depends on the language, domain, and specific tasks the model is intended to be used for.

Model Selection

The next step is to choose the model type you want to employ based on the task, the computational resources available, and the performance expectation. Common choices range from basic n-gram models to more advanced neural language models.

Parameter Estimation

Once the model is chosen, the next step involves learning or estimating the parameters of the model. For instance, for an n-gram model, this might involve calculating the maximum likelihood estimates of the conditional probabilities of the n-grams in the dataset.
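For a bigram model, the maximum likelihood estimate is just a ratio of counts: how often a word pair occurs, divided by how often its first word occurs as a context. The following is a minimal sketch on a toy corpus. The function name, the `<s>` and `</s>` boundary markers, and the sentences are assumptions made for the example, and no smoothing is applied.

```python
from collections import Counter

def train_bigram_mle(sentences):
    """Estimate P(word | prev) = count(prev, word) / count(prev)."""
    context_counts = Counter()
    pair_counts = Counter()
    for sent in sentences:
        # Pad with start/end markers so sentence boundaries are modeled too.
        padded = ["<s>"] + sent + ["</s>"]
        for prev, word in zip(padded, padded[1:]):
            context_counts[prev] += 1
            pair_counts[(prev, word)] += 1
    return {pair: c / context_counts[pair[0]] for pair, c in pair_counts.items()}

# Toy corpus (illustration only).
corpus = [
    "the dog chased the ball".split(),
    "the ball rolled away".split(),
]
probs = train_bigram_mle(corpus)
print(probs[("the", "ball")])  # 'the' is followed by 'ball' in 2 of its 3 occurrences
```

Pairs that never occur in the training data get no entry at all, which amounts to a probability of zero. This is why practical n-gram models add some form of smoothing on top of the raw estimates.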

Testing

The final step in constructing an SLM is to test it on unseen data - a separate testing set - to assess how well the model performs. If the results call for further tuning, it is best done against a separate validation set so that the test set remains a fair measure of performance.

Evaluation Metrics for SLM

Assessing the performance of an SLM involves running it through various tests that measure its efficiency and accuracy.

Perplexity

Perplexity measures the SLM's uncertainty in predicting the next word in a sequence. The lower the perplexity, the less uncertain the model is and the better it predicts the text.
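As a minimal sketch, perplexity can be computed from the probabilities a model assigned to each word of a test text. The function name and the two probability lists are made up for illustration; they stand in for the output of a real model.

```python
import math

def perplexity(word_probs):
    """Exponential of the negative mean log probability of the test words."""
    avg_neg_log = -sum(math.log(p) for p in word_probs) / len(word_probs)
    return math.exp(avg_neg_log)

# Hypothetical per-word probabilities from two models on the same 4-word text.
confident = [0.5, 0.5, 0.5, 0.5]
uncertain = [0.1, 0.1, 0.1, 0.1]

print(perplexity(confident))  # about 2: like choosing among 2 equally likely words
print(perplexity(uncertain))  # about 10: like choosing among 10 equally likely words
```

Perplexity can be read as the effective number of equally likely words the model is choosing between at each step.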

Cross-Entropy

Cross-Entropy, calculated as the negative average of the log probabilities the model assigns to the words in the text, is used to measure how well the model’s prediction matches the actual outcome. A lower cross-entropy value signifies a better model.
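Written out, with notation assumed here for illustration ($N$ words $w_1, \ldots, w_N$ in the test text, and $P$ the probability the model assigns), the cross-entropy in bits per word is:

$$
H = -\frac{1}{N} \sum_{i=1}^{N} \log_2 P(w_i \mid w_1, \ldots, w_{i-1})
$$

The minus sign makes the value positive, since log probabilities are never above zero. Cross-entropy and perplexity carry the same information, because perplexity is the exponentiated cross-entropy:

$$
\mathrm{PP} = 2^{H}
$$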

Log-Likelihood

Another essential metric is Log-Likelihood, the logarithm of the probability the model assigns to the observed data. Higher values indicate a better-fitting model, provided the models are compared on the same data.

Word Error Rate

Word Error Rate (WER) is the number of word-level errors (substitutions, deletions, and insertions) in the system's output divided by the total number of words in the reference text. It finds significant use in speech recognition and is a practical indicator of how accurately the system, language model included, predicts words.
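WER is usually computed as a word-level edit distance between a reference transcript and the system's output, divided by the reference length. The sketch below assumes whitespace tokenization, and the function name and example sentences are made up for illustration.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dp[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # match or substitution
            )
    return dp[len(ref)][len(hyp)] / len(ref)

# One substitution ('sat' -> 'sit') and one deletion ('the') over 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(wer)
```

Because insertions count as errors, WER can exceed 1.0 when the output is much longer than the reference.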

Applications of Statistical Language Modeling

From digital assistants on our smartphones to cutting-edge AI research, SLM has myriad applications.

Speech Recognition

Speech Recognition or Automatic Speech Recognition (ASR) is a prime use case for SLM. The model assists in converting spoken language into written transcripts by predicting the most likely word sequences.

Machine Translation

Machine Translation thrives on SLM to provide accurate translations of text from one language to another by predicting likely sequences of words in the target language using the source language's information.

Information Retrieval

Search engines can use SLM to estimate how relevant a document is to a user query, for example by scoring how likely the query is under a language model built from each document. This supports efficient, context-aware information retrieval.

Predictive Text and Autocorrect

SLMs play a substantial role in the predictive text and autocorrect features on our smartphones. As you type, the model predicts the next likely word based on the current input.
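A toy version of next-word suggestion can be built from bigram counts alone. The sketch below is only an illustration of the idea, not how any particular keyboard works; the function names and the sample text are hypothetical.

```python
from collections import Counter, defaultdict

def build_next_word_table(text):
    """Map each word to a Counter of the words seen right after it."""
    tokens = text.lower().split()
    table = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        table[prev][nxt] += 1
    return table

def suggest(table, current_word, k=3):
    """Return up to k of the most frequent followers of current_word."""
    followers = table.get(current_word.lower(), Counter())
    return [word for word, _ in followers.most_common(k)]

# Toy typing history (made up for illustration).
history_text = "see you soon see you later see you soon see me"
table = build_next_word_table(history_text)

print(suggest(table, "you"))  # ['soon', 'later']
print(suggest(table, "see"))  # ['you', 'me']
```

Words that were never seen as a context simply return no suggestions, which is one reason real systems back off to more general statistics.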

The Future of Statistical Language Modeling

As we stand at the intersection of linguistics and computer science, exciting advancements are in store for SLM.

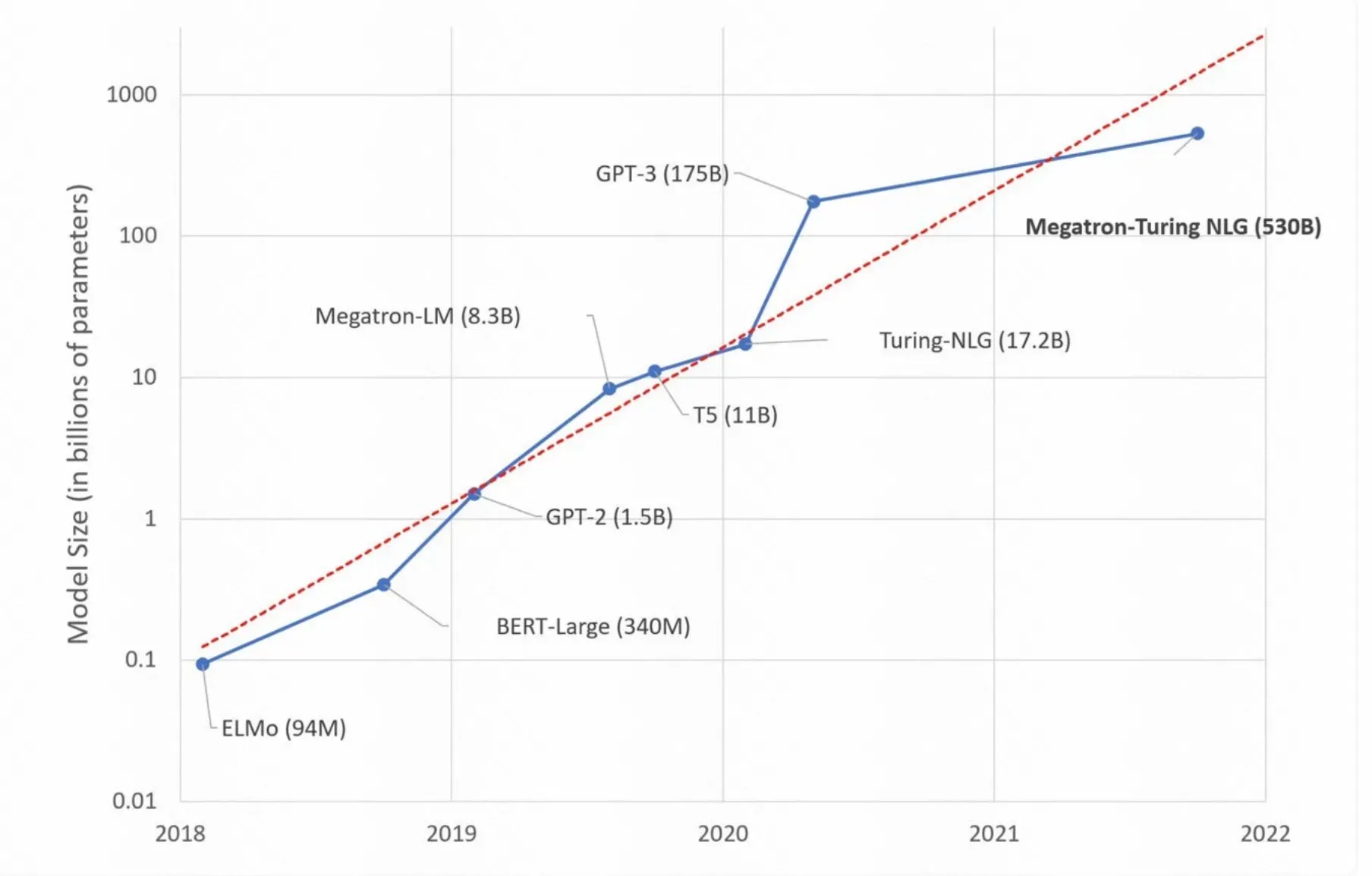

Advancements in Deep Learning

Novel deep learning models, such as Transformer-based models, have revolutionized SLM, promising improved accuracy, versatility in learning, and broader adoption.

Harnessing the Power of Big Data

Our digital age presents us with growing data availability. With this wealth of data, SLM can learn from a broader corpus of language data, resulting in more nuanced understanding and robust models.

Shaping the Computational Future

As computational capabilities continue to advance, the creation of more complex and powerful models becomes a reality. This enables higher learning capabilities and better predictive precision for SLMs.

Responsibility and Fairness

As we move forward, there is an increased emphasis on incorporating ethics directly into SLM. The future will see the rise of more responsible AI, ensuring the avoidance of biases and respect for user privacy in SLMs.

Frequently Asked Questions (FAQs)

What is Statistical Language Modeling?

Statistical Language Modeling focuses on predicting human language using statistical patterns and probabilities.

How does N-Gram modeling work?

N-Gram models predict the probability of the next word in a sequence from a fixed number of preceding words, based on how often those word combinations occur in the training data.

What are the advantages of continuous space models?

Continuous space models represent words as vectors in a high-dimensional space, capturing semantic relations. They leverage neural networks for accurate predictions in language processing tasks.

How can I build a simple statistical language model?

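Building a simple statistical language model typically involves adopting the Markov assumption (each word depends only on a few preceding words), counting N-Grams in a training corpus, and using Maximum Likelihood Estimation (MLE) to turn those counts into word probabilities.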

What is perplexity in evaluating language models?

Perplexity is a metric that measures the average uncertainty or surprise of a language model in predicting a test set. Lower perplexity values indicate better performance.