What are Deep Models?

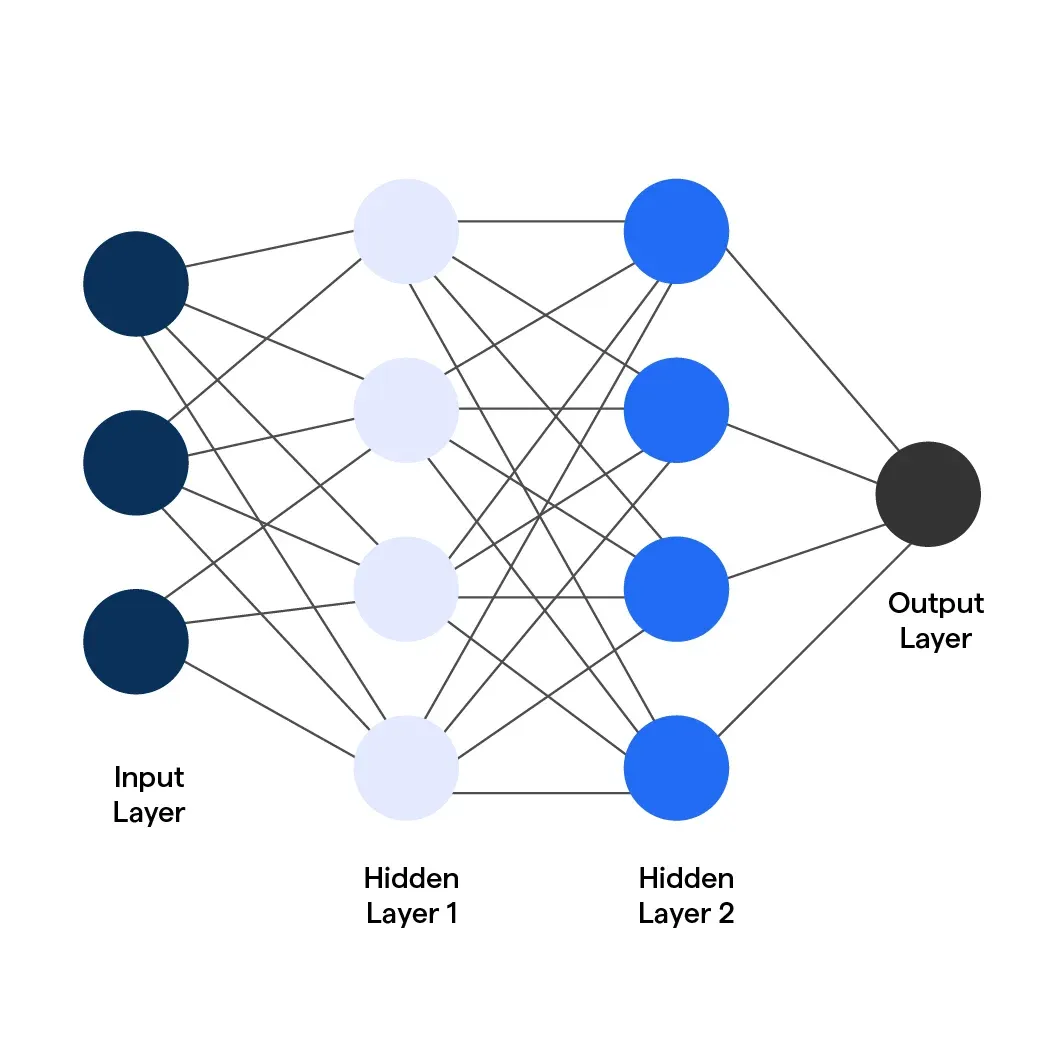

Deep Models are a type of machine learning model that uses artificial neural networks with multiple layers to extract high-level features from input data. Stacking layers lets the model learn complex patterns and relationships within the data, which makes it particularly effective on difficult problems such as speech recognition, natural language processing, and computer vision.

The depth of the model refers to the number of layers of interconnected neurons used to process the input data. Each layer transforms the output of the previous layer using a combination of weights and biases, typically followed by a non-linear activation function, so later layers represent progressively more abstract features. During training, the model adjusts these weights and biases to reduce its prediction error. At prediction time the learned values are fixed and the data simply passes through the layers.
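To make the layer-by-layer picture concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the parameter values in `LAYERS`, and the choice of ReLU as the activation are assumptions made for illustration, not something the article prescribes.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: output[j] = sum_i(inputs[i] * weights[j][i]) + biases[j]."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def forward(inputs, layers):
    """Pass inputs through each (weights, biases) pair, with ReLU between layers."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # no activation on the final layer
            activations = relu(activations)
    return activations


# Hypothetical hand-picked parameters: 2 inputs -> 2 hidden units -> 1 output.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[2.0, 1.0]], [0.5]),
]

output = forward([3.0, 1.0], LAYERS)
print(output)  # [5.5]
```

With these values the hidden layer produces `[2.0, 1.0]` and the output layer combines them into `5.5`. Training would change the numbers in `LAYERS`, not the structure of `forward`.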

Types of Deep Models

1. Convolutional Neural Networks (CNN)

Convolutional Neural Networks (CNNs) are a type of deep neural network that use convolutional layers to process images. They're commonly used for image classification, but can also be applied to other tasks such as object detection and segmentation.
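The core operation of a convolutional layer can be sketched in a few lines. The example below assumes a single-channel image, no padding, and a stride of 1. The `IMAGE` and `EDGE_KERNEL` values are hypothetical and hand-picked; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products.

    This is the cross-correlation that deep learning libraries call "convolution".
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image with a vertical edge between columns 1 and 2.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 kernel that responds to left-to-right increases in brightness.
EDGE_KERNEL = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(IMAGE, EDGE_KERNEL)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only where the kernel straddles the edge. Because the same small kernel is reused at every position, the layer detects the pattern wherever it appears in the image.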

2. Recurrent Neural Networks (RNN)

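Recurrent Neural Networks (RNNs) are another type of deep neural network, designed for sequences and time series. They keep a hidden state that is updated at every step, which lets them carry information from earlier elements of a sequence to later ones. Plain RNNs struggle to learn long-term dependencies because of vanishing gradients; gated variants such as LSTM and GRU were introduced to address this.

RNNs have been applied to sequence tasks such as machine translation and speech recognition because they can handle variable-length input and output sequences: the same weights are reused at every time step. They are trained with "backpropagation through time" (BPTT), which unrolls the network across the time steps of a sequence and applies backpropagation to the unrolled network.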
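The way a recurrent network reuses one set of weights across a sequence of any length can be sketched as follows. This is a minimal Elman-style cell with a tanh activation; the parameter values (`W_XH`, `W_HH`, `B_H`) and the input sequences are hypothetical, and training with BPTT is not shown.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_next = []
    for j in range(len(b_h)):
        total = b_h[j]
        total += sum(w * xi for w, xi in zip(w_xh[j], x))
        total += sum(w * hi for w, hi in zip(w_hh[j], h_prev))
        h_next.append(math.tanh(total))
    return h_next


def run_rnn(sequence, w_xh, w_hh, b_h):
    """Apply the same weights at every time step and return the final hidden state."""
    h = [0.0] * len(b_h)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
    return h


# Hypothetical parameters: 1 input feature, 2 hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.1, 0.2], [0.0, 0.4]]
B_H = [0.0, 0.1]

# Sequences of different lengths go through the same function and weights.
short_state = run_rnn([[1.0], [2.0]], W_XH, W_HH, B_H)
long_state = run_rnn([[1.0], [2.0], [0.5], [-1.0], [3.0]], W_XH, W_HH, B_H)
print(len(short_state), len(long_state))  # 2 2
```

Both calls return a hidden state of the same size, which is what lets a downstream layer consume sequences of different lengths.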

Challenges and Limitations of Deep Models

1. Insufficient Data Availability

A major obstacle in deep models is the scarcity of available data. Deep learning models need a substantial amount of data to effectively identify and generalize patterns.

However, obtaining labeled data can be a lengthy, costly, and difficult process in specific fields, which limits how well deep models perform in those applications.

2. Computational Demands

Deep learning models necessitate considerable computational resources, such as high-performance CPUs and GPUs, for training and execution.

This may restrict the accessibility of deep learning for individuals and organizations lacking these resources. Furthermore, the substantial computational expense of deep learning can constrain the scalability of models, particularly in real-time applications.

3. Prone to Overfitting

A prevalent issue with deep learning models is overfitting. Overfitting happens when a model performs well on the training data but poorly on unseen test data.

Overfitting can arise when a model is overly complex or when there is not enough training data.

While regularization techniques can help mitigate overfitting, they might also decrease the model's accuracy on the training data.

4. Lack of Interpretability

Another drawback of deep learning models is their lack of interpretability. Deep learning models can be hard to decipher, making it difficult to comprehend their decision-making processes.

This can pose problems in areas where interpretability is essential, such as healthcare or finance. Exploring methods for interpreting deep learning models is a current research focus.

5. Issues with Generalization

Generalization is a model's ability to perform well on data it was not trained on. Deep learning models may have difficulties with generalization, particularly when the training data is inadequate or biased, or when new data comes from a different distribution than the training data.

This can restrict the usefulness of deep learning models in fields where the data distribution continuously changes or where data exhibits significant variability.

Training Deep Models

1. Backpropagation

Backpropagation is the algorithm used to compute the gradients needed to train deep models. A loss function measures the difference between the predicted output and the actual output, and backpropagation propagates that error from the output layer back toward the input layer using the chain rule, yielding the gradient of the loss with respect to every weight and bias. An optimizer such as gradient descent then uses these gradients to adjust the parameters. Backpropagation can be computationally intensive for large networks and can suffer from vanishing or exploding gradients in deep ones.
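As an illustration, the sketch below applies the chain rule by hand to a tiny network with one hidden unit and checks the result against finite differences. The network shape, the tanh activation, the squared-error loss, and the values in `PARAMS` are all assumptions chosen to keep the example small.

```python
import math


def forward(x, params):
    """y_hat = w2 * tanh(w1 * x + b1) + b2; also return the hidden activation."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return w2 * h + b2, h


def loss(x, y, params):
    """Squared error between prediction and target."""
    y_hat, _ = forward(x, params)
    return 0.5 * (y_hat - y) ** 2


def backprop(x, y, params):
    """Gradients of the loss with respect to (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    y_hat, h = forward(x, params)
    d_y_hat = y_hat - y            # dL/dy_hat
    d_w2 = d_y_hat * h             # output-layer gradients
    d_b2 = d_y_hat
    d_h = d_y_hat * w2             # error propagated back to the hidden unit
    d_pre = d_h * (1.0 - h * h)    # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    d_w1 = d_pre * x               # hidden-layer gradients
    d_b1 = d_pre
    return [d_w1, d_b1, d_w2, d_b2]


def numerical_gradient(x, y, params, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(x, y, up) - loss(x, y, down)) / (2 * eps))
    return grads


PARAMS = [0.5, -0.2, 1.5, 0.1]  # hypothetical starting values
analytic = backprop(2.0, 1.0, PARAMS)
numeric = numerical_gradient(2.0, 1.0, PARAMS)
print(all(abs(a - n) < 1e-6 for a, n in zip(analytic, numeric)))  # True
```

Deep learning frameworks automate this bookkeeping, but a finite-difference check like this one is still a common way to catch mistakes in hand-written gradients.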

2. Gradient Descent

Gradient descent is an optimization algorithm used in machine learning to minimize the loss function of a model. It is a first-order optimization algorithm that works by iteratively adjusting the parameters of a model in the direction of steepest descent of the cost or loss function.

The algorithm begins by calculating the gradient of the loss function with respect to the model parameters. The gradient points in the direction of steepest ascent, so the algorithm moves the parameters in the opposite direction to reduce the loss. The size of the adjustment is determined by the learning rate, which controls the step size taken against the gradient. A learning rate that is too small makes training slow, while one that is too large can overshoot the minimum and diverge.
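The update rule can be sketched on a one-parameter problem. The quadratic loss, the starting point, and the two learning rates below are hypothetical values picked to show one run that converges and one that diverges.

```python
def gradient_descent(grad_fn, start, learning_rate, steps):
    """Repeatedly step against the gradient: theta <- theta - learning_rate * grad(theta)."""
    theta = start
    for _ in range(steps):
        theta = theta - learning_rate * grad_fn(theta)
    return theta


def grad(theta):
    """Gradient of the hypothetical loss L(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)


theta_small_lr = gradient_descent(grad, start=0.0, learning_rate=0.1, steps=100)
theta_large_lr = gradient_descent(grad, start=0.0, learning_rate=1.1, steps=100)

print(abs(theta_small_lr - 3.0) < 1e-6)  # True: converges to the minimum at 3
print(abs(theta_large_lr - 3.0) > 1e6)   # True: each step overshoots further
```

For this loss, each step multiplies the distance to the minimum by `1 - 2 * learning_rate`. A rate of 0.1 shrinks it by a factor of 0.8 per step, while a rate of 1.1 grows it by a factor of 1.2 per step.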

3. Regularization

Regularization is a set of techniques that reduce overfitting in deep models by constraining the model or its training process. Common techniques include L1 and L2 regularization, which add a penalty on the size of the weights to the loss; Dropout, which randomly disables neurons during training; and Batch Normalization, which is used mainly to stabilize training but also has a mild regularizing effect. Each has its own strengths and weaknesses.
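Two of these techniques are simple enough to sketch directly. The example below shows an L2 penalty and "inverted" dropout, the variant that rescales the surviving activations during training. The function names, weight values, penalty strength, and drop probability are assumptions for illustration.

```python
import random


def l2_penalty(weights, strength):
    """L2 term added to the loss: strength * sum(w^2)."""
    return strength * sum(w * w for w in weights)


def l2_gradient(weights, strength):
    """Gradient of the L2 term; it pulls every weight toward zero."""
    return [2.0 * strength * w for w in weights]


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob, rescale the rest."""
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


WEIGHTS = [0.5, -2.0, 1.0]                      # hypothetical weights
penalty = l2_penalty(WEIGHTS, strength=0.01)    # 0.01 * (0.25 + 4.0 + 1.0)

rng = random.Random(0)                          # fixed seed for repeatability
dropped = dropout([1.0, 1.0, 1.0, 1.0], drop_prob=0.5, rng=rng)
unchanged = dropout([1.0, 1.0, 1.0, 1.0], drop_prob=0.5, rng=rng, training=False)
```

During training each value in `dropped` is either `0.0` or `2.0`, so the expected activation stays at `1.0`. At prediction time (`training=False`) the activations pass through untouched.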

Applications of Deep Models

1. Image Recognition

Image recognition is one of the most common applications of deep models. It involves recognizing objects, scenes, and patterns in images. Deep models such as Convolutional Neural Networks (CNNs) have achieved state-of-the-art performance on image recognition tasks such as object detection, image segmentation, and image classification. For example, the ImageNet Large Scale Visual Recognition Challenge, held annually from 2010 to 2017, asked entrants to classify images into 1,000 categories. After a CNN (AlexNet) won in 2012, CNN-based entries won every subsequent edition.

2. Natural Language Processing (NLP)

Natural Language Processing (NLP) is another common application of deep models and involves processing and understanding human language. Deep models such as Recurrent Neural Networks (RNNs) and Transformer models have achieved state-of-the-art performance on many NLP tasks such as language modeling, sentiment analysis, and machine translation. For example, GLUE (General Language Understanding Evaluation) is a benchmark of nine English language understanding tasks with a public leaderboard, and deep models, particularly Transformer-based ones, have held the top positions on it.

3. Speech Recognition

Speech recognition is another common application of deep models and involves recognizing and transcribing human speech into text. Deep models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have achieved state-of-the-art performance on many speech tasks such as keyword spotting, speaker identification, and speech-to-text transcription. For example, the LibriSpeech dataset is a collection of roughly 1,000 hours of read English speech taken from audiobooks, and deep models have achieved low word error rates on it.

Challenges and Limitations of Deep Models

1. Overfitting

Overfitting is a common challenge in deep models. It occurs when the model is too complex and fits the training data too well, leading to poor generalization performance on new data. To prevent overfitting, regularization techniques such as L1 and L2 regularization, Dropout, and Early Stopping can be used, as well as data augmentation and model ensembling.
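Of the techniques listed, early stopping is the easiest to show in isolation. The sketch below assumes a list of per-epoch validation losses is already available and uses a "patience" rule, which is one common way to decide when to stop. The function name and the loss values are hypothetical.

```python
def early_stopping_epoch(validation_losses, patience):
    """Return the index of the best epoch seen before stopping.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch


# Hypothetical validation losses: they fall, then rise as the model overfits.
VAL_LOSSES = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.45]

best = early_stopping_epoch(VAL_LOSSES, patience=3)
print(best)  # 3
```

With a patience of 3, training stops after epoch 6 and the weights from epoch 3 would be kept. A patience of 5 would have continued long enough to reach the lower loss at epoch 7, which illustrates the trade-off in choosing this value.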

2. Vanishing Gradient

Vanishing gradients are another common challenge in deep models. They occur when gradients shrink as they are propagated backward through many layers, so the weights and biases of the earliest layers (those closest to the input) receive updates that are too small, leading to slow or no learning. Common remedies include initialization techniques such as Xavier and He initialization, activation functions such as ReLU and its variants, and architectural choices such as residual connections and batch normalization. Adaptive optimization algorithms such as AdaGrad and RMSProp can also help by rescaling each parameter's step size.
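A rough numerical illustration of why the choice of activation function matters: during backpropagation the gradient is multiplied by one activation derivative per layer. The sketch below isolates that factor by assuming all weights equal 1 and the same pre-activation value at every layer. Both are simplifying assumptions, and `DEPTH` and the pre-activation value are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid; never larger than 0.25 (reached at z = 0)."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def gradient_scale(activation_grad, pre_activations):
    """Product of activation derivatives along one path through the layers.

    Weights are taken to be 1 (an assumption) to isolate the activation's effect.
    """
    scale = 1.0
    for z in pre_activations:
        scale *= activation_grad(z)
    return scale


DEPTH = 20
PRE_ACTIVATIONS = [0.5] * DEPTH  # hypothetical positive pre-activation per layer

sigmoid_scale = gradient_scale(sigmoid_grad, PRE_ACTIVATIONS)
relu_scale = gradient_scale(relu_grad, PRE_ACTIVATIONS)

print(sigmoid_scale < 1e-12)  # True: the signal has all but vanished after 20 layers
print(relu_scale)             # 1.0
```

ReLU is not a complete fix, since its derivative is 0 for negative inputs, which is one motivation for variants such as Leaky ReLU.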

3. Interpretability

Interpretability is a major limitation of deep models. It refers to the difficulty of understanding and explaining how the model makes its predictions. Deep models are often viewed as "black boxes" due to their high complexity and non-linearity, and extracting meaningful insights from them can be difficult. However, several techniques, such as visualization, attribution, and explanation methods, are being developed to improve the interpretability of deep models.

FAQs

1. What is a Deep Model in machine learning?

A Deep Model is a machine learning model that uses artificial neural networks with multiple layers to extract high-level features from input data, which often improves prediction accuracy on complex tasks.

2. How do Deep Models differ from shallow models?

Deep Models have multiple hidden layers and can learn complex patterns, while shallow models have fewer layers and limited capacity to capture intricate relationships in data.

3. What are common applications of Deep Models?

Deep Models are used in image and speech recognition, natural language processing, sentiment analysis, and recommendation systems, among other applications.

4. What are the primary challenges in training Deep Models?

Training Deep Models can be challenging due to the large amount of data and computational power required, risk of overfitting, and vanishing or exploding gradient problems.

5. Are Deep Models always better than shallow models?

Deep Models excel in certain applications but aren't always better. Shallow models can be more efficient and just as accurate on simpler tasks, and they need less data and fewer computational resources.