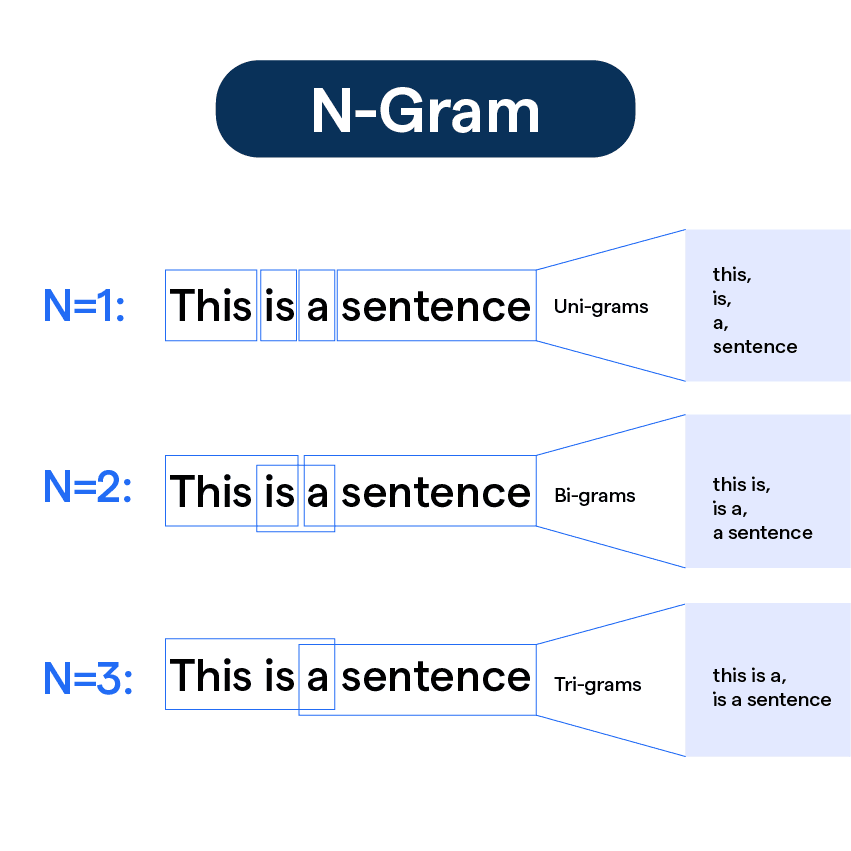

What is an N-Gram?

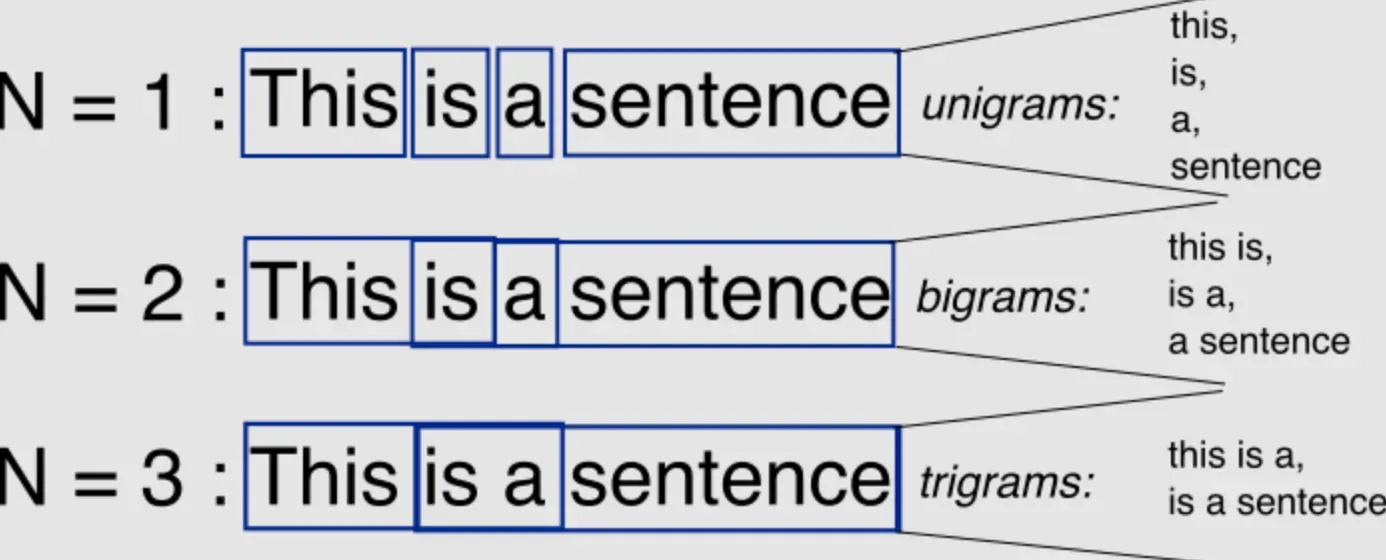

An N-gram is simply a sequence of N words, where N can be any positive integer.

For instance, a two-word sequence like "please turn," "turn your," or "your homework" is called a 2-gram (or bigram), and a three-word sequence like "please turn your" or "turn your homework" is called a 3-gram (or trigram). N-grams are the basic building blocks used in various NLP applications.

What is an N-Gram Model in NLP?

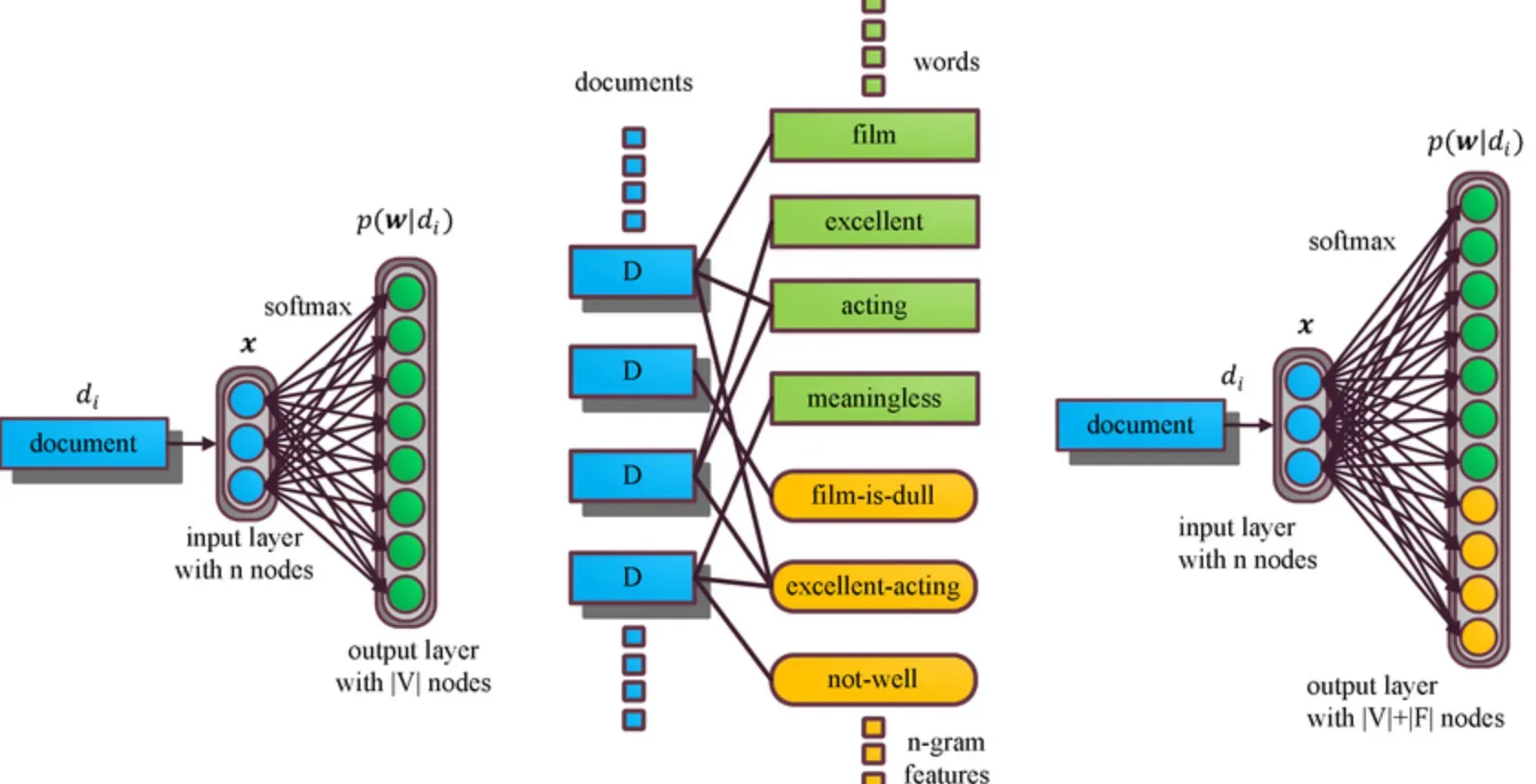

An N-Gram model is a type of Language Model in NLP that focuses on finding the probability distribution over word sequences.

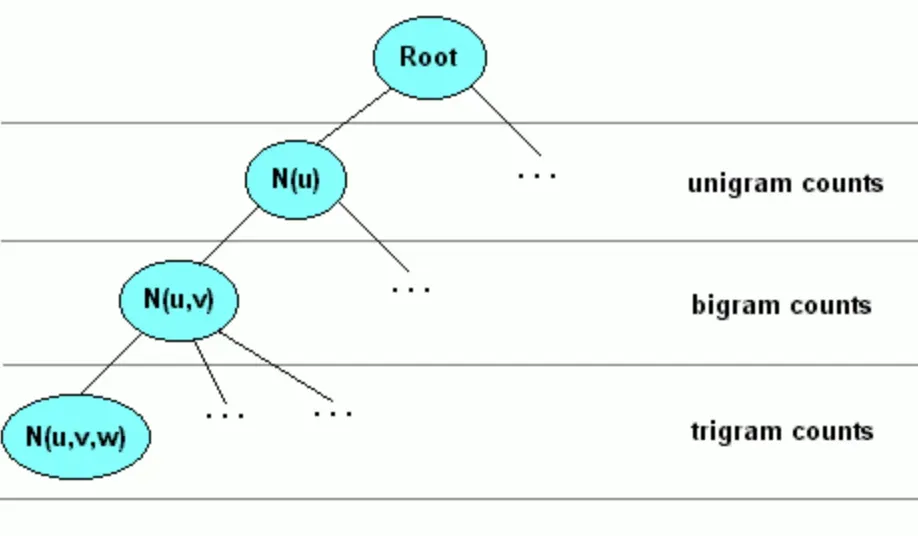

The model is built by counting the frequency of N-grams in corpus text and then estimating the probabilities of words in a sequence.

For instance, given the sentence "The cat ate the white mouse.", what is the probability of "ate the" appearing in the sentence? An N-gram model calculates and provides such probabilities.
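As a rough illustration of how such a probability can be estimated from counts, the sketch below computes the maximum-likelihood estimate P(word | previous word) = count(previous word, word) / count(previous word) on the example sentence. The function name `bigram_probability` and the lowercased, whitespace-split tokens are assumptions made for this example.

```python
from collections import Counter

def bigram_probability(tokens, prev_word, word):
    """Maximum-likelihood estimate of P(word | prev_word) from raw counts."""
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    # Count prev_word only where it is followed by another token.
    context_counts = Counter(tokens[:-1])
    if context_counts[prev_word] == 0:
        return 0.0  # context never seen, so no estimate is available
    return bigram_counts[(prev_word, word)] / context_counts[prev_word]

tokens = "the cat ate the white mouse".split()

# "ate" occurs once and is always followed by "the".
p_the_given_ate = bigram_probability(tokens, "ate", "the")  # 1.0
# "the" occurs twice as a context and is followed by "cat" once.
p_cat_given_the = bigram_probability(tokens, "the", "cat")  # 0.5
```

With a single sentence as the corpus the estimates are extreme; a real model would be counted over a much larger text.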

However, a simple N-gram model has limitations, such as sensitivity to the training data and poor estimates for rare or unseen N-grams, among others.

To overcome these challenges, N-gram models are often improved with smoothing, interpolation, and back-off techniques.
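One of these techniques, linear interpolation, can be sketched in a few lines: the bigram estimate is mixed with the unigram estimate so that an unseen bigram still gets some probability. The function name `interpolated_prob` and the weight `lam=0.7` are assumptions for illustration; in practice the weight is tuned on held-out data.

```python
from collections import Counter

def interpolated_prob(tokens, prev_word, word, lam=0.7):
    """Mix bigram and unigram estimates: lam * P(w | prev) + (1 - lam) * P(w)."""
    unigram_counts = Counter(tokens)
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    context_counts = Counter(tokens[:-1])

    p_unigram = unigram_counts[word] / len(tokens)
    if context_counts[prev_word]:
        p_bigram = bigram_counts[(prev_word, word)] / context_counts[prev_word]
    else:
        # Simplification: an unseen context contributes nothing from the bigram term.
        p_bigram = 0.0
    return lam * p_bigram + (1 - lam) * p_unigram

tokens = "the cat ate the white mouse".split()

# "cat mouse" never occurs, so the bigram term is 0,
# but the unigram term keeps the probability above zero.
p = interpolated_prob(tokens, "cat", "mouse")
```

Back-off works in a similar spirit, except that the lower-order estimate is used only when the higher-order count is missing.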

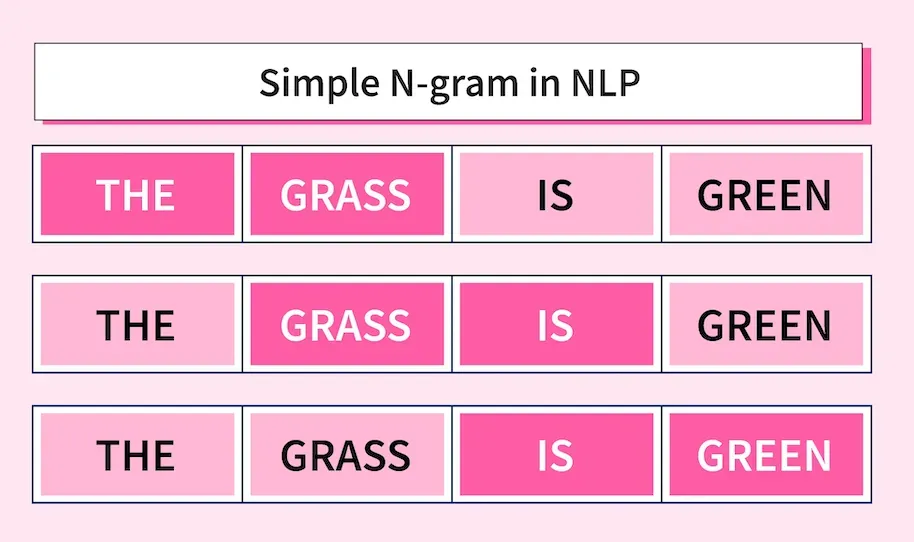

How do N-Grams Work?

N-Grams are constructed by sliding a window of length 'n' across a text one token at a time, so consecutive N-grams overlap. If 'n' is 1, each word becomes a unigram. If 'n' is 2, adjacent pairs form bigrams, and so on.

Consider this sentence: "I like to play". In a bigram model, the sentence is divided as follows: ["I like", "like to", "to play"]
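The same split can be reproduced with a short helper. This is a minimal sketch: the name `ngrams` is chosen for this example and tokens are produced by a plain whitespace split.

```python
def ngrams(tokens, n):
    """Return all overlapping n-grams of a token list as tuples."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    # Slide a window of width n across the tokens, one position at a time.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "I like to play".split()

unigrams = ngrams(tokens, 1)  # [('I',), ('like',), ('to',), ('play',)]
bigrams = ngrams(tokens, 2)   # [('I', 'like'), ('like', 'to'), ('to', 'play')]
trigrams = ngrams(tokens, 3)  # [('I', 'like', 'to'), ('like', 'to', 'play')]
```

A text with fewer than n tokens yields an empty list.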

N-Grams are pivotal in applications like speech recognition, autocomplete functionality, and machine translation. They help imbue a sense of 'context' to a sequence of words, allowing language-based prediction models to operate with enhanced accuracy.

How do N-Grams Work Operationally?

Creating an N-Gram model revolves around four key steps:

- Tokenization: The given text data is broken down into tokens (individual words).

- Building N-Grams: These tokens are then grouped together to form N-Grams of a specified length.

- Counting Frequencies: Next, the frequency of each N-Gram in the text is calculated for understanding the data's structure.

- Application: Finally, these frequency distributions are leveraged for various NLP tasks, be it completing a sentence, suggesting next words, or even detecting the language of the text.
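The first three steps above can be sketched as follows. The helper names (`tokenize`, `build_ngrams`, `count_ngrams`) and the regular-expression tokenizer are assumptions made for this example; real pipelines often use a dedicated tokenizer and handle sentence boundaries explicitly.

```python
import re
from collections import Counter

def tokenize(text):
    """Step 1: lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def build_ngrams(tokens, n):
    """Step 2: group tokens into overlapping n-grams."""
    return list(zip(*(tokens[i:] for i in range(n))))

def count_ngrams(text, n):
    """Step 3: count how often each n-gram occurs."""
    return Counter(build_ngrams(tokenize(text), n))

text = "Please turn your homework in. Please turn your phone off."
counts = count_ngrams(text, 2)

# ("please", "turn") and ("turn", "your") each occur twice.
# Note: this simple tokenizer ignores sentence boundaries,
# so ("in", "please") is also counted as a bigram.
please_turn = counts[("please", "turn")]
```

The resulting frequency table is what the application step consumes, for example to rank likely next words.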

Applications of N-Grams

N-grams are used in many NLP applications because of their ability to detect and leverage meaningful word sequences. Applications of N-grams include:

Auto-Completion of Sentences

N-grams are used for text prediction or auto-completion to suggest the next word in a sentence.

By calculating the probability of a sequence of words appearing together, the N-gram model can predict what will most likely come next in the sentence.
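A minimal sketch of next-word suggestion with a bigram model is shown below. The function names `train_bigram_model` and `suggest_next` and the toy corpus are made up for this example; a production system would train on far more text and usually on longer contexts.

```python
from collections import Counter, defaultdict

def train_bigram_model(tokens):
    """Map each word to a Counter of the words that follow it."""
    followers = defaultdict(Counter)
    for prev_word, word in zip(tokens, tokens[1:]):
        followers[prev_word][word] += 1
    return followers

def suggest_next(followers, prev_word, k=3):
    """Return up to k most frequent followers of prev_word."""
    # .get avoids inserting unseen words into the defaultdict.
    candidates = followers.get(prev_word, Counter())
    return [word for word, _ in candidates.most_common(k)]

corpus = "i like to play chess . i like to read . i like tea .".split()
model = train_bigram_model(corpus)

after_like = suggest_next(model, "like")    # "to" ranks first (seen twice)
after_unknown = suggest_next(model, "xyz")  # [] because the context is unseen
```

Ranking by raw follower counts is equivalent to ranking by the conditional probability P(word | previous word), since the denominator is the same for every candidate.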

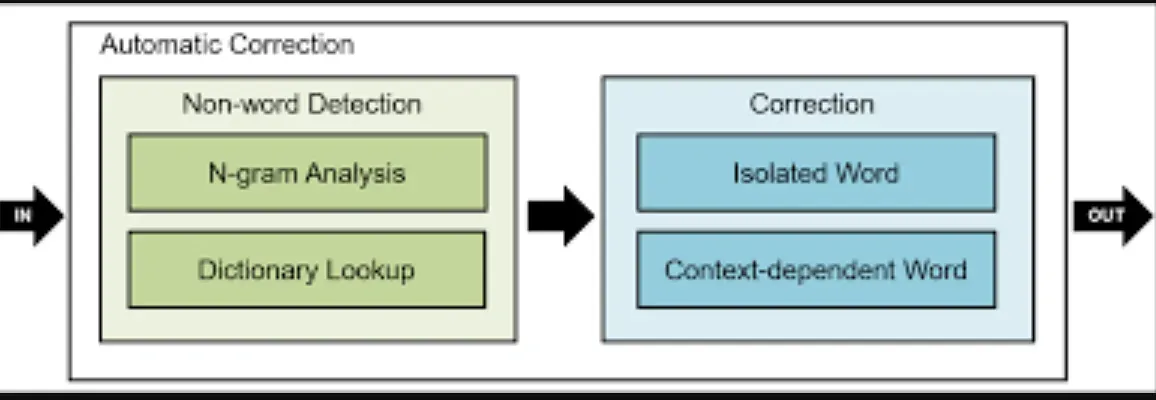

Auto Spell Check

N-Grams are often used in spell-checking and correction in word processors and search engines, among other applications.

The N-gram model can recommend a correction by comparing how probable each candidate word is given the previous N-1 words.

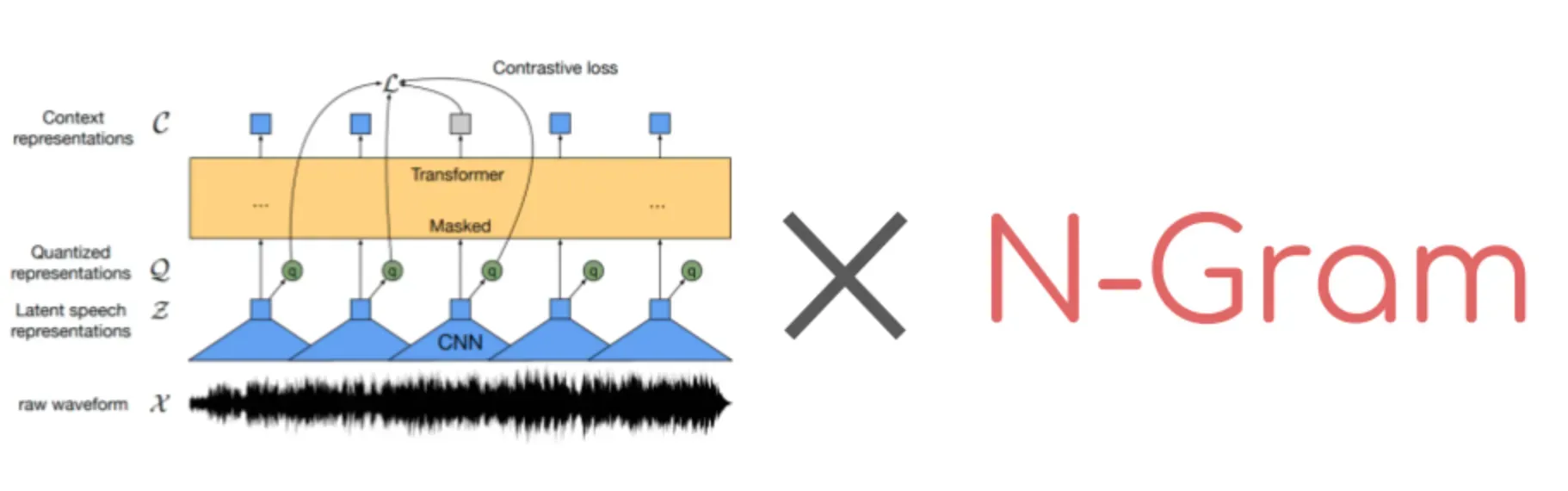

Speech Recognition

N-Gram models are used in audio-to-text conversion to improve accuracy in speech recognition.

The model can rectify speech-to-text conversion errors based on its knowledge of the probabilities and the context of previous words.

Machine Translation

N-gram models are employed in machine translation to produce more natural-sounding output in the target language.

In statistical machine translation, the N-gram language model scores candidate translations, favoring word sequences that are probable given the previous N-1 words.

Grammar Checking

N-Gram models can also be used for grammar checking. The model detects if there is an error of omission, e.g., a missing auxiliary verb, and suggests probable corrections that match the preceding context.

N-Gram Models in Machine Translation

N-gram language models have been a core component of statistical machine translation systems, which translate text from a source language to a target language.

Rather than translating on their own, they score candidate translations by how probable each word is given the previous N-1 words in the target language.

By capturing local patterns such as common word order and short-range agreement, N-gram models help the system choose fluent output and overcome some of the limitations of traditional rule-based translation systems. They cannot, however, model dependencies that span more than N-1 words.

N-Grams for Spelling Error Correction

N-Gram models can help in correcting misspelled words. By calculating the probability of each word based on its context and frequency of occurrence in the corpus, the model can suggest a correction for a misspelled word.

Dictionary lookups alone often fail here, because a misspelling can itself be a valid word (for example, "their" typed in place of "there"), and only the surrounding context reveals the error.
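The idea of using context to choose between similar-looking words can be sketched with bigram counts. The function name `pick_correction`, the toy corpus, and the candidate list are assumptions for illustration; generating the candidates (for example by edit distance) is outside the scope of this sketch.

```python
from collections import Counter

def pick_correction(bigram_counts, prev_word, candidates):
    """Choose the candidate seen most often after prev_word."""
    return max(candidates, key=lambda c: bigram_counts[(prev_word, c)])

corpus = "they left their books over there . their house is there".split()
bigram_counts = Counter(zip(corpus, corpus[1:]))

candidates = ["there", "their"]

# After "over", the corpus supports "there".
fix_after_over = pick_correction(bigram_counts, "over", candidates)
# After "left", the corpus supports "their".
fix_after_left = pick_correction(bigram_counts, "left", candidates)
```

When no candidate has been seen after the previous word, all counts are zero and `max` simply returns the first candidate, so a real system would fall back to word frequency or a smoothed estimate.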

N-Grams at Different Levels

N-grams can be used not only at the word level but also at the character level, forming sequences of n characters (n-grams).

Character-level N-grams are useful for word completion, auto-suggestion, typo correction, and root-word separation.
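A character-level version looks much like the word-level one, with a string in place of a token list. The sketch below also compares two words by the overlap of their character bigrams (Jaccard similarity), which is one simple way such N-grams can support typo correction; the names `char_ngrams` and `jaccard` are assumptions for this example.

```python
def char_ngrams(word, n):
    """Return all overlapping character n-grams of a word."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]

def jaccard(a, b):
    """Share of distinct items that two collections have in common."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

trigrams = char_ngrams("night", 3)  # ['nig', 'igh', 'ght']

# A typo shares more character bigrams with the intended word
# than with an unrelated one.
close = jaccard(char_ngrams("nigth", 2), char_ngrams("night", 2))
far = jaccard(char_ngrams("nigth", 2), char_ngrams("table", 2))
```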

N-Grams for Language Classification and Spelling

N-Gram statistics can be used in many ways to classify languages or differentiate between US and UK spellings. Language classification is useful in chatbots, where the bot adapts to the user's preferred language.

For spelling, the N-gram model can differentiate between different spellings of the same word in British English and American English.
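A toy version of language classification with character trigrams might look like the sketch below. The two one-sentence training samples, the function names, and the scoring rule (count how many trigrams of the input appear in each language profile) are all simplifying assumptions; practical classifiers use much larger profiles and probabilistic or rank-based scoring.

```python
from collections import Counter

def char_trigrams(text):
    """Character trigrams of lowercased, whitespace-normalized text."""
    text = " ".join(text.lower().split())
    return [text[i:i + 3] for i in range(len(text) - 2)]

def build_profiles(samples):
    """Build one trigram-count profile per language label."""
    return {label: Counter(char_trigrams(text)) for label, text in samples.items()}

def classify(text, profiles):
    """Pick the label whose profile contains the most trigrams of the text."""
    grams = char_trigrams(text)
    scores = {
        label: sum(1 for g in grams if g in profile)
        for label, profile in profiles.items()
    }
    return max(scores, key=scores.get)

# Tiny hypothetical training samples, for illustration only.
samples = {
    "english": "the quick brown fox jumps over the lazy dog and the cat",
    "spanish": "el rapido zorro marron salta sobre el perro perezoso y el gato",
}
profiles = build_profiles(samples)

guess_en = classify("the dog and the fox", profiles)
guess_es = classify("el gato y el perro", profiles)
```

The same approach could in principle separate US and UK spellings, given profiles built from text in each variety.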

Evaluating N-Gram Models

N-gram models can be evaluated through intrinsic or extrinsic assessment methods. Intrinsic evaluation measures the quality of the model directly, independent of any application, typically by computing a metric such as perplexity on held-out test data.

Extrinsic evaluation is an end-to-end method that involves the integration of N-gram models into an application and evaluating the performance of the app.

Perplexity as a Metric for N-Gram Models

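Perplexity is a popular intrinsic evaluation metric for N-gram models. It is the inverse probability the model assigns to a held-out test set, normalized by the number of words, so it measures how well the model predicts unseen text.

Lower perplexity values indicate that the N-gram model is better at predicting the next word in the test data.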
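As a rough sketch, perplexity for a bigram model can be computed as the exponential of the negative average log-probability of the test bigrams. The example below uses add-one smoothing so that unseen bigrams do not produce a zero probability, and it includes the test words in the vocabulary; both are simplifying assumptions, as is the function name `bigram_perplexity`.

```python
import math
from collections import Counter

def bigram_perplexity(train_tokens, test_tokens):
    """Perplexity of test_tokens under an add-one-smoothed bigram model."""
    if len(test_tokens) < 2:
        raise ValueError("need at least two test tokens to form a bigram")
    # Simplification: the vocabulary includes words from the test text.
    vocab_size = len(set(train_tokens) | set(test_tokens))
    bigram_counts = Counter(zip(train_tokens, train_tokens[1:]))
    context_counts = Counter(train_tokens[:-1])

    log_prob = 0.0
    n_bigrams = 0
    for prev_word, word in zip(test_tokens, test_tokens[1:]):
        p = (bigram_counts[(prev_word, word)] + 1) / (context_counts[prev_word] + vocab_size)
        log_prob += math.log(p)
        n_bigrams += 1
    return math.exp(-log_prob / n_bigrams)

train = "i like to play . i like to read .".split()

familiar = bigram_perplexity(train, "i like to play".split())
scrambled = bigram_perplexity(train, "play to like i".split())
# The word order seen in training gets the lower (better) perplexity.
```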

Challenges in Using N-Grams

Sensitivity to the Training Corpus

N-Gram models' performance is significantly dependent on the training corpus, meaning that the probabilities often encode particular facts about a given training corpus.

As a result, the performance of the N-gram model varies with the N-value and the data it was trained on.

Smoothing and Sparse Data

Sparse data is a common challenge in N-Gram models. An N-gram that appears often enough in the training corpus gets a reasonable probability estimate, but any N-gram that never appears is assigned a probability of zero.

Because every corpus is limited, some perfectly acceptable word sequences are bound to be missing from it. Smoothing is the primary technique for handling these zeros: it shifts a small amount of probability mass from seen N-grams to unseen ones.
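The simplest form of smoothing is add-one (Laplace) smoothing, sketched below for bigrams: every bigram count is increased by 1 and the denominator grows by the vocabulary size, so the probabilities for each context still sum to 1. The function name `laplace_bigram_prob` is an assumption for this example, and other smoothing methods generally perform better in practice.

```python
from collections import Counter

def laplace_bigram_prob(tokens, prev_word, word):
    """Add-one smoothed estimate of P(word | prev_word)."""
    vocab_size = len(set(tokens))
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    context_counts = Counter(tokens[:-1])
    return (bigram_counts[(prev_word, word)] + 1) / (context_counts[prev_word] + vocab_size)

tokens = "the cat ate the white mouse".split()

# Seen bigram: (1 + 1) / (2 + 5) = 2/7
p_seen = laplace_bigram_prob(tokens, "the", "cat")
# Unseen bigram: (0 + 1) / (1 + 5) = 1/6 instead of 0
p_unseen = laplace_bigram_prob(tokens, "cat", "mouse")
```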

So there you have it - a comprehensive glossary page that covers everything you need to know about N-grams.

Use N-grams with caution, be mindful of the challenges and limitations and, most importantly, have fun creating intelligent chatbots, spam filters, auto-corrects and more with N-grams!

Frequently Asked Questions (FAQs)

How are N-grams commonly used in text prediction?

N-grams are used in text prediction by analyzing the frequency of occurrence of N-grams in a given dataset.

This information is then utilized to predict the most likely next word or sequence of words based on the context.

Can N-grams handle different languages?

Yes, N-grams can be used with any language as they rely on the statistical analysis of sequences of words or characters.

However, the effectiveness of N-grams may vary depending on the complexity and structure of the language being analyzed.

What challenges can arise when using N-grams?

Some challenges when using N-grams include the exponential growth in the number of possible N-grams as the value of N increases, the sparsity of certain N-grams in the dataset, and the limitation in capturing long-range dependencies in language.
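To get a feel for that growth, the number of possible N-grams over a vocabulary of V words is V to the power N. The vocabulary size of 10,000 below is a hypothetical figure chosen only for illustration.

```python
def possible_ngrams(vocab_size, n):
    """Number of distinct n-grams that can be formed from vocab_size words."""
    return vocab_size ** n

vocab_size = 10_000  # hypothetical vocabulary size

for n in (1, 2, 3):
    print(n, possible_ngrams(vocab_size, n))
# 1 10000
# 2 100000000
# 3 1000000000000
```

Most of these combinations never occur in any realistic corpus, which is why sparsity becomes more severe as N increases.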

How can N-grams be used in spam detection?

N-grams can be helpful in spam detection by analyzing the frequency of occurrence of specific N-grams or patterns associated with spam messages.

This information can be used to identify and filter out potential spam emails or messages.

Are there any limitations to using N-grams?

Yes, N-grams have limitations. They may struggle with capturing semantics and context in natural language, especially for tasks like sentiment analysis.

Additionally, N-grams can be memory-intensive, and the choice of N value can significantly impact performance and accuracy.