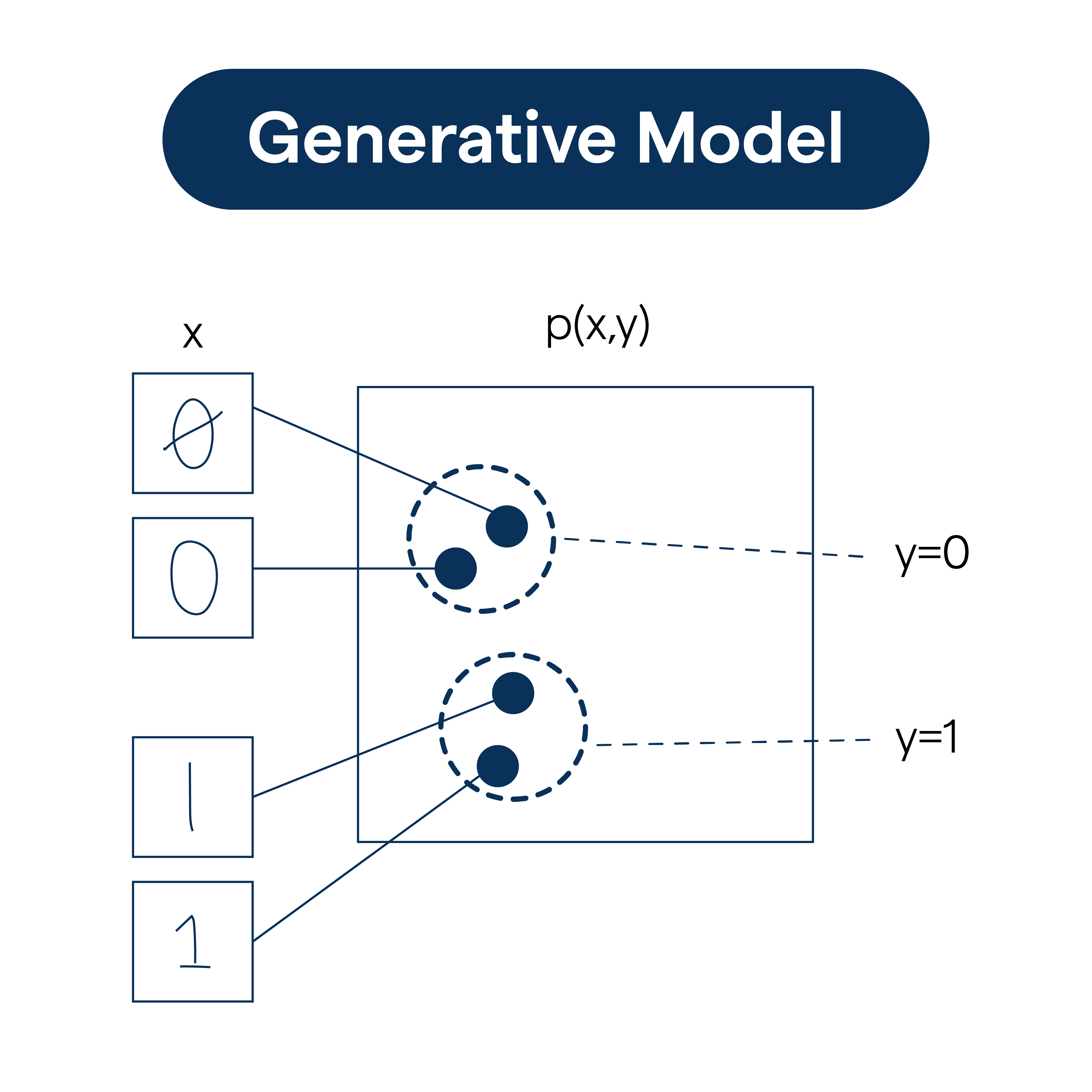

What is a Generative Model?

Generative models learn the patterns in input data so that they can produce new data. They aim to capture the underlying probability distribution of the training set, so that the samples they generate share its characteristics.

Applications of Generative Models

Beyond creating new data, generative models are applied in fields such as image processing, natural language processing, and voice generation. Typical tasks include denoising (for example, with denoising autoencoders), super-resolution, and text-to-speech conversion.

Subcategories of Generative Models

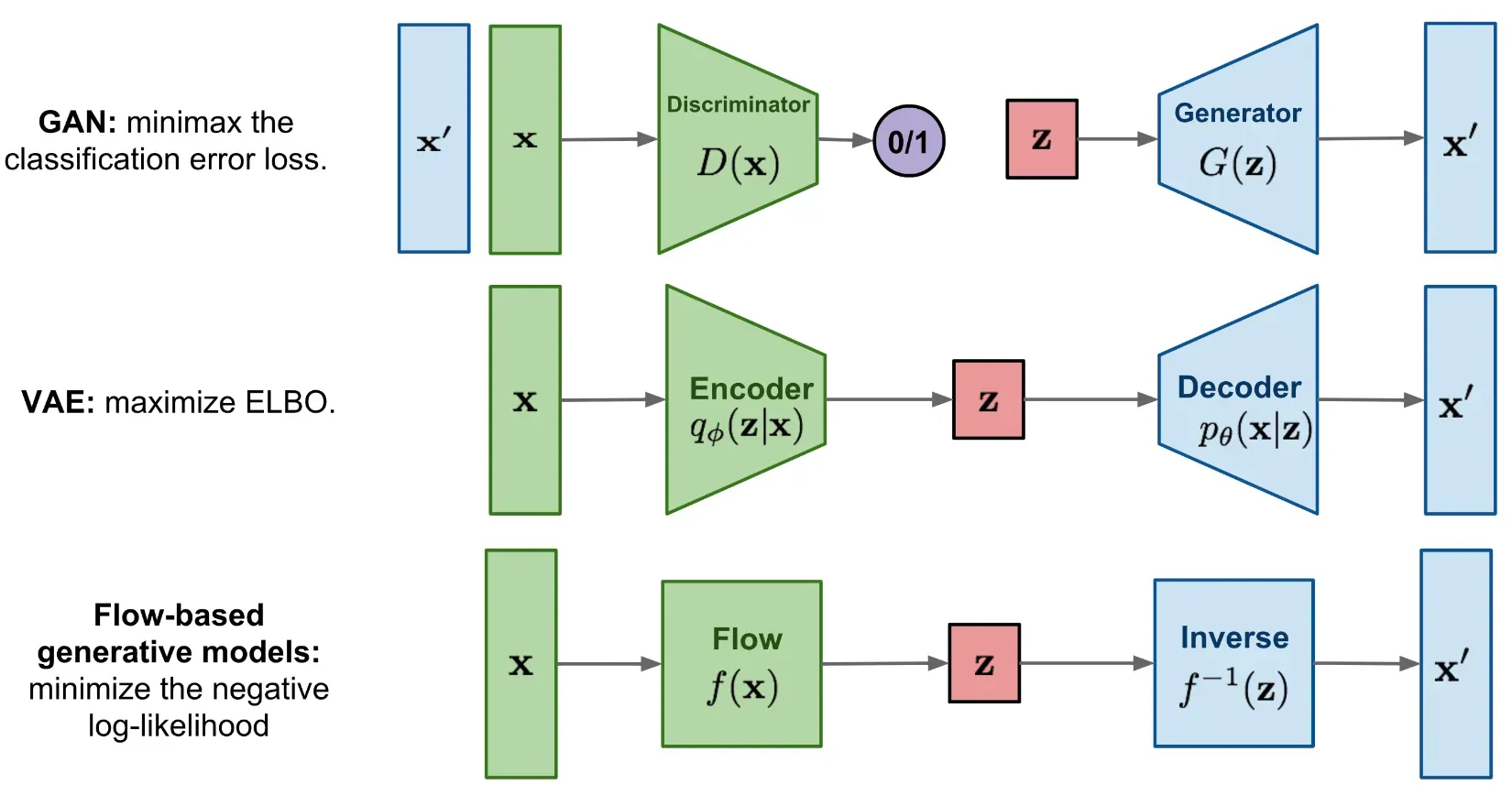

Generative models can be divided into two main types. Explicit density models, such as Variational Autoencoders (VAEs), define a parametric probability density over the data that can be evaluated or bounded. Implicit density models, such as Generative Adversarial Networks (GANs), learn a procedure for sampling from the data distribution without ever writing down its density.

Training a Generative Model

Training means feeding input data to a generative model and estimating its parameters from that data, typically by maximizing the likelihood of the data or by optimizing a related objective. The aim is for the model to capture the underlying distribution so it can generate new samples.
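As a minimal sketch of what estimating the parameters means, the example below assumes one-dimensional data and uses a single Gaussian as the model family, a deliberately simple stand-in for a neural network. Training then reduces to maximum-likelihood estimation of the mean and standard deviation, and generation is just sampling from the fitted distribution. The data-generating values (mean 5, standard deviation 2) and all function names are illustrative assumptions.

```python
import math
import random

def fit_gaussian(data):
    """Maximum-likelihood estimates of a 1-D Gaussian's mean and standard deviation."""
    n = len(data)
    mu = sum(data) / n
    # The maximum-likelihood variance divides by n, not n - 1.
    var = sum((x - mu) ** 2 for x in data) / n
    return mu, math.sqrt(var)

def log_likelihood(data, mu, sigma):
    """Total log-likelihood of the data under N(mu, sigma^2)."""
    return sum(
        -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)
        for x in data
    )

def sample(mu, sigma, n, rng):
    """Generate n new data points from the fitted model."""
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(0)
training_data = [rng.gauss(5.0, 2.0) for _ in range(1000)]  # synthetic training set
mu, sigma = fit_gaussian(training_data)                     # "training"
new_points = sample(mu, sigma, 5, rng)                      # "generation"
print(f"fitted mu={mu:.2f}, sigma={sigma:.2f}")
print("new samples:", [round(x, 2) for x in new_points])
```

Neural generative models replace these closed-form estimates with gradient-based optimization of network weights, but the fit-then-sample pattern is the same.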

Evaluating Generative Models

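Generative models are typically evaluated by comparing generated data with real data. Common metrics include Inception Score (IS), which scores generated images with a pretrained Inception classifier; Fréchet Inception Distance (FID), which compares feature statistics of real and generated images; and Perceptual Path Length (PPL), which measures how smoothly outputs change as the latent code is interpolated.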
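To make the idea behind FID concrete, here is a toy sketch of the Fréchet distance between two Gaussians fitted to feature vectors. Real FID fits full-covariance Gaussians to features extracted by a pretrained Inception-v3 network. This sketch instead assumes the features are already given (here, synthetic random vectors) and simplifies to diagonal covariances, for which the distance reduces to the squared difference of means plus the squared difference of standard deviations, summed over dimensions. The function names are hypothetical.

```python
import math
import random

def mean_and_std(vectors):
    """Per-dimension mean and population standard deviation of a list of feature vectors."""
    n, d = len(vectors), len(vectors[0])
    means = [sum(v[j] for v in vectors) / n for j in range(d)]
    stds = [math.sqrt(sum((v[j] - means[j]) ** 2 for v in vectors) / n) for j in range(d)]
    return means, stds

def frechet_distance_diag(real_feats, gen_feats):
    """Squared Frechet distance between Gaussians fitted to two feature sets,
    assuming diagonal covariances: ||mu_r - mu_g||^2 + sum_j (sd_r_j - sd_g_j)^2."""
    mu_r, sd_r = mean_and_std(real_feats)
    mu_g, sd_g = mean_and_std(gen_feats)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    cov_term = sum((a - b) ** 2 for a, b in zip(sd_r, sd_g))
    return mean_term + cov_term

rng = random.Random(0)
real = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2000)]
good_gen = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2000)]  # matches real stats
poor_gen = [[rng.gauss(1, 2) for _ in range(4)] for _ in range(2000)]  # shifted and wider
print(frechet_distance_diag(real, good_gen))  # close to 0
print(frechet_distance_diag(real, poor_gen))  # roughly 8: 4 dims x ((1 - 0)^2 + (2 - 1)^2)
```

Lower is better: identical feature statistics give a distance of 0.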

Variational Autoencoders (VAEs)

This section covers one of the core types of generative models: the Variational Autoencoder.

Basic Concept of VAEs

VAEs are latent-variable generative models: they assume each data point is generated from an unobserved latent variable, and they model the noise in this generation process explicitly. They are built from neural networks and use techniques from variational inference to model complex, high-dimensional data.

Architecture of a VAE

A VAE comprises two main parts: an encoder that maps each input to a distribution over a latent space (typically the mean and variance of a Gaussian), and a decoder that reconstructs the original data from a latent code sampled from that distribution. This sampling step forms a probabilistic bottleneck that characterizes the distribution of the latent variables.
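The following sketch traces a single input through the encoder, the sampling step, and the decoder. The encoder and decoder are hypothetical fixed linear maps standing in for neural networks, and making the latent size equal to the input size is an arbitrary choice. The sampling step uses the reparameterization trick, z = mu + sigma * eps with eps drawn from a standard normal, which is how VAEs keep the bottleneck differentiable with respect to mu and sigma.

```python
import math
import random

def encode(x):
    """Hypothetical toy encoder: returns the mean and log-variance of a
    diagonal Gaussian over the latent space."""
    mu = [0.5 * xi for xi in x]
    logvar = [-1.0 for _ in x]
    return mu, logvar

def reparameterize(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)
    and sigma = exp(0.5 * logvar)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def decode(z):
    """Hypothetical toy decoder: maps a latent code back to data space."""
    return [2.0 * zi for zi in z]

rng = random.Random(42)
x = [1.0, -2.0, 0.5]
mu, logvar = encode(x)
z = reparameterize(mu, logvar, rng)  # a random point from the probabilistic bottleneck
x_hat = decode(z)
print("latent code:", z)
print("reconstruction:", x_hat)
```

Because the randomness enters only through eps, gradients of a loss on x_hat can flow back through mu and logvar into the encoder.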

Training a VAE

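Training a VAE minimizes a loss with two terms: a reconstruction loss, which measures the difference between the output and the original data, and the KL divergence between the latent distribution learnt by the encoder and the prior (usually a standard Gaussian). Together, these terms correspond to the negative of the evidence lower bound (ELBO) on the data log-likelihood.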
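A minimal sketch of the two loss terms, assuming the encoder outputs a diagonal Gaussian (mean mu and log-variance logvar), the prior is a standard Gaussian, and squared error is used as the reconstruction loss (which corresponds to a Gaussian decoder, up to constants). The KL term uses the closed form available for this pair of Gaussians. The input values are made up for illustration.

```python
import math

def reconstruction_loss(x, x_hat):
    """Sum of squared errors between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, logvar))

def vae_loss(x, x_hat, mu, logvar):
    """Negative ELBO (up to constants): reconstruction term plus KL term."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, logvar)

x = [1.0, 0.0, -1.0]      # original input
x_hat = [0.9, 0.1, -0.8]  # decoder output
mu = [0.2, -0.1]          # encoder mean
logvar = [-0.5, 0.3]      # encoder log-variance
print(vae_loss(x, x_hat, mu, logvar))
```

The KL term is zero only when the encoder outputs exactly the prior (mu = 0, logvar = 0), so it pulls latent codes toward the prior while the reconstruction term pulls them toward being informative.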

Use Cases of VAEs

VAEs are used in applications such as image synthesis, anomaly detection, and drug discovery. They are valued for learning a continuous, structured latent space in which nearby points decode to similar outputs, and which can be sampled to create new structured outputs.

Advantages and Limitations of VAEs

While VAEs are good at generating smooth, spatially coherent images, their outputs are often blurrier than those of other generative models such as GANs.

Generative Adversarial Networks (GANs)

Another notable family of generative models is Generative Adversarial Networks (GANs).

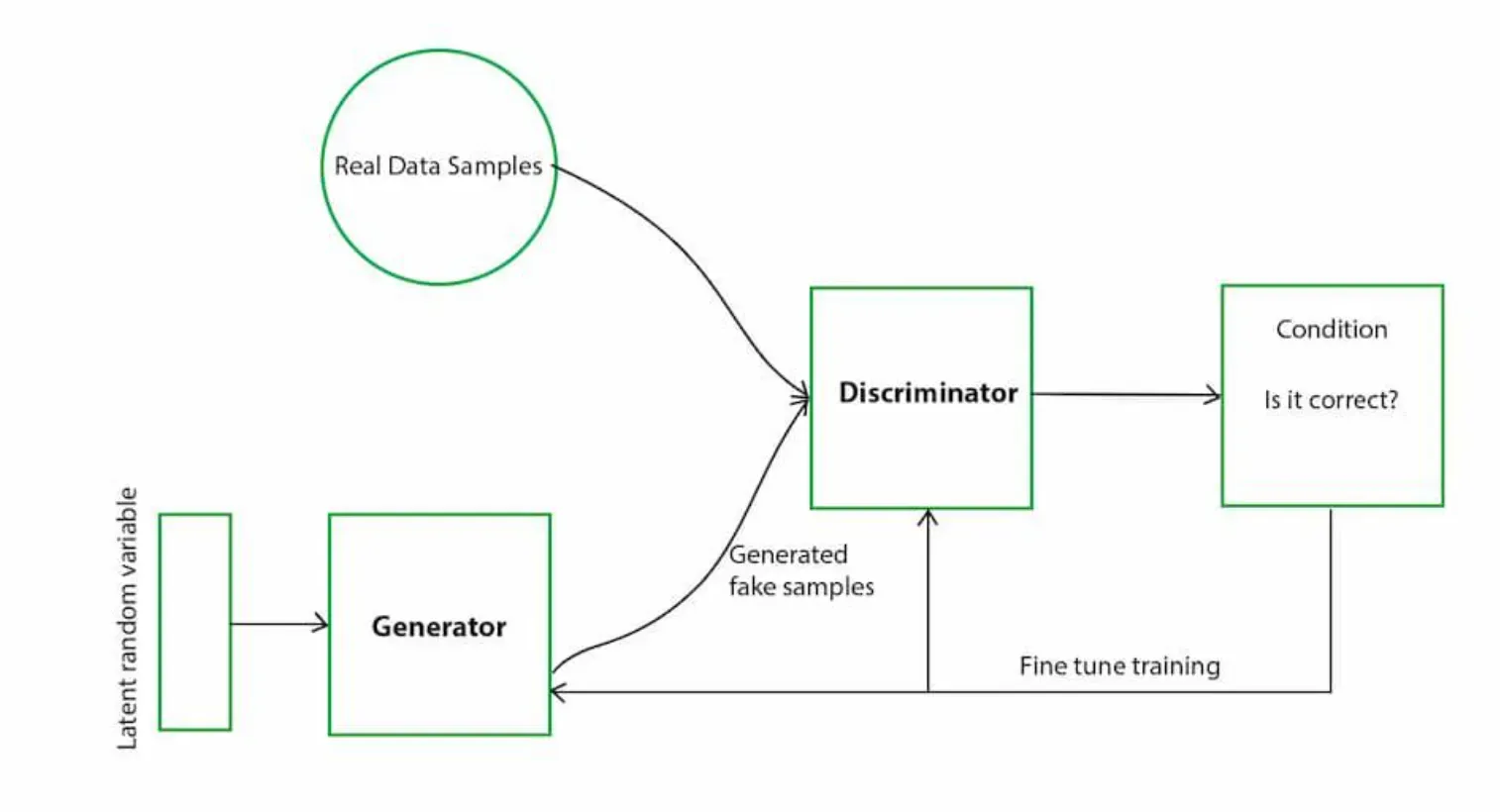

Basic Idea of GANs

GANs are based on game theory: two neural networks play a game against each other. A generator network tries to create data that a discriminator network cannot distinguish from real data, while the discriminator tries to tell real and generated data apart.

Architecture of a GAN

The architecture of a GAN includes a generator network, which takes random noise as input and outputs a data instance, and a discriminator network, which classifies its input as real or fake.

Training a GAN

The training of a GAN involves optimizing a two-player minimax game, where the generator aims to fool the discriminator by generating high-quality data, while the discriminator strives to distinguish the generator's output from the real data.
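The sketch below evaluates the minimax objective V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))] on a minibatch, using hypothetical toy models: a linear generator a*z + b and a logistic discriminator sigmoid(w*x + c). The discriminator minimizes -V (equivalently, maximizes V); the generator minimizes the term that depends on it. Drawing the real data from N(4, 1) is an arbitrary assumption.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def generator(z, a, b):
    """Hypothetical toy generator: turns noise z into a fake sample a*z + b."""
    return a * z + b

def discriminator(x, w, c):
    """Hypothetical toy discriminator: estimated probability that x is real."""
    return sigmoid(w * x + c)

def discriminator_loss(real, fake, w, c):
    """-V(D, G) on a minibatch. Minimizing it pushes D(real) toward 1 and D(fake) toward 0."""
    real_term = sum(math.log(discriminator(x, w, c)) for x in real) / len(real)
    fake_term = sum(math.log(1.0 - discriminator(x, w, c)) for x in fake) / len(fake)
    return -(real_term + fake_term)

def generator_loss(fake, w, c):
    """Minimax generator objective E[log(1 - D(G(z)))]. The generator minimizes it,
    i.e. it tries to make D assign a high 'real' probability to its fakes."""
    return sum(math.log(1.0 - discriminator(x, w, c)) for x in fake) / len(fake)

rng = random.Random(0)
real_batch = [rng.gauss(4.0, 1.0) for _ in range(64)]     # "real" data ~ N(4, 1)
noise = [rng.gauss(0.0, 1.0) for _ in range(64)]          # generator input noise
fake_batch = [generator(z, a=1.0, b=0.0) for z in noise]  # untrained generator

print("D loss:", discriminator_loss(real_batch, fake_batch, w=1.0, c=-2.0))
print("G loss:", generator_loss(fake_batch, w=1.0, c=-2.0))
```

In practice, the generator is often trained to maximize log D(G(z)) instead of minimizing log(1 - D(G(z))), a variant suggested in the original GAN paper, because the minimax form gives weak gradients early in training when the discriminator easily rejects fakes.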

Use Cases of GANs

GANs can generate realistic images from random noise, perform style transfer, increase image resolution (super-resolution), and even generate realistic human faces. They are valued for their ability to produce high-quality, realistic images.

Advantages and Limitations of GANs

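While GANs can generate high-quality images, they often suffer from training instability, mode collapse (the generator produces only a few kinds of output and misses much of the data's variety), and the difficulty of choosing a loss function that trains reliably.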

Model-based Generative Deep Learning Models

Model-based generative deep learning models are another family worth exploring in the landscape of generative models.

Overview of Model-based Generative Models

These models learn from a large number of training samples in order to generate new data instances. They model the underlying statistical structure of the data to recreate realistic samples.

Architecture of Model-based Generative Models

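An essential feature of this architecture is a generative network that explicitly models the probability distribution of the data. In a Restricted Boltzmann Machine, for example, a layer of visible units (the data) is connected to a layer of hidden units, and the network defines the conditional distribution of each layer given the other.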

Training Model-based Generative Models

Training typically maximizes the likelihood of the observed data using stochastic gradient ascent. For Restricted Boltzmann Machines (RBMs), the exact likelihood gradient is intractable, so it is approximated with Contrastive Divergence (CD) or Persistent Contrastive Divergence (PCD).
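A minimal sketch of CD-1 for a binary RBM in pure Python, assuming a toy dataset of two hypothetical 4-bit patterns. Each update compares statistics under the data (positive phase) with statistics after a single Gibbs step (negative phase); that difference is the Contrastive Divergence approximation of the likelihood gradient. Layer sizes, learning rate, and epoch count are arbitrary choices.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

class RBM:
    """A tiny binary Restricted Boltzmann Machine trained with CD-1 (illustrative sketch)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        """p(h_j = 1 | v) for every hidden unit j."""
        return [sigmoid(self.b[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b))]

    def visible_probs(self, h):
        """p(v_i = 1 | h) for every visible unit i."""
        return [sigmoid(self.a[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.a))]

    def sample(self, probs):
        """Draw binary states from independent Bernoulli probabilities."""
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        """One Contrastive Divergence (k = 1) update from a single training vector."""
        ph0 = self.hidden_probs(v0)               # positive phase statistics
        h0 = self.sample(ph0)
        v1 = self.sample(self.visible_probs(h0))  # one Gibbs step: the "reconstruction"
        ph1 = self.hidden_probs(v1)               # negative phase statistics
        for i in range(len(self.a)):
            for j in range(len(self.b)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.a[i] += lr * (v0[i] - v1[i])
        for j in range(len(self.b)):
            self.b[j] += lr * (ph0[j] - ph1[j])

def reconstruction_error(model, vectors):
    """Mean squared error between inputs and their mean-field reconstructions."""
    total = 0.0
    for v in vectors:
        recon = model.visible_probs(model.hidden_probs(v))
        total += sum((x - r) ** 2 for x, r in zip(v, recon))
    return total / len(vectors)

rng = random.Random(0)
data = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]] * 50  # two hypothetical binary patterns
rbm = RBM(n_visible=4, n_hidden=2, rng=rng)
error_before = reconstruction_error(rbm, data)
for epoch in range(30):
    for v in data:
        rbm.cd1_update(v)
error_after = reconstruction_error(rbm, data)
print(f"reconstruction error: {error_before:.3f} -> {error_after:.3f}")
```

Persistent Contrastive Divergence differs mainly in that the negative-phase Gibbs chain is kept running between updates instead of being restarted from the training vector each time.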

Use Cases of Model-based Generative Models

Model-based generative models are mostly used for tasks such as image and character recognition, modelling motion-capture data, and collaborative filtering.

Advantages and Limitations of Model-based Generative Models

Model-based generative models can represent complex distributions succinctly, but they require a significant number of training samples, real or synthetically generated, to produce accurate results.

Flow-based Generative Models

Flow-based generative models harness the power of invertible transformations for efficient exact log-likelihood computation and latent-variable manipulation.

Basic Concept of Flow-based Models

In flow-based generative models, a simple probability distribution is transformed into a complex one using a sequence of invertible functions, or flows. Because each function is invertible and has a tractable Jacobian determinant, the model supports both efficient sampling and exact density evaluation, which is a significant advantage for generative modelling.
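The exact density evaluation rests on the change-of-variables formula. As a sketch in notation introduced here for illustration, write $f$ for the learnt invertible map from data $x$ to latent $z = f(x)$ (so generation applies $f^{-1}$) and $p_Z$ for the simple base density:

$$
p_X(x) = p_Z\big(f(x)\big)\,\left|\det \frac{\partial f(x)}{\partial x}\right|
$$

For a flow built from $K$ invertible steps, $f = f_K \circ \cdots \circ f_1$ with $h_0 = x$ and $h_k = f_k(h_{k-1})$, the log-density becomes a sum of per-step terms:

$$
\log p_X(x) = \log p_Z(h_K) + \sum_{k=1}^{K} \log\left|\det \frac{\partial h_k}{\partial h_{k-1}}\right|
$$

Sampling runs in the opposite direction: draw $z \sim p_Z$ and return $x = f^{-1}(z)$.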

Architecture of a Flow-based Model

A flow-based model generally consists of a sequence of invertible transformation functions. The input vector passes through each function in turn, and the composition of these simple steps yields a complex transformation of the original input data.
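To illustrate such a sequence, here is a sketch with two RealNVP-style affine coupling layers on 2-D points, separated by coordinate swaps. The scale and shift functions (a tanh and a linear map with made-up weights) are hypothetical stand-ins for the small neural networks used in practice. Each layer is invertible by construction and reports its log-determinant, so the flow's total log-determinant is a simple sum.

```python
import math

class AffineCoupling:
    """Affine coupling layer on 2-D points: x1 passes through unchanged,
    x2 is scaled and shifted by functions of x1 (hypothetical fixed choices)."""

    def __init__(self, scale_weight, shift_weight):
        self.ws, self.wt = scale_weight, shift_weight

    def _s(self, x1):
        return math.tanh(self.ws * x1)  # log-scale as a function of x1

    def _t(self, x1):
        return self.wt * x1             # shift as a function of x1

    def forward(self, x):
        x1, x2 = x
        s = self._s(x1)
        y = (x1, x2 * math.exp(s) + self._t(x1))
        return y, s  # Jacobian is triangular, so log|det J| is just s

    def inverse(self, y):
        y1, y2 = y
        return (y1, (y2 - self._t(y1)) * math.exp(-self._s(y1)))

class Swap:
    """Swaps the two coordinates so the next coupling layer transforms the other one."""

    def forward(self, x):
        return (x[1], x[0]), 0.0  # a permutation has |det J| = 1

    def inverse(self, y):
        return (y[1], y[0])

def flow_forward(layers, x):
    """Push x through every layer, accumulating the total log-determinant."""
    total_log_det = 0.0
    for layer in layers:
        x, log_det = layer.forward(x)
        total_log_det += log_det
    return x, total_log_det

def flow_inverse(layers, y):
    """Invert the flow by applying each layer's inverse in reverse order."""
    for layer in reversed(layers):
        y = layer.inverse(y)
    return y

flow = [AffineCoupling(0.5, 1.0), Swap(), AffineCoupling(-0.3, 0.7), Swap()]
x = (0.8, -1.2)
z, log_det = flow_forward(flow, x)
x_back = flow_inverse(flow, z)
print("z =", z, "log|det J| =", log_det, "recovered x =", x_back)
```

Coupling layers are one common way to keep the Jacobian triangular, so its determinant is simply the product of the scale factors.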

Training a Flow-based Model

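Training involves learning a series of bijective transformations (flows) that map the complex data distribution to a simple one, such as a standard Gaussian, from which it is easy to sample and under which densities are easy to compute. The parameters are fitted by maximizing the exact log-likelihood of the training data.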
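A minimal sketch of maximum-likelihood training for the simplest possible flow: a single 1-D affine map z = (x - b) * exp(-s) onto a standard Gaussian. The exact log-likelihood comes from the change-of-variables formula, and its gradients with respect to b and s are derived by hand here in place of automatic differentiation. The synthetic data (mean 3, standard deviation 2), learning rate, and step count are assumptions.

```python
import math
import random

def log_prob(x, b, s):
    """log p_X(x) under the flow z = (x - b) * exp(-s) with base z ~ N(0, 1).
    Change of variables: log N(z; 0, 1) + log|dz/dx|, where log|dz/dx| = -s."""
    z = (x - b) * math.exp(-s)
    return -0.5 * z * z - 0.5 * math.log(2 * math.pi) - s

def train_flow(data, lr=0.1, steps=3000):
    """Fit shift b and log-scale s by gradient ascent on the mean exact log-likelihood."""
    b, s = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        zs = [(x - b) * math.exp(-s) for x in data]
        grad_b = sum(zs) / n * math.exp(-s)        # d(mean log p)/db
        grad_s = sum(z * z - 1.0 for z in zs) / n  # d(mean log p)/ds
        b += lr * grad_b
        s += lr * grad_s
    return b, s

rng = random.Random(0)
data = [rng.gauss(3.0, 2.0) for _ in range(500)]  # hypothetical training data
b, s = train_flow(data)
avg_ll = sum(log_prob(x, b, s) for x in data) / len(data)
# Sampling inverts the flow: x = b + exp(s) * z with z ~ N(0, 1).
new_samples = [b + math.exp(s) * rng.gauss(0.0, 1.0) for _ in range(5)]
print(f"b={b:.3f}, scale={math.exp(s):.3f}, mean log-likelihood={avg_ll:.3f}")
```

For this single affine layer the optimum is simply the sample mean and standard deviation; deeper flows stack many invertible layers and optimize the same exact-likelihood objective with automatic differentiation.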

Use Cases of Flow-based Models

Flow-based models find applications in fields such as anomaly detection, density estimation, and data synthesis. They can model, and generate samples from, complex high-dimensional distributions of real-world data.

Advantages and Limitations of Flow-based Models

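Flow-based models offer exact likelihood calculation, efficient sampling, and an exact, invertible mapping between data and latent variables. However, because every transformation must be invertible and preserve the data's dimensionality, they may require a large number of parameters to model complex distributions.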

The subject of generative models is captivating because of its potential to create something new, meaningful, and realistic out of random noise.

Frequently Asked Questions (FAQs)

What makes Generative Models unique in Machine Learning?

Discriminative models learn the conditional distribution of outputs given inputs. Generative models instead learn the distribution of the data itself, or the joint probability distribution of inputs and outputs. Because they model how the data is distributed, they can create new instances similar to the training set, which makes them well suited to tasks like content creation.
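To illustrate the difference, here is a sketch of a simple generative classifier, assuming one-dimensional inputs and a Gaussian for each class. It learns p(y) and p(x | y), and therefore the joint p(x, y). It recovers p(y | x), the quantity a discriminative model would learn directly, through Bayes' rule, and it can also generate new inputs for a chosen class. The synthetic data and the class names "a" and "b" are illustrative.

```python
import math
import random

def gaussian_pdf(x, mu, sigma):
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def fit(xs, ys):
    """Learn p(y) and a Gaussian p(x | y) per class, i.e. the joint p(x, y) = p(x | y) p(y)."""
    params = {}
    for label in set(ys):
        pts = [x for x, y in zip(xs, ys) if y == label]
        mu = sum(pts) / len(pts)
        sigma = math.sqrt(sum((p - mu) ** 2 for p in pts) / len(pts))
        params[label] = (len(pts) / len(xs), mu, sigma)  # (prior, mean, std)
    return params

def posterior(params, x):
    """p(y | x) via Bayes' rule: p(x | y) p(y) / sum over y' of p(x | y') p(y')."""
    joint = {y: prior * gaussian_pdf(x, mu, sigma) for y, (prior, mu, sigma) in params.items()}
    total = sum(joint.values())
    return {y: j / total for y, j in joint.items()}

def generate(params, label, rng):
    """Because the model is generative, it can also sample a new x for a given class."""
    _, mu, sigma = params[label]
    return rng.gauss(mu, sigma)

rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(4.0, 1.0) for _ in range(200)]
ys = ["a"] * 200 + ["b"] * 200
model = fit(xs, ys)
print(posterior(model, 0.5))      # classification via Bayes' rule
print(generate(model, "b", rng))  # generation of a new class-"b" input
```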

How are Generative Models used in Image Generation?

Generative Models like GANs (Generative Adversarial Networks) are used for synthesizing new images that mimic training data. They learn the data distribution from training examples and generate new images, contributing to applications like art generation or deepfake creation.

What's the relation between Bayesian Networks and Generative Models?

Bayesian networks are a type of generative model. They represent the full joint distribution of the data as a product of conditional distributions, one for each variable given its parents in a directed acyclic graph, and so capture probabilistic relationships among variables. They are useful in applications that require reasoning under uncertainty, such as medical diagnosis or anomaly detection.
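As an illustration, the sketch below encodes a three-variable Bayesian network (rain influences whether the sprinkler is on, and both influence whether the grass is wet) with illustrative probability tables. The joint distribution is the product of each variable's conditional given its parents. The code uses it to answer an uncertainty query by enumeration and generates new samples by ancestral sampling, i.e. sampling each variable after its parents.

```python
import itertools
import random

# Illustrative conditional probability tables.
P_RAIN = 0.2
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}
P_WET_GIVEN = {  # keyed by (sprinkler, rain)
    (True, True): 0.99, (True, False): 0.9, (False, True): 0.8, (False, False): 0.0,
}

def bernoulli(p, value):
    """Probability that a binary variable with P(True) = p takes `value`."""
    return p if value else 1.0 - p

def joint(rain, sprinkler, wet):
    """p(rain, sprinkler, wet) = p(rain) * p(sprinkler | rain) * p(wet | sprinkler, rain)."""
    return (bernoulli(P_RAIN, rain)
            * bernoulli(P_SPRINKLER_GIVEN_RAIN[rain], sprinkler)
            * bernoulli(P_WET_GIVEN[(sprinkler, rain)], wet))

def ancestral_sample(rng):
    """Generate one (rain, sprinkler, wet) sample, parents before children."""
    rain = rng.random() < P_RAIN
    sprinkler = rng.random() < P_SPRINKLER_GIVEN_RAIN[rain]
    wet = rng.random() < P_WET_GIVEN[(sprinkler, rain)]
    return rain, sprinkler, wet

# Reasoning under uncertainty: P(rain | grass is wet), by summing over the joint.
p_wet = sum(joint(r, s, True) for r, s in itertools.product([True, False], repeat=2))
p_rain_and_wet = sum(joint(True, s, True) for s in [True, False])
print("P(rain | wet) =", p_rain_and_wet / p_wet)

rng = random.Random(0)
print([ancestral_sample(rng) for _ in range(3)])
```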

Can adversarial training improve Generative Models?

Yes. In GANs, the generator (a generative model) and the discriminator (a discriminative model) are trained together. The adversarial process pushes the generator to produce data increasingly similar to real data, improving the generative model's output.

How do Autoencoders fit into Generative Models?

Standard autoencoders learn to encode input data into a lower-dimensional latent space and reconstruct it, which makes them useful for dimensionality reduction, but they are not designed to generate new data. Variational Autoencoders (VAEs) add a probabilistic latent space with a known prior, so new data can be generated by sampling points from that prior and decoding them.