What is a Variational Autoencoder?

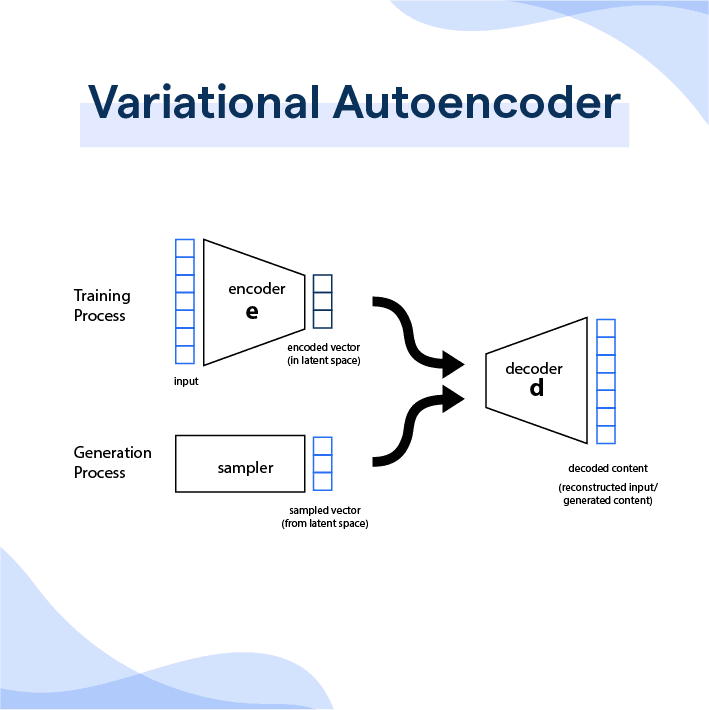

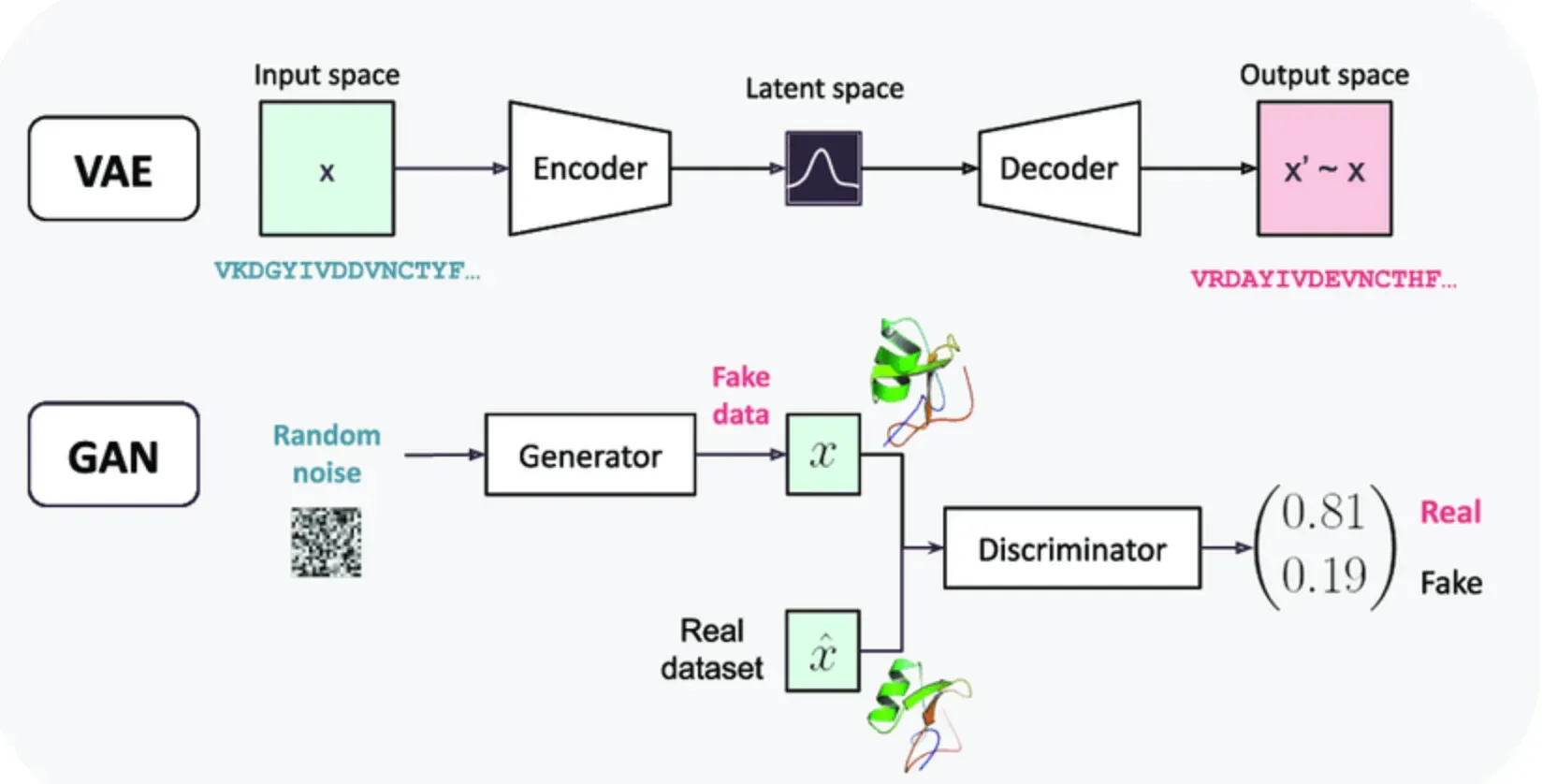

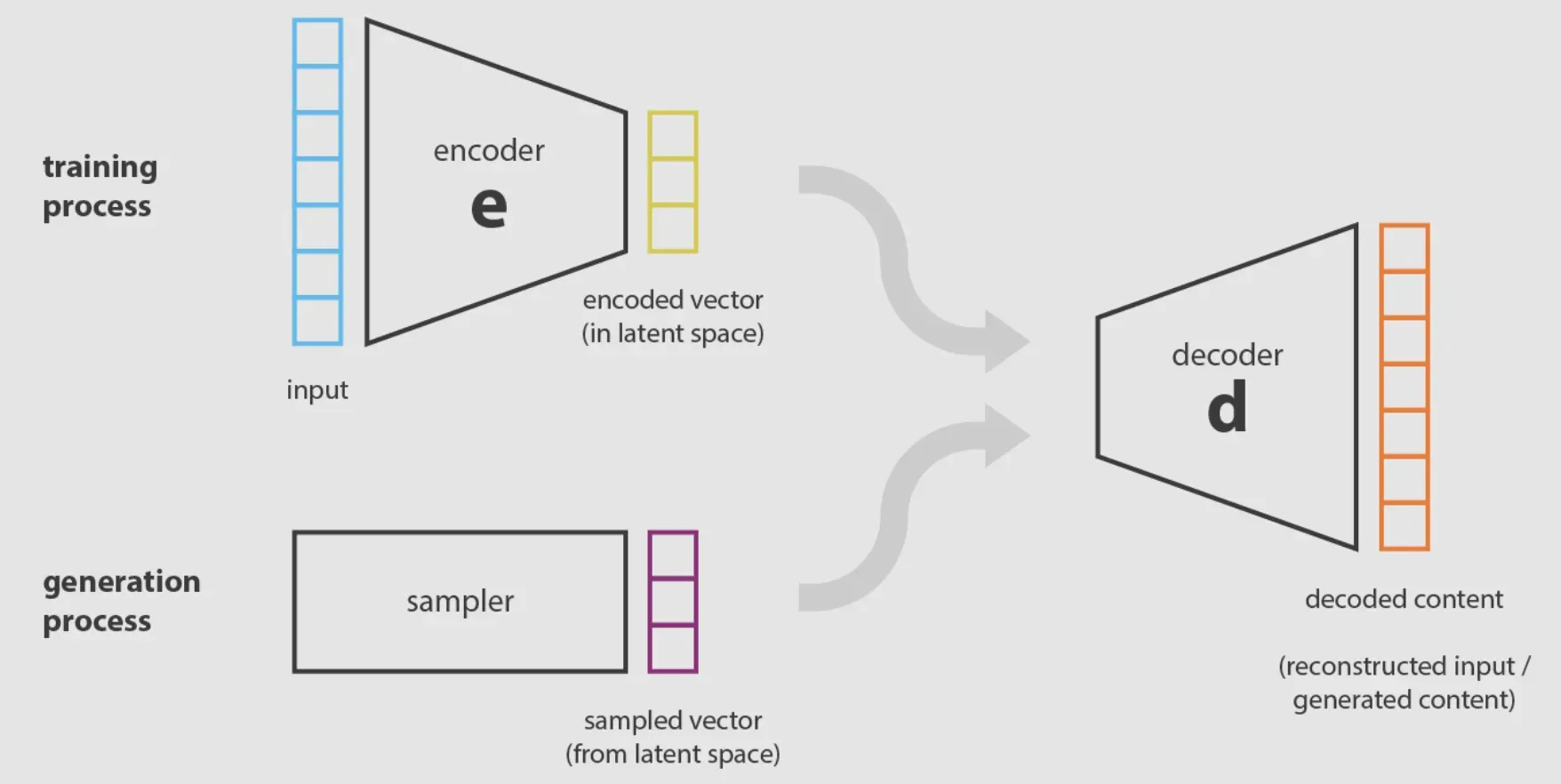

A Variational Autoencoder (VAE) is a type of neural network architecture that combines elements of both an autoencoder and a probabilistic model. It is designed to learn a compressed representation of input data while also capturing the underlying probability distribution of the data.

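Unlike traditional autoencoders, which map each input to a single point in the latent space, a VAE's encoder outputs the parameters of a probability distribution over the latent space. New data samples can then be generated by drawing random points in the latent space and decoding them.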

How does a Variational Autoencoder work?

Here’s how it works:

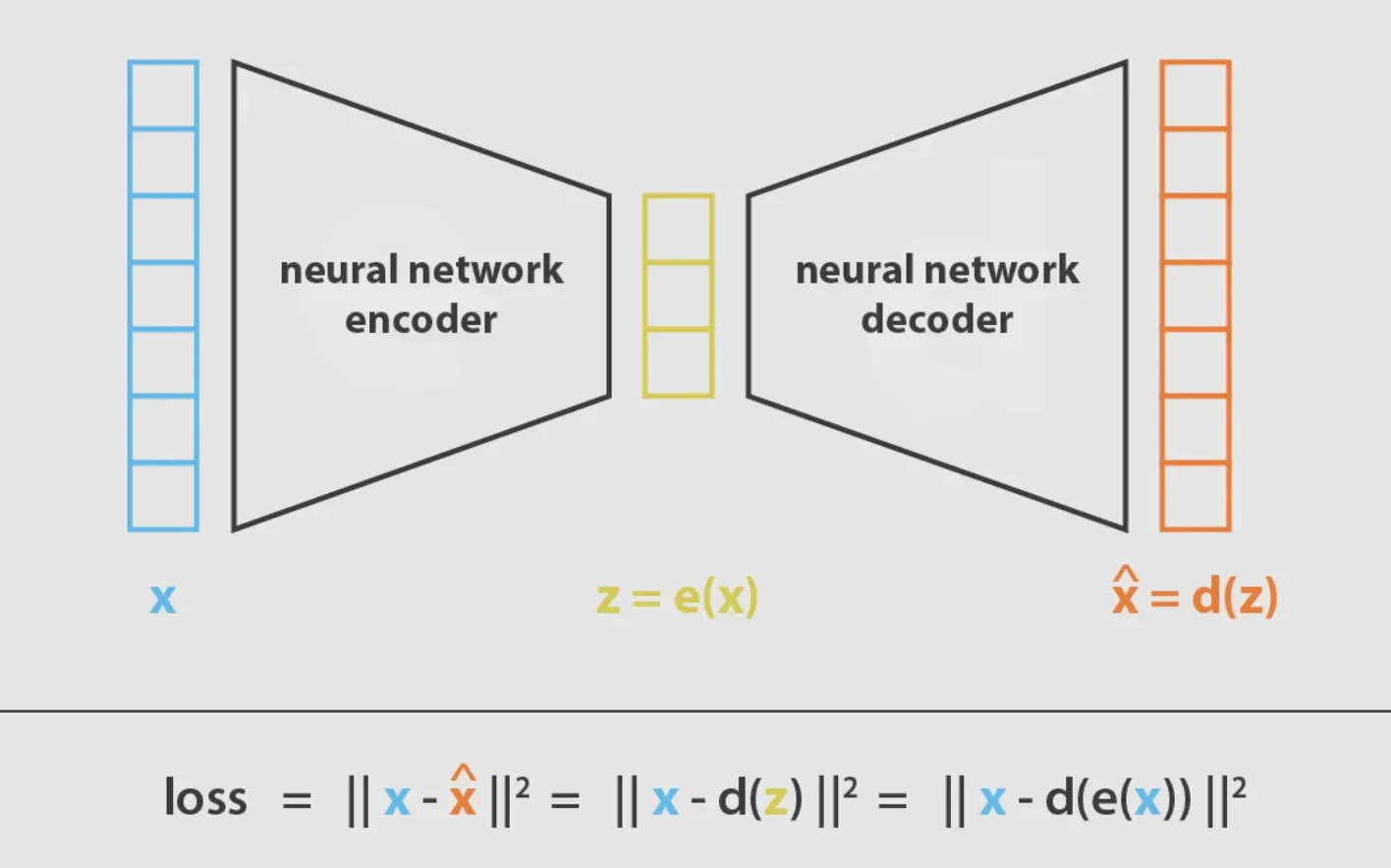

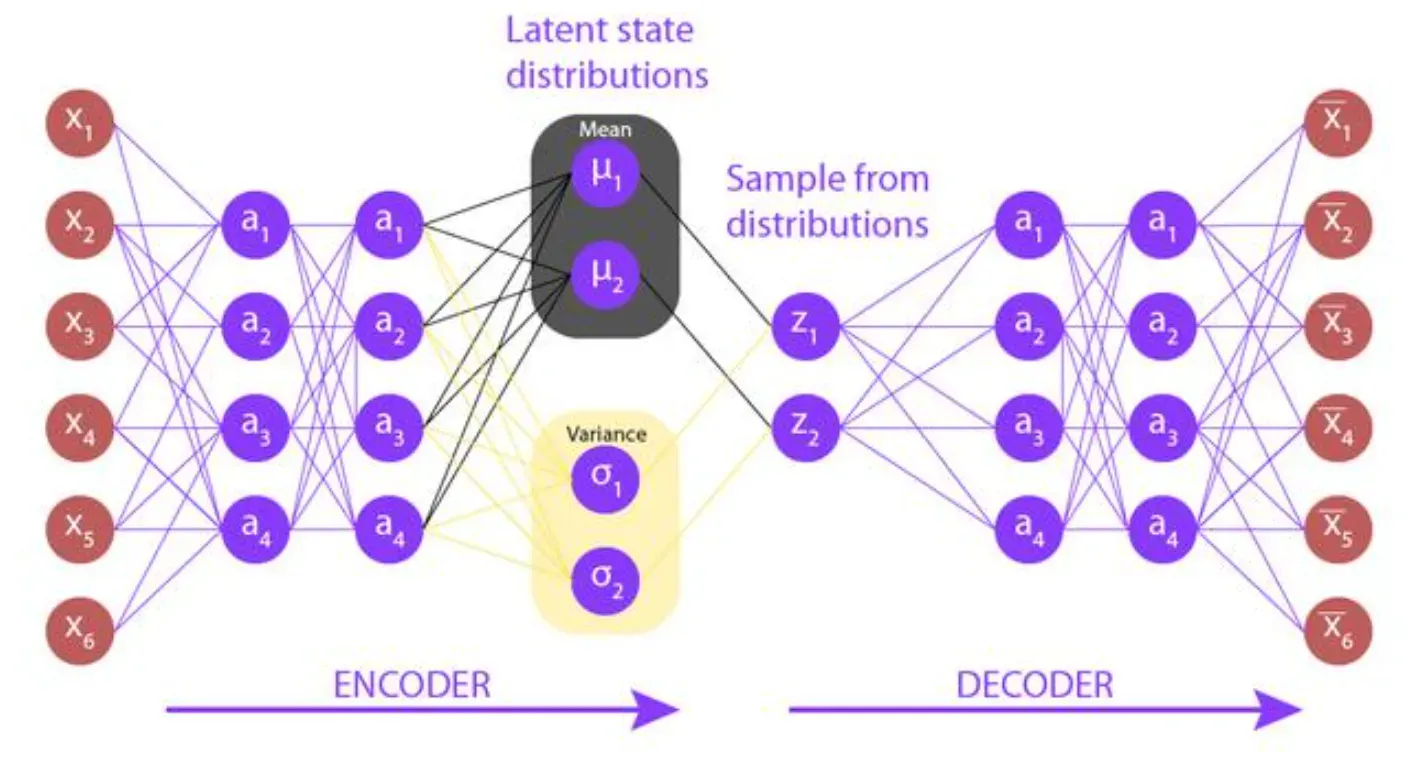

The encoder network maps each input to a distribution over the latent space rather than to a single point. The decoder network takes a sample drawn from that distribution and reconstructs the original input data.

The latent space distribution is regularized to follow a predefined prior distribution by minimizing the Kullback-Leibler (KL) divergence between the learned distribution and the prior distribution.

Step-by-Step Explanation of the Training Process

- Encoding

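The encoder network maps the input data to a distribution over the latent space, typically modelled as a multivariate Gaussian with a diagonal covariance. It is commonly built from fully connected or convolutional layers and has two outputs, a mean vector and a variance vector (in practice often the log-variance, for numerical stability), which together parameterize the latent distribution for each input.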
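The encoding step can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the layer sizes, the `tanh` activation, the random untrained weights, and the names `init_encoder` and `encode` are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_encoder(input_dim, hidden_dim, latent_dim):
    """Randomly initialise a one-hidden-layer encoder (illustrative, untrained)."""
    def layer(n_in, n_out):
        return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)
    W_h, b_h = layer(input_dim, hidden_dim)
    W_mu, b_mu = layer(hidden_dim, latent_dim)
    W_lv, b_lv = layer(hidden_dim, latent_dim)
    return {"W_h": W_h, "b_h": b_h, "W_mu": W_mu, "b_mu": b_mu,
            "W_lv": W_lv, "b_lv": b_lv}

def encode(x, p):
    """Map a batch of inputs to the mean and log-variance of a diagonal Gaussian."""
    h = np.tanh(x @ p["W_h"] + p["b_h"])      # shared hidden representation
    mu = h @ p["W_mu"] + p["b_mu"]            # mean of the latent distribution
    log_var = h @ p["W_lv"] + p["b_lv"]       # log-variance (unconstrained real values)
    return mu, log_var

params = init_encoder(input_dim=784, hidden_dim=64, latent_dim=2)
x = rng.random((5, 784))                      # batch of 5 made-up inputs
mu, log_var = encode(x, params)
print(mu.shape, log_var.shape)
```

Predicting the log-variance rather than the variance means the network output needs no positivity constraint, since `np.exp(log_var)` is always positive.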

- Decoding

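The decoder network takes a sample from the latent distribution and reconstructs the original input data. The sample is usually drawn with the reparameterization trick (z = μ + σ·ε, with ε drawn from a standard normal), which keeps the sampling step differentiable so that gradients can reach the encoder. The decoder often mirrors the encoder architecture in reverse order, although this is a convention rather than a requirement.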
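A rough sketch of the sampling and decoding step follows. It assumes a diagonal Gaussian latent distribution, the standard reparameterization z = μ + σ·ε, and a sigmoid output for data scaled to [0, 1]. The decoder weights are random and untrained, and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_latent(mu, log_var, rng):
    """Reparameterized sample: z = mu + sigma * eps, with eps ~ N(0, I)."""
    std = np.exp(0.5 * log_var)
    eps = rng.standard_normal(mu.shape)
    return mu + std * eps

def decode(z, W_h, b_h, W_out, b_out):
    """Map latent vectors back to data space; sigmoid keeps outputs in (0, 1)."""
    h = np.tanh(z @ W_h + b_h)
    logits = h @ W_out + b_out
    return 1.0 / (1.0 + np.exp(-logits))

latent_dim, hidden_dim, output_dim = 2, 64, 784
W_h = rng.normal(0.0, 0.1, (latent_dim, hidden_dim))
b_h = np.zeros(hidden_dim)
W_out = rng.normal(0.0, 0.1, (hidden_dim, output_dim))
b_out = np.zeros(output_dim)

# Stand-in encoder outputs for a batch of 5 inputs.
mu = np.zeros((5, latent_dim))
log_var = np.zeros((5, latent_dim))

z = sample_latent(mu, log_var, rng)
x_hat = decode(z, W_h, b_h, W_out, b_out)
print(z.shape, x_hat.shape)
```

Because the randomness enters only through `eps`, the sample is a deterministic function of `mu` and `log_var`, which is what lets an automatic differentiation framework backpropagate through it.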

- Reconstruction Loss

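The reconstruction loss measures the discrepancy between the original input data and its reconstruction, for example as a mean squared error for real-valued data or a binary cross-entropy for data scaled to [0, 1]. Minimizing it pushes the reconstruction towards the original input.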
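Two common choices of reconstruction loss can be written as follows. This is a sketch under the assumption that each loss is summed over features and averaged over the batch; other reductions are also used in practice, and the function names are illustrative.

```python
import numpy as np

def mse_reconstruction_loss(x, x_hat):
    """Squared error summed over features, averaged over the batch."""
    return np.mean(np.sum((x - x_hat) ** 2, axis=1))

def bce_reconstruction_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy for inputs in [0, 1]; clipping avoids log(0)."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    per_sample = -np.sum(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat), axis=1)
    return np.mean(per_sample)

x = np.array([[0.0, 1.0], [1.0, 0.0]])
perfect = x.copy()                 # exact reconstruction
poor = np.full_like(x, 0.5)        # uninformative reconstruction

print(mse_reconstruction_loss(x, perfect), mse_reconstruction_loss(x, poor))
print(bce_reconstruction_loss(x, perfect), bce_reconstruction_loss(x, poor))
```

Both losses are near zero for the exact reconstruction and larger for the uninformative one.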

- Regularization

The KL divergence between the learned latent distribution and the predefined prior distribution is minimized to regularize the latent space. This encourages the distribution of latent vectors to follow the desired prior.
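When the latent distribution is a diagonal Gaussian and the prior is a standard normal, the KL term has a closed form. The sketch below assumes exactly that setup, with the encoder producing a mean `mu` and a log-variance `log_var`; the function name is illustrative.

```python
import numpy as np

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), averaged over the batch.

    Closed form per sample: -0.5 * sum(1 + log_var - mu^2 - exp(log_var)).
    """
    per_sample = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)
    return np.mean(per_sample)

# A latent distribution equal to the prior has zero divergence.
print(kl_to_standard_normal(np.zeros((1, 2)), np.zeros((1, 2))))

# Shifting the mean away from zero increases the divergence.
print(kl_to_standard_normal(np.ones((1, 2)), np.zeros((1, 2))))
```

The term is zero only when the encoder's output matches the prior exactly, so minimizing it pulls every per-input distribution towards the standard normal.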

- Loss Function and Optimization

The reconstruction loss and the regularization term are combined into a single loss function. Gradient descent optimization algorithms, such as Adam or SGD, are used to iteratively update the encoder and decoder weights to minimize this loss.
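The combined objective might look like the following sketch. It assumes a squared-error reconstruction term, a standard normal prior, and a weighting factor `beta` on the KL term (`beta = 1` gives the usual VAE objective). The optimizer step is not shown, since in practice it is handled by an automatic differentiation framework.

```python
import numpy as np

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Return (total, reconstruction, kl), each averaged over the batch."""
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    kl = np.mean(-0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1))
    return recon + beta * kl, recon, kl

# Made-up values standing in for one input, its reconstruction,
# and the encoder outputs for that input.
x = np.array([[0.0, 1.0, 1.0]])
x_hat = np.array([[0.0, 0.5, 1.0]])
mu = np.array([[1.0, 1.0]])
log_var = np.zeros((1, 2))

total, recon, kl = vae_loss(x, x_hat, mu, log_var)
print(total, recon, kl)
```

Raising `beta` puts more weight on matching the prior at the expense of reconstruction accuracy, and lowering it does the opposite.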

- Sampling and Generation

Trained Variational Autoencoders can generate new data samples by sampling from the latent space, typically from the prior distribution that the latent space was regularized to match.

Random points in the latent space are selected and decoded by the decoder network, resulting in the generation of novel data samples.
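Generation can be sketched as drawing latent vectors from the prior and passing them through the decoder. The example assumes a standard normal prior. `toy_decoder` is a hypothetical stand-in with fixed random weights, used only so that the snippet runs without a trained model.

```python
import numpy as np

def generate(decoder, n_samples, latent_dim, rng):
    """Draw latent vectors from the standard normal prior and decode them."""
    z = rng.standard_normal((n_samples, latent_dim))
    return decoder(z)

rng = np.random.default_rng(42)
W = rng.normal(0.0, 0.5, (2, 16))   # stand-in weights, not trained

def toy_decoder(z):
    """Hypothetical decoder: a linear layer followed by a sigmoid."""
    return 1.0 / (1.0 + np.exp(-(z @ W)))

samples = generate(toy_decoder, n_samples=4, latent_dim=2, rng=rng)
print(samples.shape)
```

With a trained decoder in place of `toy_decoder`, each row of `samples` would be a newly generated data point.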

By following these steps, a Variational Autoencoder is able to learn a compressed representation of input data while capturing the underlying probability distribution.

Why are Variational Autoencoders useful?

Variational Autoencoders offer several advantages that make them highly useful for generative modelling tasks:

Continuous latent space

The latent space in Variational Autoencoders is continuous and follows a probabilistic distribution, allowing for smooth interpolation between different data points.

This enables the generation of new data samples by sampling from the latent space.

Ability to perform random sampling and interpolation

By sampling random points from the latent space distribution, Variational Autoencoders can generate diverse and novel data samples.

Additionally, the smoothness of the latent space allows for meaningful interpolation between existing data points to create new, realistic samples.
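Interpolation can be illustrated with a straight line between two latent vectors. This sketch assumes simple linear interpolation (spherical interpolation is another option sometimes used), and the two latent codes are made-up values.

```python
import numpy as np

def interpolate(z_start, z_end, steps):
    """Return `steps` evenly spaced latent vectors from z_start to z_end."""
    alphas = np.linspace(0.0, 1.0, steps)[:, None]   # column of mixing weights
    return (1.0 - alphas) * z_start + alphas * z_end

z_a = np.array([-1.0, 0.0])   # latent code of one data point (made up)
z_b = np.array([1.0, 2.0])    # latent code of another data point (made up)

path = interpolate(z_a, z_b, steps=5)
print(path)
```

Decoding each row of `path` with a trained decoder would give a sequence of samples that gradually morphs from the first data point to the second.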

Improved organization of the latent space

The regularization term encourages the latent space to follow the desired prior distribution, leading to an organized and structured representation of the data.

This can facilitate tasks such as data exploration, anomaly detection, and clustering.

What is a Variational Autoencoder used for?

Variational Autoencoders find applications in various domains, including:

Generative modelling

Variational Autoencoders can generate new data samples by sampling from the learned latent space distribution.

This makes them useful for tasks such as image synthesis, text generation, and music composition.

Data compression

Variational Autoencoders learn a compressed representation of the input data in the latent space.

This can be beneficial for tasks that involve reducing the dimensionality or compressing large datasets.

Anomaly detection

By learning the underlying distribution of the input data, Variational Autoencoders can identify patterns that deviate from the norm.

This makes them suitable for detecting anomalies or outliers in datasets.

Clustering and visualization

The organized representation of the latent space in Variational Autoencoders allows for meaningful clustering of data points.

This facilitates data exploration and visualization, aiding in pattern recognition and analysis.

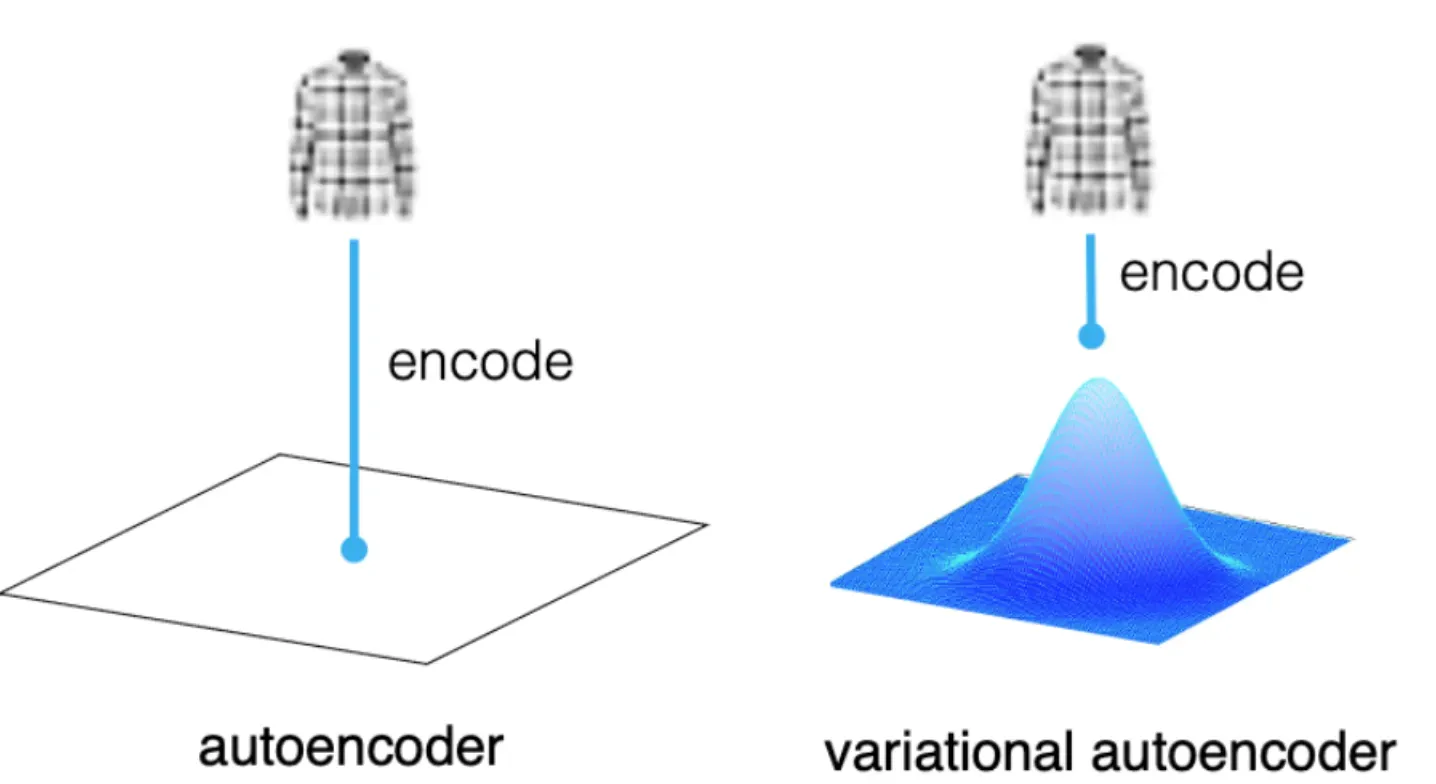

What is the difference between Autoencoder and Variational Autoencoder?

A Variational Autoencoder differs from a traditional autoencoder in several ways:

Encoding of the latent space

In a traditional autoencoder, the latent space serves as an arbitrary encoding of the input data with no explicit probabilistic interpretation.

In contrast, a Variational Autoencoder models the latent space as a distribution, typically a multivariate Gaussian, allowing for sampling and generation of new data points.

Regularization of the latent space

Variational Autoencoders introduce regularization in the latent space through the minimization of the KL divergence between the learned latent distribution and a predefined prior distribution.

This encourages the latent space to follow a desired probabilistic structure.

Generation of new data

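Variational Autoencoders can generate new data samples by sampling from the latent space and decoding the result. Traditional autoencoders are not designed for this: their latent space has no defined distribution to sample from, so decoding an arbitrary latent point is not guaranteed to produce a realistic output.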

What is the most crucial drawback of Variational Autoencoders?

While Variational Autoencoders have proven to be powerful generative models, they do suffer from a common drawback:

Samples generated by a Variational Autoencoder can appear blurry and lack realism, particularly for images. This is often attributed to the trade-off between reconstruction accuracy and the regularization of the latent space, together with pixel-wise reconstruction losses that favour an average over several plausible outputs.

The regularization term constrains the latent distribution, which limits how much detail about each input the latent code can carry and produces a smoothing effect that removes fine details present in the original data.

Variational Autoencoder in Practice

Variational Autoencoders have found success in a wide range of domains:

Image generation

Variational Autoencoders have been used to generate realistic images across different domains, such as faces, handwritten digits, and landscapes.

Text generation

Variational Autoencoders have been applied to text generation tasks such as dialogue generation, story generation, and language translation, where a latent variable captures sentence-level or document-level properties of the text.

Drug discovery

Variational Autoencoders have been employed in drug discovery pipelines to generate novel chemical compounds with desired properties.

Music composition

Variational Autoencoders can generate new musical compositions, capturing the underlying structure and patterns within the training data.

Variational Autoencoder Challenges and Future Developments

While Variational Autoencoders have shown great promise, there are still some challenges and limitations to be addressed:

Difficulties in modelling complex distributions

Variational Autoencoders can struggle to model particularly complex or multimodal distributions, in part because the prior and the encoder's latent distribution are usually restricted to simple Gaussians.

Trade-off between accuracy and diversity

Balancing the reconstruction accuracy and the diversity of generated samples is an ongoing challenge in Variational Autoencoder research.

Increasing the weight on the regularization term to improve diversity typically reduces reconstruction accuracy.

Large-scale deployment

Scaling Variational Autoencoders to large datasets and real-time applications can be computationally expensive and time-consuming.

Frequently Asked Questions (FAQs)

How does a Variational Autoencoder differ from a traditional autoencoder?

A Variational Autoencoder models the latent space as a distribution, enabling the generation of new data points. In contrast, a traditional autoencoder treats the latent space as an arbitrary encoding of the input data.

Can Variational Autoencoders be used for unsupervised learning tasks?

Yes, Variational Autoencoders can be used for unsupervised learning tasks. They can learn meaningful representations of input data without the need for labelled training data.

Are Variational Autoencoders suitable for high-dimensional data?

Yes, Variational Autoencoders can handle high-dimensional data. The continuous latent space representation allows for effective compression and reconstruction of high-dimensional input data.

Can Variational Autoencoders be used for anomaly detection?

Yes, Variational Autoencoders can be used for anomaly detection. A model trained on normal data tends to reconstruct unusual inputs poorly, so samples whose reconstruction error exceeds a chosen threshold can be flagged as anomalous.
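A threshold-based detector along these lines could be sketched as follows. It assumes the reconstructions have already been produced by a trained model, and it picks the threshold as the 95th percentile of errors on normal data, which is one possible heuristic rather than a fixed rule. All numbers are made up for illustration.

```python
import numpy as np

def reconstruction_errors(x, x_hat):
    """Mean squared error per sample."""
    return np.mean((x - x_hat) ** 2, axis=1)

def flag_anomalies(errors, threshold):
    """Mark samples whose reconstruction error exceeds the threshold."""
    return errors > threshold

# Errors observed on normal data (made-up values) set the threshold.
normal_errors = np.array([0.01, 0.02, 0.015, 0.03, 0.025])
threshold = np.percentile(normal_errors, 95)

# New inputs and their reconstructions (made-up values).
x_new = np.array([[0.0, 0.0], [1.0, 1.0]])
x_hat_new = np.array([[0.1, 0.1], [0.2, 0.3]])

errors = reconstruction_errors(x_new, x_hat_new)
flags = flag_anomalies(errors, threshold)
print(errors, flags)
```

The first input is reconstructed closely and passes, while the second is reconstructed poorly and is flagged.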

How do Variational Autoencoders handle missing data?

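Variational Autoencoders can be adapted to handle missing data, for example by training the model to reconstruct inputs while computing the reconstruction loss only over the values that were actually observed. After training, the decoder's output at the missing positions can be used as an estimate of the missing values.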
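One way to express this is a masked reconstruction loss plus an imputation step. The sketch assumes missing entries are marked with `NaN` and that the reconstruction `x_hat` comes from a trained model. The values and function names here are illustrative.

```python
import numpy as np

def masked_mse(x, x_hat, observed):
    """Mean squared error over observed entries only."""
    diff = np.where(observed, x - x_hat, 0.0)   # missing entries contribute nothing
    return np.sum(diff ** 2) / max(int(np.sum(observed)), 1)

def impute(x, x_hat, observed):
    """Keep observed values and fill missing ones from the reconstruction."""
    return np.where(observed, x, x_hat)

x = np.array([[1.0, np.nan], [0.0, 2.0]])       # NaN marks a missing value
observed = ~np.isnan(x)
x_hat = np.array([[0.5, 3.0], [0.0, 1.0]])      # made-up reconstruction

loss = masked_mse(x, x_hat, observed)
filled = impute(x, x_hat, observed)
print(loss)
print(filled)
```

Only the three observed entries contribute to the loss, and the single missing entry is replaced by the model's reconstruction.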