What is GPT-3?

GPT-3 (short for Generative Pre-trained Transformer 3) is an AI language model developed by OpenAI that was state-of-the-art at the time of its release. It uses machine learning to interpret and generate human-like text, which makes it a powerful tool for a wide range of applications, from content creation to code writing.

Evolution from GPT-2 to GPT-3

GPT-2, the predecessor of GPT-3, was already a highly capable language model. GPT-3 scales this up to 175 billion parameters, more than 100 times GPT-2's 1.5 billion. This increase helps GPT-3 produce more accurate and coherent text, which made it a game-changer in the world of natural language processing (NLP).

GPT-3: The Creators and Timeline

This section covers who built GPT-3 and when it was released.

Who Developed GPT-3?

GPT-3 was created by OpenAI, an artificial intelligence research lab that aims to develop and promote friendly AI for the benefit of humanity.

When Was GPT-3 Released?

GPT-3 was released in June 2020, following a research paper published by OpenAI that detailed its capabilities and potential applications.

The Technicalities: How Does GPT-3 Work?

This section explains the main technical ideas behind GPT-3.

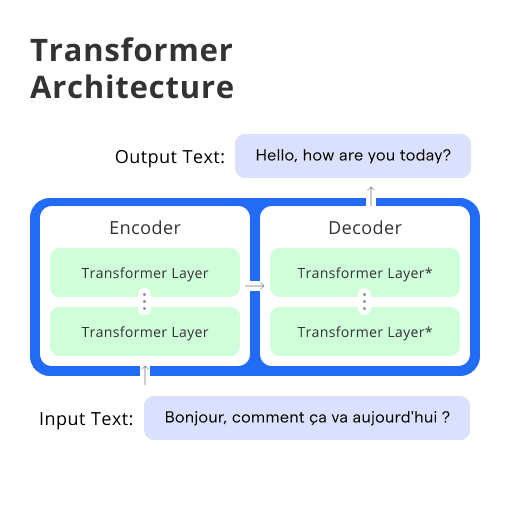

Transformer Architecture

At the heart of GPT-3 lies the transformer architecture, a type of deep learning model that excels in handling sequential data, like text. Transformers use a mechanism called self-attention to weigh the importance of each word in a sentence relative to the others. This allows them to capture long-range dependencies and generate more coherent text.
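To make self-attention a little more concrete, here is a minimal sketch in plain Python. It is a simplification, not GPT-3's actual implementation: the token vectors are made up, the queries, keys, and values are assumed to be the input vectors themselves (a real transformer applies learned projections first), and details such as multiple attention heads and masking of later tokens are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention over a list of token vectors.

    Assumption for illustration: queries, keys, and values are all the
    input vectors themselves, with no learned projections.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score the current token against every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Three made-up 2-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Each output row blends information from every token in the sequence, with the blend controlled by how strongly the tokens match. That is what lets a transformer relate words that sit far apart in a sentence.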

Tokenization and Language Modeling

GPT-3 processes text by breaking it down into smaller chunks called tokens. During training, the model learns to predict the next token in a sequence from the tokens that come before it. This ability to generate text based on context is what makes GPT-3 so powerful and versatile.
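The idea of predicting the next token can be illustrated with a toy example. The sketch below is not how GPT-3 works internally: it assumes a whitespace tokenizer (GPT-3 uses subword tokens) and simply counts which token follows which, where GPT-3 uses a neural network that looks at much longer context. The function names and the sample corpus are invented for illustration.

```python
from collections import Counter, defaultdict

def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace."""
    return text.lower().split()

def train_bigram_model(corpus):
    """Count which token follows each token in the training text."""
    follow_counts = defaultdict(Counter)
    tokens = tokenize(corpus)
    for current, nxt in zip(tokens, tokens[1:]):
        follow_counts[current][nxt] += 1
    return follow_counts

def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = model.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)

# "cat" follows "the" twice, more often than "mat" or "fish".
print(predict_next(model, "the"))
```

A model like GPT-3 follows the same train-then-predict pattern, but it conditions on a long window of preceding tokens instead of only the last one.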

Fine-Tuning GPT-3 for Specific Tasks

While GPT-3 is an impressive general-purpose language model, it can also be fine-tuned for specific tasks, such as summarization or translation. This is done by further training the model on a smaller dataset tailored to the desired application.
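As a rough illustration of what a "smaller dataset tailored to the desired application" might look like, the sketch below writes a few summarization examples as JSON Lines, one prompt/completion pair per line. The `prompt` and `completion` field names are an assumption based on the format used by OpenAI's original GPT-3 fine-tuning workflow, so check the current documentation before relying on them. The example texts and the `to_jsonl` helper are invented.

```python
import json

def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    lines = []
    for prompt, completion in examples:
        record = {"prompt": prompt, "completion": completion}
        lines.append(json.dumps(record))
    return "\n".join(lines)

# Hypothetical summarization examples, invented for illustration.
examples = [
    ("Summarize: The meeting covered the budget, new hires, and the Q3 roadmap.\n\nSummary:",
     " The team reviewed budget, hiring, and Q3 plans."),
    ("Summarize: Heavy rain flooded several streets downtown on Monday.\n\nSummary:",
     " Monday's rain flooded downtown streets."),
]

print(to_jsonl(examples))
```

A real fine-tuning dataset would contain many more examples than this, all following the same pattern as the task the model is meant to perform.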

Parameters and Capabilities of GPT-3

The capabilities of GPT-3 are the following:

Parameters: 175 billion

Language Understanding: Advanced natural language processing, capable of understanding context and generating human-like text.

Text Generation: Produces coherent and contextually relevant responses across various topics.

Applications: Useful in chatbots, translation, summarization, content creation, and more.

Zero-Shot Learning: Can perform tasks it wasn't explicitly trained on when given only an instruction and no examples.

Few-Shot Learning: Performs better when a few worked examples are included in the prompt.

Code Generation: Capable of generating code snippets in various programming languages.

Limitations: Occasionally generates incorrect or nonsensical answers, and can be sensitive to input phrasing.
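The difference between zero-shot and few-shot use comes down to what goes into the prompt. The sketch below builds both kinds of prompt for a translation task. The `build_prompt` helper and the "Input:/Output:" layout are assumptions made for illustration; GPT-3 does not require any particular prompt format.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt: task description, worked examples, then the new input."""
    parts = [task]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The final block is left open so the model completes the output.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

task = "Translate English to French."

# Zero-shot: only the instruction and the new input.
zero_shot = build_prompt(task, [], "cheese")

# Few-shot: the same instruction plus a few worked examples.
few_shot = build_prompt(
    task,
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)

print(zero_shot)
print("---")
print(few_shot)
```

In both cases the model's weights stay unchanged. The examples only give it more context about the pattern it is expected to continue.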

Understanding GPT-3's 175 Billion Parameters

GPT-3's 175 billion parameters are essentially the "brain" of the model: they are the learned weights of the network's connections that allow it to understand and generate text. In general, more parameters give a model more capacity to capture nuances and complexities, which is a large part of why GPT-3 was one of the most powerful language models at its release.

GPT-3's Impressive Language Understanding

GPT-3's vast number of parameters allows it to exhibit a deep understanding of language. It can generate coherent, contextually accurate text, answer questions, and even engage in conversations, making it an invaluable tool for various applications.

5 Key Applications of GPT-3

The key applications of GPT-3 are the following:

Content Generation

GPT-3 can generate high-quality content for blogs, social media posts, and more, making it a valuable asset for content creators and marketers.

Conversational AI

GPT-3's ability to understand and generate human-like text makes it well suited to chatbots, like BotPenguin, and virtual assistants that can engage in natural, meaningful conversations with users.

Summarization

GPT-3 can be fine-tuned to create concise summaries of lengthy articles, reports, or documents, saving time and effort for users.

Translation

GPT-3 can be used to translate text between languages, making it a powerful tool for breaking down language barriers and facilitating global communication.

Code Generation

GPT-3 can generate code snippets based on natural language descriptions, making it easier for developers to write and understand code.

GPT-3 and SEO: A Powerful Combination

This section looks at how GPT-3 can support SEO work.

Keyword Research with GPT-3

GPT-3 can help identify relevant keywords and search terms for SEO campaigns. This allows marketers to optimize their content for search engines more effectively.

GPT-3 for Content Creation

By generating high-quality, engaging content, GPT-3 can help improve a website's search engine ranking and drive organic traffic.

On-Page and Off-Page SEO Strategies Using GPT-3

GPT-3 can be used to create optimized meta tags, headings, and internal links, as well as generate ideas for guest posts and backlink-building strategies.

Limitations and Challenges of GPT-3

Like any advanced AI language model, GPT-3 has limitations and challenges, including:

Ethical Concerns

GPT-3's ability to generate realistic text raises ethical concerns, such as the potential for generating fake news or other misleading content.

Inaccurate or Biased Output

GPT-3 may produce inaccurate or biased information, as it relies on the data it was trained on, which may contain biases or inaccuracies.

Computational Resources

GPT-3 requires significant computational resources to run, making it challenging for small businesses or individuals to access and utilize its full capabilities.
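A quick back-of-the-envelope calculation shows why. The sketch below estimates how much memory is needed just to hold 175 billion parameters. The bytes-per-parameter values are assumptions (4 bytes for 32-bit floats, 2 bytes for 16-bit floats), since the precision actually used to serve GPT-3 is not stated here, and the estimate ignores everything else a running model needs.

```python
PARAMETERS = 175_000_000_000  # parameter count stated for GPT-3

def weights_size_gb(num_parameters, bytes_per_parameter):
    """Storage for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

print(weights_size_gb(PARAMETERS, 4))  # 32-bit floats: 700.0
print(weights_size_gb(PARAMETERS, 2))  # 16-bit floats: 350.0
```

Even under the smaller assumption, the weights alone run to hundreds of gigabytes, far more than a typical single consumer GPU can hold.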

GPT-3 APIs and Integration

This section describes how developers can access GPT-3 and build it into their own applications.

Accessing GPT-3 through OpenAI API

The OpenAI API allows developers to access GPT-3's capabilities and integrate them into their applications, opening up a world of possibilities for AI-powered tools and services.
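As a hedged sketch of what a call might look like, the code below builds (but does not send) an HTTP request using only Python's standard library. The endpoint URL and the `model`, `prompt`, and `max_tokens` fields follow OpenAI's text completions endpoint as it was documented for GPT-3-era models. Treat them as assumptions and check the current API reference, since models and endpoints change over time. `MODEL_NAME` and `YOUR_API_KEY` are placeholders, and `build_completion_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumed endpoint for text completions; verify against the current API reference.
API_URL = "https://api.openai.com/v1/completions"

def build_completion_request(prompt, api_key, model="MODEL_NAME", max_tokens=64):
    """Build a POST request for a text completion without sending it."""
    body = json.dumps(
        {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    ).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

request = build_completion_request(
    "Write a tagline for a neighborhood bakery.", api_key="YOUR_API_KEY"
)
print(request.full_url)
print(json.loads(request.data))

# Sending the request would look like this, and needs a valid key and model name:
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```

In practice most developers would use an official client library instead of raw HTTP, but the underlying exchange is the same: a prompt goes in as JSON and the generated text comes back as JSON.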

Integrating GPT-3 into Applications

GPT-3 can be integrated into various applications, such as content management systems, chatbots, and translation services, to provide AI-powered features and enhancements.

GPT-3 in the AI and NLP Landscape

This section looks at GPT-3's influence on the field and how it compares with other approaches.

GPT-3's Impact on AI and NLP

GPT-3 has made a significant impact on the AI and NLP landscape, showcasing the potential of large-scale language models and inspiring further research and development in the field.

Comparison with Other NLP Models and Techniques

While GPT-3 is undoubtedly a powerful language model, it's essential to consider other NLP models and techniques, such as BERT and LSTM, when evaluating AI-powered solutions for specific applications. Each AI language model has its strengths and weaknesses, and the best choice will depend on the task at hand.

Frequently Asked Questions (FAQs)

What is GPT-3?

GPT-3, or Generative Pre-trained Transformer 3, is a powerful AI language model developed by OpenAI, capable of generating human-like text based on given prompts.

How does GPT-3 work?

GPT-3 uses deep learning and transformers to process and generate text. It's trained on vast amounts of data, allowing it to understand context and produce coherent responses.

Can GPT-3 write articles or stories?

Yes. Given relevant prompts, GPT-3 can generate articles, stories, and other forms of text content, making it a useful tool for creative writing and content generation.

Is GPT-3 available for public use?

GPT-3 is available through OpenAI's API, which requires an invitation or approval. Developers and businesses can request access to incorporate GPT-3 into their applications or services.

Are there limitations to GPT-3's capabilities?

While GPT-3 is impressive, it has limitations, such as generating incorrect or nonsensical information, sensitivity to input phrasing, and potential ethical concerns regarding content generation and misuse.