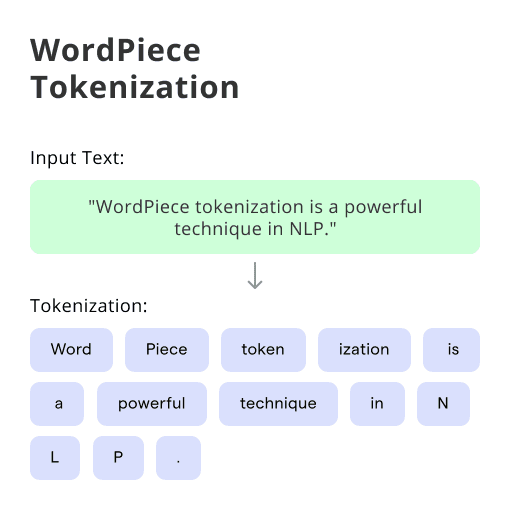

What is WordPiece Tokenization?

WordPiece Tokenization refers to the process of splitting text into smaller subword units called tokens. It combines the advantages of both character-level and word-level tokenization, allowing for more flexibility in capturing the meaning of words and effectively handling unknown or out-of-vocabulary (OOV) words.

Why is WordPiece Tokenization used?

WordPiece Tokenization has gained popularity due to its numerous advantages over other tokenization methods. It offers improved language modeling, better handling of rare or OOV words, and enhanced performance in machine translation, named entity recognition, sentiment analysis, and other NLP tasks.

Subword Granularity

WordPiece Tokenization provides a higher level of subword granularity that enables finer distinctions between words, making it suitable for languages with complex morphology or compound words. This helps in capturing the meaning and context more accurately.

Out-of-Vocabulary Handling

One of the major benefits of WordPiece Tokenization is its ability to handle OOV words effectively. By breaking words into smaller subword units, even unseen words can be represented using known subword units, reducing the number of OOV instances.

Flexible Vocabulary Size

In word tokenization, the vocabulary is dictated by the distinct words in the corpus. In WordPiece Tokenization, the vocabulary size is a parameter chosen before training. This means that the size of the vocabulary can be adjusted based on the specific application needs or the available computational resources.

Rare Word Handling

WordPiece Tokenization handles rare words better by breaking them into subword units that are more frequently observed in the training data. This results in a more accurate representation of such words and improves the overall performance of NLP models.

Parameter Optimizations

WordPiece Tokenization parameters, such as the target vocabulary size, the minimum frequency a candidate pair must reach to be merged, or the maximum word length the encoder will attempt to split, can be tuned to optimize the tokenization process for a specific dataset or task. This flexibility allows for fine-tuning and improves the overall performance of NLP models.

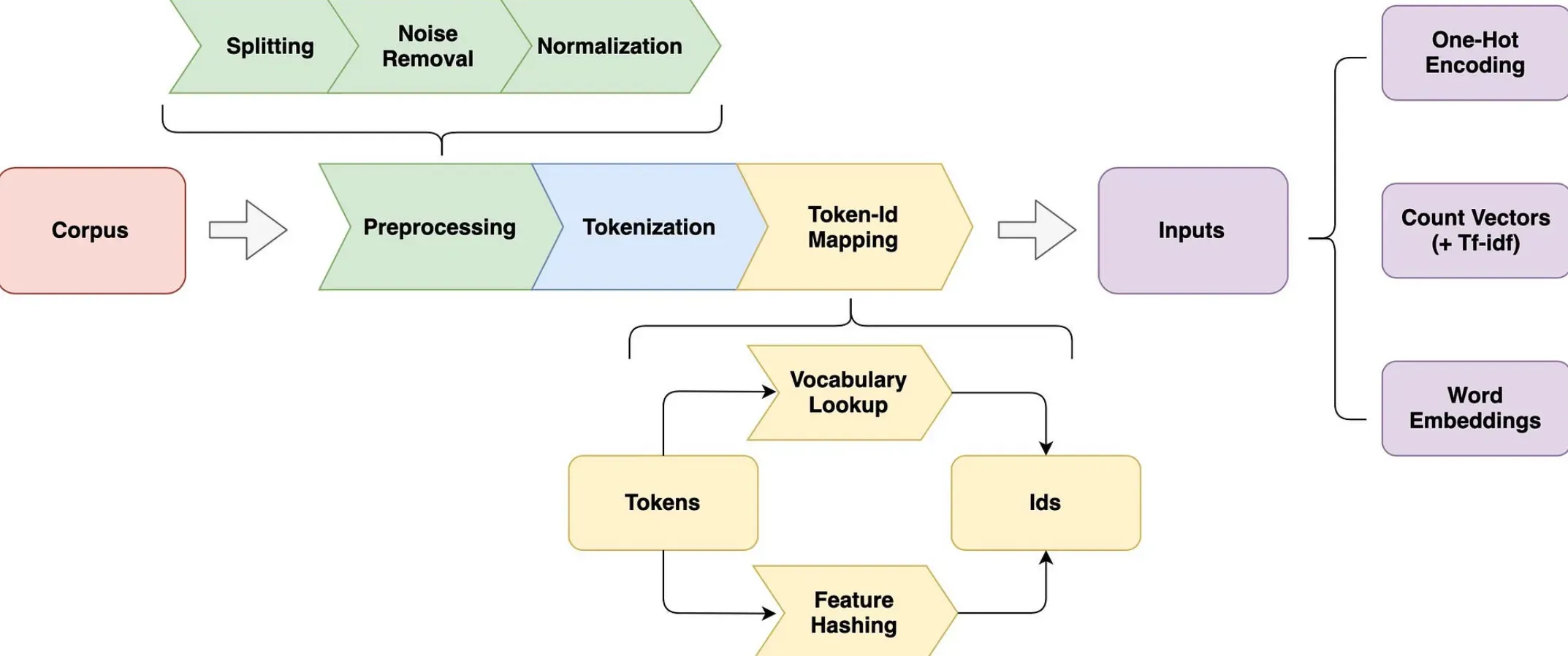

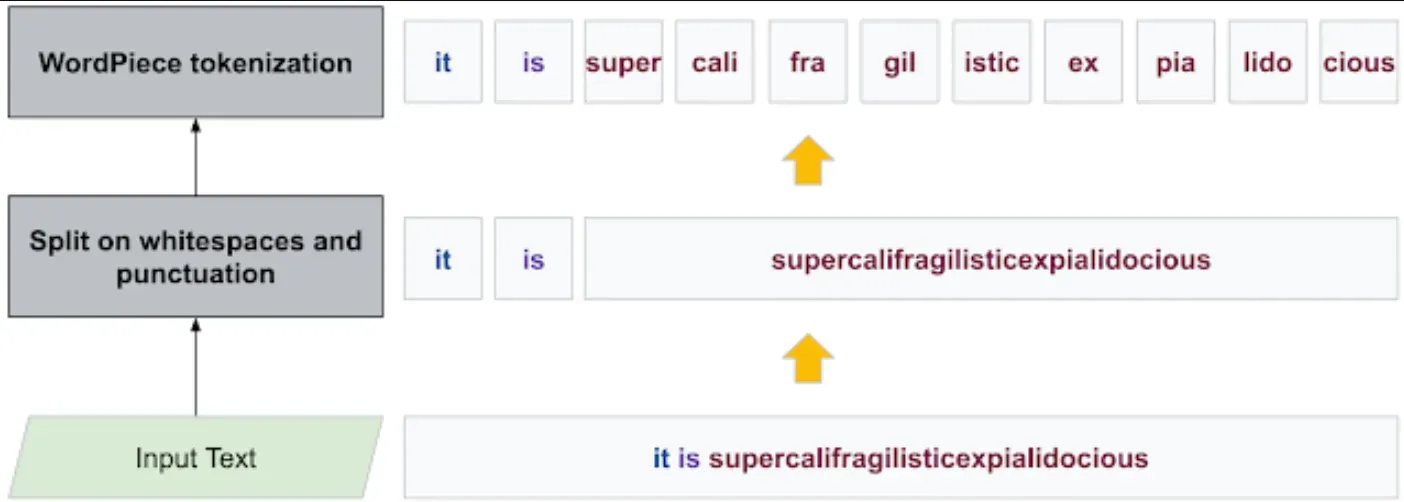

How does WordPiece Tokenization work?

WordPiece Tokenization follows a systematic process to break down text into subword units. This section provides a step-by-step breakdown of the WordPiece Tokenization process.

Subword Unit Extraction

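The first step in WordPiece training is to initialize the vocabulary with the individual characters from the training dataset. Pairs of adjacent units are then merged iteratively to create new subword units until the desired vocabulary size is reached. Unlike BPE, which always merges the most frequent pair, WordPiece merges the pair that most increases the likelihood of the training data. A commonly used score for this is freq(ab) / (freq(a) × freq(b)), which favors pairs whose parts rarely occur apart.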
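To make the merge rule concrete, here is a toy trainer in Python. It is a minimal sketch under stated assumptions, not Google's implementation: the word counts are made up, non-initial characters are marked with a "##" prefix as in BERT-style vocabularies, and each merge uses the freq(ab) / (freq(a) × freq(b)) score as a stand-in for the likelihood gain. The helper names (split_word, merge_pieces, train_wordpiece) are hypothetical.

```python
from collections import Counter


def split_word(word):
    """Split a word into characters; non-initial characters get the '##' prefix."""
    return [word[0]] + ["##" + ch for ch in word[1:]]


def merge_pieces(pieces, pair, new_piece):
    """Replace every adjacent occurrence of `pair` in `pieces` with `new_piece`."""
    merged, i = [], 0
    while i < len(pieces):
        if i + 1 < len(pieces) and (pieces[i], pieces[i + 1]) == pair:
            merged.append(new_piece)
            i += 2
        else:
            merged.append(pieces[i])
            i += 1
    return merged


def train_wordpiece(word_freqs, num_merges):
    """Toy WordPiece trainer: returns (vocabulary, current split of each word)."""
    splits = {w: split_word(w) for w in word_freqs}
    vocab = {p for pieces in splits.values() for p in pieces}
    for _ in range(num_merges):
        # Count units and adjacent pairs, weighted by how often each word occurs.
        unit_freq, pair_freq = Counter(), Counter()
        for word, freq in word_freqs.items():
            pieces = splits[word]
            for p in pieces:
                unit_freq[p] += freq
            for a, b in zip(pieces, pieces[1:]):
                pair_freq[(a, b)] += freq
        if not pair_freq:
            break  # every word is already a single piece
        # Assumed likelihood-style score: freq(ab) / (freq(a) * freq(b)).
        best = max(
            pair_freq,
            key=lambda pr: pair_freq[pr] / (unit_freq[pr[0]] * unit_freq[pr[1]]),
        )
        a, b = best
        new_piece = a + b[2:]  # b is always a "##" continuation piece
        vocab.add(new_piece)
        splits = {w: merge_pieces(p, best, new_piece) for w, p in splits.items()}
    return vocab, splits


corpus = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}  # made-up counts
vocab, splits = train_wordpiece(corpus, num_merges=1)
print(splits["hugs"])  # ['h', '##u', '##gs']
```

On this corpus the first merge joins "##g" and "##s", because those two almost always appear together, even though "##u" + "##g" is the more frequent pair; a purely frequency-driven BPE step would have merged the latter.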

Subword Encoding

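Once the vocabulary is established, each word of the text is replaced by the sequence of vocabulary pieces that covers it. In BERT-style WordPiece, pieces that continue a word carry a "##" prefix (for example, "playing" may become "play" and "##ing", depending on the vocabulary). The result is a sequence of subword tokens.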

Subword Decoding

During decoding, the subword tokens are converted back into words. In BERT-style WordPiece, every piece that continues a word carries a "##" prefix, so decoding strips that prefix and attaches the piece to the preceding token, while a piece without the prefix starts a new word. The markers alone determine where words begin, so no vocabulary lookup is needed for this step.
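The joining rule can be sketched in a few lines of Python. This assumes the BERT-style "##" continuation marker; decode_wordpiece is a hypothetical helper, not a library function.

```python
def decode_wordpiece(tokens):
    """Rejoin WordPiece tokens into space-separated words.

    Assumes BERT-style notation: a '##' prefix marks a piece that continues
    the previous word; any other token starts a new word.
    """
    words = []
    for tok in tokens:
        if tok.startswith("##") and words:
            words[-1] += tok[2:]  # glue the continuation piece onto the current word
        else:
            words.append(tok)     # start a new word
    return " ".join(words)


print(decode_wordpiece(["un", "##aff", "##able", "play", "##ing"]))  # unaffable playing
```

Because words are rejoined with single spaces, the original spacing around punctuation is not recovered by this simple scheme.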

The Workings of WordPiece Tokenization

With the basics covered, let's take a closer look at how WordPiece tokenization actually works and the mechanics behind its efficiency.

The Core Process of WordPiece Tokenization

WordPiece tokenization starts with a base vocabulary of individual characters. Then, using an iterative process, it combines these characters or character n-grams into sub-word units, or word pieces, choosing each merge according to how often the units occur together in the training data relative to how often they occur on their own.

Encoding Words Using WordPiece Tokenization

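When encoding a word, WordPiece tokenization first checks whether the whole word is in its vocabulary. If it is, the word becomes a single token. If not, it takes the longest prefix that is in the vocabulary, then repeats the search on the remainder (marked as a continuation piece), a strategy known as greedy longest-match-first. If some part of the word cannot be matched at all, the whole word is mapped to an unknown token such as [UNK].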
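Here is a minimal Python sketch of greedy longest-match-first encoding, modeled on the strategy used in BERT's tokenizer. The vocabulary below is a hypothetical toy set, and encode_word, unk_token, and max_chars are illustrative names.

```python
def encode_word(word, vocab, unk_token="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece encoding of a single word."""
    if len(word) > max_chars:
        return [unk_token]  # overly long words are treated as unknown
    tokens, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        # Shrink the candidate from the right until it is in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # mark continuation pieces
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matches: the whole word is unknown
        tokens.append(piece)
        start = end
    return tokens


vocab = {"un", "##aff", "##able", "play", "##ing"}  # hypothetical toy vocabulary
print(encode_word("unaffable", vocab))  # ['un', '##aff', '##able']
print(encode_word("playing", vocab))    # ['play', '##ing']
print(encode_word("xyz", vocab))        # ['[UNK]']
```

Taking the longest match first keeps common words and stems intact and only falls back to shorter pieces when necessary.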

Handling Out-of-Vocabulary Words with WordPiece Tokenization

The key strength of WordPiece tokenization is how well it handles out-of-vocabulary words. It breaks unknown words into familiar sub-word units that the model can still process, which improves the effectiveness of translation and other language models.

Increasing Computational Efficiency with WordPiece Tokenization

Compared with character-level tokenization, splitting text into sub-word units shortens the input sequence, which makes NLP models more computationally efficient. Compared with word-level tokenization, it produces far fewer unknown tokens, which improves the model's overall performance.

Influence of WordPiece Tokenization in Language Models

Moving on, let's explore how WordPiece tokenization impacts various aspects of language models, from data representation to system performance.

WordPiece Tokenization and Data Representation

WordPiece tokenization significantly improves the representation of data in language models. By splitting words into sub-word units, it manages complex morphology and rare words more effectively, leading to better results on downstream tasks such as higher-quality translations.

Impact on Language Model Performance

The influence of WordPiece tokenization extends to the overall performance of language models. Shorter sequences and better handling of unknown words make these models more computationally efficient and accurate.

Facilitating Generalization in Language Models

WordPiece tokenization facilitates better model generalization by working at a sub-word level. This allows models to cope with rare words and exposes them to a broader vocabulary, enhancing their ability to understand and generate text.

Enhancing Information Retrieval with WordPiece Tokenization

In information retrieval tasks, WordPiece tokenization provides the means to handle a wider range of words, supporting more accurate and comprehensive search results, thus improving the overall effectiveness of search systems.

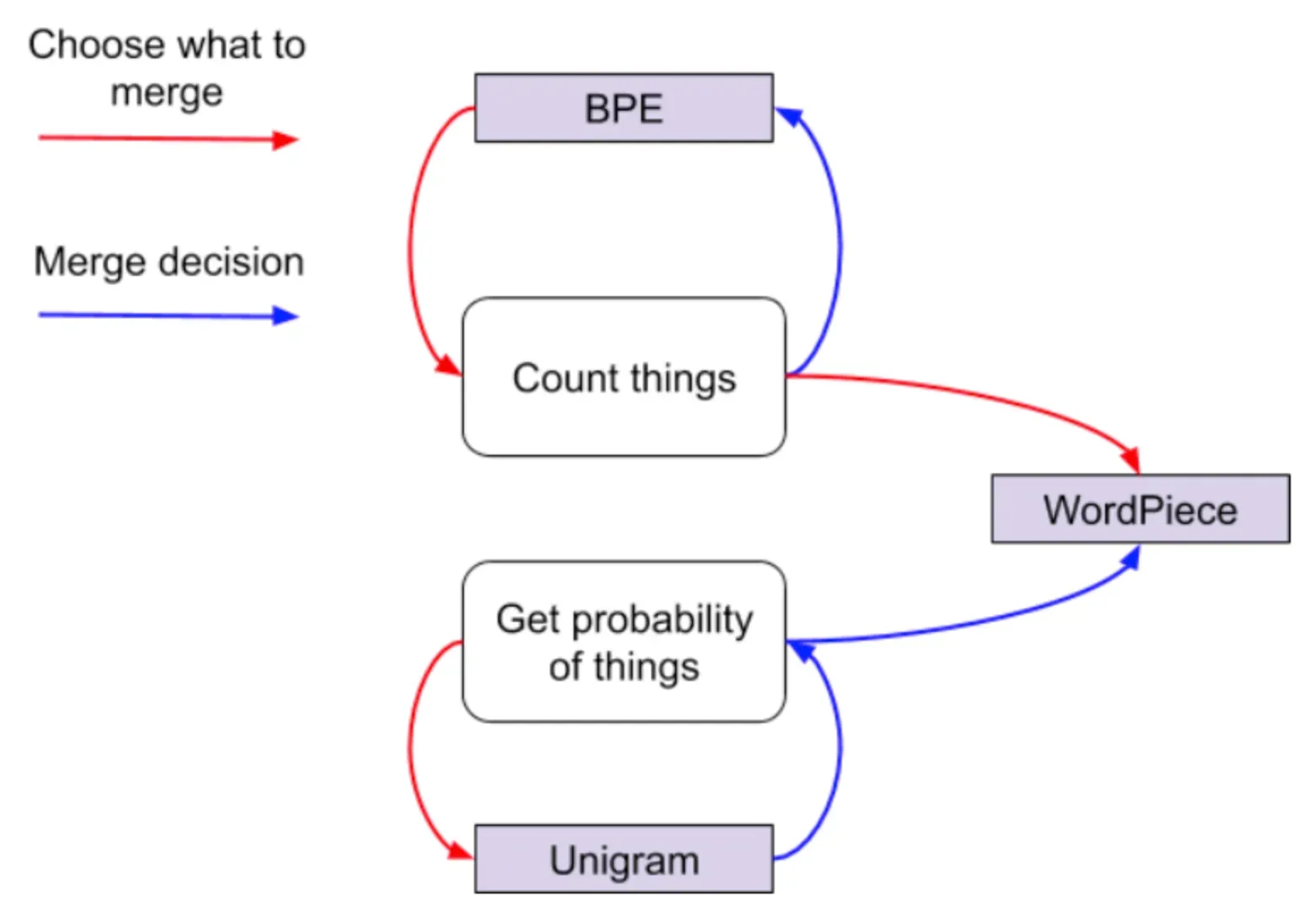

The Variants of WordPiece Tokenization

Let's expand our understanding by looking at some of the variants of WordPiece tokenization and how they differ from the original technique.

SentencePiece: A Variant of WordPiece Tokenization

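SentencePiece is an open-source tokenization library from Google that is closely related to WordPiece. Its critical advantage is that it doesn't require pre-tokenization: it treats the input as a raw character stream and represents spaces with a special symbol (▁). This makes training language-independent and lets it handle raw sentences directly. SentencePiece implements BPE and unigram language model segmentation rather than WordPiece itself.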
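The whitespace-as-symbol idea can be sketched in Python. This only illustrates the principle and does not use the SentencePiece library: the piece list is hand-written rather than produced by a trained model, and prepending a space roughly mimics SentencePiece's default "dummy prefix". The helper names are hypothetical.

```python
META = "\u2581"  # "▁" (U+2581), the symbol SentencePiece uses to mark whitespace


def to_symbol_stream(text):
    """Treat text as one raw stream: prepend a space and make every space visible."""
    return (" " + text).replace(" ", META)


def detokenize(pieces):
    """Concatenate pieces and turn the whitespace markers back into spaces."""
    text = "".join(pieces).replace(META, " ")
    return text[1:] if text.startswith(" ") else text  # drop the prepended space


stream = to_symbol_stream("Hello world.")  # "▁Hello▁world."
pieces = [META + "Hello", META + "wor", "ld", "."]  # hand-written segmentation
print(detokenize(pieces))  # Hello world.
```

Because spaces survive as ordinary symbols inside the pieces, the original text can be restored by concatenation, with no language-specific rules for where words begin.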

Unigram Language Model Tokenization

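Unigram Language Model Tokenization is a probabilistic alternative to WordPiece. Instead of growing a vocabulary through merges, it starts from a large candidate vocabulary, assigns each sub-word a probability, and iteratively removes the pieces whose removal least reduces the likelihood of the training data, until the vocabulary reaches a fixed target size.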
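Training and applying a unigram model both rely on finding a word's most probable segmentation under the current piece probabilities. A minimal Viterbi sketch of that step in Python follows; the probabilities are invented for illustration, and viterbi_segment is a hypothetical helper.

```python
import math


def viterbi_segment(word, log_probs):
    """Return the most probable segmentation of `word` under a unigram model.

    `log_probs` maps each vocabulary piece to its log-probability; a
    segmentation's score is the sum of its pieces' log-probabilities.
    Returns None if the word cannot be covered by vocabulary pieces.
    """
    n = len(word)
    best = [-math.inf] * (n + 1)  # best[i]: best score for word[:i]
    back = [0] * (n + 1)          # back[i]: start index of the last piece
    best[0] = 0.0
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in log_probs and best[start] + log_probs[piece] > best[end]:
                best[end] = best[start] + log_probs[piece]
                back[end] = start
    if best[n] == -math.inf:
        return None
    pieces, end = [], n
    while end > 0:  # follow back-pointers from the end of the word
        pieces.append(word[back[end]:end])
        end = back[end]
    return pieces[::-1]


# Hypothetical piece probabilities, for illustration only.
probs = {"un": 0.1, "happi": 0.05, "ness": 0.1, "happiness": 0.002}
log_probs = {p: math.log(q) for p, q in probs.items()}
print(viterbi_segment("unhappiness", log_probs))  # ['un', 'happi', 'ness']
```

Here the three-piece split wins (0.1 × 0.05 × 0.1 = 0.0005) over "un" + "happiness" (0.1 × 0.002 = 0.0002): the unigram model picks the most probable segmentation, not the one with the longest pieces.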

Byte Pair Encoding (BPE) and WordPiece Tokenization

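Byte Pair Encoding (BPE) is another sub-word tokenization approach, closely related to WordPiece. Both start from characters and merge adjacent units, but they choose merges differently: BPE merges the most frequent pair, while WordPiece merges the pair that most increases the likelihood of the training data, which favors pairs whose parts rarely occur apart. Both effectively bridge the gap between character-based and word-based models.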
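The difference in merge selection shows up even on a tiny corpus. The Python sketch below compares one merge step under each rule; the word counts are made up, the WordPiece rule uses the commonly cited freq(ab) / (freq(a) × freq(b)) score as an assumption, and, for simplicity, characters carry no "##" prefix.

```python
from collections import Counter


def pair_stats(word_freqs):
    """Count characters and adjacent character pairs, weighted by word frequency."""
    unit_freq, pair_freq = Counter(), Counter()
    for word, freq in word_freqs.items():
        for ch in word:
            unit_freq[ch] += freq
        for a, b in zip(word, word[1:]):
            pair_freq[(a, b)] += freq
    return unit_freq, pair_freq


def bpe_choice(word_freqs):
    """BPE: merge the most frequent adjacent pair."""
    _, pair_freq = pair_stats(word_freqs)
    return max(pair_freq, key=pair_freq.get)


def wordpiece_choice(word_freqs):
    """WordPiece-style: merge the pair maximizing freq(ab) / (freq(a) * freq(b))."""
    unit_freq, pair_freq = pair_stats(word_freqs)
    return max(pair_freq, key=lambda p: pair_freq[p] / (unit_freq[p[0]] * unit_freq[p[1]]))


corpus = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}  # made-up counts
print(bpe_choice(corpus))        # ('u', 'g')
print(wordpiece_choice(corpus))  # ('g', 's')
```

BPE picks "u" + "g" because it occurs 20 times, whereas WordPiece picks "g" + "s": that pair occurs only 5 times, but "s" never appears without "g" in front of it.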

Hybrid Models: Combination of WordPiece and Other Tokenization Methods

In an attempt to leverage the best of multiple techniques, hybrid tokenization models have emerged that combine WordPiece with other methods such as morpheme or syllable segmentation. These variants aim to enhance the tokenization process and push the boundaries of model capabilities.

WordPiece Tokenization and its Application in Modern NLP Models

Let's walk through its applications across several modern NLP models and see why it has become such a valuable asset.

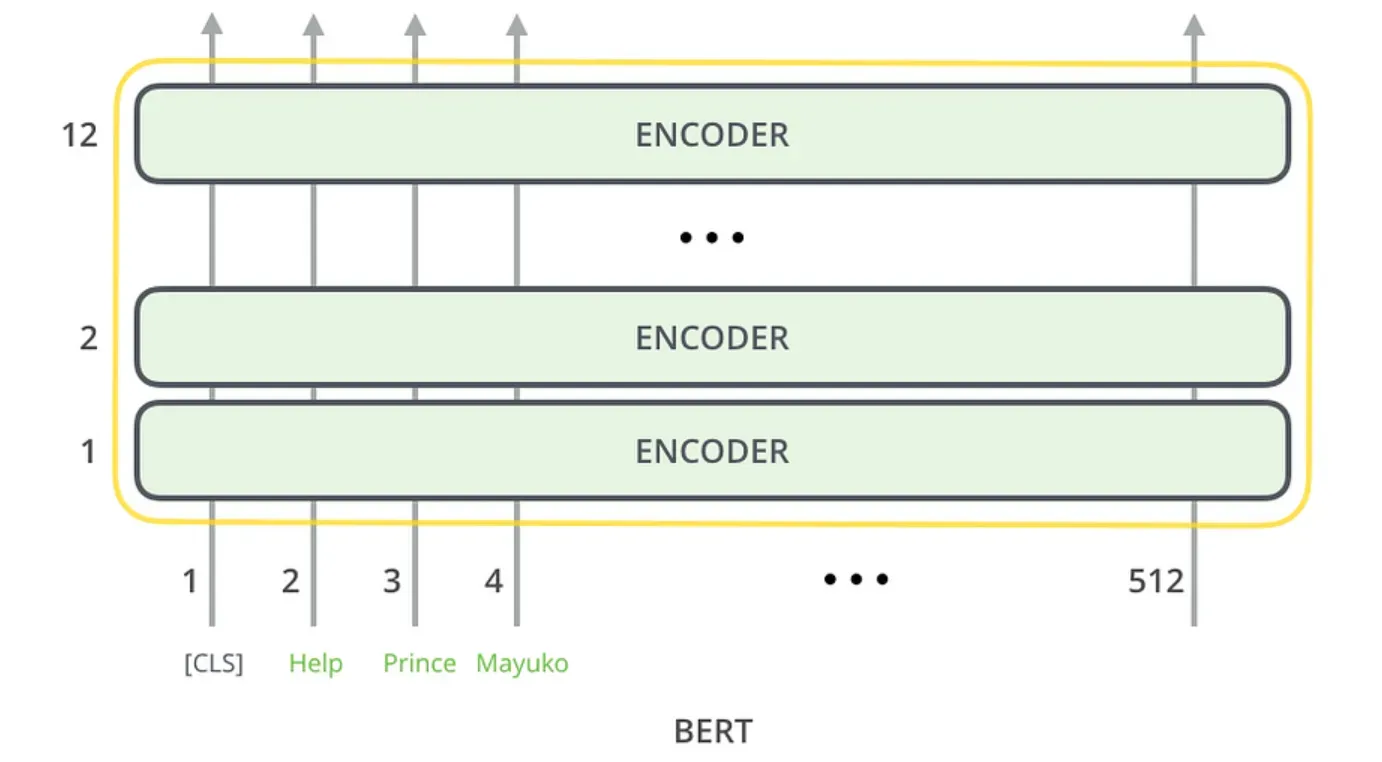

WordPiece Tokenization in Google's BERT

BERT, Google's pre-trained language model for NLP tasks, uses WordPiece tokenization for its input, with a vocabulary of about 30,000 tokens. This allows BERT to preserve rich semantic connections while keeping its vocabulary size modest, improving both accuracy and efficiency on downstream tasks.

Application in Transformer Based Models

WordPiece tokenization is widely used in Transformer-based models, enhancing their capability to handle a broader range of words, manage unknowns and improve the overall efficiency of data processing.

Google's Neural Machine Translation (GNMT)

WordPiece tokenization was originally developed at Google for Japanese and Korean voice search and was later adopted by GNMT. There, it is used to divide a sentence into tokens, balancing the flexibility of character-level translation against the efficiency of word-level translation.

Leveraging WordPiece in Multilingual Models

WordPiece tokenization's approach of using sub-word units makes it well suited to multilingual models. A single shared vocabulary can cover many languages, and related languages can share pieces, so one model can support multiple languages without a separate vocabulary for each.

Tackling Problems with WordPiece Tokenization

While WordPiece tokenization is incredibly potent, it isn't without its issues. It's essential to understand and address these challenges to better utilize the technique.

Addressing Token Illusion

Token illusion is a problem where WordPiece tokenization splits a word at misleading boundaries. The resulting pieces might be genuine words in the language but carry an unrelated meaning in the intended context. Addressing this requires monitoring tokenizer output and relying on models that take the surrounding context into account.

Dealing with Sub-Word Ambiguity

Sub-word ambiguity arises when tokenized sub-words have different meanings in different contexts. This requires careful handling and advanced modeling that incorporates a broader understanding of the context.

Overcoming Over-Segmentation

Over-segmentation occurs when words are broken into more, and smaller, sub-word pieces than necessary. This often obscures semantic meaning and lengthens sequences, so a careful balance is needed to get optimal results from tokenization.

Managing Rare Words with WordPiece Tokenization

While WordPiece tokenization handles unknown and rare words well, it is not foolproof. Splitting a rare word into sub-word units that do not convey its original meaning remains a challenge, so balance is again necessary.

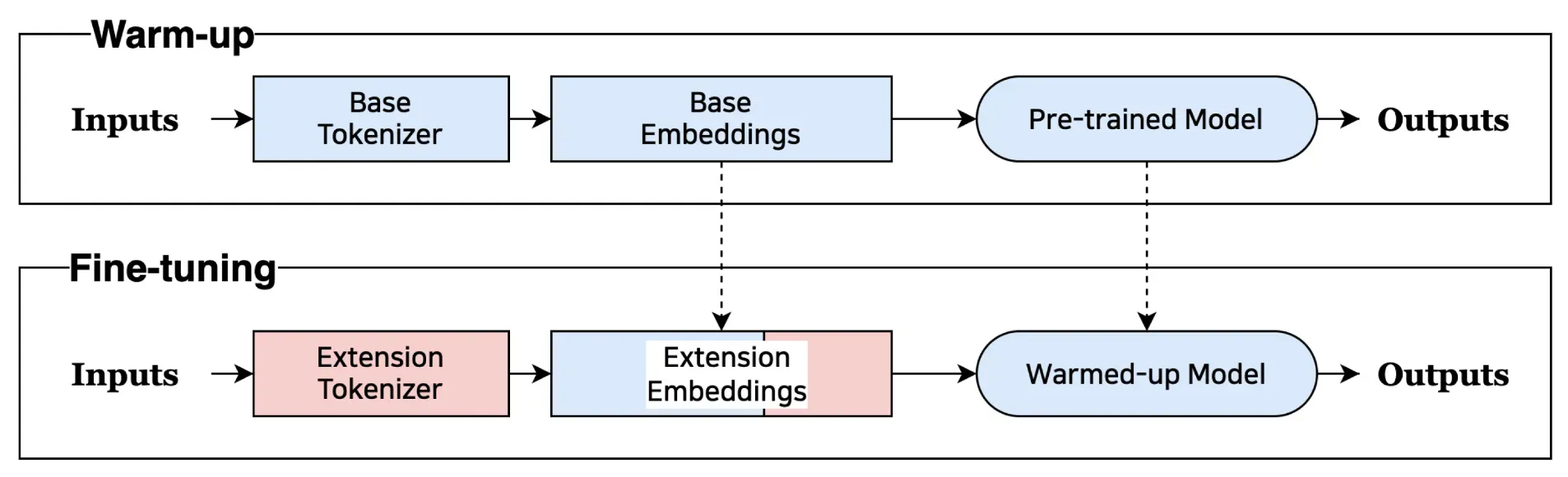

Fine-Tuning WordPiece Tokenization

With an understanding of its challenges, let's discuss how to fine-tune WordPiece tokenization to get the best out of it.

Adapting Distribution of Sub-Word Units

With careful analysis and ongoing adjustment, the distribution of sub-word units can be tailored to the specifics of the dataset, for example by training the vocabulary on in-domain text, to enhance the performance of the language model.

Balancing Granularity in WordPiece Tokenization

More granular tokenization (smaller pieces) keeps the vocabulary small and lets more rare words be spelled out from known pieces, but it also increases sequence length. Striking a precise balance is therefore critical for optimal outcomes.

Fine-Tuning Vocabulary Size in WordPiece Tokenization

A larger vocabulary can improve the accuracy of the model, since more words are kept whole, but it also enlarges the embedding matrix and makes the model more computationally intensive. Tuning the vocabulary size is therefore an important part of getting the most out of WordPiece tokenization.
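One concrete cost of a larger vocabulary is the input embedding matrix, which has one row per token. The Python sketch below counts only that matrix; 30,522 tokens and a hidden size of 768 are the values of bert-base-uncased, while 8,000 and 64,000 are hypothetical sizes for comparison, and the memory figure assumes float32 weights.

```python
def embedding_params(vocab_size, hidden_size):
    """Number of weights in a vocab_size x hidden_size embedding matrix."""
    return vocab_size * hidden_size


HIDDEN = 768  # hidden size of BERT-base
for vocab_size in (8_000, 30_522, 64_000):
    params = embedding_params(vocab_size, HIDDEN)
    mib = params * 4 / 2**20  # float32 weights take 4 bytes each
    print(f"{vocab_size:>6} tokens -> {params:>11,} weights (~{mib:.1f} MiB)")
```

For BERT-base the embedding matrix alone holds 30,522 × 768 = 23,440,896 weights, and the count grows linearly with vocabulary size.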

Addressing Multi-Lingual Challenges of WordPiece Tokenization

For truly global models, fine-tuning WordPiece tokenization requires additional considerations to effectively handle the complexities of various languages. This usually involves diversifying the underlying training data to reflect broad linguistic variability.

Who uses WordPiece Tokenization?

WordPiece Tokenization finds application in various industries and domains that involve NLP tasks. It is widely used by companies, researchers, and developers who deal with large volumes of text data and require effective language understanding capabilities.

Social Media Analysis

WordPiece Tokenization is extensively used in social media analysis to process and analyze large volumes of social media posts, comments, or tweets. It helps in identifying and categorizing user sentiments, detecting trending topics, and extracting meaningful insights from social media content.

Machine Translation

In machine translation tasks, WordPiece Tokenization plays a critical role in breaking down sentences into subword units before translation. This process enables the translation model to handle and translate rare or OOV words accurately and capture the nuances of the language.

Chatbots and Virtual Assistants

Chatbots and virtual assistants leverage WordPiece Tokenization to understand user queries and generate appropriate responses. By breaking down user queries into subword units, chatbots can better comprehend the context and intent behind the input, resulting in more accurate and meaningful interactions.

Named Entity Recognition

Named Entity Recognition (NER) systems rely on WordPiece Tokenization to accurately identify and classify named entities, such as person names, locations, organizations, and dates, within a given text. The subword granularity provided by WordPiece Tokenization enhances the performance of NER models.

Sentiment Analysis

WordPiece Tokenization is employed in sentiment analysis tasks to understand the sentiment or emotion expressed in a given text. By breaking down the text into subword units, sentiment analysis models can capture more nuanced variations in sentiment and provide more accurate sentiment classification.

When should WordPiece Tokenization be used?

WordPiece Tokenization is suitable for various scenarios where the advantages it offers align with the specific requirements of a task or a language. This section explores when WordPiece Tokenization is most effective and highlights the factors to consider when opting for this tokenization method.

Morphologically Rich Languages

WordPiece Tokenization is particularly beneficial for languages with rich morphology, such as Finnish, Hungarian, or Turkish. These languages build many distinct word forms from a single stem through suffixes and compounding, so a word-level vocabulary would need an enormous number of entries and would still miss many forms. WordPiece Tokenization can capture the recurring subword patterns and allow for better understanding of the language.

Handling OOV Words

If the task at hand involves handling OOV words effectively, WordPiece Tokenization can be a suitable choice. By breaking words into subword units, even unseen words can be represented using known units, improving the model's capability to capture their meaning and context.

Domain-Specific Language

When dealing with domain-specific languages or jargon where word tokenization may face difficulties, WordPiece Tokenization can provide a more effective alternative. The subword granularity helps capture the unique language patterns and terminology specific to the domain, resulting in better language understanding.

Large Text Corpus

WordPiece Tokenization is particularly useful when working with large text corpora, where the long tail of rare words makes a word-level vocabulary impractical. Because rare or OOV words are broken into shared subword units, the model sees those units across a wide range of texts, enhancing its language understanding capabilities.

Computational Resources

Considering the available computational resources is crucial when deciding to use WordPiece Tokenization. As the vocabulary size can be adjusted, it allows for managing memory and processing requirements better. However, larger vocabulary sizes may require more computational resources.

Frequently Asked Questions (FAQs)

What are the benefits of WordPiece Tokenization?

WordPiece Tokenization offers subword granularity, improved OOV word handling, flexible vocabulary size, improved rare-word handling, and parameter optimization compared to other tokenization methods.

What is an example of word tokenization?

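An example of word tokenization is splitting the sentence "I love cats." into the tokens ['I', 'love', 'cats', '.']. Splitting on whitespace alone would give ['I', 'love', 'cats.'], leaving the period attached to the last word.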
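A minimal Python sketch contrasting plain whitespace splitting with a simple regular expression that separates punctuation. The pattern is one simple choice for illustration, not a standard tokenizer.

```python
import re

sentence = "I love cats."
whitespace_tokens = sentence.split()                # ['I', 'love', 'cats.']
word_tokens = re.findall(r"\w+|[^\w\s]", sentence)  # ['I', 'love', 'cats', '.']
print(whitespace_tokens)
print(word_tokens)
```

The pattern matches either a run of word characters or a single non-space punctuation character, so the period becomes its own token.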

What is an example of a subword tokenization?

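An example of subword tokenization is representing the word "unhappiness" as the subword tokens ['un', 'happi', 'ness'], written ['un', '##happi', '##ness'] in BERT-style WordPiece notation. The exact pieces depend on the learned vocabulary.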

What is the difference between BPE tokenizer and WordPiece tokenizer?

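BPE builds its vocabulary by repeatedly merging the most frequent pair of adjacent symbols. WordPiece also merges adjacent symbols, but it chooses the pair that most increases the likelihood of the training corpus, which favors pairs whose parts rarely appear apart. The two also differ at encoding time: BPE replays its learned merges in order, while WordPiece uses greedy longest-match-first lookup against its vocabulary.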

What is a sentence with the word token in it?

Here is a sentence with the word "token": “Please hand me a token to use for the bus fare.”