What is Tokenization?

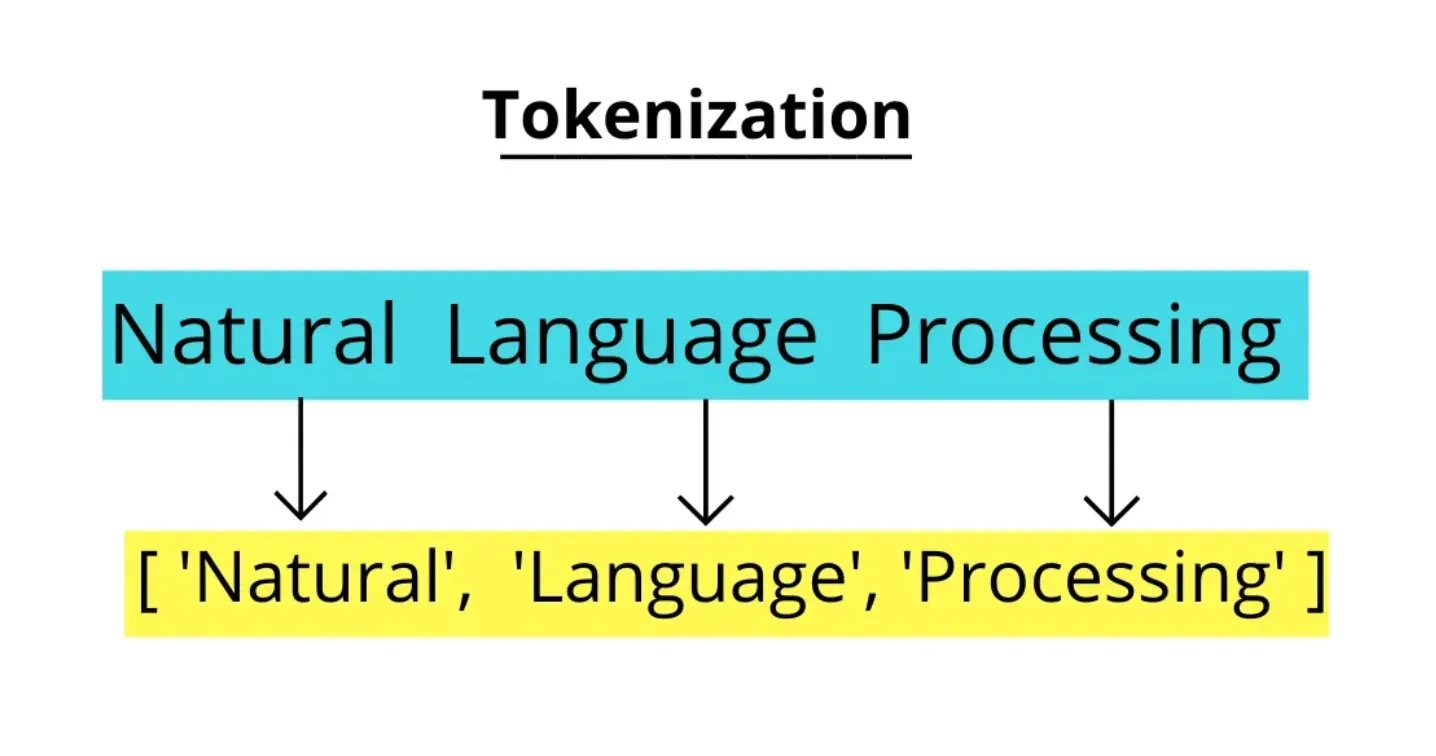

Tokenization is a fundamental step in natural language processing (NLP). It's the process of breaking down text into smaller pieces, known as "tokens." Tokens usually represent words, but depending on the task at hand they can also be sentences, subwords, or individual characters.

Think of tokenization like slicing a pie. If each piece of the pie is a word, then tokenization is the act of making those slices. For a text like "I love apple pie!", tokenization turns that sentence into separate tokens: ["I", "love", "apple", "pie", "!"]. This helps machines understand and process the text more efficiently by focusing on one 'piece' at a time.
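The pie example can be reproduced with a few lines of Python. This is a minimal sketch using only the standard library; the function name `tokenize` and the regular expression are illustrative choices, not a standard tokenizer.

```python
import re

def tokenize(text):
    """Split text into word tokens and single-character punctuation tokens."""
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches one character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("I love apple pie!")
print(tokens)  # ['I', 'love', 'apple', 'pie', '!']
```

A pattern this simple treats every punctuation mark as its own token, which is enough for the example sentence but not for contractions or abbreviations.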

Through tokenization, we can transform textual data into a format that's more suitable for analysis or further processing in tasks like part-of-speech tagging, entity extraction, and others. It makes raw text more accessible and manageable for NLP tasks.

Why is Tokenization important?

In this section, we'll discuss the importance of tokenization in natural language processing.

Simplifying Text Analysis

Tokenization simplifies text analysis by breaking complex structures into manageable units, which makes downstream processing more efficient and easier to organize.

Facilitates Further Processing

Tokenization serves as a prerequisite to many NLP tasks. Once the text is tokenized into individual words or sentences, further processes like stemming, lemmatization, or part-of-speech tagging can be applied.

Supports Language Understanding

These smaller units, or tokens, help in understanding the context, semantics, and structure of the language. This is vital to enhancing machine comprehension, contributing to the effectiveness of many natural language processing applications.

Improves Model Performance

Tokenization can significantly affect model performance. By correctly identifying tokens, models can more accurately understand the input data, directly influencing tasks like sentiment analysis, translation, or question answering.

Aids in Feature Extraction

In text classification tasks, tokens often serve as features. Clear, well-defined tokens enable more accurate feature representation, thereby improving the overall classification results.
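One common way tokens become features is a bag-of-words count, where each distinct token is a feature and its frequency is the value. The sketch below assumes a simple lowercase word tokenizer; `bag_of_words` is a hypothetical helper name, and real pipelines usually add a fixed vocabulary and weighting.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Count how often each lowercase word token appears in the text."""
    tokens = re.findall(r"\w+", text.lower())
    return Counter(tokens)

features = bag_of_words("Great pie, great price!")
print(features["great"])  # 2
print(features["pie"])    # 1
```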

Tokenization thus forms an essential part of the preprocessing pipeline in NLP, playing an important role in helping machines understand and process human language effectively.

How does Tokenization Work?

In this section, we will focus on understanding the tokenization process.

Identifying Token Boundaries

A crucial aspect of tokenization is identifying the boundaries between tokens. These boundaries could be spaces, punctuation marks, or special characters. The tokenization process involves separating the input text into units based on these boundaries, which are then considered tokens.
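To make the idea of boundaries concrete, the following sketch records where each token starts and ends in the input. It assumes whitespace and punctuation are the only boundaries; `tokenize_with_spans` is an illustrative name.

```python
import re

# Words are runs of word characters; any other non-space character is its own token.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")

def tokenize_with_spans(text):
    """Return (token, start, end) triples, where text[start:end] == token."""
    return [(m.group(), m.start(), m.end()) for m in TOKEN_PATTERN.finditer(text)]

spans = tokenize_with_spans("Hi, Bob.")
print(spans)  # [('Hi', 0, 2), (',', 2, 3), ('Bob', 4, 7), ('.', 7, 8)]
```

Keeping the offsets lets later steps map a token back to its exact position in the original text.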

Techniques for Tokenization

Several techniques can be employed for tokenization, depending on the language and the rules required. Common options include whitespace tokenization, rule-based tokenization, and the tokenizers provided by NLP libraries such as NLTK and spaCy. The choice of technique directly impacts the effectiveness and accuracy of further NLP tasks.

Handling Language-Specific Nuances

Tokenization becomes complex when handling language-specific nuances such as contractions, abbreviations, or multi-word expressions. Advanced tokenization algorithms account for these complexities, ensuring better and more contextually accurate representations of the input text.
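The difference between a naive split and a rule-based approach shows up quickly on contractions and abbreviations. The sketch below is a toy rule set, not how NLTK or spaCy work internally; the abbreviation list and the name `tokenize_rules` are assumptions made for illustration.

```python
import re

# Illustrative abbreviation list; a real tokenizer would use a much larger,
# language-specific resource.
ABBREVIATIONS = ["Dr.", "e.g.", "U.S."]

_abbrev = "|".join(re.escape(a) for a in ABBREVIATIONS)
# Order matters: try known abbreviations first, then words (allowing internal
# apostrophes so "isn't" stays whole), then single punctuation characters.
PATTERN = re.compile(rf"{_abbrev}|\w+(?:'\w+)*|[^\w\s]")

def tokenize_rules(text):
    """Tokenize while keeping listed abbreviations and contractions intact."""
    return PATTERN.findall(text)

sentence = "Dr. Lee isn't in the U.S. today."
print(sentence.split())
# ['Dr.', 'Lee', "isn't", 'in', 'the', 'U.S.', 'today.']
print(tokenize_rules(sentence))
# ['Dr.', 'Lee', "isn't", 'in', 'the', 'U.S.', 'today', '.']
```

Whitespace splitting leaves the final period glued to "today", while the rule-based version separates it without breaking "Dr." or "U.S." apart.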

Importance in Natural Language Processing

Tokenization lays the foundation for numerous NLP tasks like sentiment analysis, text classification, and machine translation. By converting unstructured text into structured, analyzable units, tokenization enables more efficient and accurate processing in subsequent steps.

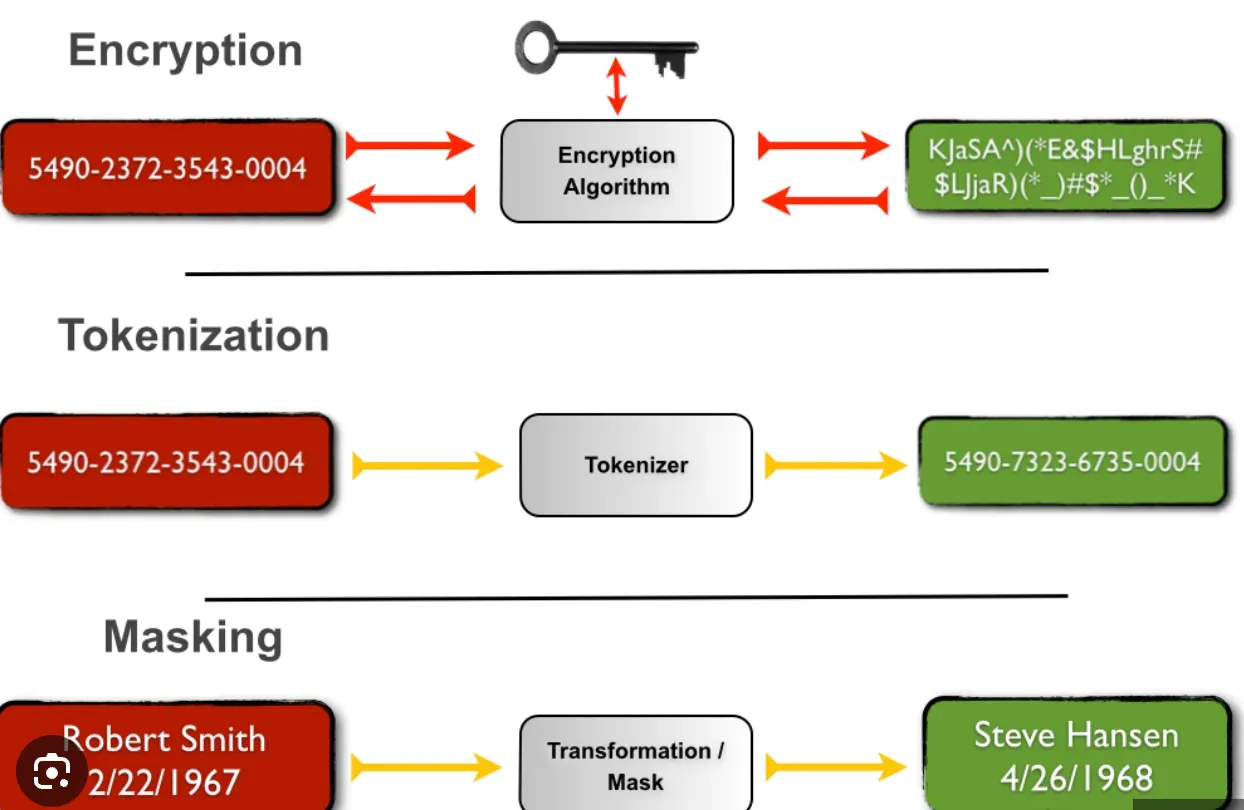

Tokenization vs Encryption vs Masking

The term tokenization is also used in data security, where it means something different from the NLP sense described above. In this section, we'll contrast three distinct data protection measures (tokenization, encryption, and masking) across five parameters.

Methodology of Data Transformation

Tokenization replaces sensitive data with non-sensitive substitutes known as tokens, often preserving the original data's length and format. Encryption transforms data into ciphertext using an algorithm and a key. Masking conceals part of the data, replacing sensitive elements with random characters or other data.

Reversibility and Access to Original Data

Both tokenized and encrypted data can be reverted to their original form, but in different ways. A token has no mathematical relationship to the value it replaces, so the original can only be recovered by looking it up in the system that issued the token (often called a token vault). Encrypted data can be recovered by anyone who holds the right key. Masking, however, is typically irreversible.
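The contrast between a reversible, vault-based token and an irreversible mask can be sketched as follows. This is a toy in-memory illustration under stated assumptions: `TokenVault` and `mask` are hypothetical names, the vault has no access control or persistence, and encryption is left out because the Python standard library does not ship a cipher.

```python
import secrets

class TokenVault:
    """Toy in-memory vault mapping random tokens to original values.

    Hypothetical and not production-ready: a real vault would add access
    control, auditing, and durable, protected storage.
    """

    def __init__(self):
        self._token_to_value = {}

    def tokenize(self, value):
        # The token is random, so it reveals nothing about the value.
        token = secrets.token_hex(8)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token):
        # Recovery is a lookup, possible only with access to the vault.
        return self._token_to_value[token]

def mask(value, visible=4, fill="*"):
    """Hide all but the last `visible` characters; the hidden part is not recoverable."""
    return fill * (len(value) - visible) + value[-visible:]

vault = TokenVault()
card = "4111111111111111"
token = vault.tokenize(card)
print(vault.detokenize(token) == card)  # True
print(mask(card))                       # ************1111
```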

Use Cases

Tokenization is frequently used in payment processing systems to secure credit card numbers. Encryption is ubiquitous, safeguarding emails, secure websites, and files. Masking is often used for non-production data environments, such as testing or development, to obfuscate sensitive information.

Impact on Data Structure

Tokenization can retain the original data's format and structure, which helps existing systems keep working without schema changes. Conventional encryption usually changes the data's length and format depending on the algorithm, although format-preserving encryption schemes exist. Masking also maintains the format but modifies the content, making the hidden portion unreadable.
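As a rough illustration of format preservation, the sketch below generates a token with the same length and separator positions as a card number while keeping the last four digits. The function name and the keep-last-four convention are assumptions for this example; to be reversible, such a token would still need to be stored alongside the original in a vault.

```python
import secrets

def format_preserving_token(card_number, keep_last=4):
    """Replace all but the last `keep_last` digits with random digits.

    Non-digit characters such as hyphens stay in place, so the token has
    the same length and layout as the input.
    """
    digits_total = sum(ch.isdigit() for ch in card_number)
    to_replace = digits_total - keep_last
    out = []
    for ch in card_number:
        if ch.isdigit() and to_replace > 0:
            out.append(str(secrets.randbelow(10)))  # random digit 0-9
            to_replace -= 1
        else:
            out.append(ch)
    return "".join(out)

token = format_preserving_token("4111-1111-1111-1234")
print(len(token), token[-4:])  # 19 1234
```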

Performance and Resource Load

Tokenization typically carries a low computational load, since issuing or looking up a token is cheap, although a vault-based design adds a lookup and storage dependency. Encryption, particularly strong multi-layered encryption, can be resource-intensive. Masking impacts system performance only marginally, as it usually modifies just a portion of the data.

How do we implement Tokenization?

As a data protection technique, tokenization is implemented in various systems and industries. Let's explore its practical applications in specific domains:

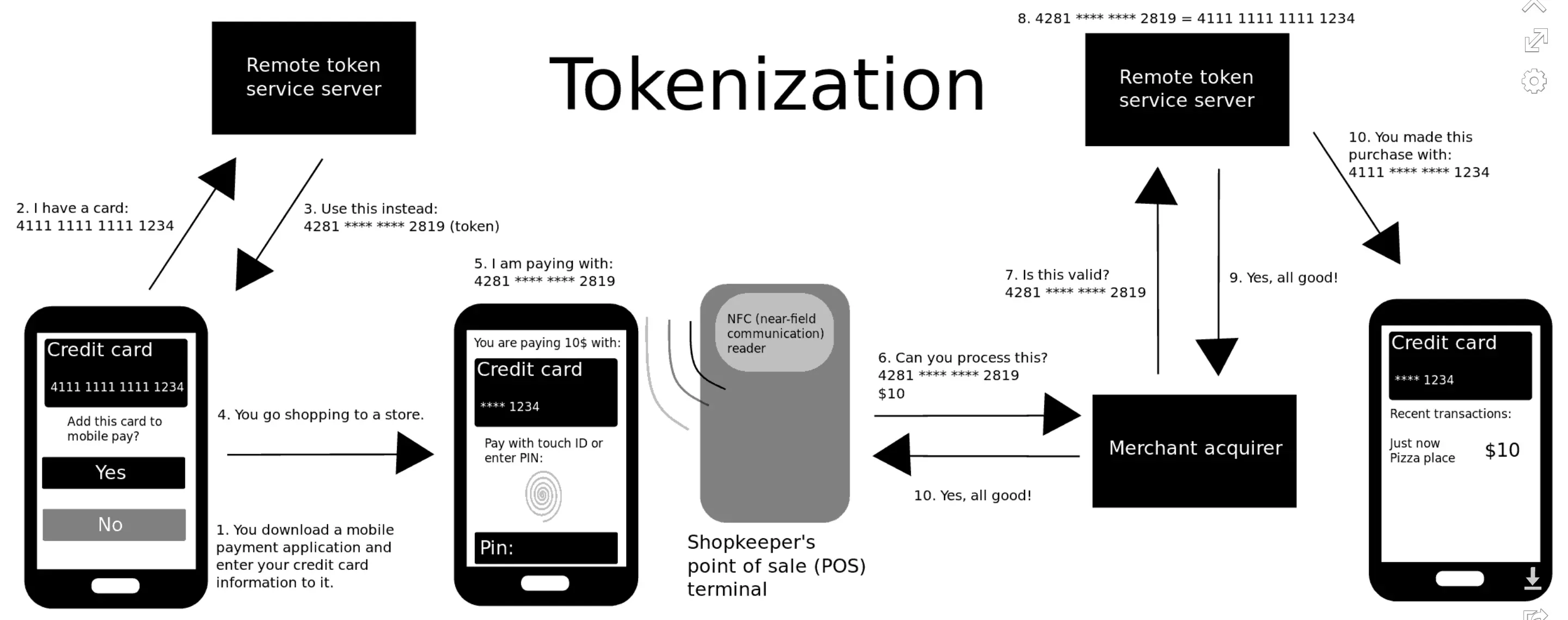

Tokenization in Payment Systems

Tokenization plays a crucial role in securing payment systems by replacing sensitive cardholder data with random tokens. This helps prevent unauthorized access to card information during transactions and reduces the risk of fraud. Payment processors and merchants often employ tokenization to enhance payment security and comply with industry standards, such as the Payment Card Industry Data Security Standard (PCI DSS).

Tokenization in Healthcare Systems

Healthcare systems handle sensitive patient data, making tokenization a useful tool for protecting patient privacy and complying with data protection regulations, such as the Health Insurance Portability and Accountability Act (HIPAA). Tokenization is used to replace protected health information (PHI), ensuring that only authorized individuals can access the original data while maintaining data integrity and confidentiality.

Tokenization in E-commerce Platforms

Tokenization plays a significant role in securing e-commerce platforms by safeguarding customer payment information. By replacing credit card details with tokens, online retailers can minimize the risk of data breaches and reduce their liability for storing sensitive information. Tokenization enhances customer confidence in the security of their transactions, as their payment data remains securely stored in token form.

Tokenization in Mobile Applications

As mobile applications continue to gain popularity, tokenization is crucial for securing sensitive data stored or transmitted through mobile devices. Mobile payment systems, loyalty programs, and other applications that handle personal data utilize tokenization to protect user information. By employing tokenization, mobile app developers can ensure the integrity of user data, minimize security risks, and enhance user trust in the application.

Tokenization is a versatile data protection technique implemented in various systems across different industries, demonstrating its effectiveness in securing sensitive information and mitigating potential risks.

Frequently Asked Questions (FAQs)

Can tokenization be language-dependent?

Yes, tokenization can be language-dependent. Different languages may have different rules for separating text into tokens, making language-specific tokenization necessary for accurate analysis.

What is the difference between tokenization and stemming?

Tokenization breaks text into tokens, while stemming reduces words to their base or root form. Tokenization focuses on dividing text; stemming focuses on collapsing word variations and is usually applied to tokens after they have been produced.
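The two steps can be shown side by side. The stemmer below is a deliberately naive suffix stripper written for this example (`toy_stem` is a hypothetical name); it is not the Porter algorithm or any library's stemmer and will mishandle many real words.

```python
import re

def tokenize_words(text):
    """Tokenization: divide the text into lowercase word tokens."""
    return re.findall(r"\w+", text.lower())

def toy_stem(word):
    """Stemming: strip a few common English suffixes (naive illustration)."""
    for suffix in ("ing", "ed", "s"):
        # Require a stem of at least three characters to avoid over-stripping.
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

tokens = tokenize_words("Cats jumped while walking")
stems = [toy_stem(t) for t in tokens]
print(tokens)  # ['cats', 'jumped', 'while', 'walking']
print(stems)   # ['cat', 'jump', 'while', 'walk']
```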

What is the impact of tokenization on text analysis accuracy?

Accurate tokenization is crucial for text analysis as it directly impacts the accuracy of tasks like sentiment analysis, entity recognition, and language modeling. Properly tokenized text ensures meaningful and reliable analysis results.

Can tokenization be applied to non-textual data?

Yes, tokenization can be applied to non-textual data like numerical data or sequences of symbols. In such cases, tokens can represent units of data or specific elements of the data, allowing for further analysis and processing.

Is tokenization a reversible process?

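In the NLP sense, tokenization is not always a reversible process. Depending on the task, tokens may be lowercased, stripped of punctuation, or otherwise modified, which makes it impossible to reconstruct the original text exactly. This differs from data-security tokenization, where the original value can be recovered through the system that issued the token.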
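A short sketch shows how information can be lost. It assumes a tokenizer that lowercases the text and drops punctuation and spacing; `tokenize_lossy` is an illustrative name.

```python
import re

def tokenize_lossy(text):
    """Lowercase the text and keep only word tokens, discarding punctuation and spacing."""
    return re.findall(r"\w+", text.lower())

original = "Hello,   World!"
rebuilt = " ".join(tokenize_lossy(original))
print(rebuilt)              # hello world
print(rebuilt == original)  # False
```

Casing, punctuation, and the exact whitespace are gone, so joining the tokens cannot restore the input. A tokenizer that keeps every character and its offsets avoids this loss.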