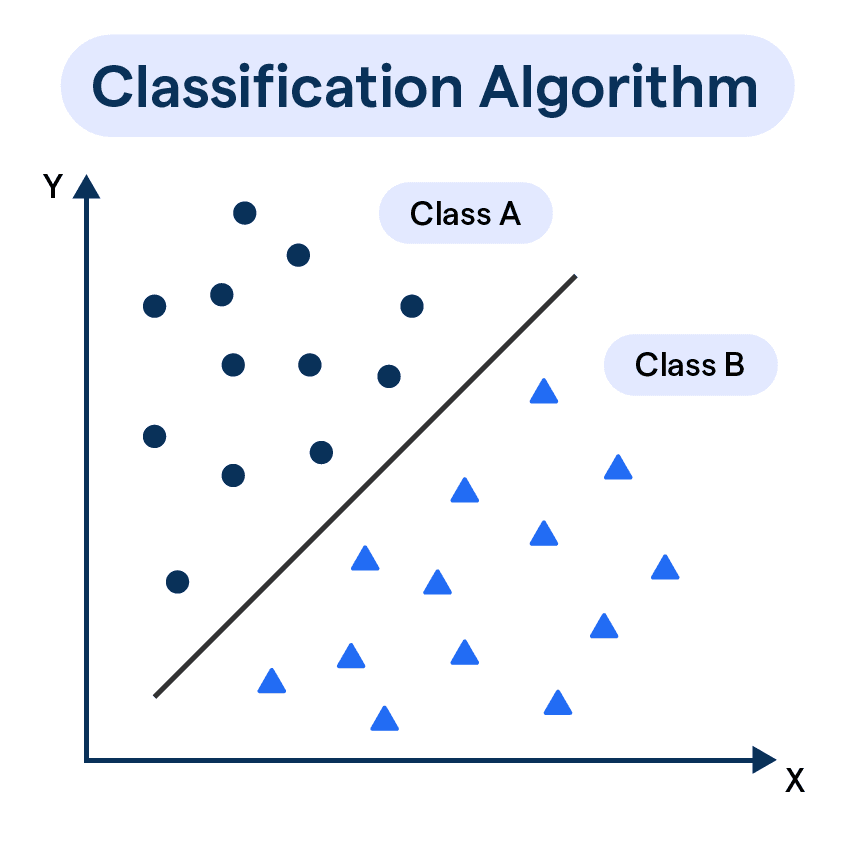

What are Classification Algorithms?

Classification algorithms are a type of machine learning technique used to predict the category or class of an object based on its features.

These algorithms can be employed in numerous applications, including spam filtering, image recognition, and medical diagnosis.

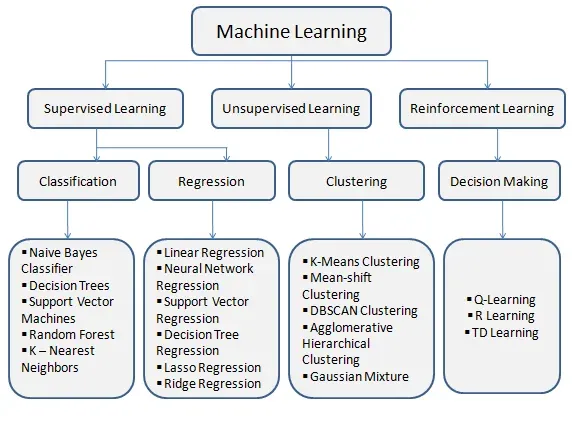

Supervised Learning

In supervised learning, the algorithm learns from labeled training data and then uses what it has learned to make predictions on new, unlabeled data.

It requires a dataset that includes both the input features and the desired output, or target variable, which in classification is a categorical class label.


Unsupervised Learning

Unsupervised learning does not rely on labeled data for training but instead seeks to uncover hidden patterns or structures in the input features.

This type of learning is commonly used for clustering or dimensionality reduction tasks.

Semi-Supervised Learning

Semi-supervised learning combines aspects of both supervised and unsupervised learning.

This approach uses a small amount of labeled data to guide learning from a much larger pool of unlabeled data, reducing the need for large, fully labeled datasets.

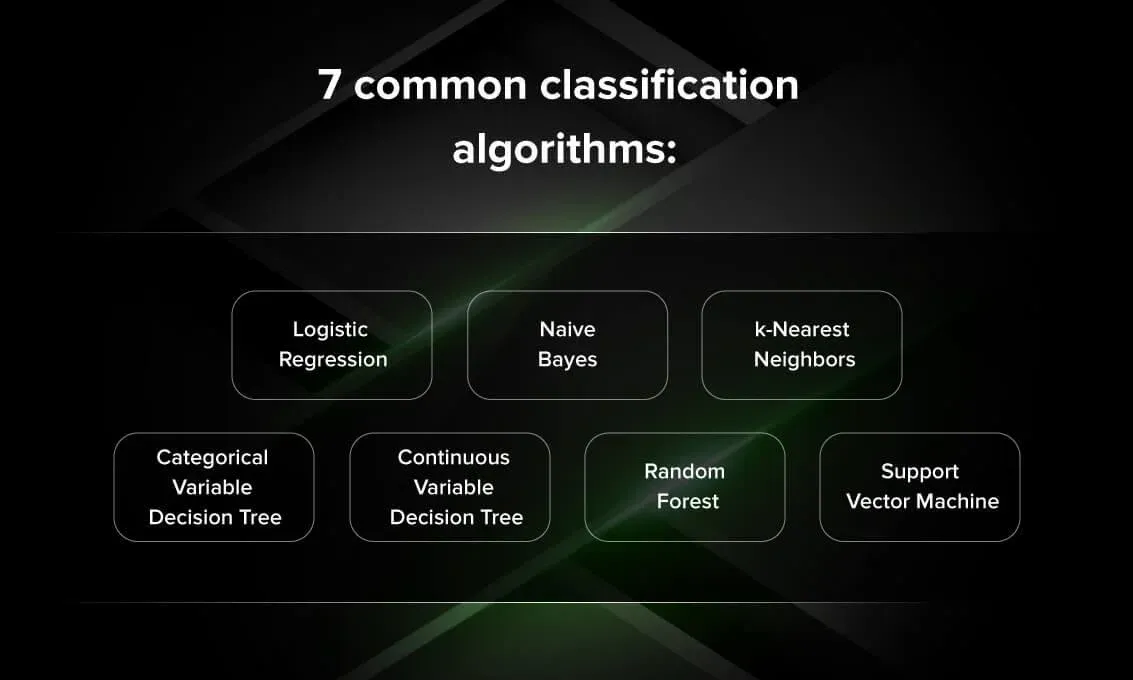

Common Classification Algorithms

There are various classification algorithms available, each with its strengths and weaknesses. This section provides a brief overview of some common techniques.

Logistic Regression

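Logistic Regression is a statistical method that models the probability of a categorical outcome by passing a weighted sum of the input features through the logistic (sigmoid) function.

In its basic form it handles binary classification, and its multinomial (softmax) and one-vs-rest variants extend it to multi-class problems, making it a fundamental algorithm for both.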
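As a rough illustration, the sketch below fits a binary logistic regression on a single feature with plain batch gradient descent, using only the Python standard library. The toy data (hours studied versus pass/fail), the learning rate, and the epoch count are made-up assumptions; real libraries use more sophisticated solvers and usually add regularization.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_regression(xs, ys, lr=0.1, epochs=5000):
    """Fit weight w and bias b for a single numeric feature by batch
    gradient descent on the average log-loss (cross-entropy)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # gradient of log-loss w.r.t. w*x + b
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical toy data: hours studied -> passed (1) or failed (0)
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic_regression(hours, passed)
for h in (1.0, 4.5):
    p = sigmoid(w * h + b)  # estimated P(class = 1 | x = h)
    print(f"hours={h}: P(pass)={p:.2f}, predicted class={int(p >= 0.5)}")
```

Mapping probabilities at or above 0.5 to class 1 is a common default cutoff, not a requirement; the threshold can be moved when one kind of error is costlier than the other.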

Decision Tree

A Decision Tree is a flowchart-like structure in which internal nodes represent feature tests, branches signify the possible outcomes of these tests, and leaf nodes correspond to the class labels.

Decision Trees are simple to understand and visualize, which makes them popular when a model's decisions need to be explained.
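The core step in growing a tree is choosing the feature test for each internal node. The sketch below assumes Gini impurity as the split criterion (the criterion used by CART; entropy is a common alternative) and tries every feature/threshold pair, keeping the split whose two branches have the lowest weighted impurity. The function names and the petal measurements are illustrative only.

```python
def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(rows, labels):
    """Try every test 'row[feature] <= threshold' and return
    (weighted_impurity, feature_index, threshold) for the best one."""
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a useful split sends samples down both branches
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best

# Hypothetical data: [petal_length_cm, petal_width_cm] -> species
rows = [[1.4, 0.2], [1.3, 0.2], [1.5, 0.3], [4.7, 1.4], [4.5, 1.5], [5.1, 1.9]]
labels = ["setosa", "setosa", "setosa", "versicolor", "versicolor", "virginica"]
print(best_split(rows, labels))
```

A full tree builder would apply `best_split` recursively to each branch until the leaves are pure or a depth limit is reached.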

Support Vector Machines

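Support Vector Machines (SVMs) are powerful classifiers that look for the hyperplane separating the classes with the largest margin, that is, the greatest distance to the nearest training points of each class.

SVMs handle high-dimensional data well and, thanks to soft margins (which tolerate some misclassified points) and kernel functions (which implicitly map the data into a higher-dimensional space), remain effective even when the classes are not linearly separable.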
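To make the maximum-margin idea concrete, here is a minimal linear SVM trained by batch subgradient descent on the soft-margin (hinge-loss) objective, assuming labels of -1 and +1. The regularization strength, learning rate, epoch count, and toy points are assumptions chosen for illustration; production SVMs use dedicated solvers and support kernels, which this sketch does not.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Batch subgradient descent on the soft-margin SVM objective
        lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b)))
    for labels y in {-1, +1}. Only points inside the margin (or
    misclassified) contribute to the hinge-loss subgradient."""
    dim, n = len(points[0]), len(points)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # subgradient of the regularizer
        grad_b = 0.0
        for x, y in zip(points, labels):
            if y * (dot(w, x) + b) < 1:  # margin violation
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Which side of the hyperplane w.x + b = 0 the point falls on."""
    return 1 if dot(w, x) + b >= 0 else -1

# Hypothetical, linearly separable 2-D data
points = [[2, 3], [3, 3], [2.5, 4], [3, 2], [-1, -1], [-2, -1], [-1, -2], [-2, -2.5]]
labels = [1, 1, 1, 1, -1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print(w, b, [predict(w, b, x) for x in points])
```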

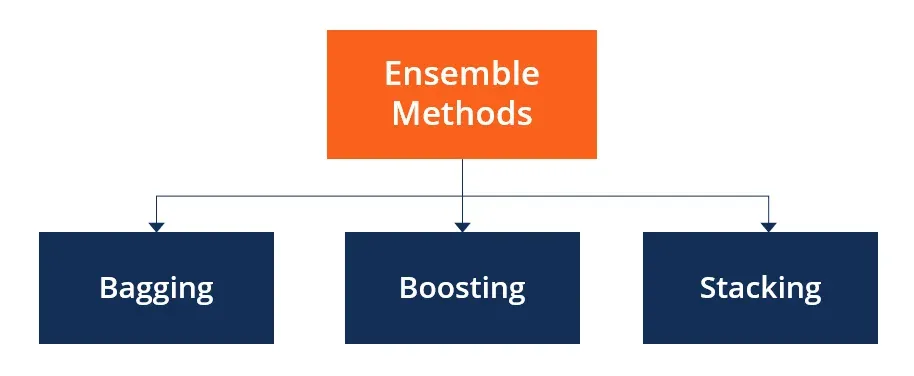

Ensemble Methods

Ensemble methods are techniques that combine predictions from multiple individual models to achieve better overall performance.

Ensemble techniques can be employed to reduce the risk of overfitting and improve the accuracy of classification algorithms.

Bagging

Bagging, short for Bootstrap Aggregating, is an ensemble method that draws multiple bootstrap samples from the original dataset, that is, samples of the same size drawn with replacement.

A separate classifier is trained on each sample, and their predictions are combined, typically by majority vote, to produce the final prediction.
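A minimal sketch of bagging, assuming a single numeric feature and a one-split decision stump as the base classifier (both simplifications made here for brevity). Each stump is trained on its own bootstrap sample, and the ensemble predicts by majority vote; `fit_stump`, `bagging_fit`, and `bagging_predict` are hypothetical names.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-feature decision stump: predict `below` when x <= threshold
    and `above` otherwise, choosing the threshold with the fewest errors."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        below = Counter(left).most_common(1)[0][0]
        above = Counter(right).most_common(1)[0][0] if right else below
        errors = sum(y != below for y in left) + sum(y != above for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, below, above)
    _, t, below, above = best
    return lambda x: above if x > t else below

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample: n draws *with replacement*
    from the n original training examples."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Aggregate the individual predictions by majority vote."""
    return Counter(model(x) for model in models).most_common(1)[0][0]

# Hypothetical 1-D data with one noisy label (x=6)
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = ["a", "a", "a", "a", "b", "a", "b", "b", "b", "b"]
models = bagging_fit(xs, ys)
print([bagging_predict(models, x) for x in (1.5, 9.5)])
```

Fixing `seed` makes the resampling, and therefore the ensemble, reproducible.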

Boosting

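Boosting is an iterative technique that trains classifiers one after another, with each new classifier focusing on the examples its predecessors handled poorly; AdaBoost, for example, increases the weights of training examples that were misclassified in the previous iteration.

The resulting weak classifiers are combined, each weighted by how well it performed, into a strong classifier; training typically runs for a fixed number of rounds or until performance stops improving.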
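Reweighting misclassified examples is the scheme used by AdaBoost, one specific boosting algorithm (gradient boosting, for instance, instead fits each new model to the remaining errors). The sketch below assumes one numeric feature, labels in {-1, +1}, and decision stumps as the weak learners. On the toy pattern `+ + - - + +`, no single stump is correct on every point, but three boosted stumps are.

```python
import math

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump
    h(x) = polarity if x > threshold else -polarity."""
    best = None
    # The extra threshold below every x yields a constant classifier.
    for t in sorted(set(xs)) + [min(xs) - 1]:
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x > t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best

def adaboost_fit(xs, ys, rounds=10):
    """AdaBoost with decision stumps for labels in {-1, +1}."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with uniform example weights
    ensemble = []  # (alpha, threshold, polarity) per round
    for _ in range(rounds):
        err, t, pol = fit_weighted_stump(xs, ys, weights)
        if err >= 0.5:
            break  # weak learner no better than chance: stop
        err = max(err, 1e-10)  # guard against log/division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # this stump's vote weight
        ensemble.append((alpha, t, pol))
        # Misclassified examples are scaled up by e^alpha, correct ones
        # scaled down by e^-alpha; then renormalize to sum to 1.
        weights = [w * math.exp(-alpha * y * (pol if x > t else -pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * (pol if x > t else -pol) for alpha, t, pol in ensemble)
    return 1 if score >= 0 else -1

xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single threshold separates this pattern
ensemble = adaboost_fit(xs, ys, rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```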

Random Forest

Random Forest is an extension of the Decision Tree algorithm that constructs many trees and combines their predictions (by majority vote, for classification) to reduce variance and improve accuracy.

Each tree is grown on a bootstrap sample of the training data and considers only a random subset of the features at each split, which reduces the correlation between trees and makes the ensemble more robust.
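Random forests combine two sources of randomness: bootstrap samples of the rows and a random subset of the features at each split (a common default for classification is roughly the square root of the number of features). To stay short, the sketch below assumes depth-1 trees (stumps), so it illustrates the two sampling steps rather than a full forest; all names and data are hypothetical.

```python
import random
from collections import Counter

def fit_random_stump(rows, labels, n_split_features, rng):
    """Depth-1 tree: sample a random subset of feature indices, then pick the
    (feature, threshold) split with the fewest misclassified examples."""
    candidates = rng.sample(range(len(rows[0])), n_split_features)
    best = None
    for f in candidates:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            below = Counter(left).most_common(1)[0][0]
            above = Counter(right).most_common(1)[0][0] if right else below
            errors = sum(y != below for y in left) + sum(y != above for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, below, above)
    _, f, t, below, above = best
    return lambda row: above if row[f] > t else below

def random_forest_fit(rows, labels, n_trees=51, n_split_features=1, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample of rows
        forest.append(fit_random_stump([rows[i] for i in idx],
                                       [labels[i] for i in idx],
                                       n_split_features, rng))
    return forest

def random_forest_predict(forest, row):
    """Majority vote over all trees."""
    return Counter(tree(row) for tree in forest).most_common(1)[0][0]

# Hypothetical data with two features
rows = [[1, 2], [2, 1], [1, 1], [2, 2], [8, 9], [9, 8], [8, 8], [9, 9]]
labels = ["a", "a", "a", "a", "b", "b", "b", "b"]
forest = random_forest_fit(rows, labels)
print(random_forest_predict(forest, [0.5, 0.5]), random_forest_predict(forest, [9.5, 9.5]))
```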

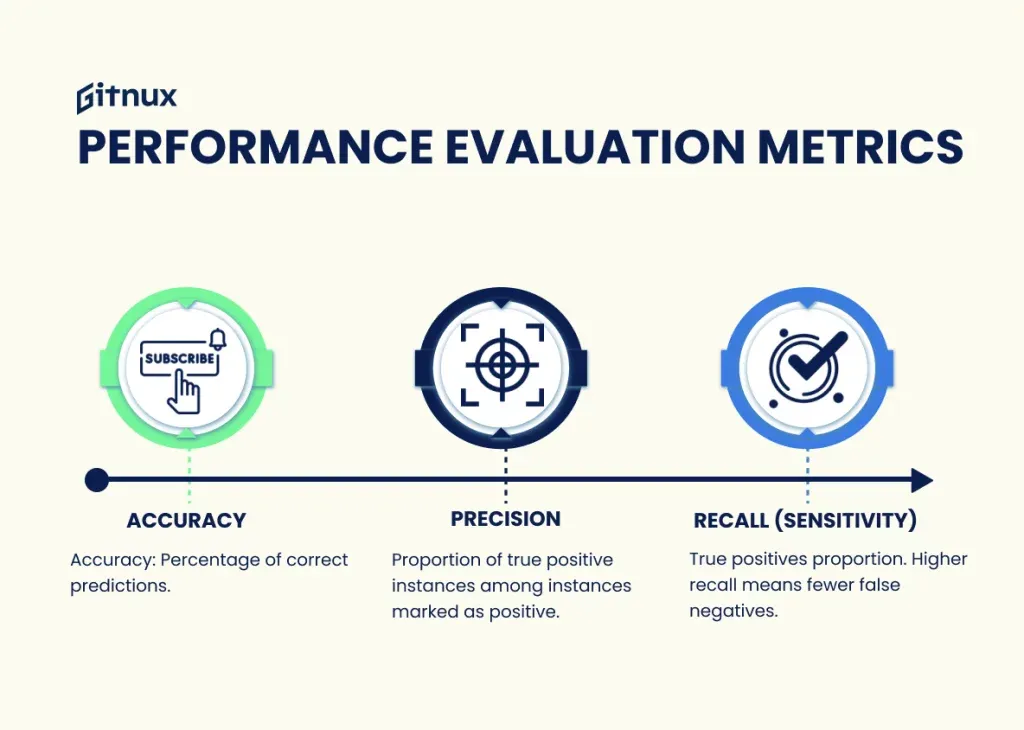

Performance Evaluation Metrics

Classification algorithms can be evaluated through various performance metrics, helping determine their effectiveness and suitability for particular tasks.

Accuracy

Accuracy is the simplest performance metric, calculated as the number of correct predictions divided by the total number of predictions.

Accuracy is useful for balanced datasets but can be misleading when dealing with imbalanced classes.
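A quick sketch of why accuracy can mislead on imbalanced data, using made-up labels: a model that always predicts the majority class still scores highly.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical imbalanced labels: 95 negatives (0), 5 positives (1)
y_true = [0] * 95 + [1] * 5
always_negative = [0] * 100  # a "model" that never predicts the positive class
print(accuracy(y_true, always_negative))  # 0.95
```

The 0.95 score hides the fact that this model never detects a single positive example.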

Precision and Recall

Precision and recall are performance metrics that stay informative when classes are imbalanced.

Precision is the proportion of positive predictions that are actually positive, TP / (TP + FP), while recall is the proportion of actual positive instances that the model correctly identifies, TP / (TP + FN), where TP, FP, and FN count true positives, false positives, and false negatives.
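The sketch below computes both metrics by counting true positives (TP), false positives (FP), and false negatives (FN). The labels are hypothetical, and returning 0.0 when a denominator is zero is one common convention, assumed here.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the class given by `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    # Convention assumed here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]  # TP=3, FP=2, FN=1
print(precision_recall(y_true, y_pred))  # (0.6, 0.75)
```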

F1 Score

The F1 Score is the harmonic mean of precision and recall, offering a single-number measure of a model's performance.

F1 Score is particularly useful when both false positives and false negatives are crucial to consider.
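Writing precision and recall in terms of the confusion-matrix counts gives an equivalent form of the F1 Score; the worked numbers use hypothetical counts TP = 3, FP = 2, FN = 1.

$$
F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$

$$
\text{precision} = \frac{3}{3+2} = 0.6, \qquad \text{recall} = \frac{3}{3+1} = 0.75, \qquad F_1 = \frac{2 \cdot 0.6 \cdot 0.75}{0.6 + 0.75} = \frac{2 \cdot 3}{2 \cdot 3 + 2 + 1} = \frac{2}{3} \approx 0.667
$$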

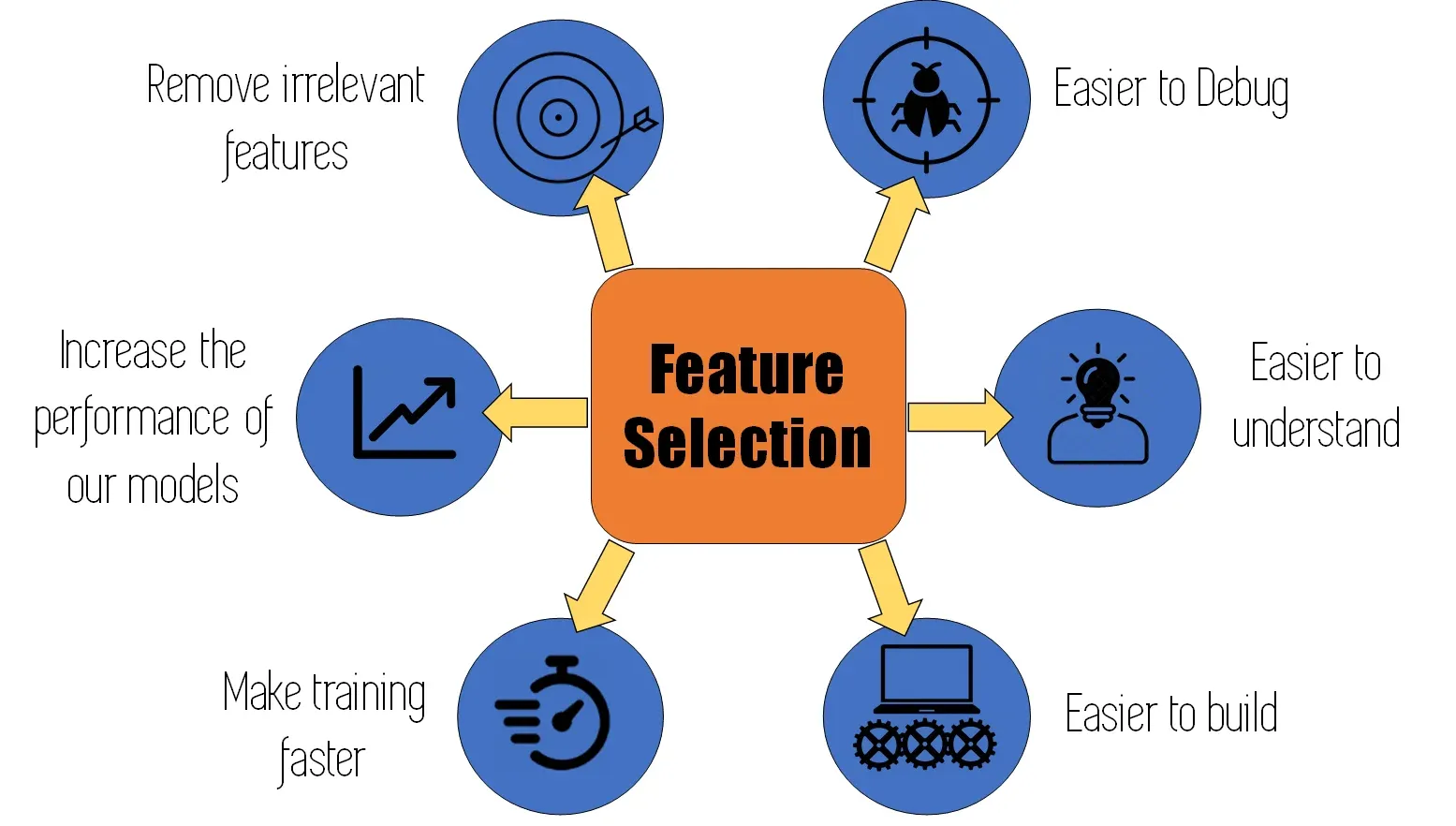

Feature Selection and Engineering

Feature selection and engineering are essential steps in the creation of effective classification models, as they can improve model performance, reduce overfitting, and decrease training time.

Filter Methods

Filter methods involve selecting features based on their statistical properties, such as correlation with the target variable or variance within the dataset.

These techniques are fast, but because they score each feature independently of the model, they ignore how features interact with one another and with the chosen algorithm.
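A minimal filter-method sketch, assuming numeric features and a 0/1 target: it drops (near-)constant features and ranks the rest by absolute Pearson correlation with the target, without training any model. Correlation is only one possible score (chi-squared tests and mutual information are common alternatives); the column names, data, and `k` are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient (0.0 if either input is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def filter_select(columns, target, k, min_variance=1e-8):
    """Score each feature on its own: drop near-constant features, then keep
    the k with the largest |correlation| to the target."""
    scores = {}
    for name, values in columns.items():
        mean = sum(values) / len(values)
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        if variance <= min_variance:
            continue  # variance filter
        scores[name] = abs(pearson(values, target))
    return sorted(scores, key=scores.get, reverse=True)[:k]

columns = {
    "feature_a": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],  # rises with the target
    "feature_b": [3.0, 1.0, 4.0, 1.0, 5.0, 9.0],  # less related
    "constant": [7.0] * 6,                        # zero variance
}
target = [0, 0, 0, 1, 1, 1]
print(filter_select(columns, target, k=2))
```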

Wrapper Methods

Wrapper methods search over subsets of features, training and evaluating the classification algorithm on each candidate subset.

Although computationally expensive, these techniques give a more accurate assessment of how a particular feature subset affects the algorithm’s performance.
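One common wrapper strategy is greedy forward selection. In the sketch below, `evaluate` is a hypothetical callback that would normally train the classifier on the given columns and return a validation score; `toy_evaluate` merely fakes such scores, including an interaction between two features that a per-feature filter would not detect.

```python
def forward_selection(features, evaluate, max_features=None):
    """Greedy sequential forward selection: start with no features, repeatedly
    add the one that most improves evaluate(subset), stop when none helps."""
    selected, remaining = [], list(features)
    best_score = float("-inf")
    while remaining and (max_features is None or len(selected) < max_features):
        score, feature = max((evaluate(selected + [f]), f) for f in remaining)
        if score <= best_score:
            break  # no remaining feature improves the score
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected, best_score

def toy_evaluate(subset):
    # Hypothetical stand-in for "train the classifier on these columns and
    # return its validation accuracy".
    gains = {"age": 0.10, "income": 0.15, "zip_code": -0.02, "noise": -0.01}
    score = 0.60 + sum(gains[f] for f in subset)
    if "age" in subset and "income" in subset:
        score += 0.05  # interaction: the pair is worth more than its parts
    return score

print(forward_selection(["age", "income", "zip_code", "noise"], toy_evaluate))
```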

Feature Engineering

Feature engineering involves transforming or combining existing features to create new ones that may provide additional value to a classification algorithm.

Examples of feature engineering techniques include transforming numerical data to categorical data or creating interaction features between existing variables.
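Both kinds of transformation can be sketched in a few lines. The field names and the age cut points are hypothetical.

```python
def age_band(age):
    """Binning: map a numeric age onto a categorical band (illustrative cut points)."""
    if age < 18:
        return "minor"
    if age < 65:
        return "adult"
    return "senior"

def engineer_features(record):
    """Return a copy of `record` with two derived features added."""
    out = dict(record)
    out["age_band"] = age_band(record["age"])  # numerical -> categorical
    # Interaction feature: product of two existing variables
    out["hours_x_rate"] = record["hours_per_week"] * record["hourly_rate"]
    return out

print(engineer_features({"age": 42, "hours_per_week": 40, "hourly_rate": 25.0}))
```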

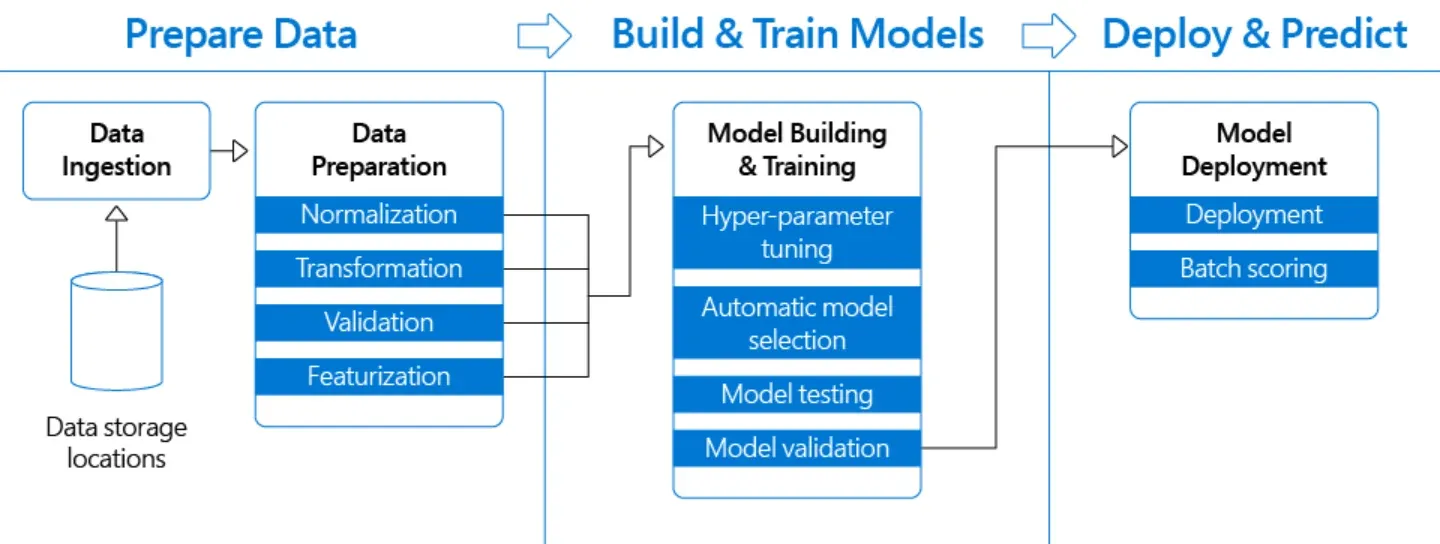

Hyperparameter Tuning

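Hyperparameters are settings of a classification algorithm that are chosen before training rather than learned from the data, such as the maximum depth of a decision tree or the regularization parameter C of an SVM. Tuning them can substantially improve a model's accuracy and its ability to generalize to new data.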

Grid Search

Grid Search is an exhaustive search technique that evaluates every combination of hyperparameter values in a predefined grid. It is guaranteed to find the best configuration among those in the grid, but the number of combinations multiplies with every hyperparameter added, so it can require significant computation time.
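A minimal grid-search sketch that uses `itertools.product` to enumerate every combination. The grid values and `toy_evaluate`, which stands in for a cross-validated score, are hypothetical. With two hyperparameters of three values each there are 9 evaluations; adding a third hyperparameter with three values would raise that to 27.

```python
import math
from itertools import product

def grid_search(param_grid, evaluate):
    """Score every combination of the listed values; return (best_score, best_params)."""
    names = list(param_grid)
    best = None
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

def toy_evaluate(params):
    # Hypothetical stand-in for a cross-validated accuracy; peaks at C=1, gamma=0.1.
    return (1.0 - 0.1 * abs(math.log10(params["C"]))
            - 0.1 * abs(math.log10(params["gamma"]) + 1))

param_grid = {"C": [0.1, 1.0, 10.0], "gamma": [0.01, 0.1, 1.0]}  # 3 x 3 = 9 runs
print(grid_search(param_grid, toy_evaluate))
```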

Random Search

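Unlike Grid Search, Random Search evaluates a fixed number of configurations sampled at random from the search space. It takes less computation time and often finds configurations about as good as those found by Grid Search, particularly when only a few of the hyperparameters strongly affect performance.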
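A random-search sketch that draws a fixed budget of `n_iter` configurations, sampling `C` and `gamma` log-uniformly over hypothetical ranges. The ranges, the sampling scheme, and `toy_evaluate` (a stand-in for a cross-validated score) are assumptions. The cost is set by `n_iter`, no matter how many hyperparameters are searched.

```python
import math
import random

def random_search(sample_params, evaluate, n_iter, seed=0):
    """Evaluate n_iter randomly sampled configurations; return (best_score, best_params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_iter):
        params = sample_params(rng)
        score = evaluate(params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

def sample_params(rng):
    # Log-uniform draws: C in [0.1, 10], gamma in [0.01, 1] (hypothetical ranges)
    return {"C": 10 ** rng.uniform(-1, 1), "gamma": 10 ** rng.uniform(-2, 0)}

def toy_evaluate(params):
    # Hypothetical stand-in for a cross-validated accuracy; peaks at C=1, gamma=0.1.
    return (1.0 - 0.1 * abs(math.log10(params["C"]))
            - 0.1 * abs(math.log10(params["gamma"]) + 1))

print(random_search(sample_params, toy_evaluate, n_iter=30))
```

Sampling on a log scale spreads the trials evenly across orders of magnitude, which suits parameters like `C` whose useful values can span several powers of ten.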

Bayesian Optimization

Bayesian Optimization fits a probabilistic surrogate model, such as a Gaussian process, to the scores of the configurations evaluated so far and uses it to decide which configuration to try next, minimizing the number of costly evaluations needed.

Frequently Asked Questions (FAQs)

What is a classification algorithm?

A classification algorithm is a type of machine learning technique that predicts the category or class of an object based on its features.

Why are there different types of classification algorithms?

Different classification algorithms have distinct strengths and weaknesses, making them suitable for different tasks or data types.

What are ensemble methods?

Ensemble methods combine predictions from multiple individual models to achieve better overall performance, often reducing the risk of overfitting and improving the accuracy of classification algorithms.

What are hyperparameters, and why do they need tuning?

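Hyperparameters are adjustable settings of a classification algorithm that are fixed before training rather than learned from the data. They need tuning because the values that work best depend on the dataset and task, so default settings do not necessarily give optimal performance.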

What is the difference between supervised and unsupervised learning?

Supervised learning requires labeled training data to make predictions, while unsupervised learning seeks to uncover hidden patterns or structures without relying on labeled data.