MXNet is an open-source deep learning framework that has gained significant traction in the artificial intelligence (AI) community. Developed under the Apache Software Foundation and supported by organizations including Amazon Web Services, Microsoft, and NVIDIA, MXNet is designed to be flexible, efficient, and scalable, making it a powerful tool for building and deploying deep learning models.

This popularity can be attributed to MXNet's ability to support a wide range of programming languages, including Python, R, C++, and Julia, as well as its seamless integration with cloud platforms and distributed computing environments.

As the demand for AI and deep learning solutions continues to grow across industries, understanding the essentials of MXNet becomes increasingly important for developers, researchers, and organizations seeking to leverage this powerful framework's full potential.

With its strong community support, extensive documentation, and versatile feature set, MXNet offers a comprehensive platform for building and deploying cutting-edge deep learning models.

Setting Up MXNet

Getting started with MXNet is a breeze. Let's dive in and set up this powerful deep learning framework on your machine.

System Requirements

Before we begin the installation, let's ensure your system meets the necessary requirements. MXNet is compatible with a wide range of platforms, including Windows, macOS, and Linux. To ensure smooth performance, it is recommended that you have a computer with at least 8GB of RAM and a modern CPU.

Installing MXNet

Once you've confirmed that your system meets the requirements, it's time to install MXNet. The installation process is straightforward, regardless of your operating system. Detailed installation instructions can be found on the official MXNet website, and pre-compiled binary packages are available for various platforms.

Choosing the Right Tools

To get the most out of MXNet, it's essential to choose the right set of tools. An integrated development environment (IDE) or notebook environment can significantly enhance your productivity when working with MXNet. Popular options like Jupyter Notebook, PyCharm, and Visual Studio Code provide excellent support for deep learning projects.

In addition to the IDE, you'll also need the right libraries. MXNet seamlessly integrates with other Python libraries such as NumPy, SciPy, and Matplotlib. These libraries provide essential functionalities for data manipulation, scientific computing, and visualization.

Verifying Your Installation

Before we plunge into the core concepts of deep learning, it's crucial to verify that your MXNet installation is working correctly. This can be done by running a simple test script that ensures all the necessary dependencies are properly configured.
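A test script of this kind can be very small. The sketch below is one possible version, not an official MXNet check: the helper name `check_mxnet` is made up for illustration. It tries to import MXNet, runs a tiny array computation, and reports the result instead of crashing if something is missing.

```python
import importlib


def check_mxnet():
    """Return the installed MXNet version string, or None if the check fails.

    `check_mxnet` is a hypothetical helper name used only for this sketch.
    """
    try:
        mx = importlib.import_module("mxnet")
        # A tiny NDArray computation confirms the backend actually runs:
        # a 2x3 array of ones, doubled, sums to 12.
        total = (mx.nd.ones((2, 3)) * 2).sum().asscalar()
    except Exception as exc:  # missing package or broken dependency
        print(f"MXNet check failed: {exc!r}")
        return None
    print(f"MXNet {mx.__version__} is working, test sum = {total}")
    return mx.__version__


version = check_mxnet()
```

If the script prints a version number, the installation is usable. If it prints a failure message, the error text usually points to the dependency that needs attention.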

Core Concepts of Deep Learning

Now that MXNet is up and running, let's explore the core concepts of deep learning. Understanding these concepts will lay a strong foundation for your journey into the world of artificial intelligence.

Artificial Neural Networks (ANNs)

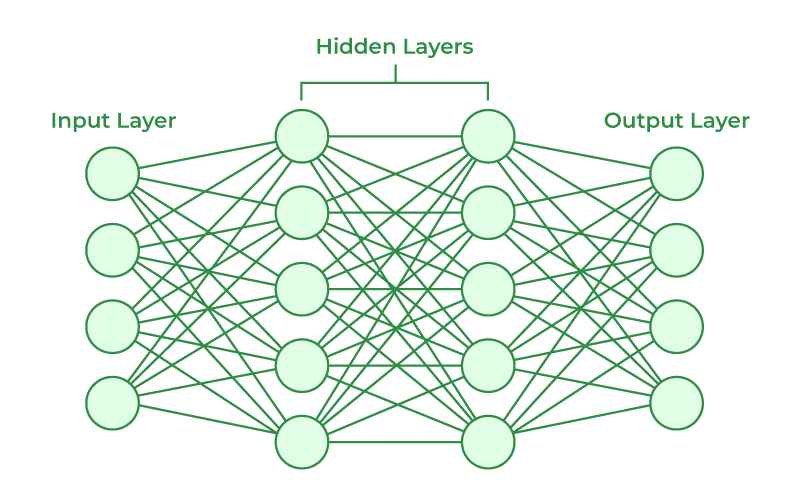

At the heart of deep learning lies the concept of artificial neural networks (ANNs). These networks are inspired by the structure and function of the human brain. ANNs consist of interconnected nodes called neurons, which simulate the behavior of biological neurons.

Neurons and Activation Functions

Neurons in an ANN receive inputs, perform computations, and produce outputs. Activation functions play a crucial role in determining a neuron's output. Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and tanh.
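To make this concrete, here is a minimal sketch in plain Python (no MXNet required) of the three activation functions named above and a single neuron that applies one of them to a weighted sum of its inputs. The function names and the example weights are illustrative choices, not part of any library.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Pass positive values through and clamp negatives to zero."""
    return max(0.0, x)


def tanh(x):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(x)


def neuron(inputs, weights, bias, activation):
    """Compute the weighted sum of the inputs plus a bias, then activate it."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Compare the three activations on a few sample inputs.
for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), relu(x), tanh(x))

# One neuron with two inputs and a sigmoid activation.
print(neuron([1.0, 2.0], [0.5, 0.25], -1.0, sigmoid))
```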

Gradient Descent Optimization

Gradient descent is a powerful optimization algorithm widely used in training deep neural networks. It iteratively adjusts the network's parameters to minimize a loss function, which quantifies the difference between predicted and actual values. Backpropagation, a technique closely tied to gradient descent, efficiently calculates the gradients needed for updates.
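The idea is easier to see on a toy problem. The sketch below minimizes the one-variable function f(x) = (x - 3)^2 rather than a real network loss. The function, starting point, and learning rate are all assumptions chosen for illustration.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to approach a minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x


# Toy loss f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
minimum = gradient_descent(lambda x: 2.0 * (x - 3.0), start=0.0)
print(minimum)
```

A real network follows the same loop, except that `x` becomes millions of parameters and the gradient comes from backpropagation.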

Loss Functions and Backpropagation

Loss functions measure the discrepancy between predicted and actual values. They guide the optimization process by providing feedback on the network's performance. Backpropagation applies the chain rule recursively, layer by layer, to compute the gradients of the loss function with respect to each parameter in the network.
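As a rough illustration, the sketch below works through backpropagation by hand for the smallest possible network: one sigmoid neuron with a squared-error loss. The setup and the numbers are assumptions made for the example. The hand-derived gradients are then compared against a numerical estimate as a sanity check.

```python
import math


def forward(w, b, x):
    """One sigmoid neuron: a = sigmoid(w * x + b)."""
    z = w * x + b
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, y):
    """Squared error between the neuron's output and the target y."""
    return (forward(w, b, x) - y) ** 2


def backward(w, b, x, y):
    """Apply the chain rule from the loss back to w and b."""
    a = forward(w, b, x)
    dloss_da = 2.0 * (a - y)       # derivative of the squared error
    da_dz = a * (1.0 - a)          # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz
    return dloss_dz * x, dloss_dz  # (dL/dw, dL/db)


w, b, x, y = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = backward(w, b, x, y)

# Numerical check with central differences.
eps = 1e-6
numeric_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
numeric_b = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
print(grad_w, numeric_w)
print(grad_b, numeric_b)
```

Frameworks such as MXNet automate the `backward` step, so you normally write only the forward computation.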

Training, Validation, and Testing

To train a deep learning model, the data is divided into three sets: the training set, the validation set, and the testing set. The training set is used to optimize the model's parameters, while the validation set is used to monitor performance during training. The testing set is reserved for the final evaluation of the model's generalization abilities.
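A simple way to produce the three sets is to shuffle the data once and slice it. The sketch below assumes an 80/10/10 split and a fixed random seed. Both are common but arbitrary choices, and `split_dataset` is a name made up for this example.

```python
import random


def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle the samples and cut them into train, validation, and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```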

By fully grasping these core concepts, you'll be well-prepared to tackle the exciting challenges in your deep learning journey.

Building Your First Deep Learning Model with MXNet

Now that you have a solid understanding of MXNet's setup and the core concepts of deep learning, it's time to build your first deep learning model. Exciting, isn't it? Let's get started!

Choosing a Dataset (e.g., MNIST)

To begin, we need a dataset to train our model. A popular choice for beginners is the MNIST dataset, which consists of 70,000 grayscale images of handwritten digits (60,000 for training and 10,000 for testing), each 28x28 pixels. MNIST provides a good starting point for understanding image classification tasks.

Data Preprocessing (Loading, Cleaning, Normalization)

Some preprocessing is required before we can feed the data into our model. First, we need to load the dataset into our environment, ensuring it is in a suitable format for MXNet. This typically involves converting the images and labels into numeric arrays.

Once loaded, it's important to clean the data by removing any irrelevant or corrupted samples. Data normalization is another crucial step, as it helps bring all features into a similar range, preventing certain features from dominating others during training.
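Two common normalization steps can be sketched in a few lines of plain Python. The first scales 8-bit pixel values into the range [0, 1], which suits image data like MNIST. The second standardizes a feature to zero mean and unit variance. The function names are illustrative, and real pipelines would typically do this with NumPy or MXNet arrays rather than lists.

```python
def scale_pixels(image):
    """Map 8-bit pixel values (0-255) to floats in the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def standardize(values):
    """Shift and scale values to zero mean and unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = variance ** 0.5 or 1.0  # avoid dividing by zero for constant features
    return [(v - mean) / std for v in values]


tiny_image = [[0, 255], [51, 102]]
print(scale_pixels(tiny_image))
print(standardize([2.0, 4.0, 6.0]))
```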

Defining Your Model Architecture (Layers, Activation Functions)

Now comes the fun part - defining the architecture of your model. In MXNet, this is done by creating a network of layers. The layers determine how information flows through the model and extract relevant features.

Choose the appropriate number and type of layers for your specific task. Experiment with different activation functions to capture complex relationships within the data. Common choices include ReLU, sigmoid, and tanh.
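As one possible starting point, the sketch below builds a small fully connected network with MXNet's Gluon API. The layer sizes (128 hidden units, 10 outputs) and the helper name `build_mlp` are assumptions made for this example, sized for flattened 28x28 MNIST images. The import is guarded so the script fails gracefully if MXNet is not available.

```python
try:
    import mxnet as mx
    from mxnet.gluon import nn
except Exception:  # MXNet is missing or failed to import
    mx = None


def build_mlp(hidden_units=128, num_classes=10):
    """Return an uninitialized two-layer Gluon network (hypothetical helper)."""
    if mx is None:
        raise RuntimeError("MXNet is not available in this environment")
    net = nn.Sequential()
    net.add(
        nn.Dense(hidden_units, activation="relu"),  # hidden layer
        nn.Dense(num_classes),                      # one raw score per digit class
    )
    return net


if mx is not None:
    net = build_mlp()
    net.initialize(mx.init.Xavier())
    # A batch of 4 fake flattened images, just to check the output shape.
    batch = mx.nd.random.uniform(shape=(4, 784))
    print(net(batch).shape)
```

Swapping `activation="relu"` for `"sigmoid"` or `"tanh"` is an easy way to experiment with the other activation functions mentioned above.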

Setting up the Training Process (Loss Function, Optimizer, Learning Rate)

To train our model, we must configure the training process. This involves selecting a suitable loss function that measures the discrepancy between predicted and actual labels. For classification tasks like MNIST, we often use cross-entropy loss.
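For intuition, here is a minimal plain-Python sketch of softmax cross-entropy for a single example. It is not MXNet's implementation: it converts raw scores into probabilities and then takes the negative log of the probability assigned to the true class. The example scores are made up.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(logits)
    exps = [math.exp(v - largest) for v in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, true_class):
    """Negative log-probability of the correct class."""
    return -math.log(softmax(logits)[true_class])


scores = [2.0, 0.5, -1.0]
print(cross_entropy(scores, 0))  # low loss: the true class has the top score
print(cross_entropy(scores, 2))  # high loss: the true class has the lowest score
```

The loss is small when the model gives the correct class a high score and grows quickly when it is confidently wrong.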

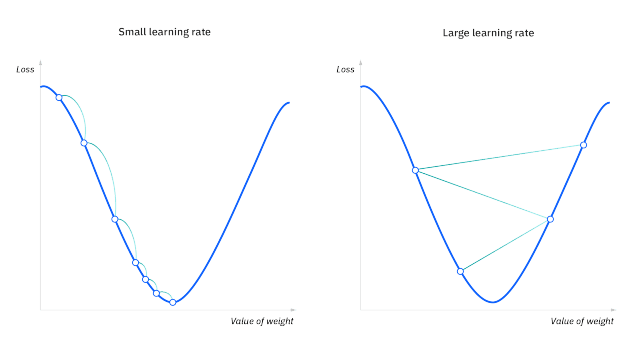

In addition, we need to choose an optimizer that updates the model's parameters based on the calculated gradients. Popular optimizers include Stochastic Gradient Descent (SGD) and Adam. The learning rate controls the step size of parameter updates and plays a crucial role in convergence.
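The effect of the learning rate is easy to demonstrate with a plain SGD update on a toy loss. The sketch below uses the sum of squared parameters as the loss, which is an assumption for illustration only, and compares a moderate learning rate with one that is too large.

```python
def sgd_step(params, grads, learning_rate):
    """Apply one plain SGD update: param <- param - learning_rate * grad."""
    return [p - learning_rate * g for p, g in zip(params, grads)]


def run(learning_rate, steps=20):
    """Minimize a toy loss (sum of squared parameters) for a few steps."""
    params = [1.0, -1.0]
    for _ in range(steps):
        grads = [2.0 * p for p in params]  # gradient of p^2 is 2p
        params = sgd_step(params, grads, learning_rate)
    return params


print(run(0.1))  # shrinks toward [0, 0]
print(run(1.1))  # grows in magnitude: each step overshoots the minimum
```

Optimizers such as Adam adapt the step size per parameter, but the same trade-off applies: too small and training is slow, too large and it diverges.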

Training and Monitoring the Model (Loss, Accuracy)

With our data, model architecture, and training process set up, it's time to train the model. During training, the model learns to minimize the chosen loss function by adjusting its parameters iteratively. As the training progresses, keep an eye on the loss value. It should gradually decrease, indicating that the model is learning.

Another metric to monitor is accuracy. It measures how well the model predicts the correct labels. Compute accuracy on a separate validation set to assess the model's performance and identify potential overfitting or underfitting.

Evaluating and Interpreting the Results

Once the model is fully trained, it is time to evaluate its performance on unseen data. Use the testing set, set aside during preprocessing, to assess how well your model generalizes to new examples. Calculate metrics such as accuracy, precision, recall, or F1 score to gain insights into the model's performance.
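These metrics are simple to compute from the true and predicted labels. The sketch below handles the binary case in plain Python. For a multi-class problem like MNIST, one common approach is to compute precision and recall per class by treating each digit in turn as the positive class. The labels shown are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```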

Interpreting the results is crucial for understanding your model's strengths and weaknesses. Visualize misclassified examples or apply explanation techniques such as feature importance to see which kinds of inputs the model struggles with.

Advanced Topics

As you progress in your deep learning journey with MXNet, it's essential to explore advanced topics that can enhance the performance and deployment of your models. Let's dive into some of these advanced concepts and techniques.

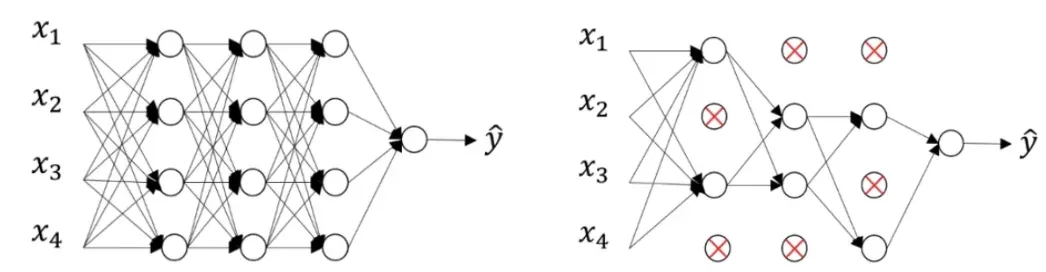

Regularization Techniques (Dropout, L1/L2)

Regularization techniques play a vital role in preventing overfitting and improving the generalization of your models. Overfitting occurs when a model becomes too specialized in the training data and performs poorly on unseen data. MXNet provides various regularization techniques to combat overfitting.

Dropout randomly sets a fraction of a layer's activations to zero during training, so the network cannot rely too heavily on any single neuron. L1 and L2 regularization are other effective methods. L1 regularization adds a penalty term to the loss function based on the absolute value of the model's parameters, encouraging sparsity and feature selection. L2 regularization adds a penalty term based on the squared magnitude of the parameters, which discourages large parameter values and promotes more balanced weights.
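The two penalty terms can be written out directly. The sketch below is a plain-Python illustration with made-up weights and penalty strengths. Note that some libraries define the L2 term with an extra factor of one half, so the exact scaling is a convention rather than a fixed rule.

```python
def l1_penalty(weights, strength):
    """Sum of absolute weight values, scaled by the penalty strength."""
    return strength * sum(abs(w) for w in weights)


def l2_penalty(weights, strength):
    """Sum of squared weight values, scaled by the penalty strength."""
    return strength * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Add both penalties to the loss measured on the data."""
    return data_loss + l1_penalty(weights, l1) + l2_penalty(weights, l2)


weights = [0.5, -2.0, 0.0]
print(l1_penalty(weights, 0.5))
print(l2_penalty(weights, 0.5))
print(regularized_loss(1.0, weights, l1=0.5, l2=0.5))
```

The large weight (-2.0) dominates the L2 penalty because it is squared, which is why L2 pushes hardest against large parameter values.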

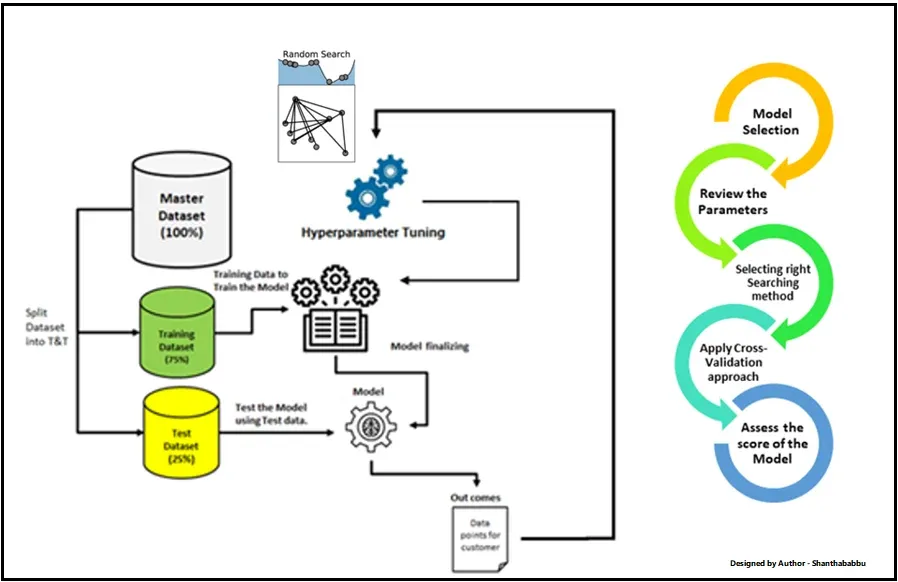

Hyperparameter Tuning

Hyperparameters are parameters that are not learned directly from the data but define the model's behavior and performance. Examples of hyperparameters include the learning rate, batch size, number of layers, and number of neurons per layer. Tuning these hyperparameters is crucial for obtaining optimal model performance.

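Each candidate combination of hyperparameters is assessed with an evaluation metric such as validation accuracy or validation loss, and the best-scoring combination is kept. Automated tools such as AutoGluon, an AutoML toolkit that grew out of the MXNet ecosystem, can also be used to automate the hyperparameter tuning process.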
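One straightforward way to try combinations is a grid search, sketched below in plain Python. Grid search is just one common strategy, chosen here for illustration. The scoring function `toy_validation_accuracy` is a hypothetical stand-in: in practice it would train a model with the given settings and return its accuracy on the validation set.

```python
import itertools


def grid_search(score_fn, grid):
    """Score every combination in the grid and return the best one."""
    names = list(grid)
    best_params, best_score = None, None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if best_score is None or score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_accuracy(learning_rate, batch_size):
    """Hypothetical stand-in for training a model and scoring it."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(batch_size - 64) / 1000


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [32, 64, 128]}
best_params, best_score = grid_search(toy_validation_accuracy, grid)
print(best_params, best_score)
```

Grid search is easy to reason about but grows quickly with the number of hyperparameters, which is one reason automated tuning tools exist.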

Model Deployment and Inference

Once you've trained your model, it's time to deploy it and make predictions on new data. MXNet offers various options for model deployment and inference, allowing you to leverage your trained models in real-world applications.

For larger-scale deployments, you may consider using MXNet's model server or deploying your model on cloud platforms like AWS or Azure. These platforms provide scalable and efficient solutions for serving your models and handling high volumes of inference requests.

Conclusion

In conclusion, MXNet has established itself as a robust and versatile deep learning framework, offering comprehensive tools and features for building and deploying AI models across various domains. Its ability to support multiple programming languages, seamless integration with cloud platforms, and efficient distributed computing capabilities make it a compelling choice for developers and organizations.

A recent report by MarketsandMarkets estimates that the global deep learning market size will grow from $10.2 billion in 2022 to $94.4 billion by 2030, at a Compound Annual Growth Rate (CAGR) of 32.1% during the forecast period. The increasing adoption of deep learning across healthcare, finance, retail, and manufacturing drives this growth.

Furthermore, a survey conducted by the Eclipse Foundation in 2022 revealed that MXNet is among the top 5 most widely used deep learning frameworks in the enterprise sector. This highlights the framework's appeal and acceptance among organizations prioritizing scalability, performance, and ease of deployment.

As the field of AI and deep learning continues to evolve, MXNet's flexibility, efficiency, and strong community support position it as a powerful tool for researchers, developers, and organizations seeking to leverage the full potential of deep learning technologies.

Frequently Asked Questions (FAQs)

Why choose MXNet for deep learning?

MXNet offers ease of use, supports multiple programming languages, and runs efficiently on various hardware platforms.

What are the prerequisites for learning MXNet?

A basic understanding of Python programming and familiarity with linear algebra concepts are recommended.

What can I build with MXNet?

MXNet can be used for various AI tasks like image recognition, natural language processing, and recommendation systems.

How does MXNet compare to other deep learning frameworks?

MXNet is similar to popular frameworks like TensorFlow and PyTorch but offers advantages in flexibility and customization.

Is MXNet difficult to learn?

While deep learning has a learning curve, MXNet's clear documentation and helpful community make it approachable.

What are some real-world applications of MXNet?

MXNet is used in various applications like self-driving cars, medical image analysis, and fraud detection.