What is Preprocessing in Data Mining?

Data preprocessing is an absolutely essential step in the data mining process. It revolves around transforming raw data into an understandable format, ensuring that quality insights can be extracted from the data later on. Real-world data tends to be incomplete, noisy, and inconsistent — data preprocessing helps to address these issues.

Why is Preprocessing necessary in Data Mining?

In this section, we'll delve into reasons why preprocessing is considered a crucial phase in the data mining process.

Handling Missing Values

Data in the real-world is often incomplete. Preprocessing helps to manage these missing values, either by inferring them based on data trends or ignoring them if necessary.

Eliminating Noise

Real-world data is frequently noisy and inconsistent. Preprocessing aids in removing noise from the data and making it more meaningful for analysis.

Facilitating Data Transformation

For many data mining algorithms, certain data formats are required. Preprocessing transforms the data into these required formats, enabling these algorithms to function correctly.

Reducing Dimensionality

Datasets often come with many dimensions, some of which may not be necessary. Preprocessing conducts dimensionality reduction to improve computational efficiency.

Enhancing Scalability

Huge amounts of data can impede data mining. Preprocessing can reduce data size, thus providing scalability and improving the efficiency of data mining algorithms.

Ensuring Data Cleaning

Data cleaning, a part of preprocessing, deals with the identification and removal of errors and inconsistencies in the data.

Assisting Normalization

Normalization modifies scales of the numeric attributes to fit within a small specified range. Preprocessing handles this operation, allowing for better comparison and understanding of the data.

Performing Data Integration

Data from multiple sources needs to be consolidated. Preprocessing performs this data integration, allowing for the cross-referencing of data from different databases.

Improving Data Quality

All these factors contribute to improving the overall data quality, making the data more suitable for data mining and thus increasing the accuracy of the process.

Assuring Better Analysis Results

Ultimately, preprocessing in data mining ensures that the resulting analysis is accurate and meaningful. It enhances the efficiency and effectiveness of the data mining process, leading to reliable results.

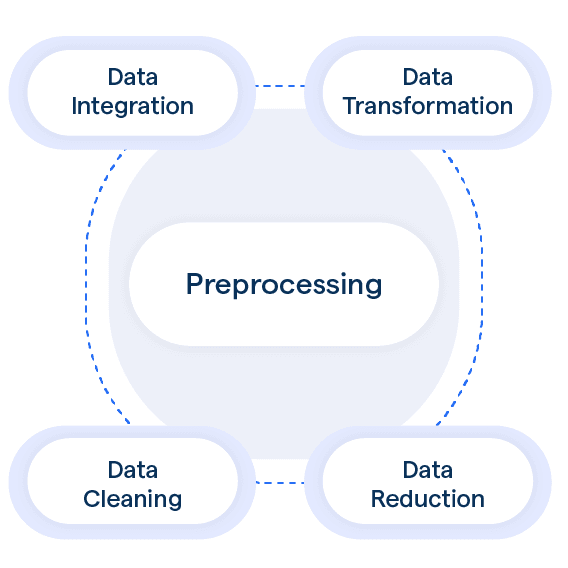

How to perform Preprocessing in Data Mining?

In this section, we’ll discuss the fundamental steps involved in preprocessing data in preparation for efficient data mining.

Data Cleaning

The initial stage of preprocessing consists of data cleaning, where inconsistencies, errors, and discrepancies in the data are identified and corrected. Outliers, irrelevant data, and missing values are taken care of at this stage.

Data Reduction

Datasets can often be extensive, with many dimensions, or attribute types. But not all these dimensions are necessarily useful for data mining. Data reduction strategies reduce the size of data by removing irrelevant attributes or aggregating data at higher levels.

Data Transformation

This step involves converting raw data into a specified format, to meet the assumptions or requirements of the data mining algorithms. The data could be scaled, normalized, or categorical values might be converted to numeric and so on.

Data Discretization

Discretization is an essential step when the algorithm does not support continuous values. It involves dividing the range of a continuous attribute into intervals and substituting the actual attribute value with the interval.

Data Integration

Data commonly comes from a variety of sources. Data integration is the process of consolidating disparate data sets into a cohesive one. It includes identifying and resolving data conflicts.

Data Normalization

The final step, normalization, modulates the values of numeric columns in the dataset to a standard scale. This enables comparison of different datasets in a meaningful and informative way.

By following these steps, the data preprocessing phase ensures the data is suitable and optimized for mining, leading to accurate and efficient analyses.

Different Techniques used in Preprocessing

In this section, we'll explore various techniques used for preprocessing.

Tokenization

Tokenization involves breaking text into individual tokens, usually words, sentences, or phrases. This step is necessary for almost all text-based preprocessing to facilitate further analysis.

Data Cleaning

Data cleaning involves detecting and correcting any inconsistencies, errors, or inaccuracies within the dataset. It may include handling missing, duplicate, or incorrect values, ensuring optimal data quality.

Stop Words Removal

Stop words removal entails eliminating common words, which usually hold little semantic value like 'the' or 'and.' Removing them from text data allows for a more focused analysis on relevant keywords and phrases.

Stemming and Lemmatization

Stemming and lemmatization are techniques to reduce words to their basic form. Stemming involves chopping off prefixes or suffixes while lemmatization considers the context to convert words to their base form. Both techniques aid in text analysis by standardizing terms.

Feature Scaling

Feature scaling centralizes and scales numerical features in your dataset to ensure that they contribute equally during model training. Common techniques include normalization (scaling values between 0 and 1) and standardization (scaling to have mean 0 and standard deviation 1).

Encoding Categorical Features

Encoding categorical features involves converting non-numeric data into a numerical format. Models often require numeric inputs, and encoding techniques like one-hot encoding or label encoding help transform categorical features into a compatible state for analysis or training.

Challenges and Limitations of Preprocessing in Data Mining

In this section, we'll discuss the potential challenges and limitations associated with data preprocessing in the data mining process.

Data Quality Issues

One of the primary challenges in preprocessing is handling data quality issues, such as missing values, noise, and inconsistencies. Incomplete and inconsistent data can lead to inaccurate or unreliable insights.

Feature Selection and Dimensionality Reduction

The process of identifying the most relevant features for analysis can be challenging, as it requires domain knowledge, understanding the importance of each feature, and dealing with high-dimensional data. Dimensionality reduction techniques, like Principal Component Analysis (PCA), can help, but they may not always preserve all necessary information.

Handling Imbalanced Data

Data preprocessing often involves dealing with imbalanced datasets, where some classes have significantly fewer examples than others. This imbalance can bias classifiers towards the majority class and lead to poor performance for the minority class.

Data Transformation and Normalization

Transforming data into a suitable format for analysis can be a complex task. Data normalization, which scales variables to a common range or standardizes them, is crucial to avoid biased results. However, selecting the appropriate normalization technique for a specific dataset is a challenge.

Privacy and Security Concerns

While preprocessing data, privacy and security concerns may arise, particularly when dealing with sensitive information. Proper anonymization and ensuring compliance with privacy regulations are necessary to maintain data confidentiality and protect user privacy.

Computational Complexity

Some preprocessing tasks, like feature selection or handling large datasets, can be computationally intensive, which may limit the efficiency and scalability of the data mining process. The choice of algorithms and optimization techniques plays a significant role in overcoming such challenges.

Frequently Asked Questions (FAQs)

What is preprocessing in data mining?

Preprocessing is the crucial step of cleaning and transforming raw data into a suitable format for analysis. It involves tasks like removing duplicates, handling missing values, and normalizing data.

Why is data preprocessing important?

Data preprocessing ensures the quality and reliability of the data used for analysis. It helps in removing noise, reducing data redundancy, handling missing values, and preparing the data for accurate and efficient analysis.

What are the different techniques used in data preprocessing?

Some common techniques in data preprocessing include data cleaning, data integration, data transformation, and data reduction. Each technique serves a specific purpose in improving the quality of data for analysis.

What is data cleaning in preprocessing?

Data cleaning involves removing or correcting inconsistencies, errors, and outliers from the raw data. It includes tasks like handling missing values, removing duplicates, and correcting format inconsistencies.

What is data transformation in preprocessing?

Data transformation involves converting raw data into a more suitable format for analysis. It can include tasks such as normalization, discretization, and creating new derived features from existing ones.