What is the Vanishing Gradient Problem?

Imagine you're trying to teach a deep neural network and it just isn't grasping the lessons. The culprit could be the vanishing gradient problem.

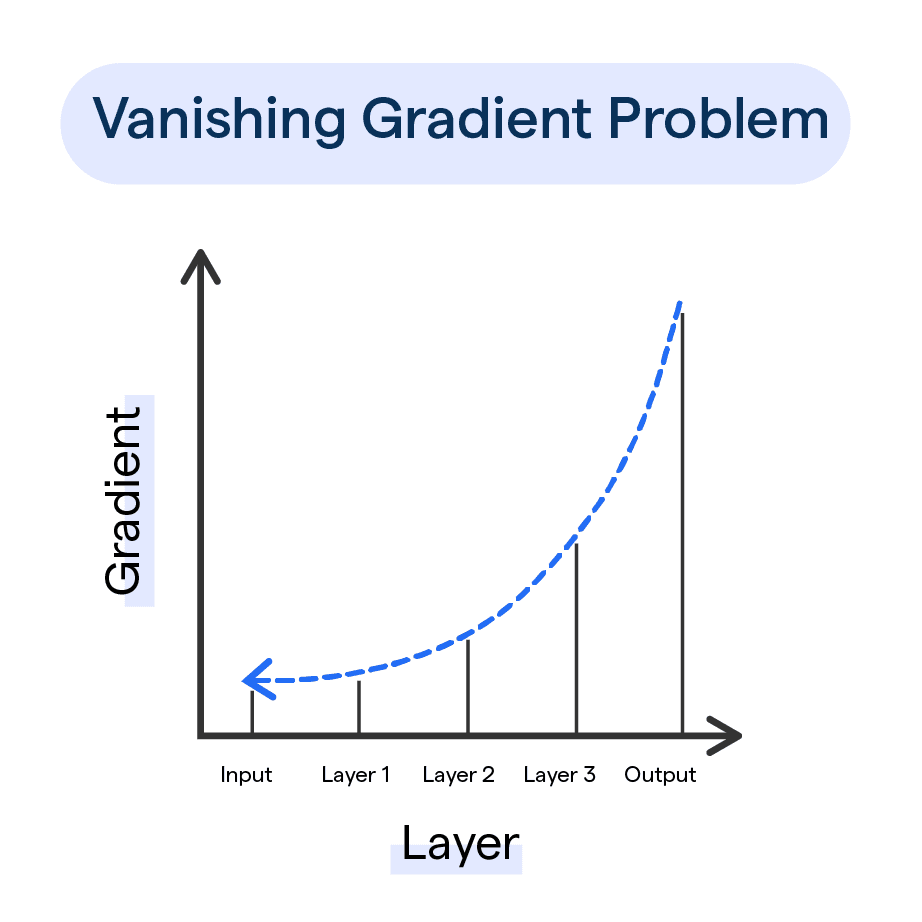

In the simplest terms, when training neural networks, especially deep ones, the gradients of the loss function can shrink exponentially as they backpropagate through the layers.

This phenomenon is known as the vanishing gradient problem.

Significance of Gradient Descent in Neural Networks

Much like we learn from our mistakes, neural networks do the same through a process called gradient descent.

It's an optimization method that adjusts the weights of a neural network, basically the teacher in the room.

Gradient descent iteratively adjusts the weights so as to minimize the difference between the network's output and the expected output.
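As a minimal sketch of that update rule, the snippet below repeatedly moves a single weight against its gradient. The quadratic loss, the learning rate `lr`, and the function name `gradient_descent` are assumptions chosen only for illustration.

```python
def gradient_descent(grad_fn, w0, lr=0.1, steps=100):
    """Repeatedly step a weight against the gradient of the loss."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad_fn(w)  # core update: w <- w - lr * dLoss/dw
    return w

# Illustrative loss(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)  # ends up very close to the minimizer w = 3
```

In a real network the same rule is applied to every weight at once, with the gradients supplied by backpropagation.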

Vanishing Gradient: A Stumbling Block in Deep Learning

The vanishing gradient problem is a thorn in the side of deep learning models.

Why, you ask?

Well, as gradients vanish, the weights in the earlier layers of the network receive much smaller updates than those in the later layers.

This asymmetry in learning causes the network to underperform since it can't properly fine-tune all of its weights, and that's a big problem for deep learning models.

What are Activation Functions?

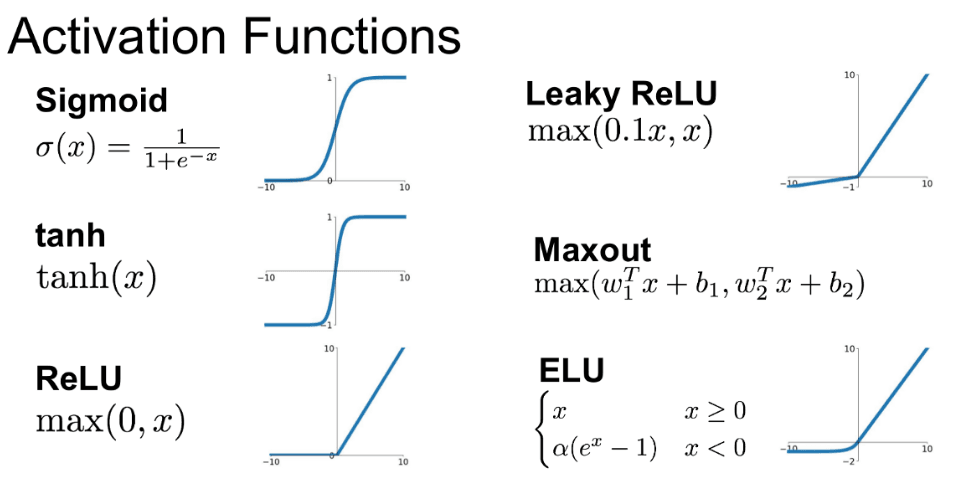

Activation functions are an essential component of artificial neural networks. They are mathematical equations that determine the output of a neural network node given an input or set of inputs.

The main purpose of activation functions is to introduce non-linearity into the neural network. Most data that neural networks model have some non-linear characteristics, so activation functions enable the network to learn these complex relationships.

A common example is the sigmoid activation function. It takes a real-valued input, squashes it into the range between 0 and 1, and outputs this transformed value.

For example, if the input is 50, the sigmoid function transforms this into an output of ~1. The S-shaped curve adds non-linearity to the network. Other activation functions like ReLU also add non-linear behaviors in different ways.
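The sigmoid and its derivative can be sketched in a few lines. The function names are illustrative, and the two-branch form is just one common way to avoid overflow for very negative inputs.

```python
import math

def sigmoid(x):
    """Squash a real number into the interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # safe for very negative x, where exp(-x) would overflow
    return e / (1.0 + e)

def sigmoid_derivative(x):
    """Slope of the sigmoid: s * (1 - s), which peaks at 0.25 when x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-50.0, 0.0, 50.0):
    print(x, sigmoid(x), sigmoid_derivative(x))
```

Note how the derivative is largest at an input of 0 and falls toward zero for large positive or negative inputs. That flat region is what later sections call saturation.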

Without activation functions, neural networks would be restricted to modeling linear relationships, limiting their applicability. The non-linear transformations enable them to learn and approximate almost any function given sufficient capacity and data.
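To see why, consider two stacked layers with no activation between them. The symbols $W_1, W_2$ (weight matrices) and $b_1, b_2$ (biases) are notation introduced here for illustration:

$$
W_2 (W_1 x + b_1) + b_2 = (W_2 W_1)\,x + (W_2 b_1 + b_2)
$$

The right-hand side is again a single linear layer, so adding more purely linear layers never adds modeling power.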

Bounded activation functions such as sigmoid and tanh also keep a node's output within a fixed range, so that inputs flowing into subsequent layers stay at a scale that supports effective learning across the network.

Exploding Gradient Problem

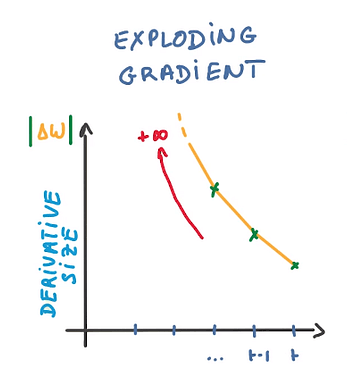

The exploding gradient problem refers to the issue where large error gradients accumulate and result in very large updates to neural network weights during training. This causes the weights to "explode" and take on extremely large values.

This problem typically occurs in deep neural networks that have many layers. During backpropagation, the gradients are multiplied over each layer, which can lead to an exponential growth in the gradients as they get passed back through the network.

Exploding gradients make the model unstable and unable to converge on a solution. They can push neurons into saturation, make the loss oscillate or diverge, and overflow into NaN values.

Several techniques are used to mitigate exploding gradients:

- Gradient clipping - Thresholding gradients to a maximum value

- Weight regularization - Adding a penalty on large weights (for example L1 or L2) to the loss function

- Careful initialization - Starting with appropriately scaled weights (for example Xavier/Glorot or He initialization)

- Batch normalization - Normalizing layer inputs

- Adaptive optimizers - Using Adam or RMSprop instead of basic SGD

By identifying and preventing exploding gradients, deep networks can be trained more stably and achieve better performance.
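Of the techniques listed above, gradient clipping is the simplest to sketch. The version below rescales a flat list of gradients so that their overall L2 norm does not exceed a threshold. The helper name `clip_by_global_norm` and the threshold value are assumptions for illustration; deep learning frameworks ship their own equivalents.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already small enough: leave unchanged
    scale = max_norm / total_norm
    return [g * scale for g in grads]  # same direction, shorter length

clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(clipped)  # norm 5 is scaled down to norm 1
```

Clipping by norm keeps the direction of the update and only shortens it, which is why it is often preferred over clipping each value independently.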

How Are Gradients Calculated in Neural Networks?

In this section, let's decipher how gradients are calculated in neural networks, diving in from the forward pass all the way to the backpropagation step.

Step 1

Forward Propagation

First off, the neural network takes a journey forward, from the input to the output layer.

The input data passes through each layer of the network, where each node calculates the weighted sum of its inputs, applies a chosen activation function, and forwards the output to the next layer.

This process, known as forward propagation, culminates in the network producing its initial prediction.

Step 2

Calculating the Loss

Next up is measuring how 'off' the network's prediction was from the actual value. This is where the loss function comes in: it quantifies the difference between the network's prediction and the true value.

Step 3

Backward Propagation

Once the network's prediction error is known, it's time to teach it where it went wrong.

Backward propagation, or backprop, kicks in. It's the process of going back through the layers of the network to calculate the gradient of the loss function with respect to each weight and bias. The chain rule of calculus is the star of this step!

Step 4

Update Weights and Biases

In the final step, the weights and biases across the network are adjusted using the gradients just calculated. Gradient descent, an optimization algorithm, updates the weights to minimize the loss.

Step 5

Rinse and Repeat

The entire process of forward pass, loss calculation, backpropagation, and weight update gets repeated over multiple iterations, incrementally refining the model in the pursuit of accurate predictions.
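The five steps above can be sketched end to end on a deliberately tiny network. Everything specific here is an assumption made for illustration: one hidden sigmoid unit, a linear output, a squared-error loss, four hand-picked data points, and the starting weights and learning rate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=2000, lr=0.1):
    # Hypothetical network: x -> h = sigmoid(w1*x + b1) -> y = w2*h + b2
    w1, b1, w2, b2 = 0.5, 0.0, 0.5, 0.0
    n = len(data)
    losses = []
    for _ in range(epochs):  # Step 5: repeat the whole cycle
        g_w1 = g_b1 = g_w2 = g_b2 = 0.0
        total_loss = 0.0
        for x, target in data:
            # Step 1: forward propagation
            h = sigmoid(w1 * x + b1)
            y = w2 * h + b2
            # Step 2: squared-error loss for this example
            error = y - target
            total_loss += error ** 2
            # Step 3: backward propagation via the chain rule
            d_y = 2.0 * error                # dLoss/dy
            g_w2 += d_y * h                  # dLoss/dw2
            g_b2 += d_y                      # dLoss/db2
            d_z = d_y * w2 * h * (1.0 - h)   # back through the sigmoid
            g_w1 += d_z * x                  # dLoss/dw1
            g_b1 += d_z                      # dLoss/db1
        # Step 4: gradient descent update using the mean gradient
        w1 -= lr * g_w1 / n
        b1 -= lr * g_b1 / n
        w2 -= lr * g_w2 / n
        b2 -= lr * g_b2 / n
        losses.append(total_loss / n)  # loss measured before this update
    return (w1, b1, w2, b2), losses

data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
params, losses = train(data)
print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

Frameworks automate step 3 with automatic differentiation, but the structure of the loop stays the same.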

Signs of the Vanishing Gradient Problem

To determine if your model is suffering from the Vanishing Gradient Problem, keep an eye out for the following signs:

- Parameters of higher layers change significantly, while parameters of lower layers barely change (or don't change at all).

- Gradients (and, in extreme cases, weights) in the early layers may shrink to 0 during training.

- The training process progresses at an extremely slow pace, stagnating after a few iterations.
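One practical way to check for the first sign is to compare gradient magnitudes layer by layer. The sketch below does this for a toy chain of ten scalar sigmoid layers. The chain itself, the weights of 1.0, and the function name `layer_gradients` are assumptions for illustration; in a real model you would log per-layer gradient norms from your framework instead.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_gradients(weights, x):
    """Return |d output / d w_l| for each layer of a scalar sigmoid chain."""
    # Forward pass: keep every activation for use in the backward pass.
    activations = [x]
    for w in weights:
        activations.append(sigmoid(w * activations[-1]))
    # Backward pass: walk from the last layer to the first.
    grads = [0.0] * len(weights)
    upstream = 1.0  # d output / d (last activation)
    for l in reversed(range(len(weights))):
        a_out = activations[l + 1]
        local = a_out * (1.0 - a_out)  # sigmoid derivative at this layer
        grads[l] = abs(upstream * local * activations[l])
        upstream = upstream * local * weights[l]  # pass gradient to layer below
    return grads

grads = layer_gradients([1.0] * 10, x=0.5)
for i, g in enumerate(grads, start=1):
    print(f"layer {i:2d}: |grad| = {g:.3e}")
```

If the printed magnitudes fall by orders of magnitude toward layer 1, the early layers are effectively not learning.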

Causes of the Vanishing Gradient Problem

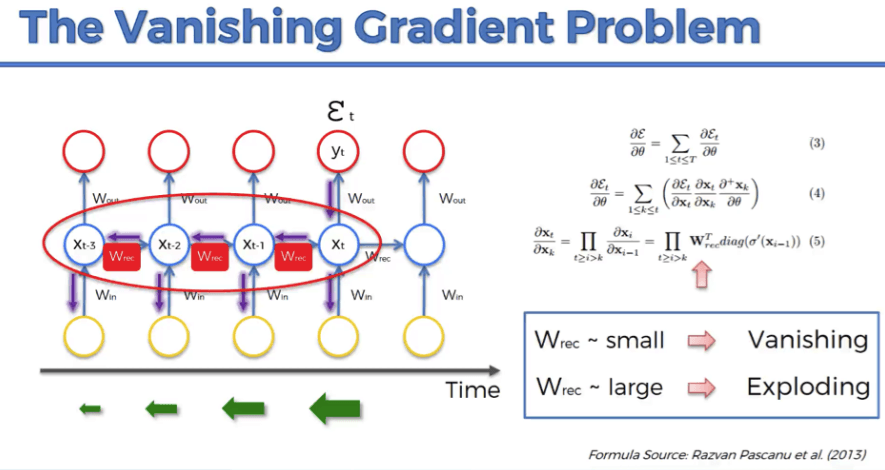

The vanishing gradient problem refers to the tendency of gradient values to become increasingly small during backpropagation in deep neural networks.

This impedes learning as the neural network layers stop updating their weights and biases effectively. There are a few key reasons this happens:

- Repeated multiplication of small values: The chain rule in backpropagation multiplies local gradients sequentially across the layers. If those values have magnitude below 1, their product shrinks with every layer.

- Saturated activations: When activations such as sigmoid saturate near 0 or 1, the local gradient is close to zero, leading to vanishing gradients.

- Poor weight initialization: Weights that are too small shrink the gradient at every layer, while weights that are too large push sigmoid or tanh units into saturation, where the local gradient is close to zero.

For example, in a deep network of 10 layers, if each layer reduces the gradient by 50% through saturated activations or small weights, the gradient reaching the first layer is 0.5^10 ≈ 0.001 of its starting value, or about 0.1%. Such a small gradient struggles to meaningfully update weights in the early layers.

The problem is exacerbated in very deep networks, as the length of the backpropagation chain increases. This effectively blocks the flow of gradient information to early layers, preventing effective learning.
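The chain of multiplications can be written out for a simplified network with one unit per layer. The notation is introduced here for illustration: $a^{(l)} = \sigma(z^{(l)})$ with $z^{(l)} = w^{(l)} a^{(l-1)} + b^{(l)}$, $n$ layers, and loss $\mathcal{L}$:

$$
\frac{\partial \mathcal{L}}{\partial a^{(1)}}
= \frac{\partial \mathcal{L}}{\partial a^{(n)}} \prod_{l=2}^{n} \frac{\partial a^{(l)}}{\partial a^{(l-1)}},
\qquad
\frac{\partial a^{(l)}}{\partial a^{(l-1)}} = w^{(l)}\,\sigma'\!\left(z^{(l)}\right)
$$

For the sigmoid, $\sigma'(z) \le \tfrac{1}{4}$, so whenever $|w^{(l)}| \le 1$ the product is bounded by $(1/4)^{n-1}$, which decays exponentially with depth.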

Solutions include using ReLU activations, residual connections, normalization methods, and better weight initialization techniques. But vanishing gradients remain a key challenge in optimizing deep neural networks.

Methods to Overcome the Vanishing Gradient Problem

Several methods have been proposed to mitigate the Vanishing Gradient Problem. Let's explore some of them:

Long Short-Term Memory (LSTM) Networks

LSTM networks are a type of recurrent neural network (RNN) architecture designed specifically to address the vanishing gradient problem. Their gates control a cell state that lets gradients flow across many time steps without shrinking as quickly as in a plain RNN.
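The mechanism is visible in the standard LSTM cell-state update, written here with the usual symbols ($f_t$ forget gate, $i_t$ input gate, $\tilde{c}_t$ candidate values, $\odot$ element-wise product):

$$
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
$$

Along this direct path, the gradient from $c_t$ back to $c_{t-1}$ is scaled by $f_t$ (ignoring, as a simplification, the gates' own dependence on earlier states). When the forget gate stays close to 1, that factor stays close to 1 as well, instead of being squashed at every step.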

Gated Recurrent Units (GRUs)

GRUs are a gated RNN architecture conceived as a lighter alternative to LSTMs. Their gating mechanisms similarly mitigate vanishing gradients by preserving gradient flow across time steps during training.

Skip Connections

Skip connections in deep neural networks involve bypassing one or more layers with the intention of ensuring a more direct path for gradient flow, thereby alleviating the vanishing gradient problem.
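The effect can be illustrated on a toy chain of scalar layers. With a skip connection each layer computes `a + sigmoid(w * a)` instead of `sigmoid(w * a)`, so its local derivative gains a constant 1. The scalar setup, the weights of 1.0, and the function name `input_gradient` are assumptions for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(x, weights, use_skip):
    """d(output)/d(input) for a chain of scalar layers."""
    a = x
    grad = 1.0
    for w in weights:
        s = sigmoid(w * a)
        local = w * s * (1.0 - s)  # derivative of sigmoid(w * a) w.r.t. a
        if use_skip:
            grad *= 1.0 + local    # layer computes a + sigmoid(w * a)
            a = a + s
        else:
            grad *= local          # layer computes sigmoid(w * a)
            a = s
    return grad

weights = [1.0] * 10
plain = input_gradient(0.5, weights, use_skip=False)
skipped = input_gradient(0.5, weights, use_skip=True)
print(f"plain: {plain:.3e}, with skip connections: {skipped:.3e}")
```

Because every factor in the skip version is `1 + local`, the product cannot collapse toward zero the way the plain product does.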

Batch Normalization

Batch normalization is a technique that normalizes the inputs of each layer over a mini-batch, typically to zero mean and unit variance, followed by a learnable scale and shift.

This standardization facilitates a more stable gradient flow, aiding in addressing the vanishing gradient problem while accelerating the overall training process.
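A minimal sketch of the normalization step for a single feature across a mini-batch is shown below. It covers only the training-time computation; the running statistics used at inference are omitted, and `gamma`, `beta`, and `eps` are the conventional names for the learnable scale, learnable shift, and numerical-stability constant.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

batch = [10.0, 20.0, 30.0, 40.0]
normalized = batch_norm(batch)
print(normalized)  # centered on 0 with roughly unit variance
```

Keeping pre-activation values near zero like this helps sigmoid or tanh units stay out of their saturated, near-zero-gradient regions.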

Gradient Clipping

Gradient clipping sets an upper limit on the gradients during backpropagation, preventing them from exceeding a specified threshold.

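In doing so, this technique stabilizes the learning process by preventing exploding gradients. It does not enlarge gradients that are already too small, so for vanishing gradients it complements the methods above rather than replacing them.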

Frequently Asked Questions (FAQs)

What is the Vanishing Gradient Problem?

The Vanishing Gradient Problem occurs when gradients become extremely small during deep neural network training, slowing down convergence and limiting the model's learning capabilities.

How does the Vanishing Gradient Problem affect deep learning models?

The Vanishing Gradient Problem can cause slow convergence, get the network stuck in suboptimal solutions, and impair the learning of meaningful representations in the early layers.

How can I identify if my model is suffering from the Vanishing Gradient Problem?

Look for signs like parameters of higher layers changing significantly while lower layers barely change, gradients or weights that shrink to zero, and an extremely slow training process.

What causes the Vanishing Gradient Problem?

The problem arises when the per-layer factors multiplied together during backpropagation (activation derivatives and weights) have magnitude less than one, so their product shrinks rapidly by the time it reaches the earlier layers.

Are there any methods to overcome the Vanishing Gradient Problem?

Yes, several techniques can help mitigate the problem, such as using residual neural networks (ResNets), ReLU activation functions, batch normalization, and gated architectures like LSTM and GRU.