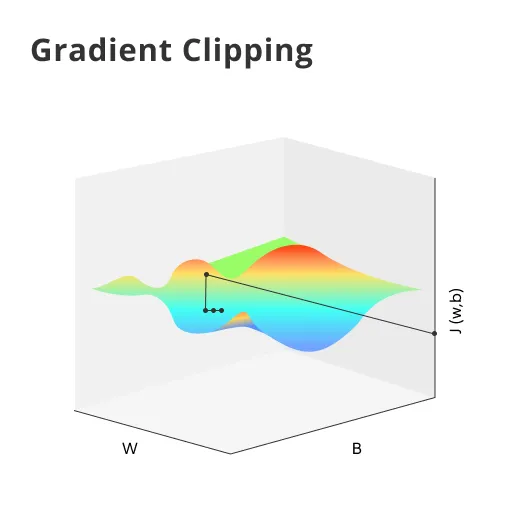

What is Gradient Clipping?

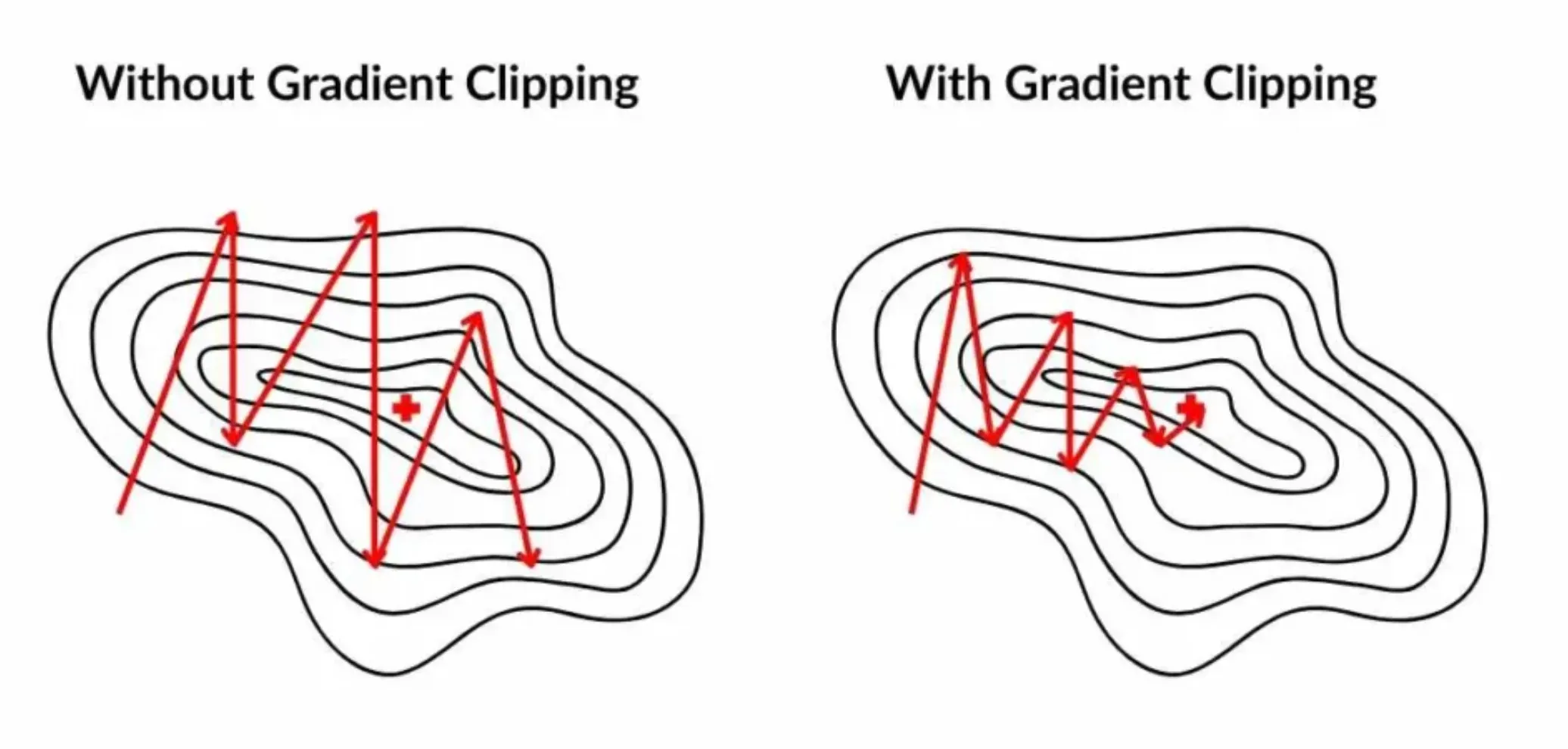

Gradient Clipping is a technique used to prevent exploding gradients in deep neural networks. It sets a threshold value and rescales or truncates the gradients so that they never exceed this value.

Importance of Gradient Clipping

In deep learning, Gradient Clipping restricts the magnitude of gradients. This prevents excessively large, destabilizing changes to model parameters during the update phase.

Types of Gradient Clipping

Gradient Clipping comes in two main types: Norm Clipping and Value Clipping. Each has its own use cases, advantages, and drawbacks.
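As a sketch of the two variants in symbols, write $g$ for the gradient vector and $c > 0$ for the threshold. Both symbols are introduced here for illustration only.

```latex
% Norm clipping: rescale the whole vector when its L2 norm exceeds c
g \leftarrow
\begin{cases}
  g & \text{if } \lVert g \rVert_2 \le c \\
  c \, \dfrac{g}{\lVert g \rVert_2} & \text{otherwise}
\end{cases}

% Value clipping: clamp each component g_i to [-c, c] independently
g_i \leftarrow \max\bigl(-c, \min(g_i, c)\bigr)
```

Norm clipping keeps the direction of $g$ and only shortens it, while value clipping can change the direction because each component is limited separately.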

Gradient Clipping Users

Data scientists, machine learning engineers, researchers, and anyone working with deep neural networks, especially LSTM and RNN architectures, can benefit from Gradient Clipping to prevent exploding gradients during training.

Gradient Clipping's Purpose

Gradient Clipping restricts the magnitude of the gradients computed during backpropagation, mitigating the issue of exploding gradients. It sets a threshold to limit the gradients, preventing excessively large changes in model parameters during the update phase.

Appropriate Scenarios for Gradient Clipping

Gradient Clipping is primarily useful for recurrent neural networks (RNNs), long short-term memory networks (LSTMs), and some convolutional neural networks (CNNs) that are prone to exploding gradients and unstable training.

Timing for Gradient Clipping

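Gradient Clipping should be applied after backpropagation has computed the gradients and right before the model parameters are updated. In PyTorch, this typically means calling a clipping utility such as `torch.nn.utils.clip_grad_norm_` between `loss.backward()` and `optimizer.step()`; in TensorFlow's Keras API, it is typically configured through optimizer arguments such as `clipnorm` or `clipvalue`.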
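The ordering of the steps can be sketched without any framework. The following is a minimal illustration in plain Python, assuming a toy loss L(w) = sum(w_i ** 4); the names `clip_by_norm`, `loss_gradient`, and `train_step` are hypothetical helpers, not library functions.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale grads so that their L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        scale = max_norm / norm
        return [g * scale for g in grads]
    return list(grads)

def loss_gradient(w):
    """Gradient of the toy loss L(w) = sum(w_i ** 4) (an assumed example)."""
    return [4.0 * wi ** 3 for wi in w]

def train_step(w, lr, max_norm):
    grads = loss_gradient(w)                # 1. compute gradients
    grads = clip_by_norm(grads, max_norm)   # 2. clip them
    return [wi - lr * gi for wi, gi in zip(w, grads)]  # 3. update parameters

w = [10.0, -8.0]
for _ in range(5):
    w = train_step(w, lr=0.1, max_norm=1.0)
```

Without step 2, the first update from `w = [10.0, -8.0]` would move the first coordinate by 400, far past the minimum at zero. With clipping, each update moves the parameters by at most `lr * max_norm`.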

The Need for Gradient Clipping

Exploding gradients can make neural networks challenging to train effectively, reducing their performance and even making them unstable. Gradient Clipping allows for a stabilized learning process, ensuring that the network converges more smoothly and with better overall performance.

Math Behind Gradient Clipping

Diving into the mathematics behind Gradient Clipping can provide better clarity about its workings.

Gradient

A gradient in machine learning is the vector of partial derivatives of a function with respect to its inputs. It measures how much the output of the function changes if you change each input a little bit.
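To make "change the inputs a little bit" concrete, here is a small sketch that approximates a gradient with central differences. The function `numerical_gradient` and the example function `f` are assumptions made up for this illustration.

```python
def numerical_gradient(f, x, eps=1e-6):
    """Approximate the gradient of f at x with central differences."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps      # nudge input i slightly up
        down = list(x)
        down[i] -= eps    # and slightly down
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def f(v):
    # Example function: f(a, b) = a**2 + 3*b, whose analytic gradient is (2a, 3)
    return v[0] ** 2 + 3 * v[1]

g = numerical_gradient(f, [2.0, 1.0])  # close to [4.0, 3.0]
```

In practice, deep learning libraries compute gradients analytically through backpropagation rather than numerically; the sketch only shows what the numbers mean.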

Derivative

A derivative is a concept in calculus that measures the rate at which a function changes at a specific point.

Loss Function

In the context of an optimization algorithm, the function used to evaluate a candidate solution (i.e., a set of weights) is referred to as the objective function. When the goal is to minimize it, as in neural network training, it is usually called the loss function.

Gradient Descent

Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function.
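In symbols, one step of gradient descent can be written as below. The notation is an assumption introduced for illustration: $\theta_t$ for the parameters at step $t$, $\eta$ for the learning rate, $L$ for the loss, and $\operatorname{clip}$ for whichever clipping function is chosen.

```latex
% Plain gradient descent step
\theta_{t+1} = \theta_t - \eta \, \nabla_\theta L(\theta_t)

% Same step with the gradient passed through a clipping function first
\theta_{t+1} = \theta_t - \eta \, \operatorname{clip}\bigl(\nabla_\theta L(\theta_t)\bigr)
```

The only difference is that the gradient is limited before it is multiplied by the learning rate, which bounds how far a single step can move the parameters.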

Backpropagation

Backpropagation is a technique that propagates the total loss backward through the neural network, using the chain rule to compute how much each weight contributed to the loss. The optimizer then uses these gradients to update the weights in the direction that reduces the loss.

Implementing Gradient Clipping

To utilize Gradient Clipping effectively, it's important to understand its implementation.

In Python Libraries

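Libraries like TensorFlow and PyTorch provide built-in functions for gradient clipping, such as `torch.nn.utils.clip_grad_norm_` and `torch.nn.utils.clip_grad_value_` in PyTorch, and `tf.clip_by_norm`, `tf.clip_by_value`, and `tf.clip_by_global_norm` in TensorFlow.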

In Custom Neural Networks

For custom-built neural networks, Gradient Clipping can be implemented manually in a few lines of code.
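A manual implementation might look like the following sketch, which clips the gradients of several layers by their combined L2 norm. It assumes gradients are stored as plain lists of floats, one list per layer; `clip_global_norm` is a hypothetical helper name.

```python
import math

def clip_global_norm(grads_per_layer, max_norm):
    """Clip per-layer gradient lists by their combined L2 norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(
        sum(g * g for layer in grads_per_layer for g in layer)
    )
    if total_norm <= max_norm:
        return [list(layer) for layer in grads_per_layer], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in layer] for layer in grads_per_layer], total_norm

layer_grads = [[3.0, 4.0], [12.0]]   # combined norm is 13
clipped, norm_before = clip_global_norm(layer_grads, max_norm=1.0)
```

Using one shared scale factor for all layers keeps the relative sizes of the layer gradients intact, so the overall update direction does not change.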

Influence on Hyperparameters

Using Gradient Clipping may require adjusting hyperparameters such as the learning rate and batch size. It also helps to monitor the fraction of training steps in which the gradients are actually clipped.
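One way to monitor how often clipping is active is to record the gradients (or just their norms) during training and count how many exceed the threshold. The sketch below assumes a list of recorded gradient vectors; `clip_fraction` and the sample data are hypothetical.

```python
import math

def clip_fraction(grad_history, max_norm):
    """Fraction of recorded gradients whose L2 norm exceeds max_norm."""
    if not grad_history:
        return 0.0
    clipped = sum(
        1 for grads in grad_history
        if math.sqrt(sum(g * g for g in grads)) > max_norm
    )
    return clipped / len(grad_history)

# Made-up gradients from four training steps; two of them exceed norm 1.0
history = [[0.3, 0.4], [3.0, 4.0], [0.1, 0.2], [6.0, 8.0]]
fraction = clip_fraction(history, max_norm=1.0)
```

As a rough rule of thumb, a fraction close to 1 suggests the threshold is determining almost every step size, while a fraction close to 0 suggests clipping is rarely doing anything.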

Clip Value vs. Clip Norm

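When implementing Gradient Clipping, you have to choose between clipping each gradient value directly and clipping the norm of the whole gradient. Value clipping limits each component independently and can therefore change the gradient's direction, while norm clipping rescales the whole vector and preserves its direction.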
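The difference between the two choices is easiest to see on a small example. The sketch below uses two hypothetical helpers, `clip_by_value` and `clip_by_norm`, on a gradient with one very large and one small component.

```python
import math

def clip_by_value(grads, clip_value):
    """Clamp every component to the range [-clip_value, clip_value]."""
    return [max(-clip_value, min(g, clip_value)) for g in grads]

def clip_by_norm(grads, max_norm):
    """Rescale the whole vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * max_norm / norm for g in grads]

grads = [30.0, 0.4]
by_value = clip_by_value(grads, 1.0)  # [1.0, 0.4]: component ratio changes
by_norm = clip_by_norm(grads, 1.0)    # ratio of 75 to 1 is kept, norm becomes 1
```

After value clipping, the small component carries much more relative weight than it did originally; after norm clipping, the two components keep their original proportion.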

Regularization and Gradient Clipping

Regularization techniques such as weight decay and dropout, as well as early stopping, interact with Gradient Clipping, so they are best tuned together rather than in isolation.

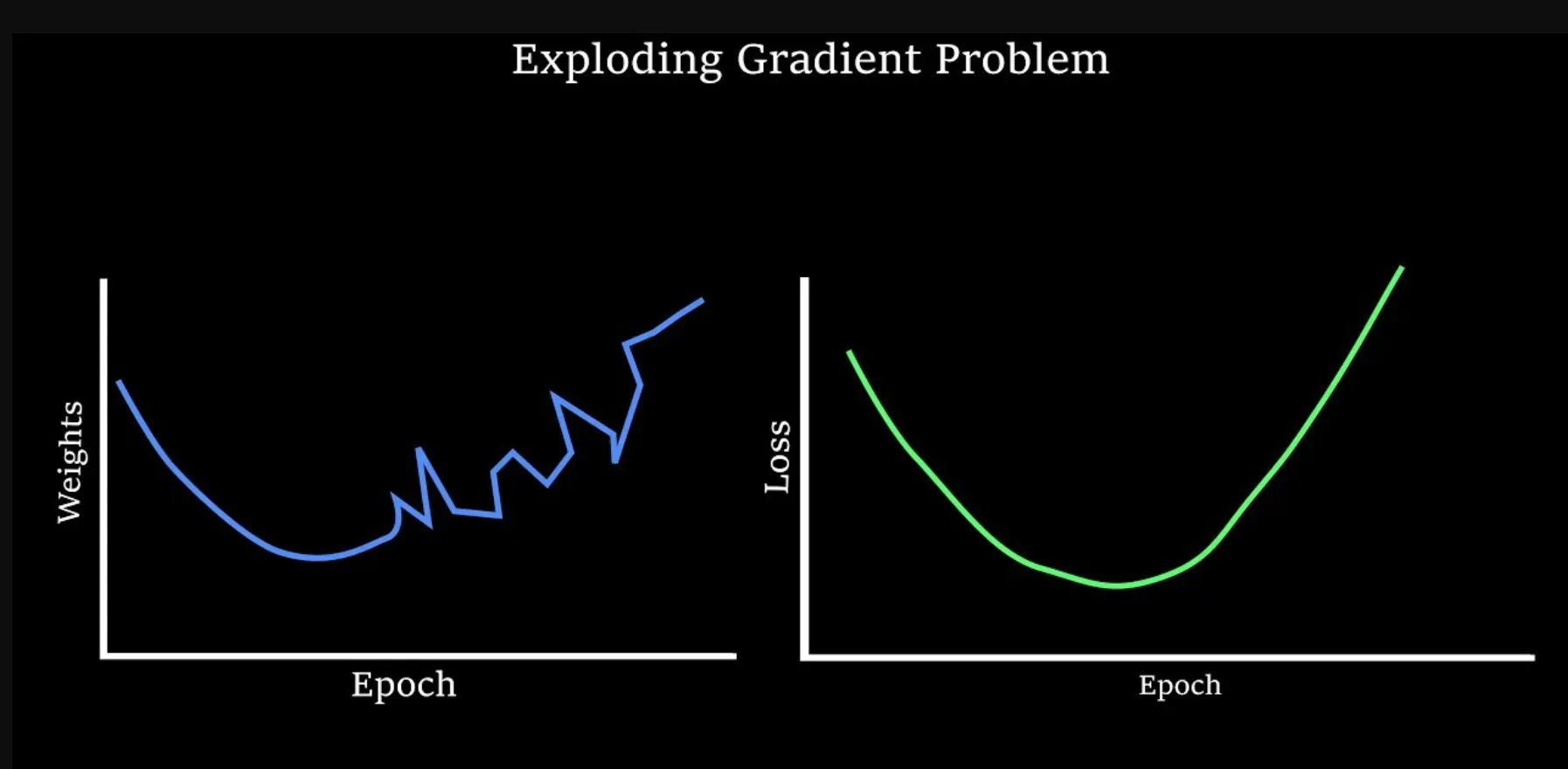

The Issue of Exploding Gradients

Understanding the problem Gradient Clipping aims to solve is crucial.

Explanation of Exploding Gradients

Exploding gradients refer to a large increase in the norm of the gradient during training. Such gradients can destabilize the network and cause the model weights to become very large, leading to poor model performance.

Identification of Exploding Gradients

In practice, exploding gradients can be detected by monitoring the magnitude of the gradients or the weights. If these grow very large, or NaN values appear in computations, exploding gradients are the likely cause.
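Such a check can be sketched in a few lines. The helper `check_gradients` and its default warning level of 1e3 are assumptions chosen for illustration; a sensible warning level depends on the model.

```python
import math

def check_gradients(grads, warn_norm=1e3):
    """Classify a flat list of gradient values as 'nan', 'inf', 'large', or 'ok'."""
    if any(math.isnan(g) for g in grads):
        return "nan"
    if any(math.isinf(g) for g in grads):
        return "inf"
    norm = math.sqrt(sum(g * g for g in grads))
    return "large" if norm > warn_norm else "ok"

status = check_gradients([3000.0, 4000.0])  # norm is 5000, above the warning level
```

Running a check like this every few training steps and logging the result makes it easier to see when training starts to become unstable.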

Exploding Gradients In Different Networks

Exploding gradients are a significant problem in certain types of neural networks, such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs).

Implications of Exploding Gradients

If left unattended, exploding gradients can lead to numerical overflow, instability during training, and, at worst, a network that is completely unusable.

Initial Strategies to Combat Exploding Gradients

Apart from Gradient Clipping, strategies like careful initialization of the network, a smaller learning rate, or batch normalization are used to dampen the effect of exploding gradients.

Gradient Clipping With Recurrent Neural Networks (RNN)

RNNs notoriously suffer from exploding gradients. Understanding how Gradient Clipping helps here can be beneficial.

Overview of RNN

Recurrent Neural Networks are a class of artificial neural networks designed to recognize patterns in sequences of data, such as text, genomes, handwriting, or the spoken word.

RNN and Exploding Gradients

By design, RNNs have a tendency to accumulate and amplify errors over time and iterations. This makes them particularly susceptible to the problem of exploding gradients.
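The amplification can be illustrated with a deliberately simplified model: a scalar linear recurrence h_t = w * h_{t-1}, which is an assumption made for this sketch and far simpler than a real RNN. By the chain rule, the gradient of the final state with respect to the initial state picks up one factor of w per time step.

```python
def gradient_through_time(recurrent_weight, steps):
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= recurrent_weight  # chain rule: one factor of w per time step
    return grad

exploding = gradient_through_time(1.5, 50)  # grows geometrically with steps
vanishing = gradient_through_time(0.5, 50)  # shrinks geometrically with steps
```

With a weight slightly above 1 the gradient grows without bound as the sequence gets longer, and with a weight below 1 it shrinks toward zero, which is the vanishing-gradient counterpart of the same effect.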

Role of Gradient Clipping in RNN

For RNNs, Gradient Clipping ensures that errors backpropagated through time do not explode, which helps stabilize learning. It does not prevent them from vanishing.

Different Methods of Clipping In RNN

In the context of RNNs, both norm-based and value-based gradient clipping techniques find prominence based on the use case.

Performance Enhancement in RNN with Gradient Clipping

When used appropriately, Gradient Clipping can significantly improve the convergence speed and stability of RNN models.

Advanced Concepts Related to Gradient Clipping

A deeper understanding of Gradient Clipping involves its intricacies and limitations.

Gradient Clipping and Vanishing Gradients

Despite its utility in preventing exploding gradients, Gradient Clipping cannot solve the problem of vanishing gradients, because it only shrinks gradients that are too large and never enlarges ones that are too small.

Comparative Analysis of Clipping Techniques

Different scenarios and types of data might warrant the use of different types of Gradient Clipping techniques. A comparative understanding can help decision-making.

Thoroughness and Practicality of Gradient Clipping

Though useful, Gradient Clipping can sometimes be an overly aggressive and crude technique that hides the underlying problem instead of solving it.

Interaction with other Optimization Techniques

Understanding how Gradient Clipping interacts with other optimization techniques can lead to better neural network training.

Advanced Optimization Algorithms

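More advanced optimization algorithms, such as adaptive methods like Adam and RMSProp, scale each update by a running estimate of the gradient magnitude. This has an effect similar to Gradient Clipping and can reduce the need for it, although the two are often still used together.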

Future of Gradient Clipping

Like every technology, concepts and techniques surrounding Gradient Clipping will evolve. Predicting future trends might aid in staying ahead.

Developing Better Techniques

Given the growth of deep learning applications, there is a pressing need for more effective, efficient, and nuanced techniques for mitigating exploding gradients.

Improvements in Software Libraries

Python libraries for machine learning are continually updated with better functionality for gradient clipping and related practices.

Alternative Strategies

Future research in deep learning will likely focus on alternative strategies like architectural modifications or better training algorithms to tackle the problem of exploding gradients.

With Increasing Network Depth

As neural nets get deeper, the challenges from exploding and vanishing gradients get more critical. The relevance and the techniques of Gradient Clipping need to adapt accordingly.

Next Generation Algorithms

Expectations point towards the development of next-generation optimization algorithms capable of adaptively modulating the gradient clipping threshold, or maybe eliminating the need for it altogether.

Best Practices for Gradient Clipping

To make the most of Gradient Clipping, it's essential to follow tried-and-tested best practices.

Choose the Right Clipping Technique

Select between Norm Clipping and Value Clipping based on the problem and the nature of the data. In many cases, Norm Clipping might provide better overall results compared to Value Clipping.

Finding the Optimal Threshold

Experiment with different threshold values to find the one that works best for your network and dataset. A good starting threshold might be 1 or 5, but consider experimenting, validating, and iterating to find the right value.
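One possible way to pick a starting point for that experimentation, offered here as an assumption rather than an established rule, is to record gradient norms during a short unclipped run and take a high percentile of them. The helper `suggest_threshold` and the sample data below are hypothetical.

```python
import math

def suggest_threshold(grad_history, percentile=90):
    """Return the given percentile (nearest-rank) of observed gradient L2 norms."""
    norms = sorted(
        math.sqrt(sum(g * g for g in grads)) for grads in grad_history
    )
    if not norms:
        raise ValueError("grad_history is empty")
    # Ceiling division with integers avoids floating-point rounding in the rank
    rank = max(1, -(-percentile * len(norms) // 100))
    return norms[rank - 1]

# Made-up norms from five steps, with one clear outlier
history = [[0.5], [1.0], [1.5], [2.0], [50.0]]
threshold = suggest_threshold(history, percentile=80)
```

In this example the outlier of 50 is ignored and the suggested threshold is 2.0, so typical steps pass through unchanged while the rare spike gets clipped. The result is only a candidate value that still needs validating on the actual model.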

Monitoring Gradients

Keep track of gradient magnitudes during training to identify whether Gradient Clipping is effectively managing the gradients or if there is still room for improvement.

Integrating with other Regularization Techniques

Coordinate Gradient Clipping with other regularization methods like dropout, weight decay, and early stopping to create more effective and stable models.

Regularly Update Your Knowledge

Stay updated with the latest research and best practices in Gradient Clipping, as the field of deep learning is constantly evolving.

Challenges in Gradient Clipping

Despite its benefits, Gradient Clipping presents its own set of challenges.

Threshold Selection

Choosing the right clipping threshold can be difficult as there is no one-size-fits-all solution, and it often requires a lot of trial and error.

Vanishing Gradient Problem

Gradient Clipping focuses on solving the problem of exploding gradients but does not address the equally important issue of vanishing gradients, which might require additional techniques or model adaptations.

Interaction with Other Techniques

Effectively coordinating Gradient Clipping with other optimization techniques and regularization strategies can be a complex endeavor, but it's essential for optimal model performance.

Limited Use Cases

Gradient Clipping is predominantly useful in specific neural network types like RNNs or LSTMs. It is situational and may not be required for simpler networks or specific problems.

Development of Novel Techniques

As new research emerges, determining whether newer techniques for addressing exploding gradients are more suitable than Gradient Clipping can be a challenge.

Frequently Asked Questions (FAQs)

Why is Gradient Clipping essential in Deep Learning?

Gradient Clipping is vital for addressing exploding gradients during deep learning, preventing the model's weights from becoming too large. It promotes model stability and reduces the risk of exploding gradients, although it does not address vanishing gradients.

How does Gradient Clipping enhance model training?

By limiting the gradients to a specific range, Gradient Clipping keeps the parameter update that follows backpropagation under control. This results in a more stable training process, prevents gradient explosion, and can improve convergence time.

What are the most common Gradient Clipping techniques?

Norm-based and value-based clipping are prevalent techniques. Norm-based clipping scales gradients based on their L2-norm, whereas value-based clipping sets a hard limit on the gradient values, trimming any values exceeding the range.

When should Gradient Clipping be applied in model training?

Gradient Clipping should be applied after backpropagation has calculated the gradients and before they are used to update the model's weights. It is especially useful when training very deep neural networks or recurrent neural networks (RNNs), which are prone to exploding gradients.

Can Gradient Clipping hinder model performance?

If applied excessively, Gradient Clipping can negatively impact model performance. Extremely strict clipping thresholds can discard valuable gradient information and slow down learning. Finding an appropriate balance is crucial for optimal results.
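The cost of an overly strict threshold can be seen on a toy problem. The sketch below assumes a one-dimensional loss f(w) = w ** 2 and counts how many gradient descent steps are needed to get close to the minimum; `steps_to_converge` and all its default values are hypothetical choices for illustration.

```python
def steps_to_converge(max_grad, lr=0.1, start=10.0, tol=0.01, max_steps=100_000):
    """Count gradient descent steps on f(w) = w**2 until |w| < tol.

    The gradient is clipped to the range [-max_grad, max_grad] before each update.
    """
    w = start
    for step in range(max_steps):
        if abs(w) < tol:
            return step
        grad = 2.0 * w                              # derivative of w**2
        grad = max(-max_grad, min(grad, max_grad))  # value clipping
        w -= lr * grad
    return max_steps

loose = steps_to_converge(max_grad=1.0)    # moderate threshold
strict = steps_to_converge(max_grad=0.01)  # very strict threshold
```

With the strict threshold every step is tiny, so the same problem takes many times more updates to solve than with the moderate one.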