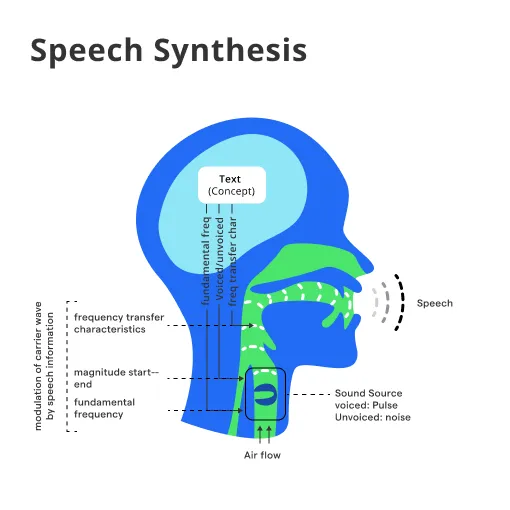

What is Speech Synthesis?

Speech Synthesis, also known as text-to-speech (TTS), is the artificial production of human speech. It's a technology that converts written information into spoken words, allowing computers and other devices to communicate information out loud to a user or audience.

Text-to-Speech Process

We usually start with written text, which a TTS engine processes to generate the spoken version. This process involves several steps, such as text normalization, phonetic translation, and prosody generation, and ends with speech output.

Components

The main components of a TTS system are typically a text processor (also known as a front end) that analyzes the input text, and a speech generator (or back end) that converts the processed text into spoken words.

Purpose

The purpose of speech synthesis is to create a spoken version of text information so devices can communicate with users via speech rather than just display text on a screen. This aids in accessibility and improves user experience by enabling a more natural form of communication.

Architecture

Speech synthesis systems typically consist of two parts: a front end and a back end. The front end converts raw text into a phonetic and prosodic representation, while the back end converts this representation into speech.

Who uses Speech Synthesis?

Now, let's discuss who uses Speech Synthesis and why it's important for them.

Accessibility

One of the primary uses of speech synthesis is to aid those with visual impairments or literacy issues. By reading the text aloud, TTS technologies make information accessible to people who otherwise might not be able to read it.

Multitasking

TTS can help in scenarios where the user's visual attention might be otherwise occupied, such as while driving, cooking, or exercising. In these cases, synthesizing speech from text information allows for an easier, hands-free interaction with devices.

Language Learning

Speech synthesis also assists language learning by letting learners hear the pronunciation and rhythm of a new language, which helps them understand it better.

Telecommunications

Telecommunication services use speech synthesis for functions such as reading out messages, delivering notifications, and powering interactive voice response (IVR) systems.

Entertainment Industry

In the entertainment industry, TTS is used to generate dialogue for video games and animations, and even for movie dubbing. It can reduce the time and cost of hiring voice-over artists and keeps the output consistent.

When is Speech Synthesis used?

Let's discuss the scenarios and applications where Speech Synthesis is used.

Assistive Technologies

Speech synthesis is vital in assistive technologies like screen readers and communication aids. These devices synthesize speech from text to aid users with visual impairments or speech disorders.

Navigation Systems

Turn-by-turn instructions in navigation systems are usually voiced by a speech synthesis system. This allows the user to focus on driving while receiving auditory directions.

E-Learning

In e-learning platforms, content is sometimes delivered via speech synthesis. This supports diverse learning styles and makes digital content more engaging.

Customer Service

Automated customer service is another common application of speech synthesis. By synthesizing human-like speech, customer service bots can create a more personal experience for the customer.

Alerts and Notifications

Speech synthesis is used to deliver spoken alerts and notifications. It is especially valuable for emergency alerts, where TTS can provide clear, standardized messages that are immediately understandable.

Where is Speech Synthesis used?

Now, let's discuss where this technology is most commonly adopted.

Personal Devices

One of the most common places we find speech synthesis today is on personal devices like smartphones, tablets, and computers. It is used across many applications, from reading out notifications and giving navigation directions to supporting accessibility.

Public Places

Public places such as airports, railway stations, and metro stations use speech synthesis for public announcements. This ensures clear and consistent delivery of information.

Homes

With the advent of smart homes, speech synthesis is increasingly being used for communication between humans and their home appliances. This enables a more interactive and intuitive user experience.

Automobiles

In-car systems like GPS navigators, infotainment systems, and voice-activated controls use speech synthesis to communicate information to drivers and passengers.

Telecommunication Services

Speech synthesis has been a cornerstone of interactive voice response (IVR) systems in telecommunications services. It’s used to provide automated customer support, notifications, and much more.

How does Speech Synthesis work?

Let's discuss how Speech Synthesis works:

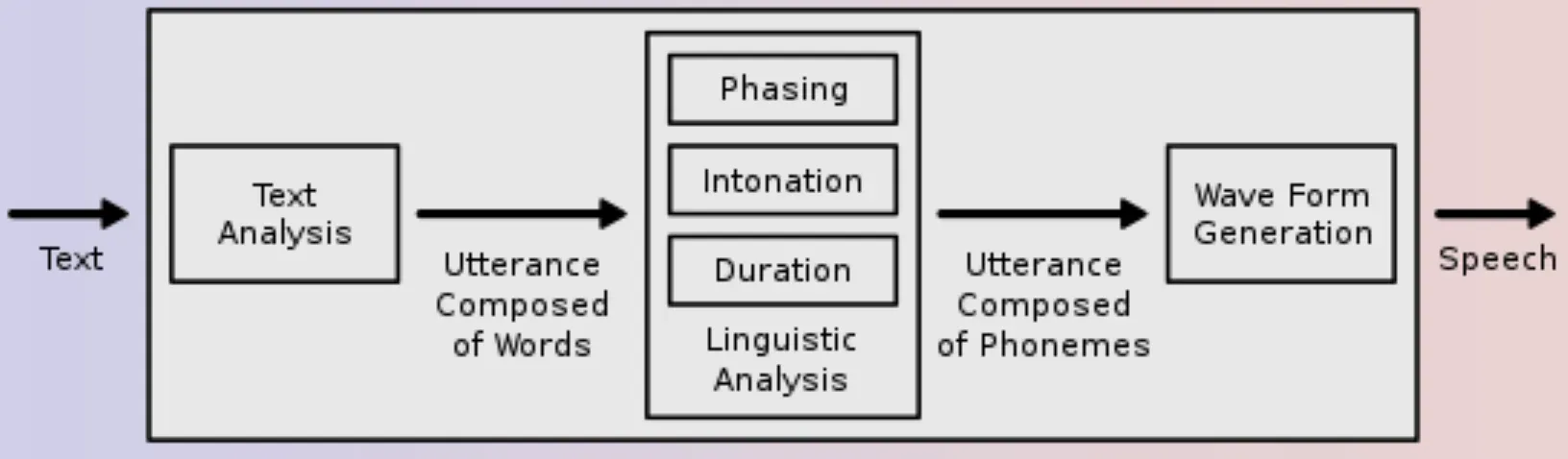

Text Normalization

The first step in TTS is text normalization. This process converts numbers, abbreviations, symbols, and other non-standard written forms into their spoken-word equivalents; for example, "Dr." becomes "doctor" and "42" becomes "forty-two".
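A minimal sketch of rule-based normalization in Python, assuming a tiny hand-written abbreviation table and only spelling out numbers below 1,000. The names `ABBREVIATIONS`, `number_to_words`, and `normalize` are illustrative, not from any TTS library. With these rules, `normalize("Dr. Smith is 42")` returns `"doctor smith is forty-two"`.

```python
import re

# Hypothetical, tiny abbreviation table; real front ends use far larger,
# context-sensitive rules (e.g. "St." can mean "Street" or "Saint").
ABBREVIATIONS = {"Dr.": "doctor", "Mr.": "mister", "etc.": "et cetera"}

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer from 0 to 999."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("-" + ONES[ones] if ones else "")
    hundreds, rest = divmod(n, 100)
    words = ONES[hundreds] + " hundred"
    return words + (" " + number_to_words(rest) if rest else "")


def normalize(text: str) -> str:
    # Expand known abbreviations token by token.
    text = " ".join(ABBREVIATIONS.get(tok, tok) for tok in text.split())
    # Spell out standalone numbers of one to three digits; longer ones are left as-is.
    text = re.sub(r"\b\d{1,3}\b", lambda m: number_to_words(int(m.group())), text)
    return text.lower()
```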

Phoneme Extraction

The next step converts the normalized text into phonemes, the smallest units of sound that distinguish one word from another in a language. This step is also called grapheme-to-phoneme (G2P) conversion.
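A dictionary lookup is the simplest form of this conversion. The sketch below assumes a hypothetical three-word lexicon written in ARPAbet, the phoneme notation used by the CMU Pronouncing Dictionary (digits mark vowel stress). Real systems pair a large lexicon with a trained letter-to-sound model for words the lexicon lacks.

```python
# Hypothetical mini lexicon in ARPAbet notation (vowels carry a stress digit:
# 1 = primary stress, 0 = unstressed).
LEXICON = {
    "hello": ["HH", "AH0", "L", "OW1"],
    "world": ["W", "ER1", "L", "D"],
    "speech": ["S", "P", "IY1", "CH"],
}


def to_phonemes(text: str) -> list[list[str]]:
    """Return one phoneme list per word of already-normalized text."""
    result = []
    for word in text.split():
        if word not in LEXICON:
            # A real system would fall back to a learned G2P model here.
            raise KeyError(f"no pronunciation for {word!r}")
        result.append(LEXICON[word])
    return result
```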

Prosody Generation

In this step, the rhythm, stress, and intonation of speech (prosody) are added to the phonemes, giving the speech a more natural sound.
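A toy rule-based sketch of this step, assuming ARPAbet input and made-up values for durations and pitch: vowels last longer than consonants and stressed vowels longer still, pitch falls gradually across the utterance (a pattern known as declination), and a question ends with a rising pitch. `ProsodicUnit` and `add_prosody` are hypothetical names; modern systems predict these values with trained models instead of fixed rules.

```python
from dataclasses import dataclass


@dataclass
class ProsodicUnit:
    phoneme: str
    duration_ms: int
    pitch_hz: float


def add_prosody(phonemes: list[str], question: bool = False,
                start_hz: float = 140.0, end_hz: float = 100.0) -> list[ProsodicUnit]:
    units = []
    n = len(phonemes)
    for i, ph in enumerate(phonemes):
        is_vowel = ph[-1].isdigit()   # ARPAbet vowels end in a stress digit
        stressed = ph.endswith("1")   # 1 marks primary stress
        duration = 60 + (40 if is_vowel else 0) + (40 if stressed else 0)
        # Declination: pitch falls linearly from start_hz to end_hz.
        # (Simplification: unvoiced consonants also get a pitch value.)
        frac = i / (n - 1) if n > 1 else 0.0
        pitch = start_hz + (end_hz - start_hz) * frac
        units.append(ProsodicUnit(ph, duration, pitch))
    if question and units:
        units[-1].pitch_hz = start_hz * 1.3  # final rise for yes/no questions
    return units
```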

Waveform Generation

Finally, a digital waveform is generated to produce the actual sound of the speech. This can be done through various methods like concatenative synthesis, formant synthesis, or more recently, AI-based methods.
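Of the methods named above, formant synthesis is the easiest to sketch. The minimal Python example below follows the source-filter idea: an impulse train at the fundamental frequency stands in for the vibrating vocal folds, and a cascade of two-pole resonators (in the style of Klatt's formant synthesizer) shapes it into a vowel. The formant frequencies approximate the vowel in "father" for an adult male speaker; the bandwidths and function names are assumptions. It produces one sustained vowel, not speech from text.

```python
import math


def resonator(signal, freq, bandwidth, sample_rate):
    """Klatt-style two-pole resonator: y[n] = A*x[n] + B*y[n-1] + C*y[n-2]."""
    t = 1.0 / sample_rate
    c = -math.exp(-2 * math.pi * bandwidth * t)
    b = 2 * math.exp(-math.pi * bandwidth * t) * math.cos(2 * math.pi * freq * t)
    a = 1 - b - c  # unity gain at 0 Hz
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def synthesize_vowel(f0=120.0, formants=((730, 90), (1090, 110), (2440, 170)),
                     duration_s=0.5, sample_rate=16000):
    """Return normalized samples of a sustained vowel; formants are (Hz, bandwidth Hz)."""
    n = int(duration_s * sample_rate)
    period = int(sample_rate / f0)
    # Source: impulse train at the fundamental frequency (a crude glottal source).
    signal = [1.0 if i % period == 0 else 0.0 for i in range(n)]
    # Filter: pass the source through one resonator per formant, in cascade.
    for freq, bw in formants:
        signal = resonator(signal, freq, bw, sample_rate)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]  # scale so the peak is exactly 1.0
```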

Playback

Finally, the synthesized speech is played through speakers or saved as an audio file for later use.
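Python's standard `wave` module is enough to save synthesized samples as a file. This sketch assumes mono floating-point samples in the range [-1, 1] and stores them as 16-bit PCM; `write_wav` is a hypothetical helper name.

```python
import io
import struct
import wave


def write_wav(samples, sample_rate, dest):
    """Write mono float samples in [-1, 1] as 16-bit PCM WAV.

    `dest` may be a file path or a binary file-like object.
    """
    # Clip each sample to [-1, 1], scale to the 16-bit range, pack little-endian.
    frames = b"".join(
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
    )
    with wave.open(dest, "wb") as wav:
        wav.setnchannels(1)          # mono
        wav.setsampwidth(2)          # 2 bytes per sample = 16-bit PCM
        wav.setframerate(sample_rate)
        wav.writeframes(frames)


# Usage: write to an in-memory buffer; pass a path such as "speech.wav" to save to disk.
buffer = io.BytesIO()
write_wav([0.0, 0.5, -0.5, 1.0], 16000, buffer)
```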

Best Practices for Speech Synthesis

Improving speech synthesis involves balancing technology and user experience. Here are the key practices:

Clarity and Understandability

Every speech synthesis system should prioritize clarity. Choose a voice that is crisp and clearly enunciated. Ensure it can handle complex text passages without confusing the listener.

Natural Sounding Voices

Engaging listeners requires a voice that's pleasant and natural. This means fine-tuning the voice's pitch, tone, pace, and emotion to closely mimic human speech patterns.

Customization Options

Allow users to adjust the voice and speaking rate to suit their listening preferences. Providing a choice of voices, including different genders and accents, can greatly enhance user experience.
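Many TTS engines accept preferences like these through SSML (Speech Synthesis Markup Language), a W3C standard. The sketch below wraps text in a `<prosody>` element built from a user's rate and pitch settings; `build_ssml` is a hypothetical helper, and engines differ in which SSML attributes and values they honor, so the output should be checked against a specific engine's documentation.

```python
from xml.sax.saxutils import escape


def build_ssml(text: str, rate_percent: int = 100, pitch_semitones: int = 0,
               lang: str = "en-US") -> str:
    """Wrap text in SSML 1.1 <prosody> using a user's rate and pitch preferences."""
    pitch = f"{pitch_semitones:+d}st"  # relative change in semitones, e.g. "+2st"
    return (
        f'<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{lang}">'
        # escape() turns &, <, > in user text into XML entities.
        f'<prosody rate="{rate_percent}%" pitch="{pitch}">{escape(text)}</prosody>'
        f"</speak>"
    )
```

For example, `build_ssml("Your order has shipped.", rate_percent=80, pitch_semitones=-1)` asks for speech at 80% of the default rate, one semitone lower.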

Context Awareness

A top-notch system understands the context for proper pronunciation and emphasis. For instance, the way "read" is pronounced changes depending on the sentence it's used in.
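A crude sketch of context-based pronunciation for the "read" example, using a hypothetical keyword heuristic: if nearby words suggest the past tense, the ARPAbet pronunciation R EH1 D ("red") is chosen, otherwise R IY1 D ("reed"). Production systems use part-of-speech tagging or neural models instead, and this rule will misfire on ambiguous sentences such as "I read books."

```python
# Hypothetical cue words suggesting past tense or a past participle.
PAST_CUES = {"have", "has", "had", "was", "were", "been", "already", "yesterday"}


def pronounce_read(sentence: str) -> str:
    """Return an ARPAbet pronunciation for the first 'read' in the sentence."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    for i, word in enumerate(words):
        if word == "read":
            # Look at up to two words on each side of "read".
            context = set(words[max(0, i - 2):i]) | set(words[i + 1:i + 3])
            return "R EH1 D" if context & PAST_CUES else "R IY1 D"
    raise ValueError("sentence does not contain 'read'")
```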

Accessibility and Inclusivity

Ensure that your TTS system is accessible to all, including users with disabilities. Include options for visual feedback or transcript displays for those with hearing impairments.

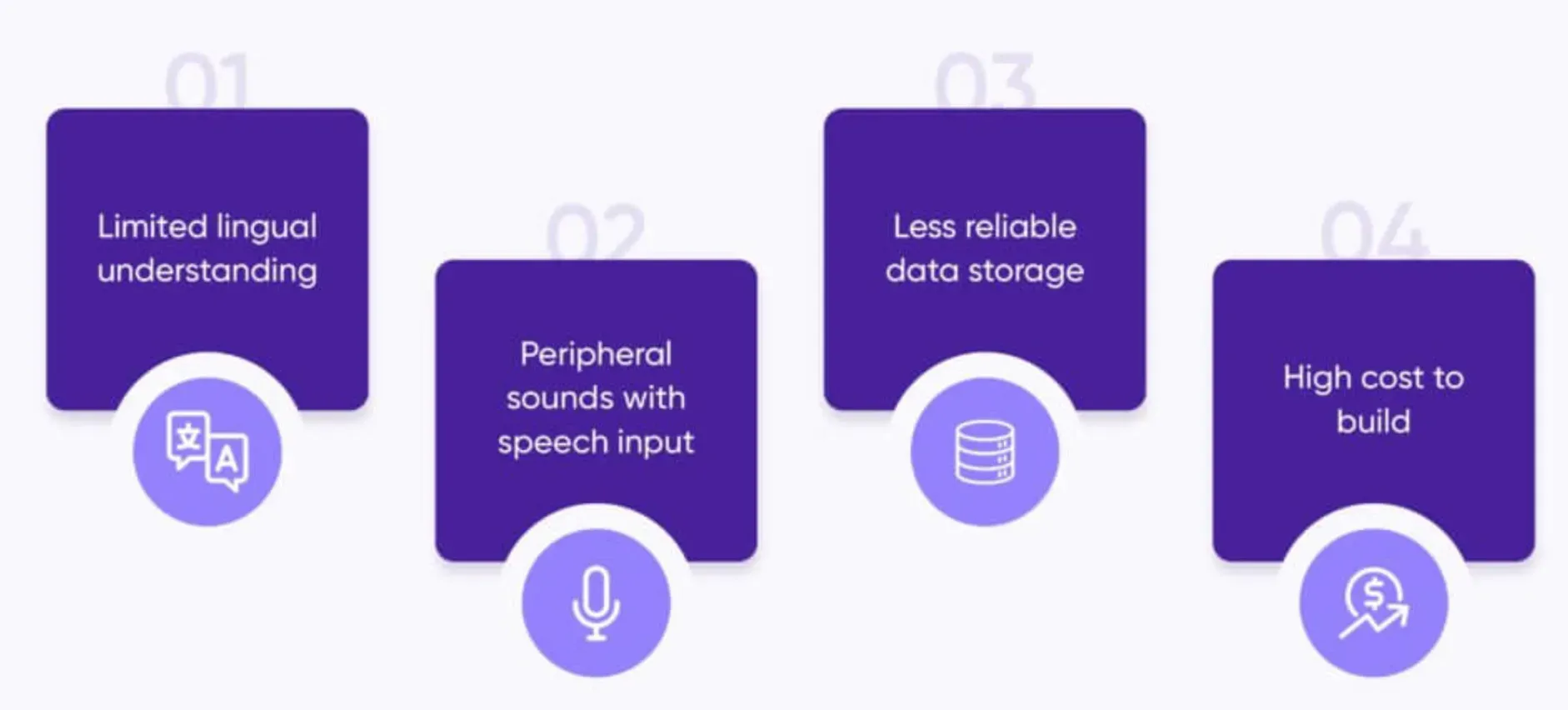

Challenges with Speech Synthesis

While the technology has come a long way, there are still considerable challenges that developers encounter.

Capturing Emotional Nuance

One of the hardest aspects of synthetic speech is giving it the right emotional inflections. Human speech is incredibly complex and emotionally rich, which is not easy for a machine to replicate.

Multilingual Support

Creating a TTS system that supports multiple languages and dialects requires not only adapting the voice but also handling each language's linguistic nuances, which is challenging.

Processing Speed

Achieving high-quality speech without significant delay is a major obstacle, especially for systems that operate on limited computational resources.
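Synthesis speed is commonly measured with the real-time factor (RTF): the time spent synthesizing divided by the duration of the audio produced. An RTF below 1 means the system generates speech faster than it plays back; for example, taking 2 seconds to produce 10 seconds of audio gives RTF = 2 / 10 = 0.2. A minimal measurement sketch, where `synthesize` stands for any hypothetical function that returns a list of samples:

```python
import time


def real_time_factor(synthesize, text, sample_rate):
    """Synthesis time divided by the duration of the audio produced."""
    start = time.perf_counter()
    samples = synthesize(text)
    elapsed = time.perf_counter() - start
    if not samples:
        raise ValueError("synthesizer returned no audio")
    audio_seconds = len(samples) / sample_rate
    return elapsed / audio_seconds
```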

Voice Identity

With personalized voice synthesis, the challenge is not just creating a generic voice but creating one that listeners recognize as belonging to a specific individual.

Continuous Learning

Language is ever-evolving. A robust TTS system should continuously learn and adapt to new linguistic trends and user feedback.

Examples of Speech Synthesis in Action

Let's look at some real-world examples that illustrate how speech synthesis is making a difference.

Assistive Devices for Visually Impaired

Screen readers like JAWS utilize TTS to enable users with visual impairment to access digital content effectively.

Real-Time Translation Services

The Google Translate app offers a conversation mode that uses TTS to speak translations aloud, enabling real-time conversations across languages.

Navigation Systems

GPS devices and apps like Waze or Google Maps use speech synthesis to provide turn-by-turn directions, allowing drivers to keep their eyes on the road.

Virtual Assistants

Voice-powered assistants like Amazon’s Alexa and Apple’s Siri use speech synthesis to interact with users, providing a seamless hands-free experience.

E-Books and Online Articles

Reading apps like Voice Dream Reader transform written content into audio, allowing users to listen to books and articles on the go.

Speech synthesis has evolved from robotic voices to natural-sounding, emotionally expressive speech. It is an essential technology with a wide range of applications that improve accessibility, provide assistance, and enhance user experience.

With ongoing advances and creative implementations, speech synthesis continues to push boundaries, making digital content more engaging and interactive than ever before.

Recent Trends in Speech Synthesis

To wrap things up, let's take a look at some of the recent trends in Speech Synthesis.

Deep Learning Models

Deep learning-based speech synthesis methods are gaining popularity. Models like Tacotron and WaveNet have shown promising results in generating high-quality speech.

Emotion Synthesis

As part of the ongoing effort to make synthesized speech more human-like, adding emotion to synthesized speech is a growing trend.

Personalized Voice Synthesis

This involves synthesizing speech from text in a specific user's own voice, which opens up new possibilities for personalization.

Multilingual Synthesis

In an increasingly globalized world, developing speech synthesis that can handle multiple languages efficiently is widely desired.

Real-time Application

Real-time applications like live readers or voice assistants require rapid response times, pushing advancements in real-time speech synthesis technologies.
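One common way to cut perceived latency is to synthesize and play text in small chunks rather than waiting for the whole passage. This sketch splits text at sentence boundaries and yields audio one sentence at a time, so playback can begin after the first sentence is ready. `synthesize` is a hypothetical function returning samples, and the regex splitter is naive (it would split after abbreviations such as "Dr.").

```python
import re
from typing import Callable, Iterator


def stream_speech(text: str,
                  synthesize: Callable[[str], list[float]]) -> Iterator[list[float]]:
    """Lazily yield synthesized audio one sentence at a time."""
    # Split after ., ! or ? followed by whitespace, keeping the punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        if sentence:
            yield synthesize(sentence)
```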

Frequently Asked Questions (FAQs)

What technologies are utilized in modern Speech Synthesis?

Modern speech synthesis relies on deep learning techniques such as recurrent neural networks, convolutional neural networks, and, increasingly, Transformer-based models to generate natural-sounding speech.

How does prosody impact Speech Synthesis?

Prosody, which includes the rhythm, stress, and intonation of speech, is crucial for producing natural, expressive synthetic speech. It affects how easily listeners understand what is said, and it conveys the speaker's emotions and intentions.

Can Speech Synthesis create emotive and context-aware speech?

Yes, to a degree. Advanced speech synthesis systems can produce emotive speech by taking context and a desired emotion into account and adjusting the voice's tone, pitch, and speed accordingly.

What is the Role of Text-to-Speech (TTS) Engines in Speech Synthesis?

Text-to-Speech engines convert written text into spoken words, providing the backbone for speech synthesis by generating audible speech from raw text inputs.

How has the WaveNet Model advanced the field of Speech Synthesis?

WaveNet, a deep neural network for generating raw audio, marked a significant advance in speech synthesis because it produces speech that closely mimics the human voice and its cadences with high fidelity.
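One concrete detail of how WaveNet models raw audio: the original paper applies μ-law companding, f(x) = sign(x) · ln(1 + μ|x|) / ln(1 + μ) with μ = 255, to each sample and quantizes the result to 256 values, so predicting the next sample becomes a 256-way classification. A minimal sketch of that transform and its inverse (function names are illustrative):

```python
import math

MU = 255  # 8-bit mu-law: 256 quantization levels


def mu_law_encode(x: float) -> int:
    """Map a sample in [-1, 1] to an integer class in [0, 255]."""
    x = max(-1.0, min(1.0, x))
    # Companding: expands resolution near zero, compresses it near +/-1.
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return int((y + 1) / 2 * MU + 0.5)  # round to the nearest of 256 levels


def mu_law_decode(q: int) -> float:
    """Invert the mapping back to an approximate sample in [-1, 1]."""
    y = 2 * q / MU - 1
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)
```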