What is LLM Alignment?

In this section, we’ll discuss what LLM alignment is, starting with a definition.

Definition

LLM Alignment refers to the process of ensuring that large language models (LLMs) behave in ways consistent with human values and intentions. In practice, this means guiding the model to produce outputs that are helpful and avoid causing harm.

Importance of Aligning LLMs

Aligning LLMs is important because these models influence various aspects of our digital lives, from chatbots to content moderation. Proper alignment ensures that AI behaves ethically and delivers outcomes that align with user expectations.

How Alignment Ensures Proper Behavior

Through alignment, LLMs are trained to prioritize behaviors that match human goals. This involves refining the model so that it is less likely to produce harmful or biased outputs, making AI safer and more trustworthy.

Methods for Aligning LLMs

This section describes the main methods used to align LLMs.

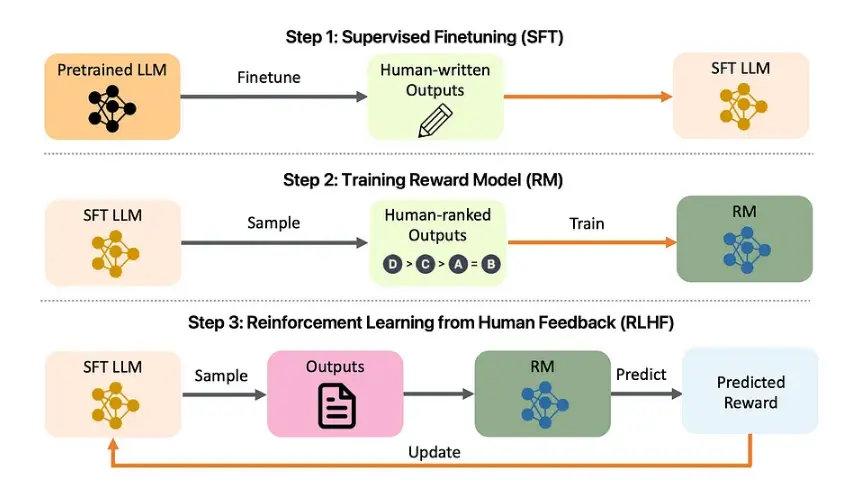

Supervised Fine-Tuning

In supervised fine-tuning, the LLM is trained on labeled examples that demonstrate the desired behavior, typically pairs of prompts and ideal responses.

For instance, if you want an AI to answer customer queries politely, you would fine-tune it with examples of polite responses. This method helps align the model with expected outcomes.
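Supervised fine-tuning usually minimizes the next-token cross-entropy (negative log-likelihood) of the labeled responses. The minimal sketch below assumes the model's probability for each correct token has already been computed (the numbers are made up for illustration) and shows how the loss is often restricted to the response tokens, so the model learns to produce the polite reply rather than to re-predict the customer's question. The function name `sft_loss` is hypothetical.

```python
import math

def sft_loss(token_log_probs, loss_mask):
    """Average negative log-likelihood over the tokens where loss_mask is 1.

    token_log_probs[i]: the model's log-probability of the correct token at position i.
    loss_mask[i]: 1 for response tokens (the desired behavior), 0 for prompt tokens.
    """
    if len(token_log_probs) != len(loss_mask):
        raise ValueError("token_log_probs and loss_mask must have the same length")
    masked = [-lp for lp, m in zip(token_log_probs, loss_mask) if m]
    if not masked:
        raise ValueError("loss_mask selects no tokens")
    return sum(masked) / len(masked)

# Hypothetical example: a 3-token customer question followed by a 4-token polite reply.
# Probabilities are illustrative, not taken from a real model.
log_probs = [math.log(p) for p in [0.2, 0.1, 0.3, 0.5, 0.6, 0.4, 0.8]]
mask = [0, 0, 0, 1, 1, 1, 1]  # only the reply tokens contribute to the loss
print(round(sft_loss(log_probs, mask), 4))  # prints 0.5859
```

Training lowers this value by raising the model's probability of each token in the example responses, which is what "fine-tuning on examples of polite responses" amounts to.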

Reinforcement Learning

Reinforcement learning trains the LLM to optimize for specific goals by rewarding desired behaviors and penalizing unwanted ones.

For example, an AI could be trained to prioritize factual accuracy by rewarding correct information and penalizing false statements. This iterative process gradually aligns the model with the intended behavior.
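To make the reward-and-penalty idea concrete, here is a toy sketch using REINFORCE, a basic policy-gradient algorithm. It assumes a hypothetical "model" that only chooses between two canned answers, with a reward of +1 for the factually correct one and -1 for the false one. Real LLM training applies algorithms such as PPO to full generated token sequences, so treat this as an illustration of the principle, not of a production setup.

```python
import math
import random

# Hypothetical toy setting: the policy picks one of two canned answers.
ANSWERS = ["Paris is the capital of France.", "Lyon is the capital of France."]
REWARDS = [1.0, -1.0]  # reward correct information, penalize the false statement

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(steps=500, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0, 0.0]  # policy parameters; both answers start equally likely
    for _ in range(steps):
        probs = softmax(logits)
        # Sample an answer from the current policy
        action = rng.choices(range(len(ANSWERS)), weights=probs)[0]
        reward = REWARDS[action]
        # REINFORCE: d log pi(action) / d logit_j = 1[j == action] - probs[j]
        for j in range(len(logits)):
            grad_log_prob = (1.0 if j == action else 0.0) - probs[j]
            logits[j] += lr * reward * grad_log_prob
    return softmax(logits)

final_probs = train()
print(f"P(correct answer) = {final_probs[0]:.3f}")
```

Each sampled answer nudges the parameters in proportion to its reward, so over many iterations the probability of the accurate answer rises toward 1. This mirrors the "iterative process" described above.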

Human Feedback

Involving human evaluators to assess the AI’s outputs is crucial for alignment. Humans review the model’s responses and provide feedback on what’s right or wrong.

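The model is then updated based on this feedback, for example by training a reward model on human preference judgments and using it to guide reinforcement learning, an approach known as reinforcement learning from human feedback (RLHF). This brings its outputs closer to human expectations.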
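A common way to turn human feedback into a training signal is to have evaluators compare pairs of responses and then fit a reward model to their choices. The sketch below assumes hypothetical comparison data and, instead of a neural network, learns one scalar score per response with the Bradley-Terry pairwise loss, which models the probability that response `a` is preferred over `b` as `sigmoid(score[a] - score[b])`. The response labels and the function `train_reward_scores` are illustrative only.

```python
import math

# Hypothetical human judgments: (preferred_response, rejected_response)
PREFERENCES = [("polite", "rude"), ("polite", "vague"), ("vague", "rude"),
               ("polite", "rude"), ("vague", "rude")]

def train_reward_scores(preferences, steps=200, lr=0.1):
    """Fit one scalar score per response by minimizing the Bradley-Terry loss
    -log(sigmoid(score[preferred] - score[rejected])) with gradient descent."""
    scores = {r: 0.0 for pair in preferences for r in pair}
    for _ in range(steps):
        grads = {r: 0.0 for r in scores}
        for preferred, rejected in preferences:
            margin = scores[preferred] - scores[rejected]
            # Derivative of -log(sigmoid(margin)) with respect to margin
            g = -(1.0 - 1.0 / (1.0 + math.exp(-margin)))
            grads[preferred] += g
            grads[rejected] -= g
        for r in scores:
            scores[r] -= lr * grads[r]
    return scores

scores = train_reward_scores(PREFERENCES)
print(sorted(scores, key=scores.get, reverse=True))
```

The learned scores rank the responses in the order the evaluators implied (polite, then vague, then rude). In RLHF, a reward model trained this way supplies the rewards used in the reinforcement learning step.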

Real-World Applications of LLM Alignment

This section explores the real-world applications of LLM alignment.

Improving AI in Healthcare

LLM Alignment plays a vital role in healthcare, where AI is used to assist in diagnosis or patient communication. Aligning AI with medical ethics and best practices helps ensure that the technology provides accurate and helpful information, which can improve patient outcomes.

Importance in Customer Support Chatbots

In customer support, aligning the LLM helps ensure that chatbots provide accurate, polite, and helpful responses.

For instance, a well-aligned chatbot will handle customer complaints with empathy and efficiency, enhancing the overall customer experience.

Role in Content Moderation and Social Media

LLM Alignment is critical in content moderation, where AI must identify and remove harmful content without infringing on free expression. Proper alignment helps strike the right balance, ensuring social media platforms remain safe and inclusive.

Future of LLM Alignment

This section looks at where LLM alignment is headed.

Expected Advancements and Ongoing Research

As AI technology evolves, so will methods for LLM Alignment. Researchers are continually exploring new ways to refine and improve alignment processes, making AI systems more reliable and ethical.

Growing Need for Robust Alignment

With AI becoming increasingly integrated into daily life, the need for robust alignment is more critical than ever. Future advancements in LLM Alignment will play a key role in ensuring that AI continues to serve humanity’s best interests.

Challenges in LLM Alignment

This section covers the main challenges in LLM alignment.

Inherent Biases in Training Data

LLMs learn from vast amounts of data, which often contain biases. These biases can lead to skewed or unfair outputs.

For example, an AI trained on biased data might make inaccurate assumptions about certain groups of people, leading to unintended consequences.

Difficulty in Aligning with Human Values

Human values are complex and varied, which makes it challenging to align LLMs with them. What one person views as ethical, another might not, so a one-size-fits-all alignment approach is difficult to create.

Technical Limitations and Unpredictability of LLMs

LLMs are highly complex and can sometimes behave in unpredictable ways. Even with alignment efforts, there is no guarantee that a model will always act as expected. This unpredictability makes consistent and safe AI behavior hard to ensure.

Frequently Asked Questions (FAQ)

What is LLM Alignment?

LLM Alignment refers to the process of ensuring that large language models (LLMs) behave according to human values and intentions, producing outputs that are ethical, reliable, and aligned with user expectations.

Why is LLM Alignment important?

LLM Alignment is crucial because it helps prevent harmful or biased outputs, builds trust in AI systems, and ensures that AI models act in ways that align with human values and societal norms.

What are the challenges in LLM Alignment?

Challenges in LLM Alignment include dealing with inherent biases in training data, aligning AI with diverse human values, and managing the unpredictability of large language models.

How is LLM Alignment achieved?

LLM Alignment is achieved through methods like supervised fine-tuning, reinforcement learning, and incorporating human feedback to guide the model’s behavior and refine its outputs.

What are the benefits of LLM Alignment?

Benefits of LLM Alignment include safer and more trustworthy AI systems, improved decision-making, better user experiences, and compliance with ethical and regulatory standards.

What is the future of LLM Alignment?

The future of LLM Alignment involves ongoing research and advancements in techniques to ensure AI systems are more robust, ethical, and aligned with the evolving needs of society.