What is an Echo state network (ESN)?

An Echo state network is a type of recurrent neural network (RNN) that relies on a distinctive structure to simplify learning. Instead of training all of its weights, it trains only the output weights, while the rest of the network stays fixed.

This structure allows it to process and recognize patterns in sequential data with minimal training, which makes it faster to train and more resource-efficient than traditional RNNs.

Purpose of Echo State Networks

The main purpose of an Echo state network is to handle complex, sequential patterns often found in time-series data, such as stock trends, speech signals, and weather data.

ESNs make real-time analysis easier by requiring less computational power and shorter training times than traditional RNNs.

This efficiency has made ESNs a popular choice for applications where quick adaptation and minimal training are essential.

Echo State Network Deep Learning (Deep ESN)

Echo state network deep learning extends ESNs by stacking multiple reservoirs, with each reservoir driven by the one below it. Deeper layers tend to capture increasingly complex temporal features, loosely similar to how deep neural networks learn hierarchical representations.

As in a standard ESN, only the output layer is trained in Echo state network deep learning. This keeps computational complexity low while preserving the ability to model intricate temporal dependencies.
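The following minimal NumPy sketch shows how the stacking works. It assumes one common Deep ESN layout: the input drives only the first reservoir, each higher reservoir is driven by the state of the layer below, and the readout sees the concatenated states of all layers. The function names, the layer sizes, and the spectral radius of 0.9 are illustrative choices, not a reference implementation.

```python
import numpy as np

def make_layer(n_in, n_res, rho, rng):
    """Fixed random input and recurrent weights for one reservoir layer (never trained)."""
    W_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))
    W = rng.uniform(-1.0, 1.0, size=(n_res, n_res))
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))  # rescale to spectral radius rho
    return W_in, W

def deep_esn_states(inputs, layer_sizes=(50, 50, 50), rho=0.9, seed=0):
    """Run a stack of fixed reservoirs; layer k is driven by the state of layer k-1.

    Returns the concatenated states of all layers, shape (sum(layer_sizes), T).
    """
    rng = np.random.default_rng(seed)
    inputs = np.asarray(inputs, dtype=float).reshape(len(inputs), -1)  # (T, n_in)
    layers, n_in = [], inputs.shape[1]
    for n_res in layer_sizes:
        layers.append(make_layer(n_in, n_res, rho, rng))
        n_in = n_res  # the next layer reads this layer's state
    xs = [np.zeros(n) for n in layer_sizes]
    out = np.empty((sum(layer_sizes), len(inputs)))
    for t, u in enumerate(inputs):
        drive = u  # only the first layer sees the raw input
        for k, (W_in, W) in enumerate(layers):
            xs[k] = np.tanh(W_in @ drive + W @ xs[k])
            drive = xs[k]
        out[:, t] = np.concatenate(xs)
    return out

states = deep_esn_states(np.sin(0.2 * np.arange(500)))
print(states.shape)  # (150, 500)
```

In this layout, the only component that would be trained is a linear readout fitted on `states`, for example with ridge regression. Every weight created inside `deep_esn_states` stays fixed.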

Echo state network deep learning has been applied in areas like speech recognition, time-series forecasting, and control systems. Its ability to process complex temporal data with minimal computational overhead makes it well suited to real-time applications.

Why Are Echo State Networks Different from Traditional RNNs?

Traditional RNNs are known to be difficult to train because of issues like the vanishing gradient problem, where error gradients shrink toward zero as they are propagated back through many time steps. As a result, RNNs can struggle to learn long-term dependencies in data.

The Echo state network sidesteps this by using a structure that removes gradient-based training of the recurrent weights altogether.

How ESNs Overcome the Challenges of Traditional RNNs

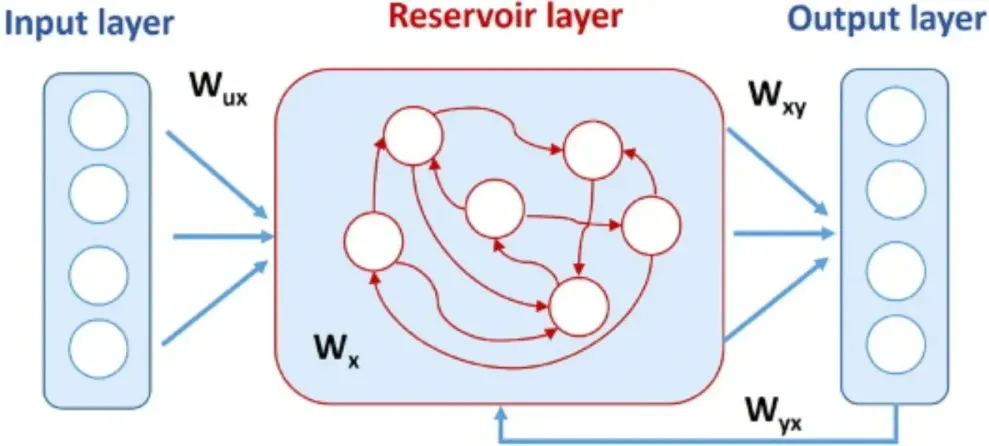

In a standard RNN, all weights (input, recurrent, and output) must be trained, which is resource-intensive. ESNs take a different approach.

They use a "reservoir" of randomly connected nodes and only train the output weights. This design is part of a concept called reservoir computing, where the network's structure allows it to capture complex patterns without intensive training.

By training only the output layer, ESNs sidestep the vanishing gradient problem and can learn faster.

Reservoir Computing: A Simpler Approach

The concept of reservoir computing simplifies the neural network structure by keeping most of it static.

In an Echo state network, the randomly connected reservoir processes incoming data, and only the output layer is optimized. This approach needs much less processing power, and often less training data, than fully trainable RNNs, so ESNs are much easier to work with.

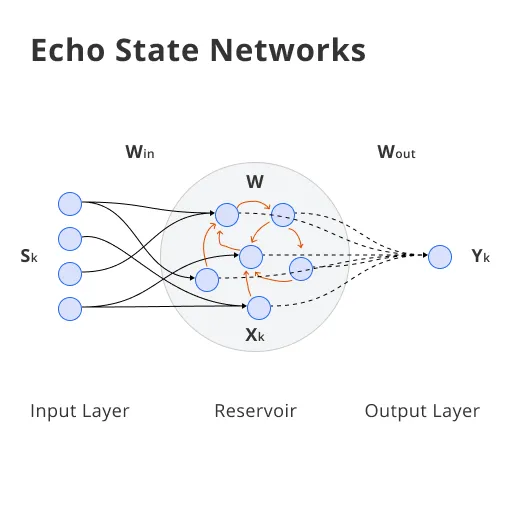

Core Components of an Echo State Network

Understanding an Echo state network requires knowing its core components. Each part plays a role in making ESNs effective yet simple.

Reservoir: The Heart of the Echo State Network

The reservoir is a large collection of nodes that are randomly connected. It acts as a memory, holding past inputs and letting them “echo” throughout the network.

This randomness helps capture diverse patterns in the data without additional training. The reservoir is what allows ESNs to model dynamic data effectively.
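Here is a rough illustration of how such a reservoir can be built: a random weight matrix in which only a small fraction of the possible connections exist. This NumPy sketch assumes a uniform weight distribution and a connection density of 5%. Both are common but arbitrary choices, and `make_reservoir` is a hypothetical helper.

```python
import numpy as np

def make_reservoir(n_units: int, density: float = 0.1, seed: int = 0) -> np.ndarray:
    """Return a sparse random recurrent weight matrix W of shape (n_units, n_units).

    Each connection exists with probability `density`; existing weights are drawn
    uniformly from [-1, 1]. The matrix is never trained afterwards.
    """
    rng = np.random.default_rng(seed)
    weights = rng.uniform(-1.0, 1.0, size=(n_units, n_units))
    mask = rng.random((n_units, n_units)) < density  # keep roughly `density` of the links
    return weights * mask

W = make_reservoir(200, density=0.05)
print(W.shape)                        # (200, 200)
print(np.count_nonzero(W) / W.size)   # close to 0.05
```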

Input Layer: Feeding Data into the Reservoir

The input layer connects to the reservoir and feeds data into it. However, unlike in traditional RNNs, the input connections aren’t trained in ESNs.

This lack of training keeps the process efficient while still allowing data to flow into the reservoir.

Echo State Property: Ensuring Stability

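The echo state property ensures that the reservoir remains stable. The reservoir's state ends up depending on the recent input history rather than on its initial state, so past inputs echo within the reservoir but gradually fade.

This property keeps the network from becoming chaotic, so the same input sequence reliably produces the same reservoir response. In practice, it is usually encouraged by scaling the reservoir weights so that their spectral radius is below 1.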
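The following sketch illustrates the property under one widely used heuristic. Treat this heuristic as an assumption rather than a guarantee. The reservoir matrix is rescaled so that its spectral radius (largest absolute eigenvalue) is 0.9. Two copies of the same reservoir are then driven by the same input from very different starting states. If the echo state property holds, the two states converge, because the reservoir "forgets" where it started. The helper names and all settings are illustrative.

```python
import numpy as np

def scale_spectral_radius(W: np.ndarray, target: float) -> np.ndarray:
    """Rescale W so that its largest absolute eigenvalue equals `target`.

    A spectral radius below 1 is a common heuristic for the echo state
    property, not a proof that it holds.
    """
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    return W * (target / radius)

def run_reservoir(W, W_in, inputs, x0):
    """Drive the reservoir with `inputs` starting from state x0; return the final state."""
    x = x0
    for u in inputs:
        x = np.tanh(W_in @ np.atleast_1d(u) + W @ x)
    return x

rng = np.random.default_rng(42)
n = 100
W = scale_spectral_radius(rng.uniform(-1, 1, (n, n)), target=0.9)
W_in = rng.uniform(-0.5, 0.5, (n, 1))
inputs = np.sin(np.linspace(0, 20, 300))

# Same reservoir, same inputs, two very different initial states
xa = run_reservoir(W, W_in, inputs, rng.uniform(-1, 1, n))
xb = run_reservoir(W, W_in, inputs, np.zeros(n))
print(np.linalg.norm(xa - xb))  # near 0: the initial state has washed out
```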

Output Layer: The Only Trainable Part

The output layer is the only part of an ESN that gets trained. By focusing training efforts here, ESNs avoid the complex optimization process required by traditional RNNs. This approach not only reduces computational load but also accelerates the network's learning speed.
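A common way to train the readout, though not the only one, is ridge (Tikhonov-regularized) linear regression. Assume the reservoir states x(t) are collected as the columns of a matrix X, the desired outputs y_target(t) as the columns of Y, and beta > 0 is a small regularization constant. The output weights then minimize a regularized squared error, which has a closed-form solution:

```latex
\min_{W_{\text{out}}} \sum_{t} \left\lVert y_{\text{target}}(t) - W_{\text{out}}\, x(t) \right\rVert^2
  + \beta \left\lVert W_{\text{out}} \right\rVert_F^2
\quad\Longrightarrow\quad
W_{\text{out}} = Y X^{\top} \left( X X^{\top} + \beta I \right)^{-1}
```

Because training reduces to a single linear solve, no gradients are propagated back through time.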

The core components of an Echo State Network work together to efficiently process dynamic data while maintaining stability and simplicity.

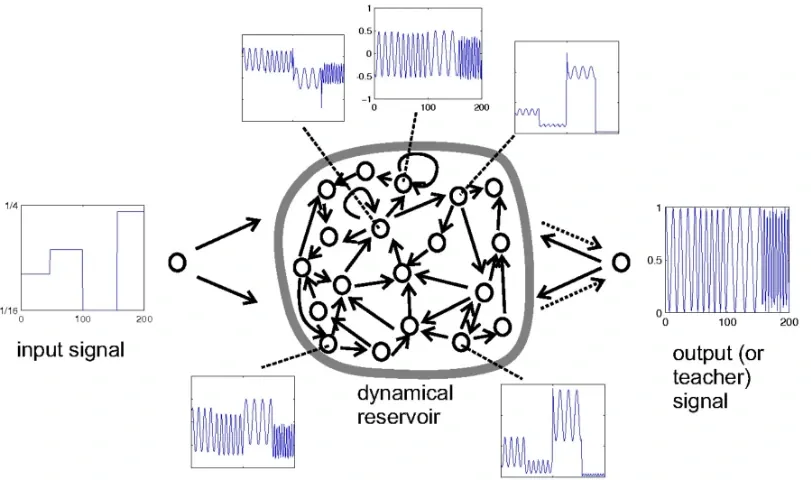

How Does an Echo State Network Work?

The functioning of an Echo state network involves a straightforward three-step process that maximizes simplicity and efficiency. Here are the three steps:

Step 1: Input Feeding

In the first step, data is fed into the reservoir through the input layer. Unlike in fully trainable RNNs, the input weights are fixed random values. The input layer simply projects each input into the reservoir and is never adjusted during training.

Step 2: Activation in the Reservoir

Within the reservoir, each node combines the current input with the previous activations of the nodes it is connected to and passes the result through a nonlinear activation function. This lets the network capture complex relationships within the sequence.

The randomized connections contribute to diverse activations, enabling the Echo state network to detect subtle patterns.
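In the standard (non-leaky) formulation, steps 1 and 2 reduce to a single state update, and step 3 is a linear map. The following equations use common textbook notation. This notation is an assumption, since the article does not define it. Here u(t) is the input, x(t) the reservoir state, W_in the fixed input weights, W the fixed reservoir weights, and W_out the trained readout. The tanh is applied element-wise, and some variants add a bias term or a leaking rate.

```latex
x(t) = \tanh\!\left( W_{\text{in}}\, u(t) + W\, x(t-1) \right),
\qquad
y(t) = W_{\text{out}}\, x(t)
```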

Step 3: Output Generation

The final step involves the output layer, which has been trained to interpret the activity in the reservoir. This trained output layer transforms the reservoir's responses into predictions or classifications. Since only the output weights require training, this process is much less resource-intensive than traditional RNNs.
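Here is a minimal end-to-end sketch of the three steps in Python with NumPy. It assumes a toy task (one-step-ahead prediction of a sine wave), a dense reservoir of 100 nodes rescaled to spectral radius 0.9, and a ridge-regression readout. All function names and hyperparameter values are illustrative and untuned.

```python
import numpy as np

def make_esn(n_res=100, rho=0.9, input_scale=0.5, seed=0):
    """Create fixed random input and reservoir weights (neither is ever trained)."""
    rng = np.random.default_rng(seed)
    W_in = rng.uniform(-input_scale, input_scale, size=n_res)
    W = rng.uniform(-1.0, 1.0, size=(n_res, n_res))
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))  # spectral-radius heuristic
    return W_in, W

def collect_states(W_in, W, inputs):
    """Steps 1-2: project each scalar input with W_in and update the reservoir."""
    x = np.zeros(W.shape[0])
    states = np.empty((W.shape[0], len(inputs)))
    for t, u in enumerate(inputs):
        x = np.tanh(W_in * u + W @ x)
        states[:, t] = x
    return states  # shape (n_res, T): one column per time step

def fit_readout(X, Y, beta=1e-6):
    """Step 3 (training): ridge regression, W_out = Y X^T (X X^T + beta I)^-1."""
    A = X @ X.T + beta * np.eye(X.shape[0])
    return np.linalg.solve(A, X @ Y.T).T  # A is symmetric, so this matches the formula

# Toy task: predict the next value of a sine wave from the current one.
series = np.sin(0.2 * np.arange(1201))
inputs, targets = series[:-1], series[1:]

W_in, W = make_esn()
X = collect_states(W_in, W, inputs)
washout, split = 200, 900  # drop the initial transient; train on [washout, split)

W_out = fit_readout(X[:, washout:split], targets[None, washout:split])
predictions = W_out @ X[:, split:]  # Step 3 (inference): a linear readout
test_mse = np.mean((predictions[0] - targets[split:]) ** 2)
print(f"test MSE: {test_mse:.2e}")
```

The first 200 states are discarded as a washout. They still reflect the arbitrary all-zero starting state rather than the input history.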

An Echo State Network efficiently processes data with minimal training, relying on randomized reservoir activations and trained output weights.

What Are the Key Advantages of Echo State Networks?

The unique structure of the Echo state network offers several advantages that make it valuable for specific applications. Here are the key advantages of Echo state networks:

Computational Efficiency

Echo state networks require training only the output weights, because the input and recurrent connections remain fixed after random initialization.

This significantly reduces computational demands and makes them faster to train compared to traditional recurrent neural networks.
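A quick parameter count shows where the savings come from. This sketch makes three assumptions: the weight matrices are dense, the readout sees only the reservoir state, and there are no bias terms. Real ESNs often use sparse reservoirs and may also feed the input directly to the readout. `esn_parameter_counts` is a hypothetical helper.

```python
def esn_parameter_counts(n_inputs: int, n_reservoir: int, n_outputs: int) -> dict:
    """Count fixed vs. trainable weights in a dense ESN (biases ignored)."""
    fixed = n_reservoir * n_inputs + n_reservoir * n_reservoir  # W_in and W
    trainable = n_outputs * n_reservoir                          # W_out only
    return {"fixed": fixed, "trainable": trainable}

print(esn_parameter_counts(n_inputs=1, n_reservoir=500, n_outputs=1))
# {'fixed': 250500, 'trainable': 500}
```

With 500 reservoir nodes, one input, and one output, only 500 of the 251,000 weights are trained.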

Simplified Training

By eliminating the need for backpropagation through time (BPTT), Echo state networks avoid common issues like vanishing and exploding gradients. This simplifies the training process and enhances stability.

Powerful Temporal Dynamics

The fixed reservoir of an Echo state network is designed to capture rich temporal features, which makes it highly effective for tasks such as time-series forecasting, signal processing, and speech recognition.

Versatility and Robustness

Echo state networks can be tailored to various applications by adjusting parameters like reservoir size and spectral radius. They can also tolerate moderate noise in the input data.

Integration with Deep Learning

Echo state networks can complement deep learning architectures by providing an efficient mechanism for handling sequential data, which enhances the temporal modeling capabilities of complex models.

Echo state networks combine computational efficiency, simplified training, and robust temporal dynamics, making them well suited to sequential data tasks.

Limitations of Echo State Network

While Echo state networks offer simplicity, they also come with certain limitations that may restrict their use in some scenarios. Here are the main limitations of Echo state networks:

Restricted Learning Scope

Echo state networks use fixed, random reservoir weights and adjust only the output weights during training. This limits their ability to learn complex patterns compared with deep learning models like LSTMs or Transformers, which adapt all of their parameters.

Scalability Challenges

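Unlike modern deep learning architectures, the Echo state network does not scale naturally to deep feature hierarchies. A standard ESN uses a single reservoir and has no built-in mechanism for learning hierarchical features. Deep ESNs stack reservoirs, but those reservoirs stay untrained, so the extra layers do not learn features the way the layers of fully trainable deep networks do.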

Dependence on Reservoir Tuning

The performance of an Echo state network depends heavily on hyperparameters like the spectral radius and reservoir size, and it can also vary with the random initialization. This reliance makes model design labor-intensive and results harder to reproduce consistently.
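One way to see this dependence is to repeat the same task across a few spectral radii and random seeds. The sketch below assumes a simple delayed-recall task (reproduce the input from 10 steps earlier) and a ridge-regression readout. The task, the function name `delay_task_error`, and all settings are illustrative. The printed validation errors can be compared across rows (spectral radius) and within a row (random seed).

```python
import numpy as np

def delay_task_error(rho, seed, n_res=100, delay=10, beta=1e-6, T=2000, washout=200):
    """Validation MSE of an ESN asked to output its input from `delay` steps ago."""
    rng = np.random.default_rng(seed)
    u = rng.uniform(-0.5, 0.5, T)  # random input sequence
    W_in = rng.uniform(-0.5, 0.5, n_res)
    W = rng.uniform(-1.0, 1.0, (n_res, n_res))
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))  # set the spectral radius
    x, X = np.zeros(n_res), np.empty((n_res, T))
    for t in range(T):
        x = np.tanh(W_in * u[t] + W @ x)
        X[:, t] = x
    # Target y(t) = u(t - delay), aligned with the states after the washout period
    X, y = X[:, washout:], u[washout - delay:T - delay]
    split = X.shape[1] // 2  # first half trains the readout, second half validates
    A = X[:, :split] @ X[:, :split].T + beta * np.eye(n_res)
    w_out = np.linalg.solve(A, X[:, :split] @ y[:split])  # ridge regression
    return np.mean((w_out @ X[:, split:] - y[split:]) ** 2)

for rho in (0.1, 0.5, 0.9):
    errors = [delay_task_error(rho, seed) for seed in range(3)]
    print(f"rho={rho}: " + ", ".join(f"{e:.4f}" for e in errors))
```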

Sensitivity to Data Noise

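Echo state networks can perform poorly on heavily noisy data and on tasks with long-term dependencies, because the reservoir's fading memory limits how far back past inputs can influence the output. These are areas where advanced deep learning architectures excel.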

No End-to-End Learning

Echo state networks do not support end-to-end optimization, because the reservoir is never trained. End-to-end optimization is a hallmark of modern deep learning systems and is essential for integrating feature learning with task-specific objectives.

Echo state networks have limitations in scalability, learning scope, and noise sensitivity, which restrict their use in complex tasks.

Real-World Applications of Echo State Networks

Despite their limitations, Echo state networks have several practical applications across various fields, particularly in time-based and sequential data processing.

Time-Series Prediction

ESNs are effective in time-series prediction tasks, such as forecasting stock prices, predicting weather patterns, and modeling sales trends. They excel in these areas due to their ability to remember past sequences and detect trends over time.

Speech Recognition

In the realm of speech recognition, ESNs can analyze sequences of audio data to identify patterns in language. Their structure is ideal for capturing the sequential nature of speech, which is essential for accurate language processing.

Signal Processing

Signal processing applications, like interpreting sensor data, also benefit from ESNs. The reservoir’s capacity to handle dynamic inputs makes ESNs suitable for applications involving sensor-based feedback, such as medical monitoring or environmental sensing.

Control Systems

In control systems for robotics or automated systems, ESNs can model complex behaviors by learning from past movements or patterns. This ability to “remember” past actions makes them suitable for tasks like robotic navigation or movement planning.

Echo state networks offer practical solutions for time-series prediction, speech recognition, signal processing, and control systems.

Frequently Asked Questions (FAQs)

What is an Echo state network (ESN) used for?

Echo state networks are primarily used for time-series data tasks, such as predicting stock prices, speech recognition, and robotics control. Their unique structure makes them well suited to applications that need efficient, fast processing of sequential data.

How does Echo state network deep learning differ from traditional RNNs?

Unlike traditional RNNs, Echo state network deep learning does not train all layers. It relies on randomly connected reservoirs and trains only the output layer. This makes it faster, simpler, and less resource-intensive, and it avoids issues like the vanishing gradients that affect RNN training.

Why are Echo state networks popular for time-series data?

Echo state networks are well suited to time-series data because they retain a memory of previous inputs, which allows them to model complex sequences. Their efficiency in capturing patterns without extensive training makes them popular for sequential data analysis.

What are the main parts of an Echo state network?

An Echo state network is built from an input layer, a reservoir (randomly connected nodes), and an output layer, which is the only trainable part. A fourth key element is the echo state property, which ensures stability.

What is the echo state property in ESNs?

The echo state property ensures past inputs echo within the network but eventually fade. This stability feature helps prevent chaotic outputs, maintaining consistent and predictable performance for sequential data tasks.

What are the shortcomings of Echo state networks?

ESNs can be limited by unpredictable reservoir behavior, difficulty in reservoir tuning, and restricted flexibility compared to fully trainable RNNs. These factors may limit their adaptability for certain complex learning tasks.