What is Reservoir Computing?

Imagine your brain. It's brilliant at processing loads of info all at once, right? Reservoir computing borrows a tiny bit of that magic and runs it on a computer.

The Basic Idea

At its core, reservoir computing is a machine learning framework, derived from recurrent neural networks, for processing sequences of data. It's like having a big tank (that's the "reservoir") where incoming data gets mixed and stirred in complex ways. A simple trained readout then uses that mixture to solve problems, especially recognizing patterns or predicting what comes next in a sequence.

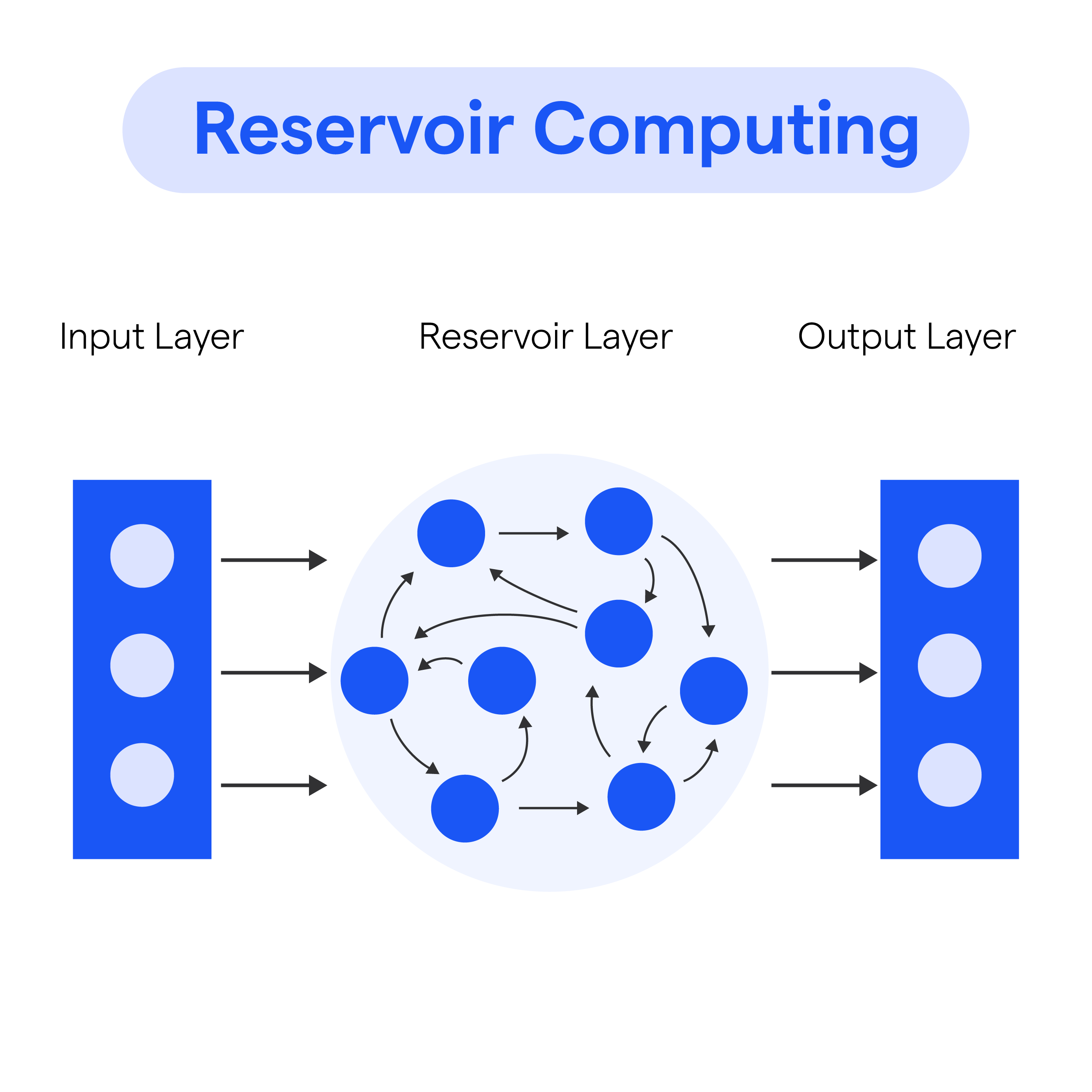

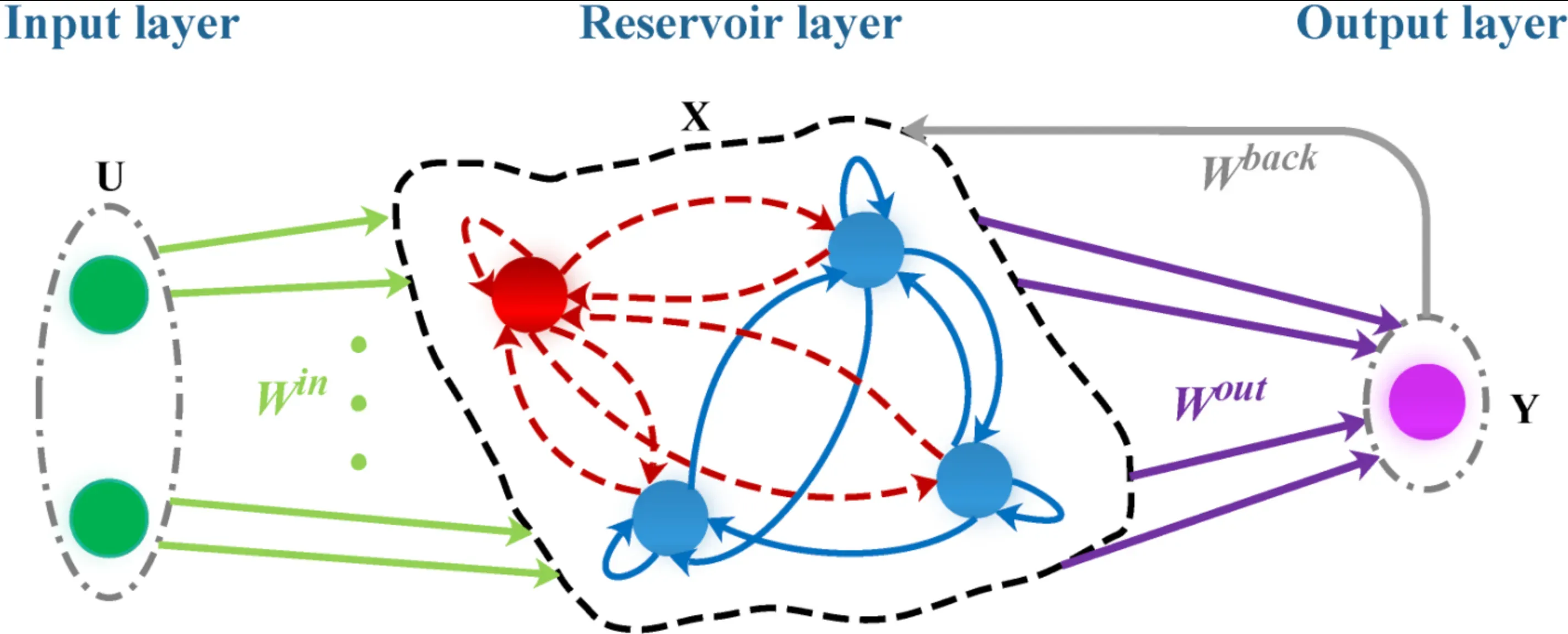

Key Components

There are three main heroes in the story of reservoir computing: the input layer, the reservoir, and the output layer. Imagine pouring water (data) into a sponge (the reservoir); the sponge gets soaked differently depending on how you pour the water. That soaking pattern helps decide what comes out of the sponge.

The Reservoir

This is where the magic happens. It’s a network, usually a large, randomly connected recurrent neural net, whose internal state responds to incoming data in very complex, dynamic ways. It's not built with a specific task in mind, and its connections stay fixed once they are created; only its internal state changes as input arrives.

Training

Unlike traditional neural networks, you don't have to train the reservoir. It's the output that gets tuned. Think of it as not teaching the sponge how to soak up water but rather teaching someone how to squeeze the sponge to get the water you want.

Applications

Reservoir computing shines in areas where real-time processing of time-dependent data is crucial, like predicting stock market trends or understanding how a disease spreads in a population.

Why is it used?

Reservoir computing, a bit of an unsung hero, is particularly useful in scenarios where fully training a recurrent neural network would be slow, unstable, or simply more than the problem needs.

Efficiency

First up, it’s efficient. Traditional models can be like teaching a child to solve a Rubik's Cube by memorizing every possible move. Reservoir computing, by contrast, is like teaching them to notice patterns and use shortcuts. Because only the output layer is trained, often with a single linear regression, it usually needs far less computation to train than a network trained end to end.

Handling Time-Series Data

It’s a champ at dealing with data that changes over time, such as weather patterns, stock prices, or electrical brain signals. Where other models might get overwhelmed, a reservoir computer keeps chugging along, making sense of the chaos.

Minimal Training Required

Remember, the only part you need to train is the output. This is much less work compared to fully training traditional neural networks, saving time and energy.

Flexibility

Reservoir computing isn’t picky about the type of data you throw at it. Numbers, pictures, sounds: as long as the data can be fed in as a sequence of numbers, it can handle a wide range without needing a complete redesign for each type.

Robustness

It’s tough. Because of the way it operates, it can handle noisy or incomplete data better than some other models, making it quite reliable in real-world scenarios where data isn’t always perfect.

Who uses it?

You might wonder, “Who really uses this stuff?” Well, reservoir computing has found fans across various fields, from academia to the tech industry.

Academics

Researchers and scientists love diving into the depths of reservoir computing to push the boundaries of what it can do, especially in neuroscience and physics.

Tech Industry

Big tech companies and startups alike explore reservoir computing for its efficiency and adaptability, applying it to speech recognition, predictive typing, and more.

Finance

In the fast-paced world of finance, being able to predict market trends, even a tiny bit better, can mean big bucks. Reservoir computing gets deployed here for its prowess in analyzing time-series data.

Healthcare

Healthcare professionals and researchers are beginning to explore how reservoir computing might help with predicting disease outbreaks and understanding patient data over time.

Environmental Science

Weather forecasting, climate modeling, and ecosystem monitoring are fields where the amount of data can be overwhelming, and where reservoir computing can help make sense of it all.

How does it work?

Let's break down the nuts and bolts of how reservoir computing actually does its thing, without making your head spin.

The Input Layer

This is where data enters the reservoir computing system. Think of it as the door through which information walks in, ready to be processed.

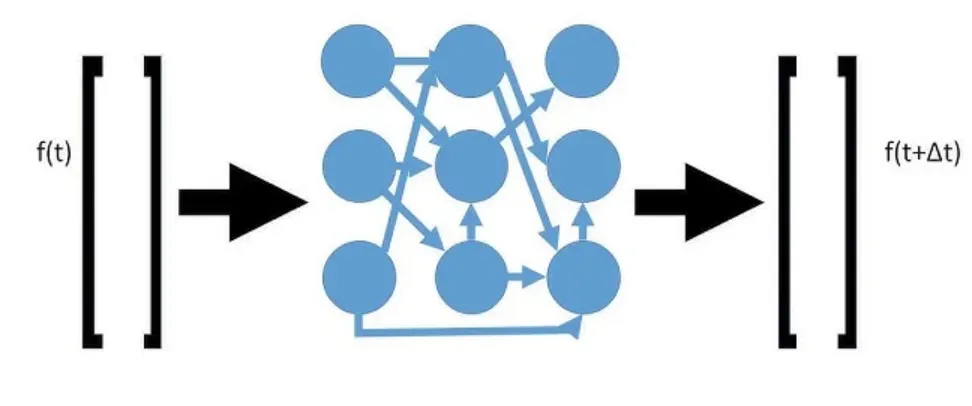

Transformation in the Reservoir

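Once inside, the data goes through a whirlwind of transformations. At every time step the reservoir mixes the new input with its own previous state, so its current state carries a fading echo of recent inputs. That rich, mixed-up state is what the output layer later reads.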
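To make the "mixing" a bit more concrete, here is a minimal sketch of one common flavor of reservoir, an echo state network, written with NumPy. The sizes, weight ranges, the 0.9 scaling factor, and the helper names `step` and `run_reservoir` are all illustrative assumptions, not values taken from this article.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

n_inputs, n_reservoir = 1, 50   # illustrative sizes, not recommendations

# Fixed random weights: neither matrix is ever trained.
W_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
W = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
# Rescale so the largest eigenvalue magnitude (spectral radius) is 0.9.
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))


def step(state, u):
    """Advance the reservoir one time step for input vector u."""
    return np.tanh(W_in @ u + W @ state)


def run_reservoir(inputs):
    """Drive the reservoir with a sequence and collect every state."""
    state = np.zeros(n_reservoir)
    states = []
    for u in inputs:
        state = step(state, np.atleast_1d(u))
        states.append(state)
    return np.array(states)


signal = np.sin(np.linspace(0, 8 * np.pi, 200))
states = run_reservoir(signal)  # one row of 50 state values per time step
```

Each row of `states` is the reservoir's "soaking pattern" for that moment: a nonlinear blend of the current input and everything that came shortly before it.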

Training the Output

Here's where the unique part comes in. You don’t train the reservoir; you train how to read the output it gives you. It’s like learning to understand a foreign accent rather than teaching everyone to speak in a new way.
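As a rough illustration of what "training the output" can look like, the sketch below fits a linear readout with ridge regression on top of a fixed random reservoir. The toy task (predicting the next value of a sine wave), the reservoir size, the washout length, and the ridge strength are all assumptions chosen for the example; `collect_states` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(42)
n_reservoir = 100  # illustrative size

# Fixed random reservoir; these weights are never updated.
W_in = rng.uniform(-0.5, 0.5, size=n_reservoir)
W = rng.normal(size=(n_reservoir, n_reservoir))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))


def collect_states(series):
    """Run the reservoir over a 1-D series and return all states."""
    state = np.zeros(n_reservoir)
    states = np.empty((len(series), n_reservoir))
    for t, u in enumerate(series):
        state = np.tanh(W_in * u + W @ state)
        states[t] = state
    return states


# Toy task: given the series up to time t, predict its value at t + 1.
series = np.sin(0.2 * np.arange(1001))
inputs, targets = series[:-1], series[1:]
X = collect_states(inputs)

washout, split = 100, 800  # skip early states, then train/test split
X_train, y_train = X[washout:split], targets[washout:split]
X_test, y_test = X[split:], targets[split:]

# Ridge regression in closed form: the only training step in the model.
ridge = 1e-6
A = X_train.T @ X_train + ridge * np.eye(n_reservoir)
W_out = np.linalg.solve(A, X_train.T @ y_train)

test_mse = np.mean((X_test @ W_out - y_test) ** 2)
```

Notice that the whole "learning" step is one linear solve for `W_out`. There is no backpropagation through time, which is where most of the training savings come from.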

Real-Time Processing

One of the cool tricks up its sleeve is handling data in real time, making quick decisions without having to pause and digest all the information in one go.

Adaptability

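Reservoir computing systems can adapt to new data as it comes. The reservoir itself stays fixed, but its state always reflects the most recent inputs, and the output weights can be updated incrementally as new examples arrive. It’s always learning, in a way, without retraining from scratch.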
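One way to get this kind of on-the-fly adjustment is to update the readout with recursive least squares (RLS) after every sample. The sketch below is a minimal example under assumed settings: the forgetting factor, the initial scale of `P`, and the toy sine-wave task are all illustrative choices, and RLS is only one of several online update rules that could be used here.

```python
import numpy as np

rng = np.random.default_rng(1)
n_reservoir = 50  # illustrative size

# Fixed random reservoir (never trained).
W_in = rng.uniform(-0.5, 0.5, size=n_reservoir)
W = rng.normal(size=(n_reservoir, n_reservoir))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))

series = np.sin(0.2 * np.arange(2001))  # toy data stream

state = np.zeros(n_reservoir)
w_out = np.zeros(n_reservoir)       # readout weights, learned online
P = np.eye(n_reservoir) * 100.0     # running inverse-correlation estimate
forgetting = 0.999                  # just below 1 so old samples slowly fade
squared_errors = []

for t in range(len(series) - 1):
    state = np.tanh(W_in * series[t] + W @ state)  # reservoir update
    error = series[t + 1] - w_out @ state          # error before this update

    # Standard RLS update of the readout weights.
    Px = P @ state
    gain = Px / (forgetting + state @ Px)
    w_out = w_out + gain * error
    P = (P - np.outer(gain, Px)) / forgetting

    squared_errors.append(error ** 2)

early_mse = np.mean(squared_errors[:100])
late_mse = np.mean(squared_errors[-100:])
```

Comparing `early_mse` with `late_mse` shows the readout improving as the stream goes on, even though the reservoir weights never change.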

Where can it be used?

While we touched on applications earlier, let’s dive deeper into where reservoir computing really shines.

Predictive Analytics

Whether the target is the stock market, weather patterns, or electricity consumption, reservoir computing can crunch historical data and help make informed predictions.

Pattern Recognition

Identifying patterns in vast datasets—like spotting a familiar face in a crowd or understanding speech in a noisy room—is a forte of reservoir computing.

Signal Processing

When dealing with real-time streaming data, such as audio signals or brain waves, reservoir computing can process and make sense of the information on the fly.

Robotics

Robots have to make sense of sensor data to navigate or manipulate objects, and reservoir computing offers an efficient way to process that information.

Anomaly Detection

Spotting the odd one out in a dataset, be it a fraudulent transaction among thousands of legitimate ones or a failing component in a machine, is another job where reservoir computing can help.

Challenges of Reservoir Computing

No technology is perfect, and reservoir computing has its own set of challenges to tackle.

Hardware Limitations

Though efficient, reservoir computing still leans on hardware capabilities. As tasks get more complex, the system’s ability to keep up without significant hardware investment can be a challenge.

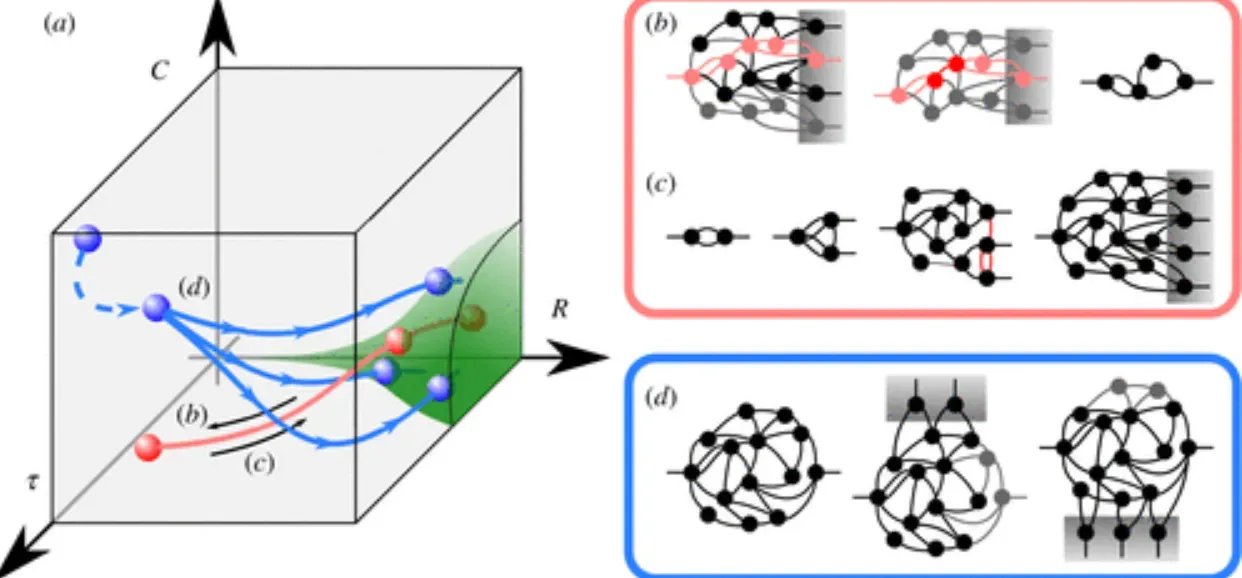

Complexity of Design

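Designing and setting up the reservoir can be more art than science. Choices such as how big the reservoir is, how densely it is connected, and how strongly its connections are scaled all affect how well it works. Finding the right balance so that the system can effectively process the intended data types requires experimentation and expertise.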
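One design knob that comes up a lot in echo state networks is the spectral radius of the recurrent weight matrix, meaning the largest absolute eigenvalue. The sketch below rescales a sparse random matrix to a chosen spectral radius and then checks that the reservoir "forgets" its starting state. The density, the target value of 0.9, and the helper name `scale_to_spectral_radius` are assumptions for illustration. Keeping the radius below 1 is a widely used rule of thumb rather than a guarantee.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 100          # reservoir size (illustrative)
density = 0.1    # fraction of non-zero recurrent connections (illustrative)

# Sparse random recurrent weights: most entries are masked to zero.
W = rng.normal(size=(n, n)) * (rng.random((n, n)) < density)


def scale_to_spectral_radius(weights, target):
    """Rescale weights so the largest |eigenvalue| equals target."""
    radius = np.max(np.abs(np.linalg.eigvals(weights)))
    return weights * (target / radius)


W = scale_to_spectral_radius(W, 0.9)
W_in = rng.uniform(-1, 1, size=n)

# Fading-memory check: start from two different states, feed both the
# same input sequence, and see whether the states end up together.
a = rng.uniform(-1, 1, size=n)
b = rng.uniform(-1, 1, size=n)
for u in rng.uniform(-1, 1, size=300):
    a = np.tanh(W_in * u + W @ a)
    b = np.tanh(W_in * u + W @ b)

gap = np.linalg.norm(a - b)  # a small gap means the start was forgotten
```

If `gap` stays large, the reservoir is remembering its arbitrary starting point rather than the input, which is usually a sign that the scaling needs another look.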

Interpretability

Understanding how a reservoir computing system comes to its conclusions is not always straightforward. This "black box" nature can make some stakeholders hesitant, as they can't easily see the logic behind decisions.

Data Quality

Garbage in, garbage out. The quality of the input data matters a lot. While reservoir computing is robust against noise, overly poor or irrelevant data can still throw it off course.

Lack of Awareness

Reservoir computing is not as widely known or understood as other AI or machine learning methods. This lack of awareness can make it harder to adopt or find expertise in.

Best Practices of Reservoir Computing

Adopting some smart strategies can help make implementing reservoir computing a smoother journey.

Start with Clear Objectives

Know what you’re trying to achieve. Having a clear goal helps in designing the system and measuring its success.

Focus on Data Quality

Ensure your input data is as clean and relevant as possible. Investing time in data preprocessing can pay off in the effectiveness of the reservoir computing system.

Experimentation

Be prepared to experiment. Finding the right configuration for the reservoir takes trial and error. Embrace the process.
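In practice, "trial and error" often means a small, systematic search. The sketch below tries a few spectral radius and ridge values on a toy signal and keeps the pair with the lowest validation error. Everything here is an assumption made for the example: the function `esn_validation_mse` is hypothetical, and the grid values, the split points, and the signal were picked only to keep it short.

```python
import itertools

import numpy as np


def esn_validation_mse(series, spectral_radius, ridge, n_reservoir=80, seed=0):
    """Train a small echo state network and return its validation MSE."""
    rng = np.random.default_rng(seed)  # same random reservoir for every config
    W_in = rng.uniform(-0.5, 0.5, size=n_reservoir)
    W = rng.normal(size=(n_reservoir, n_reservoir))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))

    # Collect reservoir states for next-step prediction.
    state = np.zeros(n_reservoir)
    states = np.empty((len(series) - 1, n_reservoir))
    for t in range(len(series) - 1):
        state = np.tanh(W_in * series[t] + W @ state)
        states[t] = state
    targets = series[1:]

    washout, split = 100, 700  # discard warm-up, then train/validation split
    X_tr, y_tr = states[washout:split], targets[washout:split]
    X_va, y_va = states[split:], targets[split:]

    # Ridge-regression readout fitted on the training part only.
    A = X_tr.T @ X_tr + ridge * np.eye(n_reservoir)
    W_out = np.linalg.solve(A, X_tr.T @ y_tr)
    return float(np.mean((X_va @ W_out - y_va) ** 2))


t = np.arange(1000)
series = np.sin(0.2 * t) * np.sin(0.031 * t)  # toy signal, purely illustrative

grid = itertools.product([0.5, 0.9, 1.2], [1e-6, 1e-4, 1e-2])
results = {(rho, lam): esn_validation_mse(series, rho, lam) for rho, lam in grid}
best = min(results, key=results.get)  # (spectral_radius, ridge) with lowest MSE
```

Scoring on data the readout never saw during fitting is the important part. The best settings on this toy signal say nothing about what will work on real data.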

Interdisciplinary Collaboration

Collaborating across different fields can provide new insights and approaches to using reservoir computing, broadening its applications and effectiveness.

Keep Up with Research

The field is evolving. New discoveries and methodologies can provide solutions to existing challenges, so staying informed is key.

Trends of Reservoir Computing

Reservoir computing is on the move, with exciting trends shaping its future.

Quantum Reservoir Computing

Combining reservoir computing with quantum computing is an active research direction. The hope is that quantum systems could serve as very rich reservoirs, opening up fascinating possibilities for handling even more complex data.

Energy-Efficient Computing

In our increasingly eco-conscious world, the efficiency of reservoir computing makes it a candidate for green computing initiatives, aiming to reduce the energy footprint of data processing.

Integration with Neuroscience

Understanding how the brain works can inspire new approaches in reservoir computing, potentially leading to breakthroughs in artificial brain-like computing systems.

Edge Computing

Deploying reservoir computing on the edge—closer to where data is generated (like in IoT devices)—can enhance real-time data processing capabilities.

Expanding Applications

As awareness grows, we’ll likely see reservoir computing being applied in new fields, from smart cities and autonomous vehicles to personalized medicine and beyond.

Frequently Asked Questions (FAQs)

How does reservoir computing differ from traditional neural networks?

Reservoir computing features a dynamic "reservoir" layer that isn't trained, simplifying the training process and making it efficient for temporal data processing.

What types of problems is reservoir computing particularly good at?

It excels at time series prediction, signal processing, and complex dynamic pattern recognition, where temporal dynamics are crucial.

Can reservoir computing operate with unstructured data?

Yes, as long as the data can be encoded as a sequence of numerical inputs. It is best suited to unstructured or semi-structured data with a temporal component, such as audio or sensor streams.

How energy efficient is reservoir computing?

Training is typically cheaper than for deep learning methods trained end to end, because only the output weights are fitted. Actual energy use still depends on the reservoir size and the hardware it runs on.

Is specialized hardware required for reservoir computing?

No specialized hardware is required, but some implementations, such as photonic reservoir computing, can leverage specific hardware for performance benefits.