Introduction

Over the last decade, natural language processing (NLP) has exploded in capability and popularity - fueled by computational power increases, troves of text data, and innovators open-sourcing bleeding-edge libraries.

As global NLP markets balloon towards $130 billion by 2028 according to Reports and Data, developer-friendly libraries like SpaCy and NLTK provide accessible gateways to capitalize on growth.

So what underlying capabilities explain SpaCy overtaking longtime incumbent NLTK? Speed and scale. SpaCy’s industrial-strength models blaze through text processing workloads up to 20x faster while integrating seamlessly with pandas DataFrames and numpy arrays for production-grade data pipelines.

For many use cases, both toolkits can accomplish NLP fundamentals. But understanding each library's key advantages makes it easier to match a tool to your organization's needs. Read on for a breakdown of when to reach for NLTK and when to reach for SpaCy.

Overview of Spacy and NLTK

- Spacy: This powerful and efficient NLP library has gained immense popularity for its high performance and wide range of features. It focuses on providing accurate and fast NLP processing capabilities while being developer-friendly.

- NLTK: The Natural Language Toolkit, known as NLTK, is another widely used NLP library. It offers a comprehensive set of tools and resources for NLP, including corpora, tokenizers, stemmers, and parsers. NLTK is well-loved for its educational focus and extensive documentation.

Comparison of Spacy and NLTK

In terms of performance, ease of use, and features, Spacy and NLTK have distinct advantages. Let's take a closer look:

Performance

Spacy is known for its speed and efficiency. It is built with optimization in mind, allowing it to process large amounts of text in a shorter time. NLTK, on the other hand, may be slower when handling larger datasets due to its design philosophy of offering a wide range of features and options.
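
One way Spacy handles large volumes of text is by streaming documents through the pipeline in batches. The following is a minimal sketch, assuming Spacy v3 is installed; the sample texts and the batch size are made up for illustration, and a tokenizer-only pipeline is used so that no model download is needed.

```python
import spacy

# A blank English pipeline contains only the tokenizer, so no model download is needed.
nlp = spacy.blank("en")

# Hypothetical sample data standing in for a large corpus.
texts = [f"Document number {i} talks about language processing." for i in range(1000)]

# nlp.pipe streams texts through the pipeline in batches instead of one call per text.
docs = list(nlp.pipe(texts, batch_size=200))
token_count = sum(len(doc) for doc in docs)

print(f"Processed {len(docs)} documents containing {token_count} tokens")
```

With a full pre-trained pipeline the same nlp.pipe call applies; the best batch size depends on the hardware and the length of the texts.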

Ease of Use

Spacy shines in terms of its user-friendly API design and ease of integration with other tools. Its well-documented API provides clear and concise functions, simplifying the development process. NLTK is more focused on being educational and flexible but may require more effort for beginners to get started.

Features

Both Spacy and NLTK offer a rich set of features. However, Spacy has gained a reputation for its advanced functionality in named entity recognition, dependency parsing, and part-of-speech tagging. NLTK, on the other hand, provides a broader range of NLP tools and resources, making it a great choice for exploring different NLP techniques.

Spacy: A Powerful NLP Library

Spacy is a robust and efficient library for NLP tasks. It boasts high performance, thanks to its optimized processing pipelines and highly accurate pre-trained models. Its main focus is on providing industrial-strength NLP capabilities.

Overview of Spacy and its capabilities

Spacy offers a wide range of NLP functionalities, including tokenization, named entity recognition, part-of-speech tagging, parsing, and text classification. It excels in handling complex linguistic features and has specific capabilities for deep learning integration.

Key features that make Spacy a popular choice for NLP tasks

- Efficiency: Spacy's optimized pipelines and models ensure fast and memory-efficient text processing.

- Accuracy: The pre-trained models in Spacy have been trained on large corpora to provide accurate results out of the box.

- Industrial-strength NLP: Spacy focuses on serving commercial NLP needs, making it a preferred choice for businesses and research institutions.

Compatibility with different programming languages

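Spacy is a Python library, implemented in Python and Cython, and Python is the only language with an official Spacy API. Applications written in other languages, such as Java or JavaScript, typically reach it indirectly, for example by calling a web service that wraps the Python library.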

Spacy Installation and Setup

If you're ready to start with Spacy, here's a step-by-step guide for installing and setting it up:

- Install Spacy using pip: pip install spacy

- Download the required language model: python -m spacy download en_core_web_sm

- Verify the installation by importing Spacy in your Python environment: import spacy
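
The verification step above can be sketched as a short script. It assumes Spacy v3 and uses spacy.blank, which builds a tokenizer-only pipeline, so it runs even before a model has been downloaded. The sample sentence is arbitrary.

```python
import spacy

print("spaCy version:", spacy.__version__)

# spacy.blank builds a tokenizer-only English pipeline without loading a downloaded model.
nlp = spacy.blank("en")
doc = nlp("Spacy is installed and ready.")
tokens = [token.text for token in doc]
print(tokens)

# Once a model is downloaded, it would be loaded by name instead:
# nlp = spacy.load("en_core_web_sm")
```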

Tips and best practices for configuring Spacy

- Consider using Spacy's language-specific models to improve accuracy and performance for specific languages.

- Leverage Spacy's language processing pipelines and pre-trained models to quickly build NLP applications.

- Keep your Spacy library and models up to date by regularly updating them to newer versions.

By following these steps and recommendations, you'll be on your way to utilizing the power of Spacy for your NLP tasks.

Remember, Spacy is constantly evolving, so keep an eye on its documentation, community updates, and resources to stay up to date with the latest developments and best practices. Happy NLP-ing!

Spacy NLP Pipeline

Spacy's NLP pipeline is a well-designed and efficient system that processes text through a series of stages, each responsible for a specific linguistic processing task. Let's dive into the details of Spacy's pipeline and its components.

Understanding Spacy's NLP pipeline and its components

Spacy's pipeline consists of various components, such as tokenization, part-of-speech tagging, dependency parsing, and named entity recognition. Each component adds valuable linguistic annotations to the processed text.

Exploring the different stages of Spacy's pipeline

The pipeline stages in Spacy can be customized according to specific NLP tasks. By default, Spacy runs through the following stages:

- Tokenizer: This stage breaks the text into individual tokens (words, punctuation, etc.), creating a foundation for subsequent processing.

- Part-of-speech (POS) tagging: POS tagging assigns grammatical information to each token, such as whether it is a noun, verb, or adjective. This stage helps in understanding the syntactic structure of the text.

- Dependency parsing: Dependency parsing analyzes the grammatical relationships between tokens, providing a detailed understanding of how words are connected within a sentence.

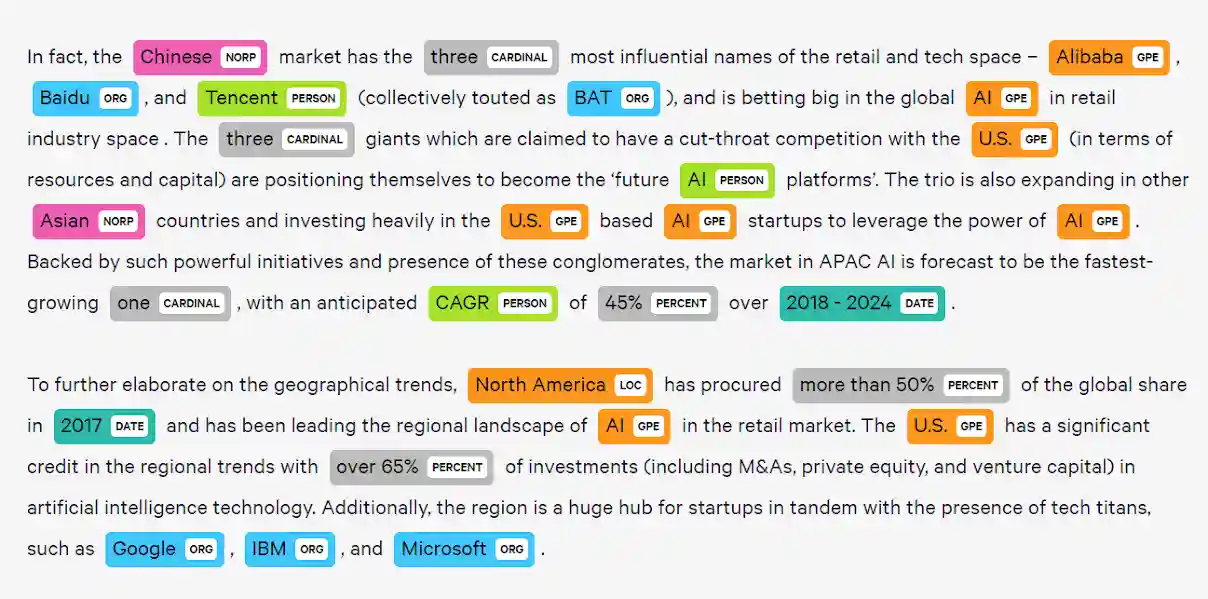

- Named Entity Recognition (NER): NER identifies and classifies named entities in text, such as people, organizations, and locations. It plays a vital role in information extraction and understanding the meaning of the text.

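- Text Classification: Spacy also supports text classification, which assigns predefined categories or labels to pieces of text based on their content. Unlike the stages above, this component is not included in the standard pre-trained pipelines and has to be added and trained separately.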

Customizing the pipeline for specific NLP tasks

Spacy allows customization of the pipeline by disabling or adding components as needed. This flexibility enables users to fine-tune the processing and focus on specific linguistic features relevant to their NLP task.

For example, if your task doesn't require POS tagging or dependency parsing, you can disable these components to speed up processing time. On the other hand, you can add additional components to further enhance the pipeline's capabilities, such as custom named entity recognition models.
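
Adding and disabling components might look like the sketch below. It assumes Spacy v3; the component name token_counter is hypothetical and exists only for this illustration. A blank pipeline is used so the example runs without a downloaded model.

```python
import spacy
from spacy.language import Language


@Language.component("token_counter")  # hypothetical component name
def token_counter(doc):
    # A custom component receives a Doc, may annotate it, and must return it.
    doc.user_data["n_tokens"] = len(doc)
    return doc


nlp = spacy.blank("en")                    # tokenizer only
nlp.add_pipe("sentencizer")                # built-in rule-based sentence splitter
nlp.add_pipe("token_counter", last=True)   # custom component added at the end
print(nlp.pipe_names)

# Temporarily switch a component off; it is restored when the block exits.
with nlp.select_pipes(disable=["token_counter"]):
    active_inside = list(nlp.pipe_names)
    doc = nlp("First sentence. Second sentence.")
    n_sentences = len(list(doc.sents))

print(active_inside, n_sentences)
```

When loading a pre-trained pipeline, components can also be skipped up front, for example spacy.load("en_core_web_sm", disable=["parser"]).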

Entity Recognition with Spacy

Named Entity Recognition (NER) is a crucial NLP task that involves identifying and classifying named entities in text. These entities can be people, organizations, locations, dates, or any other information of interest. NER is widely used in applications like information extraction, question-answering systems, and sentiment analysis.

Techniques for performing entity recognition using Spacy

Spacy offers pre-trained models for named entity recognition, making it easy to get started. You can use these models or train custom models based on your specific requirements. The pre-trained NER component is statistical; rule-based matching is available through a separate entity ruler component, and the two approaches can be combined in one pipeline.

To perform entity recognition using Spacy, follow these steps:

- Load the pre-trained Spacy model: nlp = spacy.load('en_core_web_sm')

- Process the text through the pipeline: doc = nlp(text)

- Access the named entities recognized in the text: entities = doc.ents
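
The steps above can be put together as in the following sketch. The helper name extract_entities and the sample sentence are made up for illustration. If en_core_web_sm is not installed, the sketch falls back to a small rule-based entity ruler with two hand-written patterns, purely so that it still runs; this fallback is an assumption of the example, not part of the steps.

```python
import spacy


def extract_entities(nlp, text):
    """Return (text, label) pairs for every entity the pipeline finds."""
    doc = nlp(text)
    return [(ent.text, ent.label_) for ent in doc.ents]


try:
    # Requires: python -m spacy download en_core_web_sm
    nlp = spacy.load("en_core_web_sm")
except OSError:
    # Fallback so the sketch runs without the model: a rule-based
    # entity_ruler with two hand-written (hypothetical) patterns.
    nlp = spacy.blank("en")
    ruler = nlp.add_pipe("entity_ruler")
    ruler.add_patterns([
        {"label": "ORG", "pattern": "Apple"},
        {"label": "GPE", "pattern": "London"},
    ])

entities = extract_entities(nlp, "Apple is opening a new office in London.")
for ent_text, ent_label in entities:
    print(ent_text, ent_label)
```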

Evaluating and fine-tuning entity recognition models in Spacy

Spacy allows you to evaluate the performance of its named entity recognition models using metrics such as precision, recall, and F1 score. By analyzing the model's performance, you can fine-tune it by providing additional training data or modifying the model's parameters. This iterative process helps in improving the accuracy and effectiveness of your entity recognition models.
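
To make the metrics concrete, here is a minimal sketch of how precision, recall, and F1 can be computed over exact-match entity spans. It is plain Python and does not use Spacy's own scorer; the function name ner_scores and the annotations are hypothetical.

```python
def ner_scores(gold, predicted):
    """Compute precision, recall, and F1 over exact-match entity spans.

    Each entity is a (start_char, end_char, label) tuple.
    """
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical annotations for "Apple is opening a new office in London."
gold = [(0, 5, "ORG"), (33, 39, "GPE")]
predicted = [(0, 5, "ORG"), (33, 39, "LOC")]  # right span, wrong label

precision, recall, f1 = ner_scores(gold, predicted)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

In practice, Spacy can report these scores itself, for example through nlp.evaluate or the python -m spacy evaluate command.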

Showcasing real-world examples of entity recognition using Spacy

Let's look at some real-world examples where entity recognition shines:

- News article analysis: Entity recognition helps in automatically extracting key information like names of people, organizations, and locations mentioned in news articles.

- Social media monitoring: By identifying named entities in social media posts, businesses can gain insights into customer sentiment, discover trending topics, and track mentions of their brand.

- Legal document analysis: Entity recognition assists in extracting entities such as case names, organizations, and relevant dates from legal documents, making it easier for lawyers and researchers to navigate and summarize information.

Spacy's robust and accurate entity recognition capabilities make it a valuable tool for a wide range of applications.

NLTK: A Versatile NLP Library

Natural Language Toolkit (NLTK) is a widely used open-source platform that offers a suite of tools and resources for NLP tasks. This versatile library has been instrumental in driving advancements in various fields, including linguistics, computer science, and artificial intelligence.

Key features and functionalities of NLTK for NLP tasks

NLTK's comprehensive set of features and functionalities is valuable for anyone working on NLP tasks. Among its most notable capabilities are its user-friendly interface, rich set of text processing tools, and extensive documentation.

Moreover, NLTK can be used alongside other NLP libraries such as SpaCy and Gensim in the same Python project, which can be beneficial for developers working on complex NLP problems.

Compatibility with Different Programming Languages

NLTK is written in Python, which is a popular programming language used in NLP applications. While Python is the primary language used for NLTK, some of its modules wrap external tools written in other languages, such as the Java-based Stanford NLP tools.

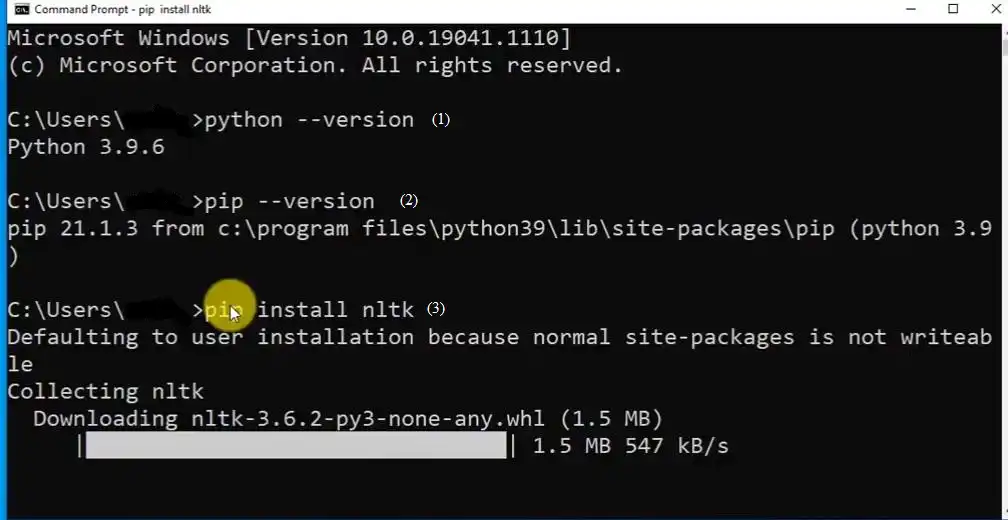

NLTK Installation and Setup

Installing NLTK is simple and can be done via pip, the Python package installer. Here are the steps to install NLTK:

- Open your command prompt or terminal.

- Type pip install nltk and hit enter to install NLTK.

- After installation, download the required text corpora and resources by running the following in a Python session: import nltk, then nltk.download()

- Follow the on-screen instructions and select the specific corpora and resources you require.
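
As an alternative to the interactive downloader, resources can be fetched by name from a script. The sketch below assumes network access; the two resource names are only examples, so substitute the ones your project needs.

```python
import nltk

print("NLTK version:", nltk.__version__)

# Fetch named resources without opening the interactive downloader.
# The resource names here are examples; pick the ones your project needs.
results = {}
for resource in ("stopwords", "wordnet"):
    # nltk.download returns True on success and False if the download failed
    # (for example, when there is no network connection).
    results[resource] = nltk.download(resource, quiet=True)

print(results)
```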

Text Corpora and Lexical Resources in NLTK

NLTK offers a vast variety of built-in text corpora and lexical resources to work with. Learning to navigate and utilize these built-in resources is essential for NLP developers working with NLTK.

Understanding NLTK's built-in text corpora and lexical resources

NLTK offers pre-existing datasets that can be used for tasks such as sentiment analysis, text classification and summarization, part-of-speech tagging, and more. These datasets include the Gutenberg Corpus, Brown Corpus, and Reuters Corpus.

Moreover, NLTK offers a range of lexical resources, which include WordNet, FrameNet, and VerbNet. These resources are used to identify synonyms, hypernyms, and other semantic relationships between words.

Adding custom text corpora to NLTK

Developers can also add custom text corpora to NLTK using simple steps. First, the corpus must be prepared and made available in the required format. Then, use the NLTK library to import the custom corpus, making it accessible for subsequent processing.
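
For plain-text files, one way to do this is NLTK's PlaintextCorpusReader. The sketch below writes a hypothetical two-file corpus to a temporary directory and reads it back; the file names and contents are invented for the example.

```python
import tempfile
from pathlib import Path

from nltk.corpus.reader import PlaintextCorpusReader

# Hypothetical two-file corpus written to a temporary directory for the demo.
with tempfile.TemporaryDirectory() as corpus_dir:
    Path(corpus_dir, "a.txt").write_text("NLTK reads plain text files.", encoding="utf-8")
    Path(corpus_dir, "b.txt").write_text("Each file becomes one document.", encoding="utf-8")

    # The second argument is a regular expression selecting the corpus files.
    reader = PlaintextCorpusReader(corpus_dir, r".*\.txt")

    # Corpus views are lazy, so materialize them while the files still exist.
    file_ids = sorted(reader.fileids())
    words = list(reader.words("a.txt"))

print(file_ids)
print(words)
```

Word access works without extra downloads because the reader's default word tokenizer is regex-based; sentence access through reader.sents() additionally needs NLTK's sentence tokenizer data.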

Text Preprocessing and Tokenization with NLTK

Text preprocessing and tokenization are crucial steps in NLP tasks because they lay the groundwork for more advanced processing steps. NLTK offers a range of techniques for preprocessing and cleaning text, including stemming, stop word removal, and noise removal.
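
A minimal preprocessing sketch combining noise removal, stop word removal, and stemming could look like this. The small stop word set is an assumption made so the example runs without downloads; NLTK's full list is available through nltk.corpus.stopwords once the stopwords resource is installed.

```python
import re

from nltk.stem import PorterStemmer

# Tiny illustrative stop word list (an assumption for this sketch).
STOP_WORDS = {"the", "is", "are", "a", "an", "and", "of", "in"}

stemmer = PorterStemmer()


def preprocess(text):
    # Noise removal: lowercase the text and keep only alphabetic runs.
    words = re.findall(r"[a-z]+", text.lower())
    # Stop word removal followed by stemming.
    return [stemmer.stem(word) for word in words if word not in STOP_WORDS]


cleaned = preprocess("The cats are running in the gardens!!!")
print(cleaned)  # ['cat', 'run', 'garden']
```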

Various tokenization methods available in NLTK

Tokenization is the process of segmenting text into individual tokens or words. NLTK provides several tokenization methods that are effective for different kinds of text.

The most popular tokenization algorithms in NLTK include TreebankWordTokenizer and WordPunctTokenizer. Developers can choose the appropriate tokenizer based on specific requirements, such as preserving punctuation or handling contractions.
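
The difference between these two tokenizers shows up on a contraction. The following sketch uses an arbitrary sample sentence; neither tokenizer needs downloaded data.

```python
from nltk.tokenize import TreebankWordTokenizer, WordPunctTokenizer

text = "Don't stop."

# Treebank conventions split contractions into linguistically motivated pieces.
treebank_tokens = TreebankWordTokenizer().tokenize(text)

# WordPunctTokenizer uses a regular expression that separates every run of punctuation.
wordpunct_tokens = WordPunctTokenizer().tokenize(text)

print(treebank_tokens)   # ['Do', "n't", 'stop', '.']
print(wordpunct_tokens)  # ['Don', "'", 't', 'stop', '.']
```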

Which NLP Library Should You Choose?

When it comes to Natural Language Processing (NLP), there are several tools and libraries available to assist you. Two popular ones are Spacy and NLTK (Natural Language Toolkit). Each has its own unique features and strengths, making it essential to understand their use cases and factors to consider when choosing one over the other.

Use Cases for Spacy

Spacy is a Python library that focuses on efficiency, accuracy, and ease of use. It provides robust capabilities for various NLP tasks, including:

Named Entity Recognition (NER)

Spacy shines in extracting and classifying named entities such as names, organizations, locations, and more. Its pre-trained models ensure accurate entity recognition, thereby saving you time and effort.

Part-of-speech (POS) Tagging

Spacy allows you to determine the grammatical components of a sentence, such as nouns, verbs, adjectives, and more. This information is invaluable when performing syntactic analysis or semantic parsing.

Dependency Parsing

Through dependency parsing, Spacy reveals the hierarchical structure and relationships between words in a sentence. This can be particularly useful for tasks like information extraction, sentiment analysis, and more.

Text Classification

Spacy provides a straightforward way to train and deploy text classification models, enabling you to categorize documents based on topics, sentiment, or any other custom labels you require.

Use Cases for NLTK

NLTK is a comprehensive library for NLP tasks, designed to be educational and research-friendly. Its broad range of functionalities includes:

Tokenization

NLTK provides tools to split text into individual words or sentences, which is fundamental for various downstream tasks.

Sentiment Analysis

With bundled tools such as the lexicon-based VADER analyzer, NLTK enables you to determine the sentiment expressed in a piece of text, be it positive, negative, or neutral.

Language Processing

NLTK includes resources for language processing tasks such as stemming, lemmatization, and morphological analysis. These features are useful for normalizing text and reducing word variations.

Corpus and Dataset Management

NLTK offers various built-in datasets and corpora, making it easy to access and experiment with pre-processed text data for research or learning purposes.

Factors to Consider

When choosing between Spacy and NLTK, several factors should be taken into account:

- Performance and Efficiency: Spacy is known for its speed and efficiency, making it an excellent choice for large-scale NLP tasks. NLTK, on the other hand, may be more suitable for smaller datasets or research-focused projects.

- Ease of Use: Spacy is designed to provide a user-friendly experience, with well-documented APIs and easy-to-understand code examples. NLTK, although feature-rich, may have a steeper learning curve.

- Community and Support: Spacy benefits from an active and growing community, with regular updates and contributions. NLTK, being an older library, has a large and established user base, along with extensive documentation and resources.

Conclusion

In closing, both NLTK and SpaCy deliver versatile open-source Python libraries for tapping into the immense power of natural language processing - fueled by over a decade of development by dedicated communities. While NLTK offers a more academic toolkit oriented towards fundamentals, SpaCy provides industrial-grade capabilities for scaling state-of-the-art NLP initiatives.

For many projects, either library can accomplish the needed preprocessing, modeling, and analysis. But understanding key factors like processing speeds, scalability needs, and production requirements helps determine optimal tooling. According to the 2022 KDnuggets survey, over 60% of developers now opt for SpaCy over NLTK - a nearly 10% flip in just two years. This preference gain highlights SpaCy’s blistering speeds, robust capabilities, and seamless integration with data science stacks.

Yet as global natural language AI markets expand beyond $120 billion by 2028, as Reports and Data predicts, both tools will continue advancing considerably thanks to dedicated steward communities. Already, connectivity plugins like nltk2spacy simplify combining strengths when needed for bespoke workflows. The boomtimes are only beginning!

In the end, matching organizational maturity to library strengths maximizes productivity and impact. For academics and early NLP education, NLTK still reigns supreme. But for enterprises intent on extracting production value from natural language AI at scale, SpaCy delivers the performance and functionality needed for the future. Let your ambitions guide tool selection, then blaze new trails with Python’s NLP vanguard!

Frequently Asked Questions (FAQs)

What are the main differences between Spacy and NLTK?

Spacy is known for its efficient processing speed and pre-trained models, while NLTK is more focused on providing a wide range of NLP tools and resources. Choose based on your specific needs.

Can both Spacy and NLTK handle common NLP tasks?

Yes, both libraries are capable of handling common NLP tasks such as tokenization, part-of-speech tagging, named entity recognition, and more. However, the implementation and performance may differ slightly.

Is Spacy easier to use compared to NLTK?

Spacy is often considered more user-friendly due to its consistent API and intuitive documentation. It provides a streamlined approach to NLP tasks, making it easier for beginners to get started.

Which library is more suitable for research purposes?

NLTK is widely used for research and education purposes due to its vast collection of linguistic resources and modular design. It allows for more flexibility and experimentation in NLP research.

Can I use both Spacy and NLTK together in my projects?

Absolutely! Spacy and NLTK complement each other well. You can leverage Spacy's efficient processing and pre-trained models alongside NLTK's extensive NLP tools to create powerful and accurate NLP pipelines.
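
As a small illustration of combining the two, the sketch below tokenizes with Spacy and stems with NLTK. The helper name stem_tokens and the sample sentence are hypothetical; a blank Spacy pipeline is used so no model download is required.

```python
import spacy
from nltk.stem import PorterStemmer

nlp = spacy.blank("en")      # Spacy tokenizer only, no model download
stemmer = PorterStemmer()    # NLTK stemmer, needs no extra data


def stem_tokens(text):
    """Tokenize with Spacy, then stem each alphabetic token with NLTK."""
    doc = nlp(text)
    return [(token.text, stemmer.stem(token.text)) for token in doc if token.is_alpha]


pairs = stem_tokens("Researchers were testing several parsers.")
print(pairs)
```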