Natural language processing is being transformed by advanced large language models like GPT-3. These foundations enable unprecedented text generation, comprehension, and summarization.

According to OpenAI, GPT-3 has 175 billion parameters, about 10x more than any previous non-sparse language model at the time of its release. To leverage these innovations, spaCy's developers introduced spacy-llm, a package that integrates LLMs from providers like OpenAI into spaCy pipelines.

spaCy's pipeline architecture makes combining statistical NLP with LLMs simple. Developers can now enrich linguistic analysis, entity extraction, sentiment detection and more using a unified framework. This provides the benefits of both rule-based logic and learned patterns from massive data.

LLM-backed components can reach useful accuracy with few or no training examples, although supervised models trained on task-specific data are often still more accurate and cheaper to run. And the unified approach reduces the fragility caused by chaining separate APIs.

It's evident that spaCy's LLM integration empowers developers to quickly apply state-of-the-art language intelligence to drive innovation and boost capabilities.

Read on to learn more about using large language models with spaCy LLM.

What is spaCy LLM?

Language modeling is the backbone of many natural language processing tasks, and spaCy LLM is here to revolutionize the way we approach it. In this section, we'll dive into the details of what spaCy LLM is all about.

Understanding spaCy LLM

spaCy LLM refers to spacy-llm, a package that integrates large language models (LLMs) into spaCy pipelines. It is built on top of the popular spaCy library, known for its efficiency and simplicity.

spaCy LLM takes advantage of spaCy's robust features and combines them with advanced language modeling techniques to provide developers and researchers with a powerful tool.

Key Features of spaCy LLM

Here are the key features of spaCy LLM:

- Efficiency: spaCy LLM is designed to be fast and efficient, allowing you to process large amounts of text with ease.

- Flexibility: With spaCy LLM, you can swap models and customize tasks and prompts according to your specific needs.

- Extensibility: spaCy LLM seamlessly integrates with other spaCy components, making it easy to incorporate into your existing NLP pipelines.

- State-of-the-art Models: spaCy LLM can connect to hosted models such as OpenAI's as well as open-source models, so your pipeline can use current LLMs without custom glue code.

Why Choose spaCy LLM?

Here are some reasons to consider spaCy LLM:

Performance Improvements

spaCy LLM offers significant performance improvements over traditional language modeling frameworks. By leveraging spaCy's efficient implementation and optimized algorithms, spaCy LLM can process text faster and more accurately, saving you valuable time and resources.

Efficiency Gains

With spaCy LLM, you can prototype and deploy NLP components quickly, often without collecting training data first. The framework provides streamlined workflows, allowing you to focus on your NLP tasks without getting bogged down by complex setups or training procedures.

Flexibility for Various NLP Tasks

spaCy LLM is not limited to a specific NLP task. Whether you're working on text generation, sentiment analysis, named entity recognition, or any other language-related task, spaCy LLM can adapt and deliver impressive results.

How Does spaCy LLM Work?

Here's how spaCy LLM works:

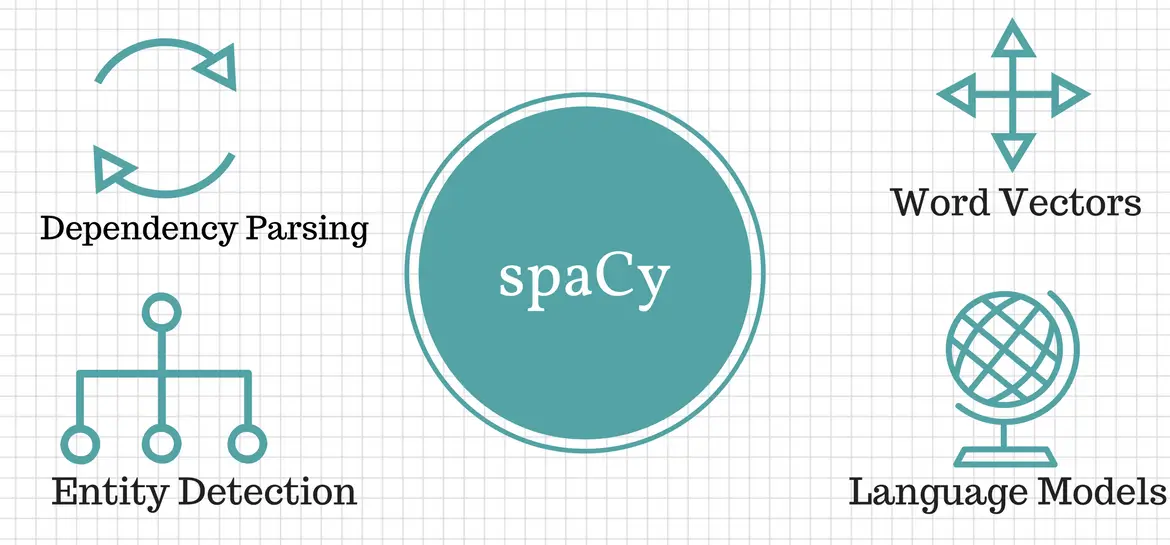

Underlying Architecture and Components

spaCy LLM builds upon the solid foundation of the spaCy library. It leverages spaCy's tokenization, part-of-speech tagging, and dependency parsing capabilities to preprocess and analyze text data.

Additionally, spaCy LLM connects the pipeline to large language models, which are typically transformer-based. Each task builds a prompt from the input text, sends it to the model, and parses the response into structured, context-aware annotations on the document.
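In practice, an LLM-backed pipeline component turns text into a prompt, sends it to a model, and parses the reply into structured annotations. The following is a minimal sketch of that flow in plain Python, not spacy-llm's actual implementation. The names `build_prompt`, `fake_llm`, `parse_response`, and `annotate` are hypothetical, and `fake_llm` is a stub that returns a canned reply so the example runs offline.

```python
from typing import Callable, List, Tuple


def build_prompt(text: str, labels: List[str]) -> str:
    """Render a simple NER-style prompt (hypothetical template)."""
    label_list = ", ".join(labels)
    return (
        f"Extract entities with labels [{label_list}] from the text.\n"
        "Answer with one 'LABEL: entity' pair per line.\n"
        f"Text: {text}"
    )


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call; always returns a canned reply."""
    return "PERSON: Jack\nPERSON: Jill\nLOCATION: Les Deux Alpes"


def parse_response(
    text: str, response: str, labels: List[str]
) -> List[Tuple[int, int, str]]:
    """Map 'LABEL: entity' lines back to character spans in the text."""
    spans = []
    for line in response.splitlines():
        label, sep, entity = line.partition(":")
        label, entity = label.strip(), entity.strip()
        if not sep or label not in labels or not entity:
            continue  # ignore malformed lines and unexpected labels
        start = text.find(entity)
        if start != -1:  # keep only entities that actually occur in the text
            spans.append((start, start + len(entity), label))
    return spans


def annotate(
    text: str, labels: List[str], llm: Callable[[str], str] = fake_llm
) -> List[Tuple[int, int, str]]:
    """Prompt the model and parse its reply into (start, end, label) spans."""
    prompt = build_prompt(text, labels)
    return parse_response(text, llm(prompt), labels)


text = "Jack and Jill rode up the hill in Les Deux Alpes"
spans = annotate(text, ["PERSON", "LOCATION"])
print([(text[start:end], label) for start, end, label in spans])
# [('Jack', 'PERSON'), ('Jill', 'PERSON'), ('Les Deux Alpes', 'LOCATION')]
```

The parsing step is where robustness matters: a real model may return malformed lines or entities that do not appear in the text, so the sketch drops anything it cannot map back to the input.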

Training Process and Data Requirements

spaCy LLM itself does not train a language model. The underlying LLMs are pretrained by their providers on large text corpora, a process that involves feeding the model sequences of words and training it to predict the next word in a given context. The more diverse and representative that training data is, the better the language model will perform. To use spaCy LLM, you only need to describe the task in a prompt, optionally with a few labeled examples.
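Next-word prediction can be illustrated with a toy bigram model. The sketch below is purely illustrative and is not how spaCy LLM or any production LLM is implemented: real models use neural networks trained on billions of tokens, while this one only counts word pairs. `train_bigram` and `predict_next` are hypothetical helper names.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "sat"))  # on
print(predict_next(model, "the"))  # cat
```

Even this tiny model shows why data coverage matters: it can only predict followers for words it has seen, and its guesses reflect whatever the corpus happens to contain.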

Techniques and Algorithms

The models that spaCy LLM connects to are typically based on transformers, an architecture that has largely superseded earlier recurrent neural networks (RNNs) and long short-term memory (LSTM) networks for language modeling. Transformers enable the model to capture complex patterns and long-range dependencies in the text, resulting in more accurate predictions.

Use Cases of spaCy LLM

There are many use cases of spaCy LLM. Here are some of them:

Text Generation

You can unleash your creativity with spaCy LLM! Whether you're building chatbots, generating content, or assisting in creative writing, spaCy LLM can generate coherent and contextually relevant text.

Say goodbye to generic and repetitive responses, and let spaCy LLM bring your text generation tasks to life.

Sentiment Analysis

Understanding the sentiment behind text is crucial in today's data-driven world. With spaCy LLM, you can build sentiment analysis components that classify the sentiment of a given text without training a model from scratch.

Gain valuable insights from user feedback, social media posts, or customer reviews, and make data-driven decisions with confidence.

Named Entity Recognition

Identifying and classifying named entities in text is a common challenge in information extraction tasks. spaCy LLM can be your secret weapon in this domain.

Build components that recognize and classify names of people, organizations, locations, and more by listing the labels you need. Unlock the potential of named entity recognition with spaCy LLM.

Getting Started with spaCy LLM

spaCy's LLM module allows you to leverage the power of large language models like GPT-3 in your natural language processing projects. To begin:

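First, install spaCy together with the separate spacy-llm package (`pip install spacy-llm`), which provides the LLM integration. Make sure you have a valid API key for a large language model provider like OpenAI. For OpenAI models, the key is read from the `OPENAI_API_KEY` environment variable.

Next, add an `llm` component to a spaCy pipeline, specifying the task and the model. The registry names below follow the spacy-llm documentation and may differ between versions:

```python
import spacy

nlp = spacy.blank("en")
# The API key is read from the OPENAI_API_KEY environment variable.
nlp.add_pipe("llm", config={
    "task": {"@llm_tasks": "spacy.NER.v2", "labels": ["PERSON", "ORGANISATION", "LOCATION"]},
    "model": {"@llm_models": "spacy.GPT-3-5.v1"},
})
nlp.initialize()
```

Now you can use nlp() on text to get LLM predictions. Here the component performs named entity recognition; other built-in tasks cover text classification, summarization, and more:

```python
doc = nlp("Jack and Jill rode up the hill in Les Deux Alpes")
# Entities predicted by the LLM are stored like any other spaCy entities.
print([(ent.text, ent.label_) for ent in doc.ents])
```

Additionally, several LLM-powered components can share one pipeline. Entities land in doc.ents, while the sentiment task writes its score to a custom extension attribute:

```python
# Add a second LLM-backed component under its own name.
nlp.add_pipe("llm", name="llm_sentiment", config={
    "task": {"@llm_tasks": "spacy.Sentiment.v1"},
    "model": {"@llm_models": "spacy.GPT-3-5.v1"},
})
doc = nlp("Jack and Jill loved the view from Les Deux Alpes")
print(doc.ents)
print(doc._.sentiment)  # score stored by the sentiment task
```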

With just a few lines of code, spaCy's LLM module makes it easy to integrate state-of-the-art models into your NLP applications. The unified pipeline seamlessly combines rule-based logic, statistical models, and large language model predictions.
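Because hosted models such as OpenAI's read their credentials from the environment (`OPENAI_API_KEY` in spacy-llm), a small pre-flight check can fail early with a clear message instead of a provider error deep inside the pipeline. This is a minimal sketch using only the standard library; `require_api_key` and `mask` are hypothetical helpers, not part of spaCy or spacy-llm.

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment or raise a clear error."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before building the pipeline."
        )
    return key


def mask(key: str) -> str:
    """Hide all but the last four characters, for safe logging."""
    if len(key) <= 4:
        return "*" * len(key)  # too short to reveal any part of it
    return "*" * (len(key) - 4) + key[-4:]


try:
    print("Using key:", mask(require_api_key()))
except RuntimeError as err:
    print(err)
```

Running the check before creating the pipeline keeps configuration problems separate from model or network errors.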

Conclusion

spaCy has transformed the landscape for working with natural language in Python. Its statistical models strike the perfect balance between industrial-strength performance and approachable APIs.

Core capabilities like POS tagging and dependency parsing combined with seamless transformer integration provide the essential building blocks for language understanding.

Companies worldwide now integrate spaCy to extract insights and enhance products with linguistic intelligence.

Usage has skyrocketed with over 150,000 data scientists leveraging spaCy’s capabilities. Intuitive abstractions reduce time spent on preprocessing so practitioners can focus on modeling.

With comprehensive support for 50+ languages, spaCy is driving global progress in NLP. Thanks to its vibrant open-source community, spaCy continues to lead advances in efficient, accessible language modeling.

As natural language understanding becomes increasingly crucial for AI systems, spaCy will no doubt continue to push boundaries in the field!