What are Sequence-to-Sequence Models?

Sequence-to-sequence (Seq2Seq) models are a class of deep learning models designed to transform input sequences into output sequences. They're widely used in tasks like machine translation, natural language generation, text summarization, and conversational AI.

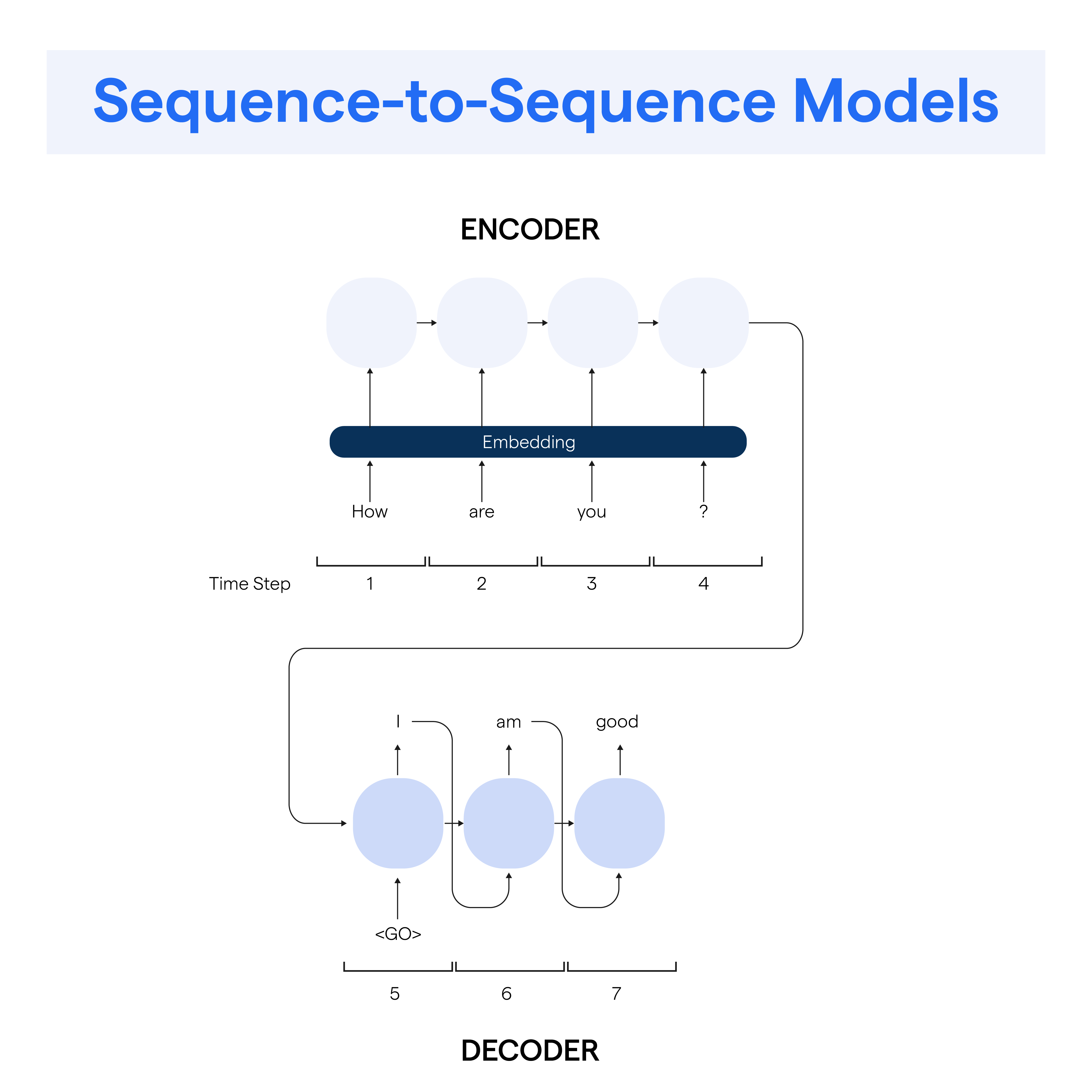

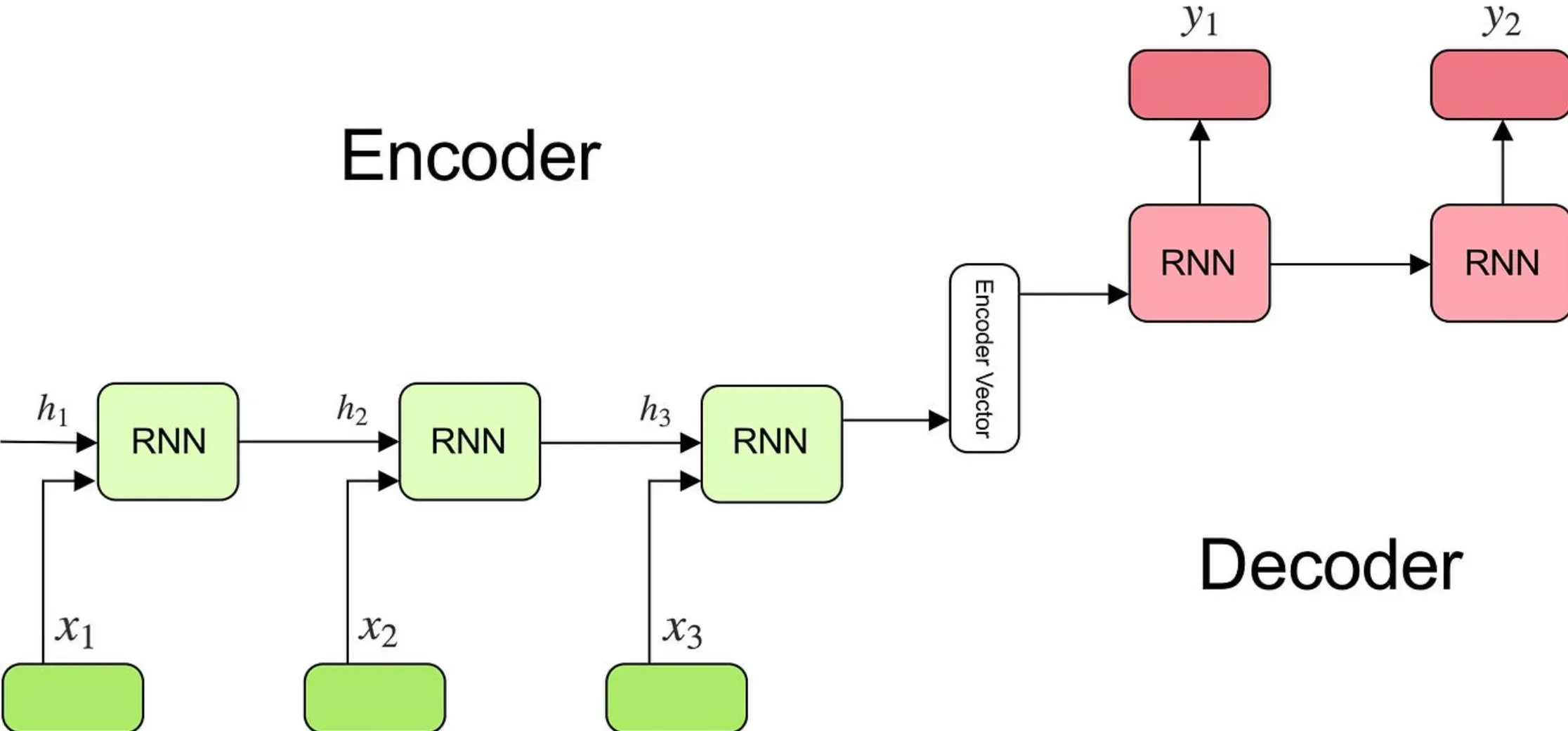

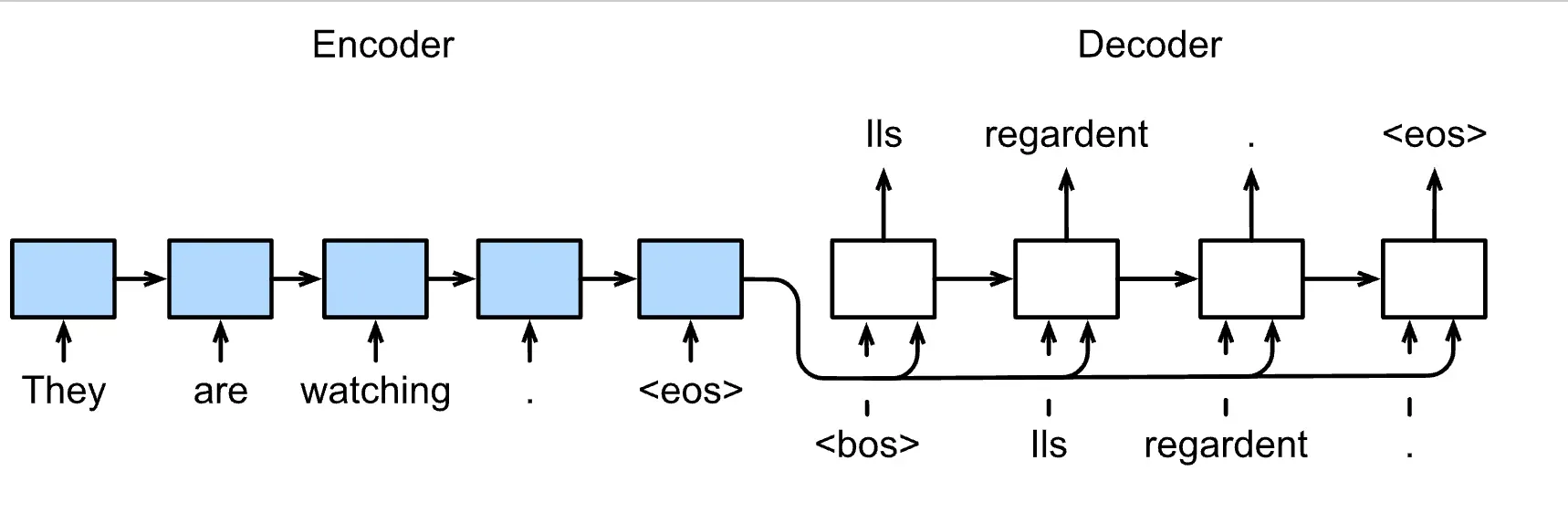

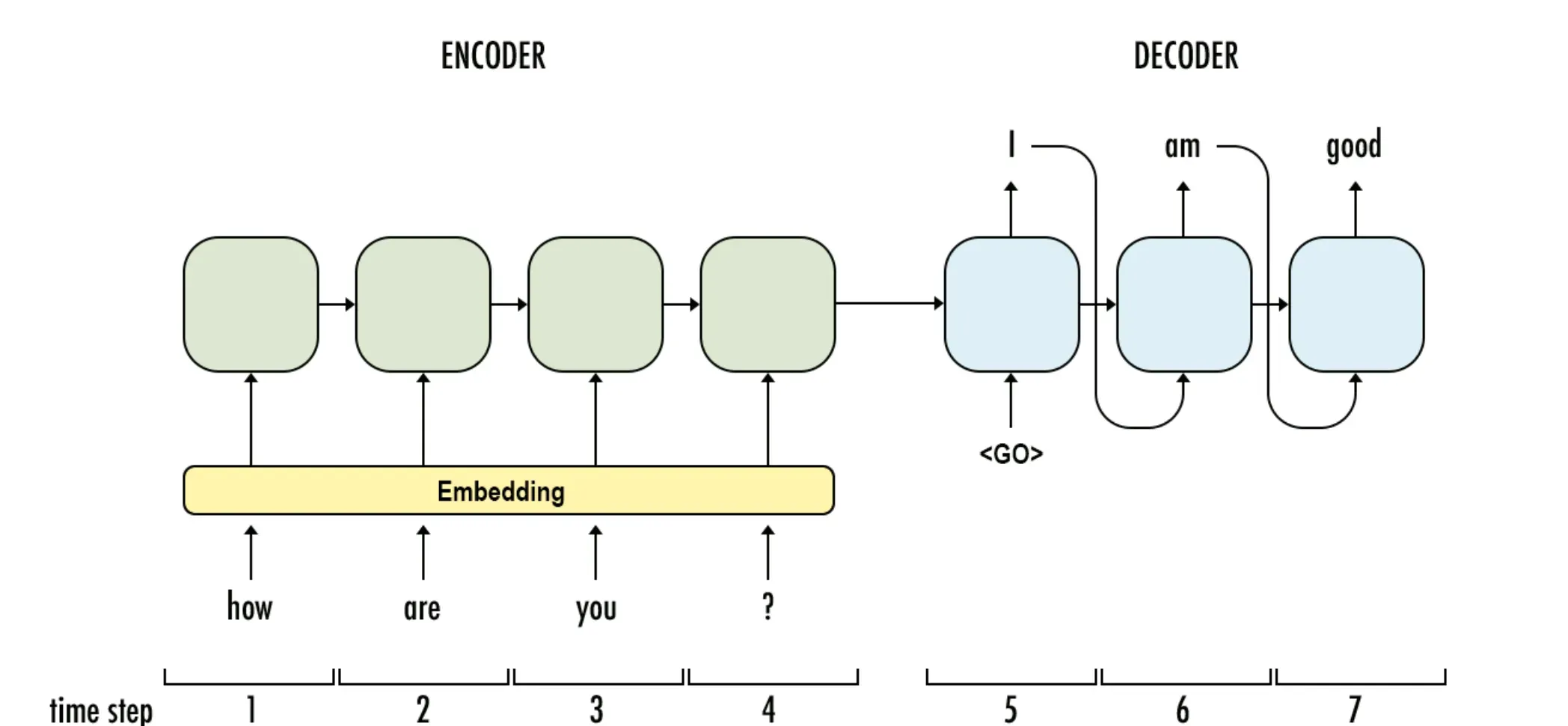

Structure of Sequence-to-Sequence Models

Two primary components form the architecture of a Seq2Seq model: the encoder and decoder. The encoder processes the input sequence, capturing its essential information, while the decoder generates the desired output sequence based on the encoder's internal representation.

Improving Sequence-to-Sequence Models

There are several strategies to refine Seq2Seq models:

- Attention mechanisms allow the model to focus on specific parts of the input sequence while decoding, improving the model’s ability to handle long inputs.

- Beam search improves decoding by keeping several of the most probable candidate sequences at each step instead of greedily selecting the single most likely word.

- Curriculum learning trains the model on simpler examples before more complex ones, which can make the learning process more efficient.
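To make the curriculum idea concrete, here is a minimal Python sketch. It assumes that source length is a reasonable proxy for difficulty (one common choice, not the only one). The function name `curriculum_stages` and the toy sequence-reversal data are hypothetical.

```python
from typing import List, Tuple

# A training example: (source tokens, target tokens).
Pair = Tuple[List[str], List[str]]


def curriculum_stages(pairs: List[Pair], num_stages: int) -> List[List[Pair]]:
    """Split training pairs into cumulative stages of increasing difficulty.

    Assumption: source length is the difficulty measure, so stage k holds
    the shortest k/num_stages fraction of the data (the last stage is all of it).
    """
    ordered = sorted(pairs, key=lambda pair: len(pair[0]))
    stages = []
    for k in range(1, num_stages + 1):
        cutoff = max(1, (len(ordered) * k) // num_stages)
        stages.append(ordered[:cutoff])
    return stages


# Hypothetical toy task: reverse the input sequence.
data = [
    (["a", "b", "c"], ["c", "b", "a"]),
    (["a"], ["a"]),
    (["a", "b"], ["b", "a"]),
    (["a", "b", "c", "d"], ["d", "c", "b", "a"]),
]

stages = curriculum_stages(data, num_stages=2)
# Train on stages[0] (the two shortest pairs) first, then on stages[1] (all pairs).
```

Each stage includes the previous one, so the model keeps seeing the easy examples while harder ones are gradually added.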

WHY are Sequence-to-Sequence Models Important?

Understanding the significance of sequence-to-sequence models will help us appreciate their value in diverse application areas.

Handling Variable-Length Sequences

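Seq2Seq models naturally handle variable-length input and output sequences: the encoder can read an input of any length, and the decoder keeps generating until it emits an end-of-sequence token, so the output length does not have to match the input length.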
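The model itself is length-agnostic, but training usually processes several sequences together in a batch. A common approach, assumed here rather than described above, is to right-pad shorter sequences with a reserved id and keep a mask marking the real tokens so that padded positions can be ignored later (for example, in the loss). `pad_batch` and `PAD_ID` are hypothetical names.

```python
from typing import List, Tuple

PAD_ID = 0  # hypothetical token id reserved for padding


def pad_batch(
    seqs: List[List[int]], pad_id: int = PAD_ID
) -> Tuple[List[List[int]], List[List[int]]]:
    """Right-pad token-id sequences to a common length and build a mask.

    The mask is 1 for real tokens and 0 for padding.
    """
    max_len = max(len(s) for s in seqs)
    padded = [s + [pad_id] * (max_len - len(s)) for s in seqs]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in seqs]
    return padded, mask


batch = [[5, 6, 7], [8], [9, 10]]
padded, mask = pad_batch(batch)
# padded -> [[5, 6, 7], [8, 0, 0], [9, 10, 0]]
# mask   -> [[1, 1, 1], [1, 0, 0], [1, 1, 0]]
```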

Improving Natural Language Processing

Seq2Seq models have brought substantial improvements to numerous natural language processing tasks, such as machine translation and text summarization, making machine-generated text more fluent and accurate.

Supporting Complex Relationships

Seq2Seq models can learn complex input-output relationships, making them invaluable tools in areas like speech recognition, music generation, and even image captioning.

Wide-ranging Applications

Seq2Seq models boast a diverse range of applications across industries, including customer support, healthcare, finance, and more, due to their ability to understand and generate human-like text.

WHO uses Sequence-to-Sequence Models?

Exploring the users of sequence-to-sequence models sheds light on their relevance and versatility across various disciplines.

Data Scientists

Data scientists utilize sequence-to-sequence models for tasks such as predictive modeling and sequence generation, where traditional models may fall short.

Natural Language Processing Researchers

Seq2Seq models are popular among NLP researchers as they continue to push the boundaries of machine-generated text for tasks like translation, summarization, and dialogue.

AI-driven Industries

Industries powered by artificial intelligence, like healthcare, entertainment, and customer support, employ Seq2Seq models to improve their services, build innovative solutions, and enhance user experiences.

Academic Institutions

Universities and research facilities harness sequence-to-sequence models to drive academic insights in natural language processing, computer vision, and other related fields.

WHEN to use Sequence-to-Sequence Models?

Let's discover the instances where sequence-to-sequence models make the best choice for tackling specific challenges.

Machine Translation

When translating text between languages while preserving the context and meaning, Seq2Seq models excel.

Speech Recognition

Seq2Seq models are effective for transcribing spoken language into written text, and when trained on diverse data they can cope with different speakers, accents, and background noise.

Conversational AI

In building chatbots and virtual assistants capable of understanding and generating complex responses, sequence-to-sequence models are widely used tools.

Abstractive Text Summarization

When creating concise summaries of lengthy documents while retaining key information, Seq2Seq models are the go-to choice.

WHERE are Sequence-to-Sequence Models Applied?

In this section, we'll delve into the wide variety of applications for sequence-to-sequence models across domains and industries.

Machine Translation

Seq2Seq models play a critical role in translating text between languages in tools like Google Translate, helping break language barriers and facilitate communication worldwide.

Healthcare

In healthcare, Seq2Seq models assist in generating medical reports, predicting disease progression, and even improving mental health support through chatbots.

Customer Support

Sequence-to-sequence models are used in AI-driven customer support solutions to automate responses, resolve problems efficiently, and provide users with personalized recommendations.

Finance

In finance, Seq2Seq models enhance fraud detection, assist with text analysis for sentiment trading, and improve chatbot capabilities, making financial operations more efficient and secure.

HOW do Sequence-to-Sequence Models Work?

To appreciate the inner workings of sequence-to-sequence models, let's walk through their components and the processes that drive them.

Encoder Architecture

In the classic formulation, a Seq2Seq model employs an encoder, typically a recurrent neural network (RNN), that processes the input sequence one element at a time and summarizes it in a fixed-length internal representation known as the context vector (often the encoder's final hidden state).
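The sketch below shows an Elman-style RNN encoder in plain Python, assuming untrained random weights and one-hot input vectors for illustration. `RNNEncoder` and its helpers are hypothetical names, not a library API. Whatever the input length, `encode` returns a vector of size `hidden_size`, which plays the role of the context vector.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class RNNEncoder:
    """Vanilla RNN encoder: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        self.W_xh = random_matrix(hidden_size, input_size, rng)
        self.W_hh = random_matrix(hidden_size, hidden_size, rng)
        self.b = [0.0] * hidden_size

    def encode(self, inputs: List[Vector]) -> Vector:
        """Read the inputs one at a time and return the final hidden state."""
        h = [0.0] * self.hidden_size
        for x in inputs:
            pre = [
                wx + uh + bias
                for wx, uh, bias in zip(matvec(self.W_xh, x), matvec(self.W_hh, h), self.b)
            ]
            h = [math.tanh(p) for p in pre]
        return h  # the fixed-length context vector


encoder = RNNEncoder(input_size=3, hidden_size=4)
short_ctx = encoder.encode([[1.0, 0.0, 0.0]])
long_ctx = encoder.encode(
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
)
# Both context vectors have length 4, regardless of the input length.
```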

Decoder Architecture

The decoder, also an RNN, is initialized with this context vector and generates the output sequence one element at a time, conditioning each step on its hidden state and the previously generated element.
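A single decoder step can be sketched in plain Python as follows, assuming untrained random weights, a toy five-token vocabulary, and hypothetical names (`RNNDecoder`, `step`). The step embeds the previous token, updates the hidden state (initialized from the context vector), and applies a softmax to produce a probability distribution over the vocabulary.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class RNNDecoder:
    """Vanilla RNN decoder that emits a distribution over a toy vocabulary."""

    def __init__(self, vocab_size: int, hidden_size: int, seed: int = 1) -> None:
        rng = random.Random(seed)
        self.embed = random_matrix(vocab_size, hidden_size, rng)  # one row per token
        self.W_xh = random_matrix(hidden_size, hidden_size, rng)
        self.W_hh = random_matrix(hidden_size, hidden_size, rng)
        self.W_hy = random_matrix(vocab_size, hidden_size, rng)

    def step(self, prev_token: int, h: Vector) -> Tuple[Vector, Vector]:
        """One decoding step: (previous token, hidden state) -> (probs, new state)."""
        x = self.embed[prev_token]
        h_new = [math.tanh(a + c) for a, c in zip(matvec(self.W_xh, x), matvec(self.W_hh, h))]
        probs = softmax(matvec(self.W_hy, h_new))
        return probs, h_new


context = [0.1, -0.2, 0.3, 0.0]  # e.g. the encoder's final hidden state
decoder = RNNDecoder(vocab_size=5, hidden_size=4)
# Token 0 acts as a hypothetical start-of-sequence symbol here.
probs, h = decoder.step(prev_token=0, h=context)
```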

Training Sequence-to-Sequence Models

Seq2Seq models are typically trained using a technique called teacher forcing: at each decoding step during training, the decoder receives the true (correct) previous output element as its input rather than its own prediction, which helps the model learn more efficiently.
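Concretely, teacher forcing determines what the decoder sees as input at each step. This minimal sketch builds the (input, expected output) pairs for one target sequence; the start- and end-of-sequence ids `BOS` and `EOS` and the function name are hypothetical.

```python
from typing import List, Tuple

BOS, EOS = 1, 2  # hypothetical start- and end-of-sequence token ids


def teacher_forcing_steps(target: List[int]) -> List[Tuple[int, int]]:
    """Return (decoder input, expected output) pairs for each training step.

    Under teacher forcing, the decoder input at step t is the ground-truth
    token from step t-1 (BOS at t=0), never the model's own prediction.
    """
    expected = target + [EOS]
    inputs = [BOS] + expected[:-1]
    return list(zip(inputs, expected))


steps = teacher_forcing_steps([7, 8, 9])
# [(1, 7), (7, 8), (8, 9), (9, 2)]
```

The decoder inputs are simply the target shifted right by one position, which is why this is sometimes described as feeding the "shifted" target.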

Inference with Sequence-to-Sequence Models

At the inference stage, the true output sequence is not available. Instead, the model generates one output element at a time, feeding each generated output back into the decoder to produce the next element of the sequence.
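The inference loop can be sketched as greedy decoding: pick the most likely token, feed it back in, and stop at an end-of-sequence token or a length limit. Here `step` is assumed to be any function mapping (previous token, state) to (probabilities, new state), such as a trained decoder; `toy_step` is a hypothetical stand-in so the example runs on its own.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

State = TypeVar("State")


def greedy_decode(
    step: Callable[[int, State], Tuple[Sequence[float], State]],
    h0: State,
    bos: int,
    eos: int,
    max_len: int = 50,
) -> List[int]:
    """Generate tokens one at a time, feeding each prediction back in."""
    output: List[int] = []
    prev, h = bos, h0
    for _ in range(max_len):
        probs, h = step(prev, h)
        token = max(range(len(probs)), key=lambda i: probs[i])  # most likely token
        if token == eos:
            break
        output.append(token)
        prev = token  # the model's own output becomes the next input
    return output


def toy_step(prev: int, h: None) -> Tuple[List[float], None]:
    """Hypothetical stand-in for a trained decoder: always predicts prev + 1."""
    probs = [0.0] * 5
    probs[min(prev + 1, 4)] = 1.0
    return probs, h


result = greedy_decode(toy_step, h0=None, bos=0, eos=4)
# result == [1, 2, 3]; predicting token 4 (EOS) stops generation
```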

Best Practices for Implementing Sequence-to-Sequence Models

Now that we're familiar with sequence-to-sequence models, let's talk about some best practices that can help you build more effective and efficient models. Whether you're a budding researcher, a seasoned data scientist, or simply curious about AI, these practices will provide a solid foundation for implementing and improving your own Seq2Seq models.

Choosing the Right RNN Architecture

Your choice of RNN architecture in the encoder and decoder plays a fundamental role in the performance of your Seq2Seq model. Although standard RNNs can be used, in practice it's common to use gated architectures like LSTM (Long Short-Term Memory) or GRU (Gated Recurrent Unit). These are designed to mitigate the infamous vanishing gradient problem, enabling your model to learn and retain information from earlier time steps in the sequence more effectively.
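To show what gating looks like, here is a minimal GRU step in plain Python. It follows one common formulation with biases omitted and untrained random weights; frameworks differ in small details such as where the reset gate is applied, and `GRUCell` is a hypothetical name, not a framework class. One common intuition for why gating helps: when the update gate `z` is close to 1, the new state is mostly a copy of the old one, giving information a more direct path across many time steps.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """Single GRU step (biases omitted for brevity)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.W_z = random_matrix(hidden_size, input_size, rng)
        self.U_z = random_matrix(hidden_size, hidden_size, rng)
        self.W_r = random_matrix(hidden_size, input_size, rng)
        self.U_r = random_matrix(hidden_size, hidden_size, rng)
        self.W_n = random_matrix(hidden_size, input_size, rng)
        self.U_n = random_matrix(hidden_size, hidden_size, rng)

    def step(self, x: Vector, h: Vector) -> Vector:
        # Update gate z: how much of the old state to carry forward.
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W_z, x), matvec(self.U_z, h))]
        # Reset gate r: how much of the old state feeds the candidate state.
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W_r, x), matvec(self.U_r, h))]
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        n = [math.tanh(a + b) for a, b in zip(matvec(self.W_n, x), matvec(self.U_n, reset_h))]
        # Interpolate between the old state and the candidate.
        return [zi * hi + (1.0 - zi) * ni for zi, hi, ni in zip(z, h, n)]


cell = GRUCell(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h = cell.step(x, h)
```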

Leveraging Teacher Forcing

During training, use the technique known as teacher forcing. At each time step, the decoder receives the true output element from the previous step as its input, instead of the output it predicted at that step. This makes training more efficient and can often lead to better-performing models.
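A training loss under teacher forcing might look like the sketch below: at each step the loss is the negative log-probability of the true token, and the true token (not the model's most likely prediction) becomes the next input. The `step` interface, mapping (previous token, state) to (probabilities, new state), and the recording `uniform_step` stand-in are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple, TypeVar

State = TypeVar("State")


def teacher_forced_loss(
    step: Callable[[int, State], Tuple[Sequence[float], State]],
    h0: State,
    target: List[int],
    bos: int,
) -> float:
    """Average negative log-likelihood of `target` under teacher forcing."""
    total = 0.0
    prev, h = bos, h0
    for gold in target:
        probs, h = step(prev, h)
        total -= math.log(probs[gold])  # penalize low probability on the true token
        prev = gold  # teacher forcing: feed the true token, not the prediction
    return total / len(target)


fed_inputs: List[int] = []


def uniform_step(prev: int, h: None) -> Tuple[List[float], None]:
    """Hypothetical stand-in decoder: records its input, predicts uniformly over 4 tokens."""
    fed_inputs.append(prev)
    return [0.25] * 4, h


loss = teacher_forced_loss(uniform_step, h0=None, target=[2, 0, 3], bos=1)
# fed_inputs == [1, 2, 0]: BOS followed by the ground-truth tokens
```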

Utilizing Attention Mechanisms

When dealing with long input sequences, consider implementing attention mechanisms. These mechanisms enable your model to focus on specific parts of the input sequence that are most relevant for each step of the output sequence, which can significantly improve performance. They're particularly beneficial for tasks like machine translation and text summarization, where understanding the context of the entire sequence is crucial.
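Attention can be implemented with several scoring functions; the sketch below assumes the simple dot-product score. For one decoding step, it scores each encoder state against the decoder's current state (the `query`), normalizes the scores with a softmax, and returns the weighted average of the encoder states as a step-specific context vector. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query: Vector, encoder_states: List[Vector]) -> Tuple[Vector, Vector]:
    """Return (attention weights, context vector) for one decoding step.

    The weights sum to 1 and indicate how much each input position
    contributes to the context vector at this step.
    """
    scores = [sum(q * k for q, k in zip(query, s)) for s in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * s[i] for w, s in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context


states = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # one encoder state per input position
weights, context = dot_product_attention([2.0, 0.0], states)
# The first position scores highest, so it gets the largest weight.
```

Unlike the single fixed-length context vector of the basic model, this context vector is recomputed at every decoding step.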

Applying Beam Search during Inference

At the prediction stage, or during inference, consider applying beam search. Instead of choosing the single most likely next word at each step, as greedy decoding does, beam search keeps the k most probable partial sequences (k is the beam width) and expands each of them. Although it adds computational cost, beam search often leads to better results by exploring a wider range of potential outputs.
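Here is a compact beam-search sketch over an assumed `step(prev_token, state)` interface that returns next-token probabilities and a new state. It is a simplified variant: hypotheses that emit EOS leave the beam, and scores are raw summed log-probabilities with no length normalization, which many practical decoders add. The toy probability table is invented to show a case where a beam width of 1 (equivalent to greedy decoding) and a wider beam disagree.

```python
import math
from typing import Callable, List, Sequence, Tuple, TypeVar

State = TypeVar("State")


def beam_search(
    step: Callable[[int, State], Tuple[Sequence[float], State]],
    h0: State,
    bos: int,
    eos: int,
    beam_width: int = 3,
    max_len: int = 20,
) -> Tuple[List[int], float]:
    """Return the best token sequence (without BOS/EOS) and its log-probability."""
    beams = [(0.0, [bos], h0)]  # (cumulative log-prob, tokens, decoder state)
    finished = []
    for _ in range(max_len):
        candidates = []
        for score, tokens, h in beams:
            probs, h_new = step(tokens[-1], h)
            for tok, p in enumerate(probs):
                if p > 0.0:  # skip impossible tokens (log 0 is undefined)
                    candidates.append((score + math.log(p), tokens + [tok], h_new))
        # Keep only the beam_width best expansions across all beams.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for cand in candidates[:beam_width]:
            if cand[1][-1] == eos:
                finished.append(cand)
            else:
                beams.append(cand)
        if not beams:
            break
    finished.extend(beams)  # hypotheses still open when max_len is reached
    score, tokens, _ = max(finished, key=lambda c: c[0])
    body = tokens[1:]
    if body and body[-1] == eos:
        body = body[:-1]
    return body, score


# Hypothetical toy model over tokens {0: BOS, 1: "A", 2: "B", 3: EOS}.
TABLE = {
    0: [0.0, 0.6, 0.4, 0.0],    # after BOS: "A" is more likely than "B"
    1: [0.0, 0.25, 0.25, 0.5],  # after "A": ending has probability 0.5
    2: [0.0, 0.0, 0.0, 1.0],    # after "B": always end
}


def toy_step(prev: int, h: None) -> Tuple[List[float], None]:
    return TABLE[prev], h


narrow, narrow_logp = beam_search(toy_step, None, bos=0, eos=3, beam_width=1)
best, best_logp = beam_search(toy_step, None, bos=0, eos=3, beam_width=2)
```

With a beam width of 1 the search commits to "A" (probability 0.6) and ends with total probability 0.3; a beam width of 2 keeps "B" alive and finds the sequence with probability 0.4.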

Experimenting with Model Improvements

Don't be afraid to experiment with different improvements and variations. These could include using pre-trained word embeddings, using more layers in your encoders and decoders, incorporating dropout for regularization, or fine-tuning hyperparameters like learning rate and batch size. Exploring these options and others can lead to significant improvements in the performance of your Seq2Seq model.

Keeping Track of Your Experiments

While experimenting, it's critical to keep track of your tests, the configurations you've tried, and the results you've obtained. This will help you understand which configurations lead to the best results and enable you to iteratively improve your model. Using tools like TensorBoard or other experiment-tracking tools can make this process more manageable.
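If you are not yet using a dedicated tool, even a plain JSON-lines log can work. This sketch runs a small hyperparameter grid and writes one record per run; `run_grid`, `fake_train_and_eval`, and its scoring formula are hypothetical placeholders for your own training and evaluation code.

```python
import io
import itertools
import json
from typing import Any, Callable, Dict, List, TextIO


def run_grid(
    train_and_eval: Callable[[Dict[str, Any]], float],
    grid: Dict[str, List[Any]],
    log_file: TextIO,
) -> Dict[str, Any]:
    """Try every combination in `grid`, log each run as one JSON line, return the best."""
    keys = sorted(grid)
    best: Dict[str, Any] = {}
    for values in itertools.product(*(grid[k] for k in keys)):
        config = dict(zip(keys, values))
        score = train_and_eval(config)  # e.g. a validation metric; higher is better
        record = {"config": config, "score": score}
        log_file.write(json.dumps(record) + "\n")
        if not best or score > best["score"]:
            best = record
    return best


def fake_train_and_eval(config: Dict[str, Any]) -> float:
    """Hypothetical stand-in for a real training run."""
    return -abs(config["learning_rate"] - 1e-3) - config["dropout"]


log = io.StringIO()  # in practice, a file such as runs.jsonl or a tracking tool
best = run_grid(
    fake_train_and_eval,
    {"learning_rate": [1e-2, 1e-3], "dropout": [0.1, 0.3]},
    log,
)
```

Because every run is logged, you can later compare configurations without relying on memory.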

Frequently Asked Questions (FAQs)

What are sequence-to-sequence models used for?

Sequence-to-sequence (seq2seq) models are used for tasks that require input and output sequences of varying lengths, such as language translation, speech recognition, and text summarization.

How do seq2seq models work?

Seq2seq models encode an input sequence into a fixed-length representation and then decode that representation to generate an output sequence. This requires an encoder network, a decoder network, and optionally an attention mechanism that lets the decoder consult every encoder state instead of only the fixed-length summary.

What are some common challenges in seq2seq modeling?

Some common challenges in seq2seq modeling include dealing with long input/output sequences, avoiding overfitting, and handling rare or out-of-vocabulary words. Other challenges may depend on the specific task or dataset being used.

What are some methods for improving seq2seq model performance?

Methods for improving seq2seq model performance include adding an attention mechanism, using pre-trained word embeddings, increasing training data, and fine-tuning the model's hyperparameters.

How do you measure the performance of a seq2seq model?

Several metrics can be used to evaluate the performance of a seq2seq model, including perplexity, BLEU score, and accuracy. Qualitative analysis can also be performed by inspecting the generated output sequences and comparing them to the ground truth.
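Perplexity is the exponential of the average negative log-probability the model assigns to the reference tokens, so lower is better. A minimal sketch, assuming you have already collected the probability the model gave to each ground-truth token (the function name is hypothetical):

```python
import math
from typing import List


def perplexity(token_probs: List[float]) -> float:
    """Perplexity = exp(mean negative log-probability of the reference tokens)."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])  # about 4: like a uniform 4-way guess
mixed_ppl = perplexity([0.5, 0.125])                # also about 4 (geometric mean 1/4)
perfect_ppl = perplexity([1.0, 1.0])                # 1.0: the model is never surprised
```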