What are Sequence Models?

Sequence models are a class of machine learning models designed to work with sequences of data. They exploit the temporal or sequential order of the data to perform tasks such as natural language processing, speech recognition, and time-series forecasting.

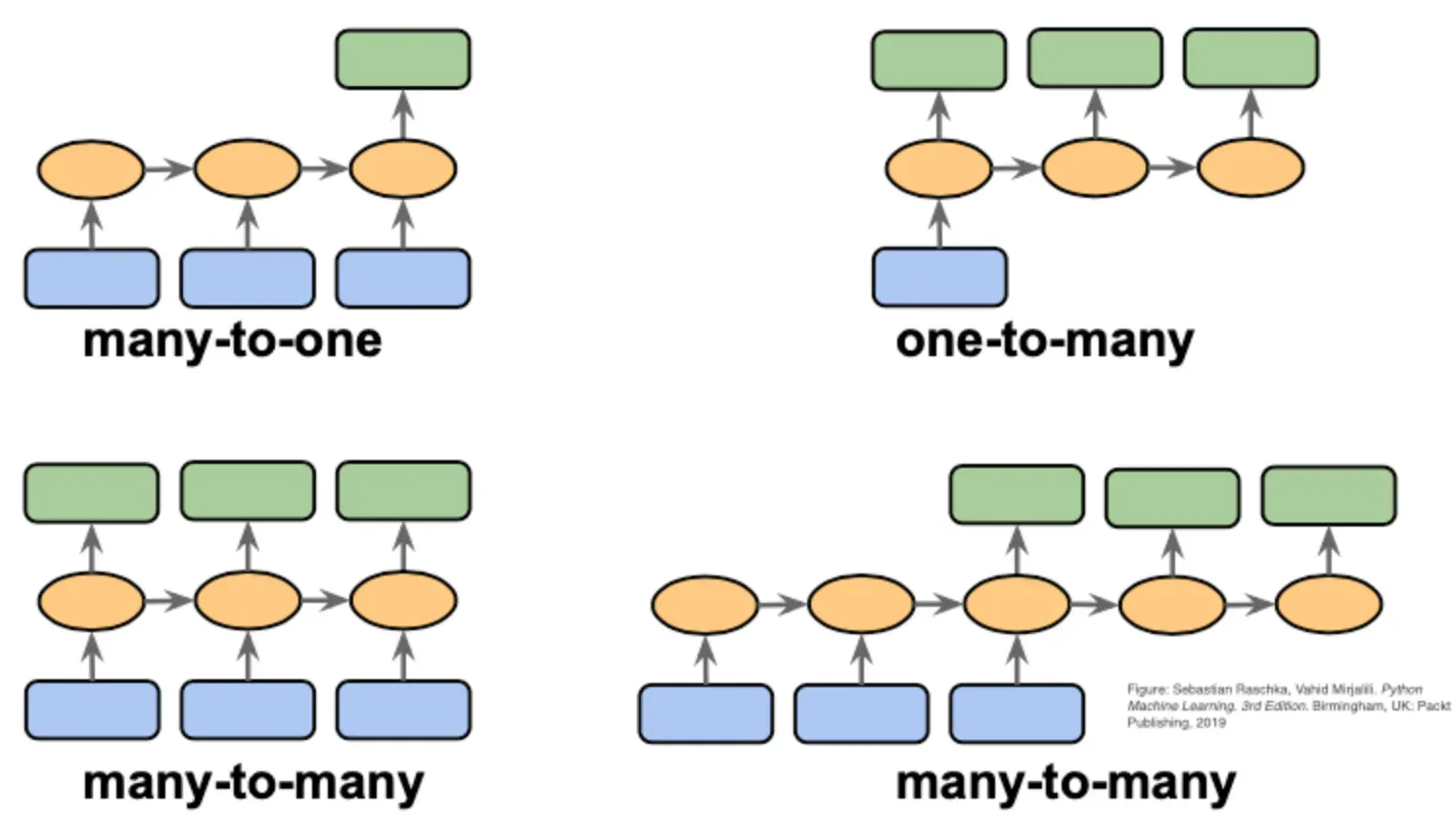

The defining feature of sequence models is that they can process inputs and outputs of varying lengths. Traditional feedforward models map a fixed-size input to a fixed-size output. Sequence models are not restricted to that kind of one-to-one mapping.

Their ability to handle temporally structured data comes from their architecture. Popular choices include Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) units, and Gated Recurrent Units (GRUs).

Why use Sequence Models?

The main advantage of sequence models is their ability to capture temporal dynamics, which makes them well suited to tasks involving sequence data. They can recognize patterns over time, and their memory of previous inputs informs how they process new ones.

Sequential modeling is also versatile. It can handle complex use cases like machine translation, where the length of the input sequence (source language) does not necessarily match the length of the output sequence (target language). These models also support 'many-to-one' tasks like sentiment analysis, where the entire text sequence is evaluated to produce a single output. They also support 'one-to-many' tasks like music generation, where a single input triggers a series of outputs.

Who Uses Sequence Models?

Sequential modeling is utilized extensively across various sectors:

Tech companies use sequence models in voice-assistance and speech recognition features.

Healthcare organizations apply them to medical diagnostics based on patient history.

Financial institutions leverage them in predicting stock market trends.

In e-commerce and entertainment, they help generate personalized product and media recommendations based on user sequence data.

Researchers use sequence models extensively in the field of natural language processing.

When to Apply Sequence Models?

Sequence models are beneficial when the data has a temporal or sequential structure; that is, when the order of data points influences the outcome. Some situations where sequence models prove powerful include:

Predicting future events based on time-series data (e.g., stock prices, weather)

Recognizing speech and translating text

Processing video data for object recognition

Predicting the next word in a sentence

Generating music

Where are Sequence Models Utilized?

The applications of sequential modeling span myriad fields and technologies:

Natural Language Processing (NLP) - Used in language translation, sentiment analysis, text generation, and text summarization.

Speech Recognition - Powers voice assistants and transcription services.

Video Processing - Useful in action recognition, video tagging, and surveillance.

Medical Diagnostics - Helps in predicting disease progression based on patient history.

Financial Analysis - Employs sequence models for time-series forecasting such as stock price prediction.

In short, sequence models extend machine learning to data whose order matters. They handle variable-length inputs and outputs and capture temporal dynamics. That makes them practical across many industries and a powerful tool in the machine-learning toolkit.

The Different Types of Sequence Models

Sequence models come in various forms, each with its own architecture and suited to particular kinds of sequential data. Here are some of the most common types.

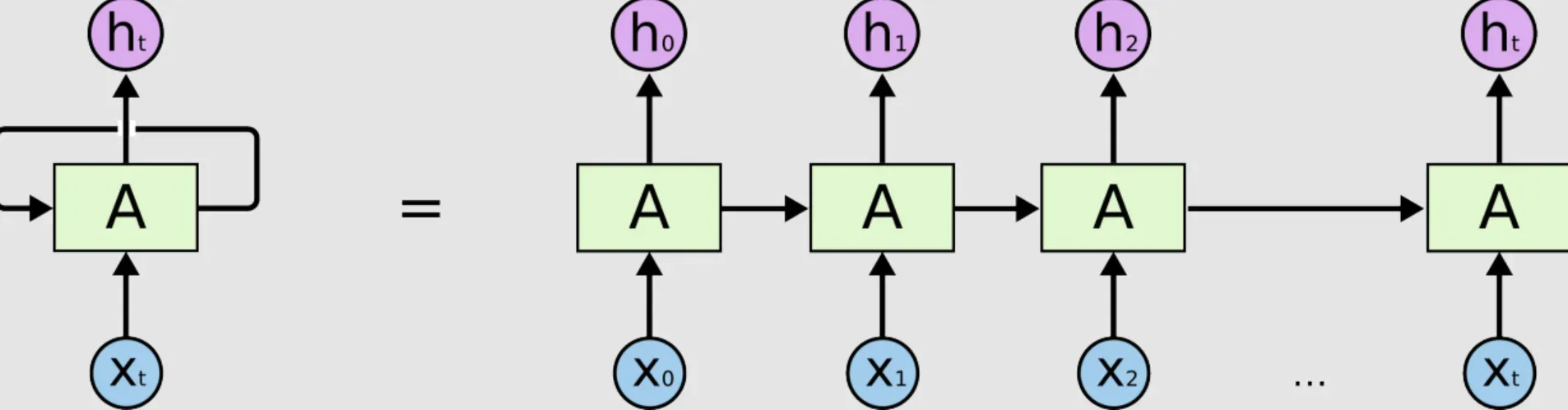

Recurrent Neural Networks (RNNs)

RNNs are one of the simplest forms of sequence models, designed to recognize patterns in sequences of data. They achieve this by having 'memory' about previous inputs through the use of hidden states.

At each step, an RNN combines the current input with its hidden state, which summarizes the inputs it has seen so far. A key weakness is the vanishing gradient problem. During training, gradients are multiplied across many time steps and shrink as they do. This makes it difficult for RNNs to learn dependencies that span long sequences.
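Below is a minimal sketch of this recurrence. It assumes the common Elman-style update h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b_h). It uses plain Python lists instead of a deep learning library. The weights are arbitrary illustrative values, not trained ones.

```python
import math

def rnn_forward(inputs, W_xh, W_hh, b_h, h0):
    """Run an Elman-style RNN over a sequence of input vectors.

    h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h); returns [h_1, ..., h_T].
    """
    h = list(h0)
    states = []
    for x in inputs:
        new_h = []
        for i in range(len(h)):
            pre = b_h[i]
            pre += sum(W_xh[i][j] * x[j] for j in range(len(x)))   # current input
            pre += sum(W_hh[i][k] * h[k] for k in range(len(h)))   # "memory" of earlier inputs
            new_h.append(math.tanh(pre))
        h = new_h
        states.append(h)
    return states

# Illustrative (untrained) weights: 1-dimensional input, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

states = rnn_forward([[1.0], [0.5], [-1.0]], W_xh, W_hh, b_h, [0.0, 0.0])
# Same final input, different history: the final hidden state differs.
other = rnn_forward([[-1.0], [0.5], [-1.0]], W_xh, W_hh, b_h, [0.0, 0.0])
```

In a many-to-one task such as sentiment analysis, a classifier would typically read only the last state. In a many-to-many task, every entry in `states` would be used.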

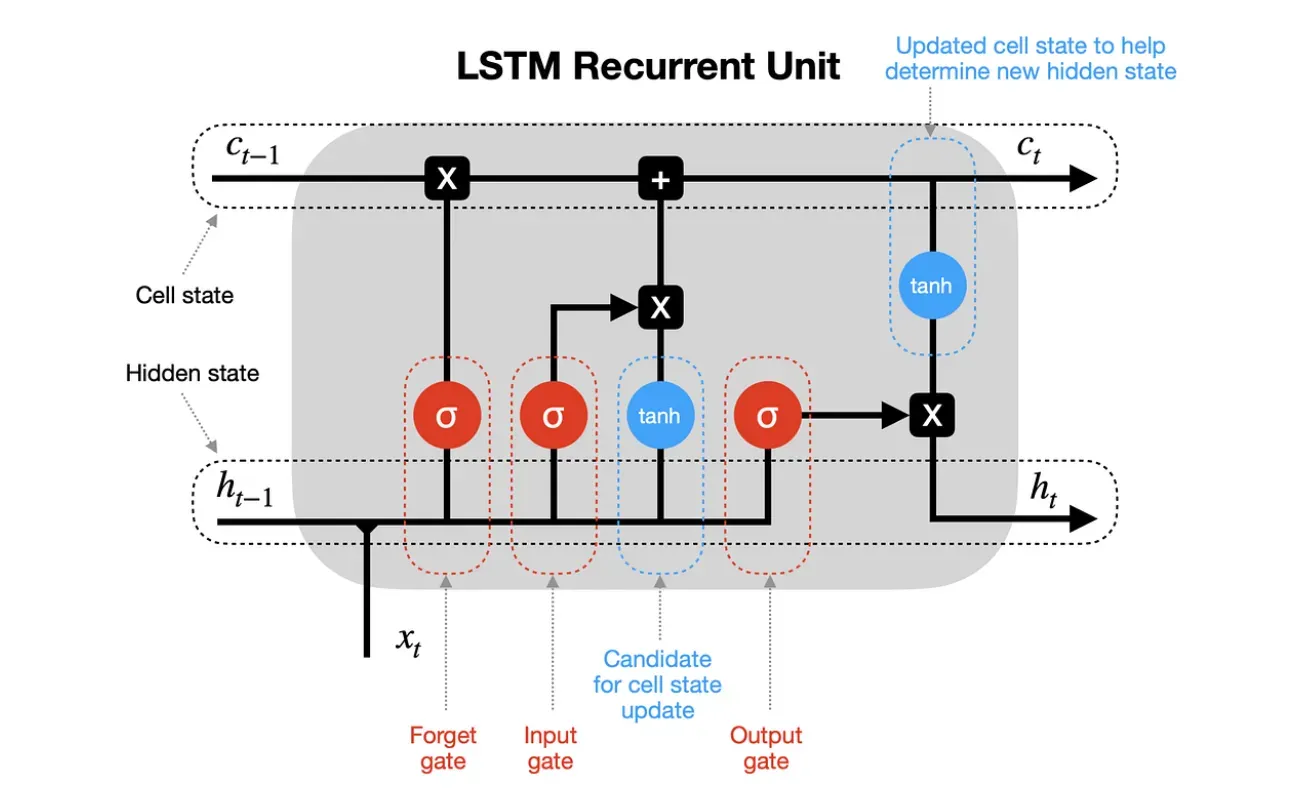

Long Short-Term Memory Units (LSTMs)

LSTMs are a specific type of RNN engineered to combat the vanishing gradient problem found in standard RNNs. They're designed to remember patterns over long durations of time.

LSTMs use 'gates' to control the flow of information into and out of memory, and they can keep important information in prolonged sequences and forget irrelevant data. This makes them particularly good at tasks such as unsegmented, connected handwriting recognition and speech recognition.
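The gating idea can be sketched with a single scalar LSTM cell. The sketch uses the widely used formulation with forget, input, and output gates plus a tanh candidate. The parameter values are hypothetical and chosen by hand to show two behaviors:

- A forget gate biased "open" lets the cell state survive 50 steps almost unchanged.
- A forget gate biased "closed" erases it almost immediately.

Real LSTMs use weight matrices and learn these values during training.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each gate name to (w, u, b): weight on the input x,
    weight on the previous hidden state, and bias.
    """
    def pre(gate):
        w, u, b = p[gate]
        return w * x + u * h_prev + b
    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    i = sigmoid(pre("input"))    # how much of the candidate to write
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cand"))   # candidate values
    c = f * c_prev + i * g       # new cell state (long-term memory)
    h = o * math.tanh(c)         # new hidden state (short-term output)
    return h, c

def run(xs, p, h=0.0, c=0.0):
    for x in xs:
        h, c = lstm_step(x, h, c, p)
    return h, c

# Hypothetical hand-set parameters: forget gate open, input gate closed.
remember = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
            "output": (0.0, 0.0, 0.0), "cand": (1.0, 0.0, 0.0)}
# Same, but with the forget gate closed.
forget = dict(remember, forget=(0.0, 0.0, -10.0))

xs = [math.sin(t) for t in range(50)]
_, c_kept = run(xs, remember, c=1.0)   # stays close to 1.0
_, c_lost = run(xs, forget, c=1.0)     # drops close to 0.0
```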

Gated Recurrent Unit (GRU)

A GRU, like an LSTM, is a variant of the standard RNN that can retain information over long periods. Unlike an LSTM, however, it has no separate cell state and uses fewer gates. That gives it fewer parameters and makes it cheaper to compute.

A GRU has only two gates: a reset gate and an update gate. The reset gate determines how much of the previous hidden state is used when computing the new candidate state. The update gate determines how much of the previous state to carry over versus replace with the candidate. GRUs often perform comparably to LSTMs while being computationally cheaper.
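The sketch below shows a scalar GRU step in one common formulation, with h_t = z·h_{t-1} + (1 − z)·h̃_t. Some texts swap the roles of z and 1 − z, and libraries differ in exactly where the reset gate is applied. The parameters are hypothetical.

The helper `param_count` illustrates the efficiency claim. It assumes one weight matrix for the input, one for the hidden state, and one bias vector per gate block. Under that assumption, a GRU with 3 blocks has three quarters the parameters of an LSTM with 4 blocks.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell; p maps 'update', 'reset', 'cand' to (w, u, b)."""
    wz, uz, bz = p["update"]
    wr, ur, br = p["reset"]
    wh, uh, bh = p["cand"]
    z = sigmoid(wz * x + uz * h_prev + bz)   # update gate: how much old state to keep
    r = sigmoid(wr * x + ur * h_prev + br)   # reset gate: how much old state feeds the candidate
    h_cand = math.tanh(wh * x + uh * (r * h_prev) + bh)
    return z * h_prev + (1.0 - z) * h_cand   # interpolate old state and candidate

def param_count(gate_blocks, n_in, n_hidden):
    """Input weights + recurrent weights + one bias vector, per gate block."""
    return gate_blocks * (n_hidden * n_in + n_hidden * n_hidden + n_hidden)

# Hypothetical parameters: update gate biased toward keeping the old state.
params = {"update": (0.0, 0.0, 10.0), "reset": (0.0, 0.0, 0.0), "cand": (1.0, 1.0, 0.0)}
h = 0.8
for x in [1.0, -1.0, 0.5, 0.2]:
    h = gru_step(x, h, params)   # h stays close to 0.8

lstm_n = param_count(4, 100, 128)   # LSTM: forget, input, output, candidate
gru_n = param_count(3, 100, 128)    # GRU: update, reset, candidate
```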

Bi-directional RNNs and LSTMs

Bi-directional RNNs and LSTMs process the input sequence in both directions using two separate hidden layers:

- One layer reads the sequence forward (start to end) and captures past context.
- The other reads it backward (end to start) and captures future context.

The representation at each position combines both, so it reflects what comes before and after that point in the sequence.

This is highly beneficial in tasks that require understanding of context from both directions, such as language translation and speech recognition.
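A minimal sketch of the bi-directional idea, assuming a toy scalar tanh RNN with hypothetical weights for both directions. One pass runs on the sequence and one on its reversal. The backward states are re-aligned and paired with the forward states at each position. Real implementations typically concatenate the two hidden vectors.

```python
import math

def rnn_states(xs, w_x, w_h, h0=0.0):
    """Hidden states of a scalar tanh RNN run left to right over xs."""
    h, out = h0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        out.append(h)
    return out

def birnn(xs, fwd=(0.8, 0.5), bwd=(0.8, 0.5)):
    """Pair each position's forward state (past context) with its backward state (future context)."""
    forward = rnn_states(xs, *fwd)
    backward = rnn_states(xs[::-1], *bwd)[::-1]   # run on the reversed sequence, then realign
    return list(zip(forward, backward))

a = birnn([1.0, 0.0, 0.0, 1.0])
b = birnn([1.0, 0.0, 0.0, -1.0])   # only the last input differs
```

Changing only the last input leaves the forward state at position 0 untouched, because it has seen only the first input. The backward state at position 0 changes, because it has seen the whole future of the sequence.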

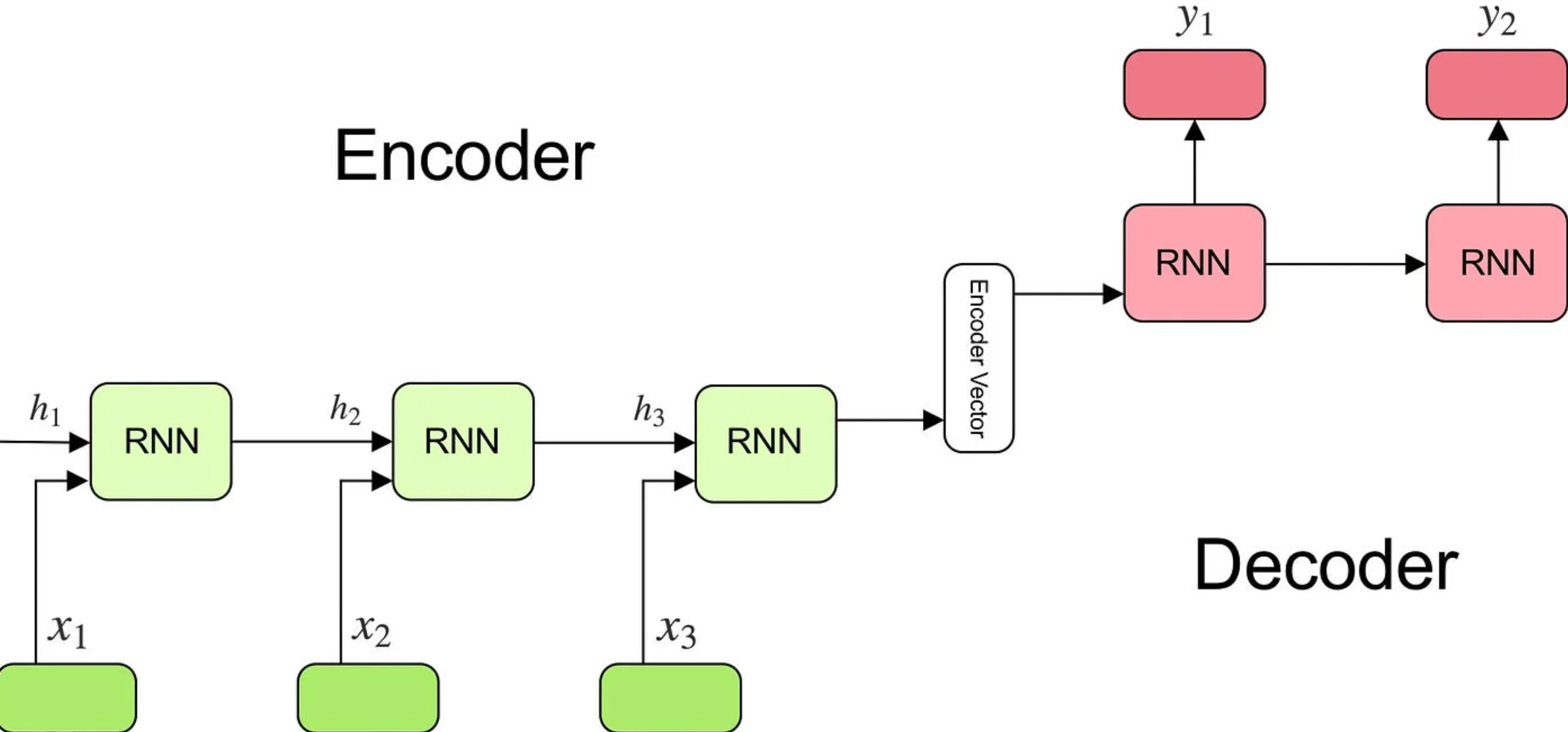

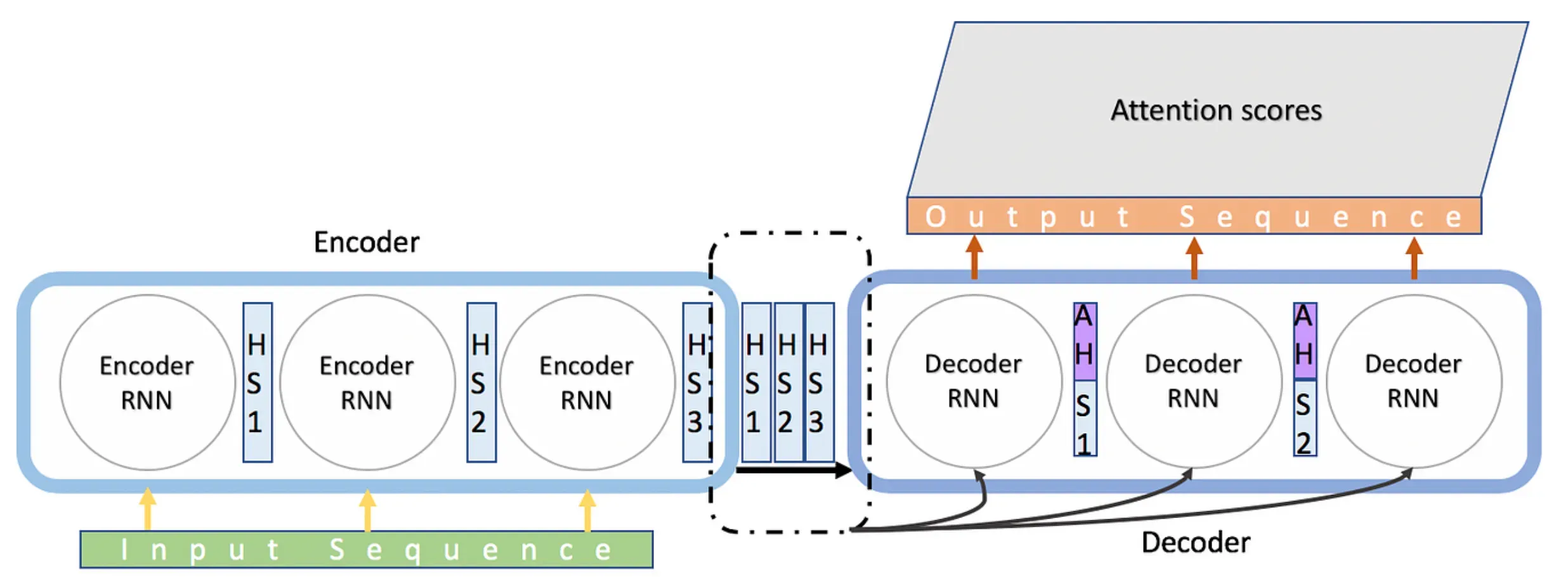

Encoder-Decoder Models

Encoder-Decoder models, often built from LSTM or GRU layers, are prevalent in tasks like machine translation, where both input and output are sequences of varying lengths.

The encoder captures context from the input sequence and encodes it into a fixed-length vector. The decoder then processes this fixed-length vector to generate a variable-length output sequence.
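The following toy sketch shows the shape of this pipeline rather than a trained translator. Every weight, the way the context initializes the decoder, and the stopping rule are hypothetical choices made for illustration.

- The encoder is a 2-unit RNN. Its final hidden state is the fixed-length context vector, whatever the input length.
- The decoder unrolls from that context and feeds each output back in.
- A small-output threshold stands in for an end-of-sequence token.

```python
import math

def encode_sequence(xs, w_x=(0.9, 0.4), w_h=(0.5, 0.7)):
    """Toy encoder: a 2-unit RNN with diagonal recurrence.
    Any-length input -> fixed-length (2-element) context vector."""
    h = [0.0, 0.0]
    for x in xs:
        h = [math.tanh(w_x[i] * x + w_h[i] * h[i]) for i in range(2)]
    return h

def decode_sequence(context, max_len=10, stop_below=0.05):
    """Toy greedy decoder: starts from the context and feeds each output back in.
    Stops when the output gets small (hypothetical stand-in for an end token)
    or when max_len is reached, so the output length depends on the context."""
    h = context[0] + context[1]   # hypothetical: collapse the context into the decoder's initial state
    y_prev, outputs = 0.0, []
    for _ in range(max_len):
        h = math.tanh(0.6 * h + 0.3 * y_prev)
        y = 0.9 * h
        if abs(y) < stop_below:
            break
        outputs.append(y)
        y_prev = y
    return outputs

short_ctx = encode_sequence([0.2])
long_ctx = encode_sequence([1.0] * 7)
out = decode_sequence(long_ctx)
```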

Transformers

Transformers are currently state-of-the-art in handling sequential data. Unlike recurrent models, they don't process a sequence one step at a time. Instead, they process all input elements in parallel using self-attention, which lets every position attend directly to every other position. Transformers led to models such as BERT, GPT, and T5, which revolutionized Natural Language Processing.
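Here is a sketch of the core operation, scaled dot-product self-attention, written in plain Python for readability. Each position produces a query, key, and value. Every query is scored against every key, the scores are divided by √d_k, and a softmax turns them into weights. Each output is a weighted sum of the values.

The identity projection matrices and the input vectors are illustrative assumptions. In a real Transformer these projections are learned, and several attention heads run in parallel.

```python
import math

def softmax(scores):
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, Wq, Wk, Wv):
    """Scaled dot-product self-attention over a whole sequence at once.
    X: list of T token vectors (length d_model); Wq, Wk, Wv: d_model x d_k matrices."""
    def project(x, W):
        return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]
    Q = [project(x, Wq) for x in X]
    K = [project(x, Wk) for x in X]
    V = [project(x, Wv) for x in X]
    d_k = len(K[0])
    out, weights = [], []
    for q in Q:                              # every position attends to every position
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        out.append([sum(w[t] * V[t][j] for t in range(len(V))) for j in range(len(V[0]))])
    return out, weights

I = [[1.0, 0.0], [0.0, 1.0]]                 # identity projections, purely for illustration
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(X, I, I, I)
```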

Each of these Sequence Models has unique strengths and weaknesses, and the choice depends on the specific task at hand, computational resources, and the nature of the data.

Implementation Guide for Sequence Models

Sequence models have transformed language processing and understanding tasks. Follow this brief guide to explore key steps in implementing sequence models effectively.

Choose the Right Model

Select an appropriate sequence model for your task, such as RNNs, LSTMs, or GRUs. These models have varying capabilities and complexity, making it essential to pick one tailored to your specific problem.

Prepare the Dataset

Gather a dataset relevant to your task, ensuring it's large enough for training the model. Preprocess the data by tokenizing it, converting tokens into vectors, and organizing the data into input-output pairs.
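For a next-word prediction task, the preprocessing step might look like the sketch below. Several simplifications are assumptions for illustration:

- Text is tokenized by lowercasing and splitting on whitespace.
- Tokens are mapped to integer ids with reserved `<pad>` and `<unk>` entries.
- Each training pair uses a fixed context of 3 tokens.

Real projects usually use a proper tokenizer. The integer ids would then be turned into vectors by an embedding layer in the model.

```python
def build_vocab(texts, specials=("<pad>", "<unk>")):
    """Map each token to an integer id; special tokens get the first ids."""
    vocab = {tok: i for i, tok in enumerate(specials)}
    for text in texts:
        for tok in text.lower().split():          # naive whitespace tokenization
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode_text(text, vocab):
    """Convert text to ids, mapping unseen tokens to <unk>."""
    unk = vocab["<unk>"]
    return [vocab.get(tok, unk) for tok in text.lower().split()]

def next_word_pairs(ids, context=3):
    """(previous `context` token ids, next token id) input-output pairs."""
    return [(ids[i - context:i], ids[i]) for i in range(context, len(ids))]

corpus = ["the cat sat on the mat", "the dog sat on the rug"]
vocab = build_vocab(corpus)
ids = encode_text("the cat sat on the rug", vocab)
pairs = next_word_pairs(ids, context=3)
```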

Split the Data

Split the data into training, validation, and testing sets. An 80-10-10 or 70-15-15 split is common, with the largest share reserved for training.
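A simple split helper might look like the sketch below. The function name and its defaults are illustrative. One design choice is worth noting: shuffling suits independent examples such as separate reviews. For time series, a chronological split (`shuffle=False`) keeps later observations out of the training set.

```python
import random

def split_dataset(items, train=0.8, val=0.1, shuffle=True, seed=0):
    """Split into train/validation/test sets; the test set gets the remainder.
    Use shuffle=False for time series so the split follows chronological order."""
    items = list(items)
    if shuffle:
        random.Random(seed).shuffle(items)   # fixed seed for a reproducible split
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

tr, va, te = split_dataset(range(100))                                     # 80-10-10, shuffled
tr_ts, va_ts, te_ts = split_dataset(range(100), 0.7, 0.15, shuffle=False)  # 70-15-15, chronological
```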

Model Architecture and Training

Build the model architecture, defining layers and configuring hyperparameters. Compile the model with suitable loss functions, optimizers, and performance metrics before training it on the dataset.

Evaluate and Fine-tune the Model

Evaluate your model's performance on the validation set. Look for patterns in errors and focus on hyperparameter tuning, regularization, or altering the model architecture to enhance performance.

Test and Deploy

Test the model on the testing dataset to ensure it generalizes well. Once satisfied with the results, deploy the model for real-world applications, and monitor its performance for continuous improvement.

Pros and Cons of Sequence Models

Like any tool, sequence models have their own set of pros and cons. Let's take a look at both sides of the coin:

Pros of Sequence Models

Ability to Handle Sequential Data

Sequence models efficiently deal with sequential input data, preserving temporal dynamics that traditional models often fail to capture.

Applicability Across Domains

These models are highly versatile and can be used in a wide array of applications, including natural language processing, sentiment analysis, speech recognition, and time series prediction.

Variable Input and Output Sizes

Sequence models can handle varying lengths of input and output sequences, making them flexible and adaptable.
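A recurrent model can step through a sequence of any length. When training in mini-batches, however, sequences are commonly padded to a shared length. A mask is kept alongside so the model can ignore the padded positions. The sketch below assumes right-padding with id 0. Libraries differ in their defaults, for example padding before or after the sequence.

```python
def pad_batch(seqs, pad_id=0, max_len=None):
    """Right-pad (and truncate) id sequences to a common length.
    Returns the padded batch and a mask: 1 for real tokens, 0 for padding."""
    if max_len is None:
        max_len = max(len(s) for s in seqs)
    padded, mask = [], []
    for s in seqs:
        s = s[:max_len]                        # truncate sequences that are too long
        n_pad = max_len - len(s)
        padded.append(s + [pad_id] * n_pad)
        mask.append([1] * len(s) + [0] * n_pad)
    return padded, mask

batch, mask = pad_batch([[5, 3, 9], [7], [2, 4]])
```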

Cons of Sequence Models

Difficulty in Capturing Long-Term Dependencies

In theory, sequence models (especially RNNs) can capture long-term dependencies, but in practice they often struggle because of the vanishing gradient problem. LSTM and GRU architectures mitigate this issue but do not fully eliminate it.
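The vanishing gradient can be seen directly in a scalar tanh RNN, h_t = tanh(w·h_{t−1} + u·x_t). By the chain rule, ∂h_T/∂h_0 is the product over all steps of w·(1 − h_t²). Each factor here is well below 1, so the product shrinks exponentially with sequence length. The weight and input values are arbitrary illustrative choices.

```python
import math

def grad_through_time(w, xs, u=1.0, h0=0.0):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w*h_{t-1} + u*x_t),
    computed by the chain rule as the product of w * (1 - h_t**2) over all steps."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)   # local derivative d h_t / d h_{t-1}
    return grad

xs = [0.5] * 50
g10 = grad_through_time(0.9, xs[:10])   # gradient across 10 steps
g50 = grad_through_time(0.9, xs)        # gradient across 50 steps: vanishingly small
```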

Time and Computational Intensity

Training sequence models, particularly on long sequences, can be time-consuming and computationally intense.

Overfitting Risks

Sequence models have a tendency to overfit, particularly in cases with limited data or highly complex architectures.

Choosing a sequence model should be guided by a careful analysis of the benefits it can provide, the resources available, and the trade-offs you're willing to make. No model is a universal solution; the right choice depends on your requirements and constraints.

Examples of Sequence Models

To get a better grasp of sequential modeling in action, here are a few examples:

Sentiment Analysis: Using sequence models to analyze the sentiment of text reviews, enabling businesses to gauge customer opinions effectively.

Machine Translation: Employing sequence models to translate text from one language to another, revolutionizing communication and breaking down language barriers.

Stock Market Prediction: Utilizing sequence models to predict future stock prices based on historical data, aiding investors in making informed decisions.
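Forecasting tasks like stock market prediction are usually framed as supervised learning with a sliding window. The previous `window` values form the input, and the value `horizon` steps ahead is the target. The sketch below shows only this data framing, not a model. The price values are made up for illustration.

```python
def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (past `window` values, value `horizon` steps ahead) pairs."""
    pairs = []
    for end in range(window, len(series) - horizon + 1):
        pairs.append((series[end - window:end], series[end + horizon - 1]))
    return pairs

prices = [10.0, 10.5, 10.2, 10.8, 11.0, 11.3]   # made-up values, for illustration only
pairs = make_windows(prices, window=3)          # 3-step history -> next value
pairs_2ahead = make_windows(prices, window=3, horizon=2)
```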

Frequently Asked Questions (FAQs)

What is a sequence model and how does it work?

A sequence model is a statistical model that handles sequential data. It considers the order of inputs to generate predictions, making it suitable for tasks like language modeling and time-series analysis.

What are the advantages of using sequence models?

Sequence models are effective in capturing temporal dependencies in data, making them useful for tasks like natural language processing and speech recognition. They can provide valuable insights and make accurate predictions based on sequential patterns.

What are the different types of sequence models?

Common types of sequence models include Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), Gated Recurrent Units (GRUs), and Transformers. One-dimensional Convolutional Neural Networks (CNNs) can also be used to model sequences.

How can I implement a sequence model?

To implement a sequence model, you can combine several kinds of layers:

- Embedding layers convert categorical or textual data into dense vector representations.
- Recurrent layers capture information from previous inputs.
- Convolutional layers capture local patterns in the sequence.

What are the pros and cons of using sequence models?

Some pros of using sequence models include their effectiveness in understanding sequential data and capturing temporal dependencies. However, they can be computationally expensive, may struggle with long sequences, and require a large amount of labeled data.