What is Sequence Modelling?

Sequence modelling is a family of machine learning techniques that use algorithms to predict or classify sequences of observed data points. At its core, sequence modelling is about exploiting the sequential information within data, where the position and order of the inputs have a meaningful impact on the outcome.

Use Cases of Sequence Modelling

This technique is widely applied in areas such as natural language processing, speech recognition, video recognition, and financial forecasting. You'll find it at work behind your voice assistant, in genre prediction on video platforms, and in attempts to forecast stock market trends.

Importance of Sequence Modelling

Sequence modelling is all about context: what an element means often depends on the elements around it. The ability to use that context is what makes it valuable across diverse sectors, helping everything from language translation services to healthcare providers make better, more informed decisions.

Types of Data in Sequence Modelling

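In sequence modelling, data is commonly either univariate or multivariate. Univariate data is a single sequence of observations, with one value per time step, while multivariate data is a sequence of tuples, with several variables recorded at each time step.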
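To make the distinction concrete, here is a minimal sketch in Python. The readings are made-up values, and `describe` is a hypothetical helper introduced only for this illustration.

```python
# Univariate: one value per time step (e.g. a hypothetical daily price).
univariate = [101.2, 102.5, 101.9, 103.4]

# Multivariate: a tuple of values per time step
# (hypothetical price, traded volume, and temperature readings).
multivariate = [
    (101.2, 5300, 18.5),
    (102.5, 4900, 19.1),
    (101.9, 6100, 17.8),
    (103.4, 5800, 18.2),
]


def describe(sequence):
    """Return (number of time steps, number of variables per step)."""
    first = sequence[0]
    width = len(first) if isinstance(first, tuple) else 1
    return len(sequence), width


print(describe(univariate))    # (4, 1)
print(describe(multivariate))  # (4, 3)
```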

Key Concepts in Sequence Modelling

Now, let's dive into the conceptual underpinnings of sequence modelling and hash out some of the main terms you will come across.

Sequence and Sequence Dependency

In sequence modelling, a sequence is an ordered list of numbers or events in which repetition is allowed. Sequence dependency describes how these numbers or events influence each other across the sequence.

Stateful and Stateless Models

In sequence modelling, models can be stateful or stateless. Stateless models process each sequence in a dataset independently, resetting their internal state every time, whereas stateful models carry their internal state over from one sequence to the next.
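As a rough illustration of this difference, the sketch below uses a toy recurrence as a stand-in for a real model. The function name `run_batches` and the decay factor 0.5 are assumptions made for the example, not part of any library.

```python
def run_batches(batches, stateful):
    """Process several sequences with a toy recurrence state = 0.5 * state + x.

    If `stateful` is False, the state is reset before every sequence.
    If `stateful` is True, the state left by one sequence seeds the next.
    Returns the final state after each sequence.
    """
    state = 0.0
    final_states = []
    for batch in batches:
        if not stateful:
            state = 0.0  # forget everything from the previous sequence
        for x in batch:
            state = 0.5 * state + x
        final_states.append(state)
    return final_states


batches = [[1.0, 1.0], [1.0, 1.0]]
print(run_batches(batches, stateful=False))  # [1.5, 1.5]
print(run_batches(batches, stateful=True))   # [1.5, 1.875]
```

The two sequences are identical, so the stateless run gives the same result twice, while the stateful run differs because the second sequence starts from the state left by the first.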

Time Step

A time step represents a single point in time within a sequence, often corresponding to a single observation within the sequence.

Hidden State

The hidden state of a model is a representation of the information which the model has remembered from past inputs, stored for use in future predictions.

Types of Sequence Modelling Algorithms

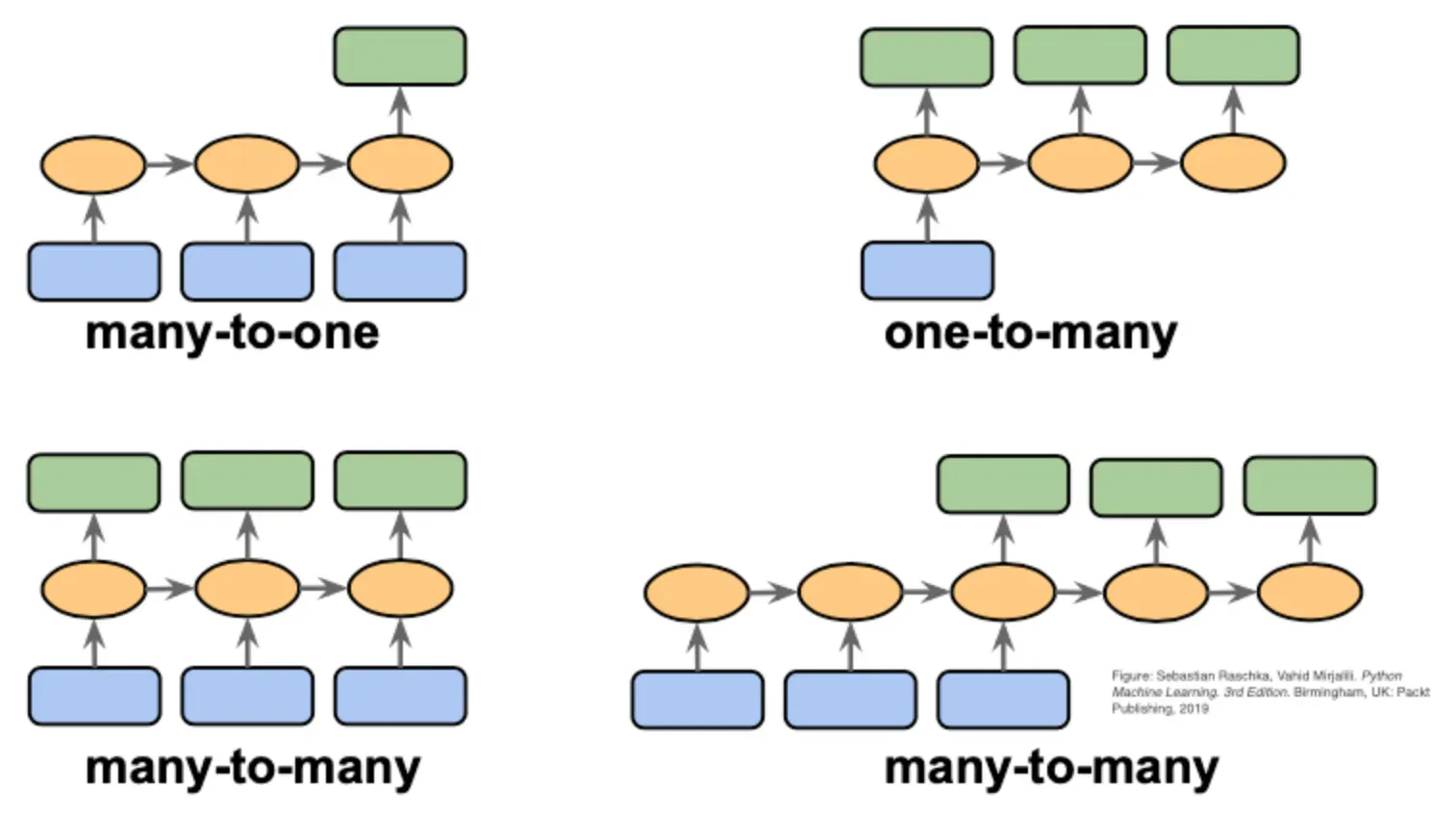

There are a variety of algorithms used in sequence modelling, each with its unique strengths and applications.

Recurrent Neural Networks (RNNs)

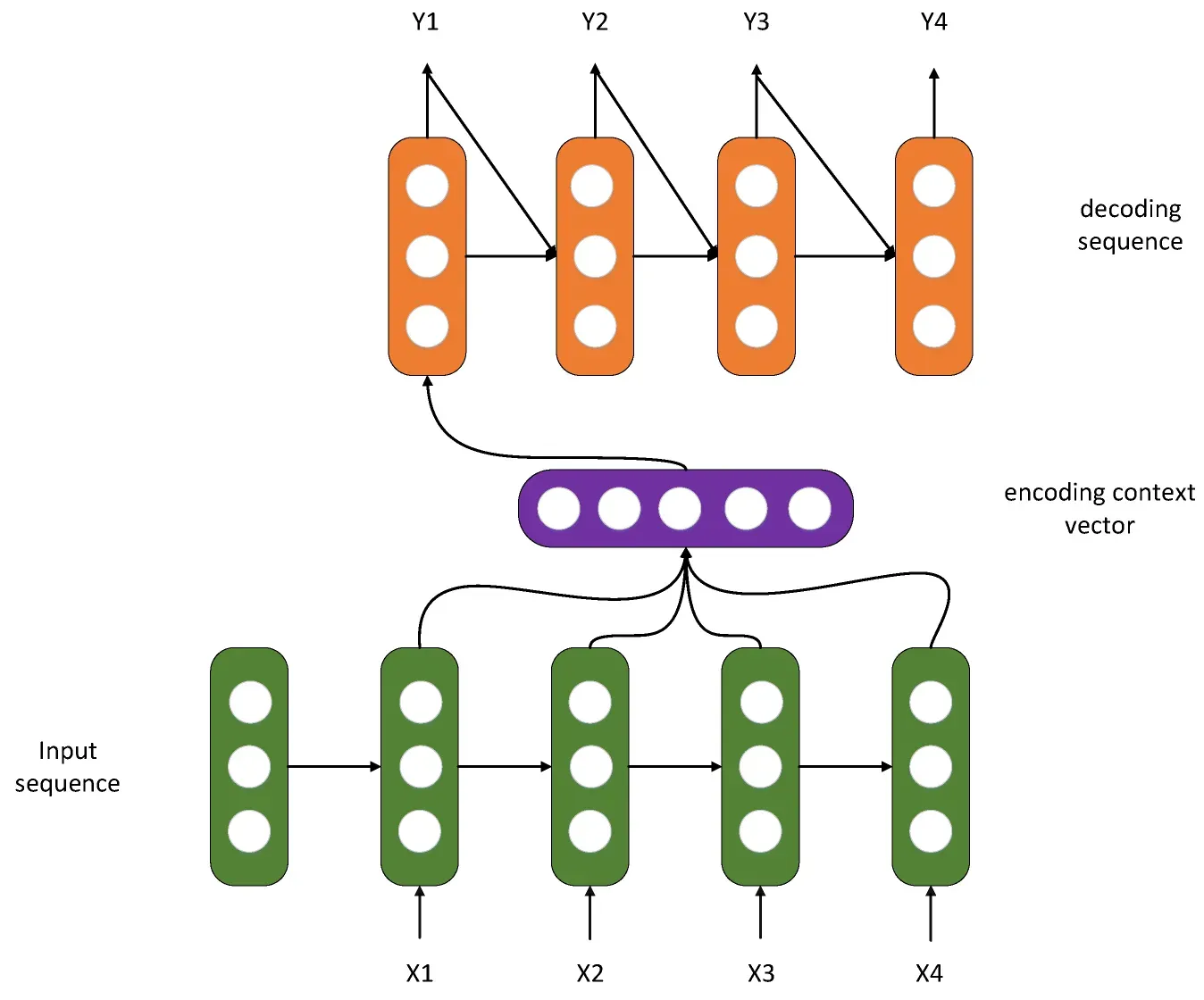

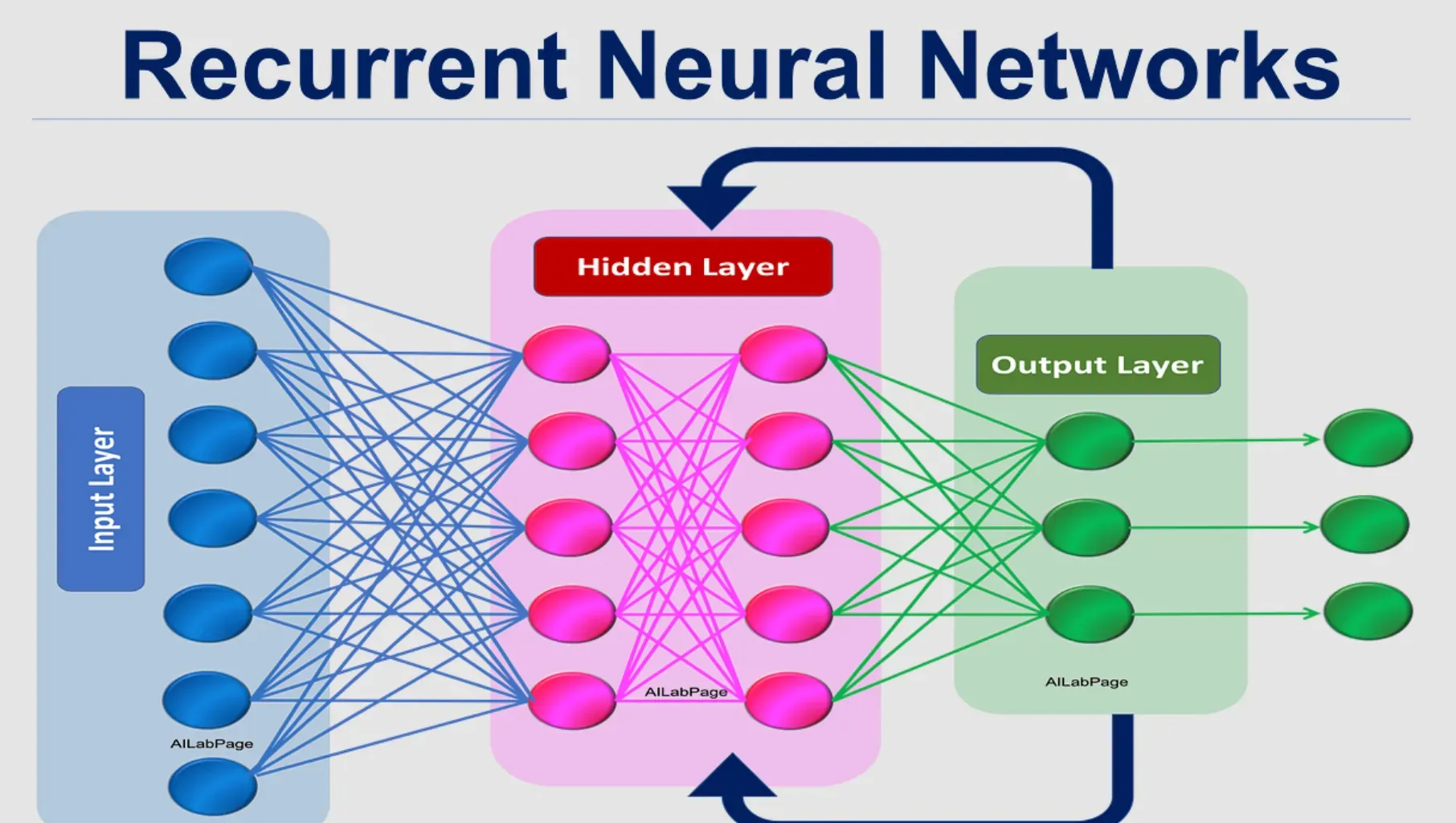

RNNs are designed for handling sequential data. They maintain a hidden state from time step to time step, allowing them to capture temporal dependencies in the data.
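The hidden-state update described above can be sketched in plain Python. This is a minimal vanilla (Elman-style) RNN forward pass with hand-picked weights; the names `rnn_forward`, `w_xh`, `w_hh`, and `b_h` are assumptions for the illustration, and a real model would learn the weights during training.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h, h0):
    """Run a vanilla RNN over a sequence and return the hidden state at each step.

    inputs: list of input vectors, one per time step
    w_xh:   hidden_size x input_size weight matrix (input -> hidden)
    w_hh:   hidden_size x hidden_size weight matrix (hidden -> hidden)
    b_h:    hidden bias vector
    h0:     initial hidden state
    """
    h = list(h0)
    states = []
    for x in inputs:
        new_h = []
        for i in range(len(h)):
            total = b_h[i]
            total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
            total += sum(w_hh[i][k] * h[k] for k in range(len(h)))
            new_h.append(math.tanh(total))
        h = new_h  # the new state is carried to the next time step
        states.append(h)
    return states


w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]
h0 = [0.0, 0.0]

forward = rnn_forward([[1.0], [0.0], [0.0]], w_xh, w_hh, b_h, h0)
backward = rnn_forward([[0.0], [0.0], [1.0]], w_xh, w_hh, b_h, h0)
```

The same inputs presented in a different order lead to a different final hidden state, which is the sense in which the model captures temporal dependencies.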

Long Short-Term Memory (LSTM)

LSTMs are a type of RNN designed to capture long-term dependencies. They introduce a memory cell, controlled by gates, that can retain information over long periods of time.
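A single LSTM step can be sketched as follows. This is a scalar version (one input, one hidden unit) of the commonly used LSTM equations, written for readability rather than speed; the parameter names in the dictionary `p` are assumptions for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. Returns (new hidden state, new cell state)."""
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    c = f * c_prev + i * g  # keep part of the old memory, add part of the new
    h = o * math.tanh(c)    # expose a gated view of the memory cell
    return h, c


# All parameters zero except two biases chosen to show the "remember" behaviour:
# forget gate close to 1 (keep memory), input gate close to 0 (ignore new input).
names = ["w_f", "u_f", "b_f", "w_i", "u_i", "b_i",
         "w_o", "u_o", "b_o", "w_g", "u_g", "b_g"]
params = dict.fromkeys(names, 0.0)
params["b_f"] = 20.0
params["b_i"] = -20.0

h_new, c_new = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, p=params)
```

With these hand-picked biases the cell state passes through the step almost unchanged, which is the mechanism that lets an LSTM hold on to information across many time steps.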

Gated Recurrent Units (GRUs)

A variation of RNNs, GRUs are simpler than LSTMs and use gating mechanisms to mitigate the vanishing gradient problem.
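For comparison, here is a scalar sketch of one GRU step. Conventions for the update gate differ between papers and libraries; this example assumes the form in which an update gate near 1 keeps the previous state. The parameter names are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell. Returns the new hidden state."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate controls how much of the past is used.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # Assumed convention: z near 1 keeps the old state, z near 0 replaces it.
    return z * h_prev + (1.0 - z) * h_cand


names = ["w_z", "u_z", "b_z", "w_r", "u_r", "b_r", "w_h", "u_h", "b_h"]
base = dict.fromkeys(names, 0.0)
base["w_h"] = 1.0

keep = dict(base, b_z=20.0)      # update gate close to 1
replace = dict(base, b_z=-20.0)  # update gate close to 0

h_keep = gru_step(x=0.5, h_prev=0.3, p=keep)
h_replace = gru_step(x=0.5, h_prev=0.3, p=replace)
```

A GRU has no separate memory cell and only two gates, which is why it is usually described as simpler than an LSTM.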

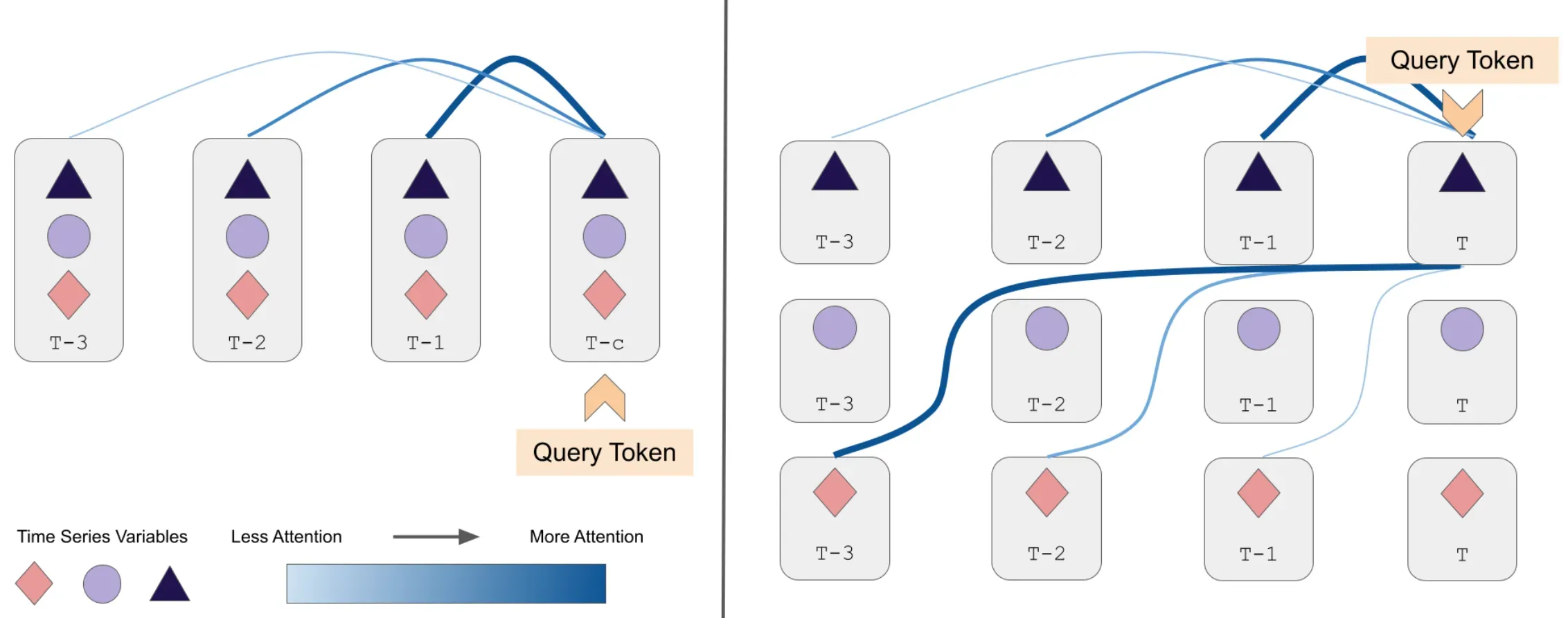

Transformers

Transformers are models that use self-attention to weigh the importance of different parts of a sequence when making predictions.
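The weighing step can be illustrated with scaled dot-product self-attention. The sketch below is deliberately simplified: it assumes the queries, keys, and values are the input vectors themselves, whereas a real Transformer applies learned projections and uses several attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned weights).

    x: list of vectors, one per position in the sequence.
    Returns (outputs, weights), where weights[i][t] is how much
    position i attends to position t.
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[t] * x[t][j] for t in range(len(x))) for j in range(d)])
    return outputs, weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(x)
```

In this toy input the first and third vectors are identical, so the first position attends to them equally and more strongly than to the second.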

Sequence Modelling Constructs and Mechanisms

Now, let's get to grips with the foundational building blocks and key mechanisms that sequence modelling algorithms use to process data.

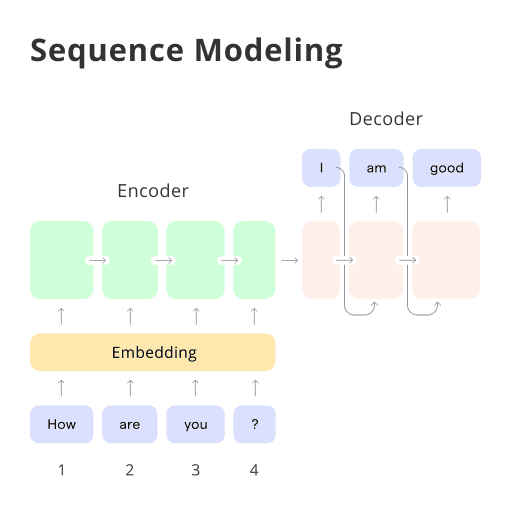

Embedding Layer

The embedding layer is typically the first layer of a model. It maps each element of the input sequence, such as a word or token, to a dense vector of fixed size that is more suitable as input to a neural network.
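Conceptually, an embedding layer is a lookup table from tokens to vectors. The sketch below assumes a tiny hypothetical vocabulary and random initial values; in a real model the vectors are learned during training, and `build_embedding` and `embed` are names made up for this example.

```python
import random


def build_embedding(vocab, dim, seed=0):
    """Create a lookup table with one small random vector per token."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for tok in vocab}


def embed(tokens, table, unk="<unk>"):
    """Map each token to its vector; unknown tokens share the <unk> vector."""
    return [table.get(tok, table[unk]) for tok in tokens]


vocab = ["<unk>", "the", "cat", "sat"]
table = build_embedding(vocab, dim=4)
vectors = embed(["the", "cat", "zebra"], table)
```

Every token, whatever its length as text, becomes a vector of the same fixed size, and the out-of-vocabulary word falls back to the `<unk>` entry.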

Activation Function

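An activation function is a mathematical function applied to a neuron's weighted input to produce its output. It determines whether, and how strongly, the neuron is activated, and it introduces the non-linearity that lets a network learn complex patterns.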
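Three activation functions that appear frequently in sequence models are sketched below. The selection is illustrative rather than exhaustive.

```python
import math


def sigmoid(z):
    """Squash z into (0, 1); written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    """Squash z into (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)


for z in (-2.0, 0.0, 3.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

Sigmoid is commonly used for gates because its output can be read as "how much to let through", while tanh is commonly used for hidden states and candidate values.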

Loss Function

A loss function measures how well your model's predictions match the target values. The function's output is used during the training phase to adjust the model's weights.

Backpropagation Through Time (BPTT)

BPTT is a technique used to train recurrent neural networks on sequence data. It extends standard backpropagation by unrolling the network across the time steps of a sequence and propagating gradients backwards through every step.
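A minimal sketch of BPTT for a scalar RNN follows. It assumes the recurrence h_t = tanh(w * h_{t-1} + u * x_t) with a squared-error loss on the final hidden state only; the function names and the numeric values are assumptions chosen for the example.

```python
import math


def forward(xs, w, u):
    """Return the hidden states h_0 .. h_T for h_t = tanh(w*h_{t-1} + u*x_t)."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss(xs, y, w, u):
    """Squared error between the final hidden state and the target y."""
    return 0.5 * (forward(xs, w, u)[-1] - y) ** 2


def bptt_grads(xs, y, w, u):
    """Gradients of the loss with respect to w and u, computed by BPTT."""
    hs = forward(xs, w, u)
    dh = hs[-1] - y  # gradient of the loss w.r.t. the final hidden state
    dw = du = 0.0
    for t in range(len(xs), 0, -1):      # walk backwards through time
        dz = dh * (1.0 - hs[t] ** 2)     # back through tanh
        dw += dz * hs[t - 1]             # same w is reused at every step
        du += dz * xs[t - 1]             # same u is reused at every step
        dh = dz * w                      # pass the gradient to h_{t-1}
    return dw, du


xs, y, w, u = [0.5, -0.3, 0.8], 0.2, 0.7, 1.1
dw, du = bptt_grads(xs, y, w, u)

# Sanity check against a numerical (finite-difference) gradient.
eps = 1e-6
dw_numeric = (loss(xs, y, w + eps, u) - loss(xs, y, w - eps, u)) / (2 * eps)
du_numeric = (loss(xs, y, w, u + eps) - loss(xs, y, w, u - eps)) / (2 * eps)
```

Because the same weights are shared across all time steps, their gradients are accumulated over the whole unrolled sequence.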

Sequence Modelling Challenges

No technique is without its hurdles. Here are some unique challenges you may encounter in the realm of sequence modelling.

Vanishing and Exploding Gradients

In the training phase of deep networks, the gradients often get smaller (vanish) or larger (explode) as they are backpropagated through time, leading to slow learning or unstable training.
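The effect is easy to see in a simplified setting. The sketch below assumes a linear recurrence h_t = w * h_{t-1}, for which a gradient flowing back through T steps is multiplied by w each time; real networks also include activation derivatives, so this is an idealized illustration.

```python
def gradient_scale(w, steps):
    """Factor applied to a gradient after flowing back through `steps` steps
    of the linear recurrence h_t = w * h_{t-1}."""
    scale = 1.0
    for _ in range(steps):
        scale *= w
    return scale


vanishing = gradient_scale(0.5, 50)  # |w| < 1: shrinks towards zero
exploding = gradient_scale(1.5, 50)  # |w| > 1: grows without bound
print(vanishing, exploding)
```

After only 50 steps the first factor is far below any useful magnitude and the second is in the hundreds of millions, which is why long sequences are hard for plain RNNs.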

Sequential Dependencies and Temporal Distortions

Sequence models must recognize patterns that span many time steps, which is particularly challenging for long sequences.

Resource Constraints

Training sequence models, especially on large datasets, can be resource-intensive in terms of computation and memory.

Overfitting

This happens when your sequence model is too complex and ends up learning not just the underlying patterns but also the noise in the data, leading to poor generalization.

Sequence Modelling in Natural Language Processing (NLP)

Sequence modelling plays a crucial role in NLP. Let's explore how it's used in this area.

Language Modelling

This involves predicting the next word in a sequence, a fundamental task in NLP which serves as the basis for tasks like text generation and machine translation.
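The simplest form of next-word prediction is a bigram model, which counts which word tends to follow which. This is a toy sketch on a made-up corpus, far simpler than the neural models discussed in this article; `train_bigram` and `predict_next` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "dog"))  # None
```

A bigram model only looks one word back; RNNs and Transformers address the same task while conditioning on much longer context.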

Text Classification

Sequence modelling is also used for classifying text into predefined categories. One example is sentiment analysis, where a text (a sequence of words) is classified as positive, negative, or neutral.

Named Entity Recognition

Named Entity Recognition (NER), an information extraction task, uses sequence modelling to locate entities in text and classify them into predefined categories such as person names, organizations, and locations.

Text Summarization

Sequence modelling can aid with automatic text summarization by understanding the sequence of words and generating a shorter version of the text that retains the original meaning.

Sequence Modelling in Time Series Analysis

Beyond NLP, sequence modelling also has important applications in time series analysis.

Forecasting

Sequence models are used for predicting future values of a time series based on historical data. For example, they can be used to forecast future stock prices, weather, or electricity demand.
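Before a sequence model can be trained for forecasting, the series is usually cut into (history, next value) pairs. The sketch below shows that windowing step together with a naive moving-average forecast that can serve as a baseline; both function names and the sample series are assumptions for this example.

```python
def make_windows(series, window):
    """Split a series into (input window, next value) training pairs."""
    pairs = []
    for i in range(len(series) - window):
        pairs.append((series[i:i + window], series[i + window]))
    return pairs


def moving_average_forecast(series, window):
    """Naive baseline: predict the next value as the mean of the last `window` values."""
    recent = series[-window:]
    return sum(recent) / len(recent)


series = [10, 12, 14, 16, 18]
pairs = make_windows(series, window=3)
print(pairs)                               # [([10, 12, 14], 16), ([12, 14, 16], 18)]
print(moving_average_forecast(series, 3))  # 16.0
```

Note that the moving average lags behind a steadily rising series, which is one reason learned sequence models are preferred when the data has trends or seasonality.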

Anomaly Detection

In the context of time series, sequence models can detect unusual patterns or behaviors, which are considered anomalies. This has applications in various domains like cybersecurity, healthcare and finance.
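One simple way to flag unusual points is to compare each value with the recent history using a z-score. This is a statistical baseline rather than a learned sequence model, and the window size, threshold, and sample data are assumptions for the illustration.

```python
import statistics


def find_anomalies(series, window, threshold=3.0):
    """Return indices whose value is more than `threshold` standard deviations
    away from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mean = statistics.mean(history)
        std = statistics.pstdev(history)
        if std == 0:
            continue  # flat history: the z-score is undefined, skip this point
        if abs(series[i] - mean) / std > threshold:
            anomalies.append(i)
    return anomalies


readings = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
print(find_anomalies(readings, window=5))  # [6]
```

A learned sequence model follows the same idea, but replaces the rolling mean with its own prediction and flags points where the prediction error is unusually large.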

Trend Analysis

Understanding and predicting trends is another application of sequence modelling in time series analysis, one that supports strategic business decisions.

Sequence Labeling

Sequence labeling assigns a label to each individual element of a sequence. In bioinformatics, for example, it can be used to predict a structural class for each amino acid in a protein sequence.

Evaluation Metrics for Sequence Modelling

We evaluate sequence models based on certain metrics. Let's dive into those.

Accuracy

One of the most common metrics, accuracy is the fraction of predictions that the model gets right: the number of correct predictions divided by the total number of predictions.

Precision and Recall

Precision is the number of true positives divided by the number of all predicted positives. Recall, on the other hand, is the number of true positives divided by the number of all actual positive cases.

F1 Score

F1 Score is the harmonic mean of Precision and Recall and provides a balance between them. It's especially useful in situations where the data might be imbalanced.
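The metrics above can be computed directly from counts of true positives, false positives, and false negatives. The sketch below assumes binary labels and uses made-up predictions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) for the given positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

acc = accuracy(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(acc, precision, recall, f1)
```

Here there are 3 true positives, 2 false positives, and 1 false negative, giving an accuracy of 0.625, a precision of 0.6, a recall of 0.75, and an F1 score of about 0.667.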

Loss

In sequence modelling, we want to minimize the loss function to improve the model's performance. Commonly used loss functions include Mean Squared Error for regression problems and Cross-entropy for classification.
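Both loss functions are short enough to write out by hand. The sketch below assumes a list of numeric predictions for Mean Squared Error and a single predicted probability distribution with one correct class for cross-entropy.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_entropy(probs, target_index, eps=1e-12):
    """Negative log of the probability assigned to the correct class.

    `eps` guards against taking log(0) when that probability is zero.
    """
    return -math.log(max(probs[target_index], eps))


mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
ce = cross_entropy([0.25, 0.5, 0.25], target_index=1)
print(mse, ce)
```

The first example is off by 2 on one of three values, so the MSE is 4/3. In the second, the model gives the correct class a probability of 0.5, so the cross-entropy is ln 2, roughly 0.693; a perfectly confident correct prediction would give a loss of 0.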

Future of Sequence Modelling

Finally, let's discuss the way forward for sequence modelling.

AutoML for Sequence Modelling

The future may lean towards automatic design of machine learning models, or AutoML, making sequence modelling more accessible for non-experts.

Integration into Business Processes

As sequence modelling becomes more accessible and interpretable, we may see it increasingly integrated into business decision-making processes.

Large-Scale Deployment

Next-generation hardware and software may overcome current limitations, making it possible to deploy large-scale sequence models in real-time scenarios across industries.

Ethics and Fairness

Growing awareness of the ethical implications of AI and ML suggests a future where there will be more emphasis on ensuring fairness, transparency, and interpretability in sequence models.

Frequently Asked Questions (FAQs)

What is Sequence Modelling?

Sequence modelling refers to the use of statistical modelling techniques to predict the next value(s) in an ordered set of data. It's primarily used in fields like natural language processing, handwriting recognition, and speech recognition.

How are RNNs used in Sequence Modelling?

Recurrent Neural Networks (RNNs) are often used in sequence modelling because their hidden state lets them "remember" previous inputs in the sequence, which allows them to model temporal dependencies.

What is Long Short-Term Memory (LSTM)?

Long Short-Term Memory (LSTM) is a special kind of RNN that can learn long-term dependencies, which makes it well suited to sequence prediction problems. It achieves this by using gates that preserve "important" information in its cell state and forget "less useful" information.

How are Sequence Models different from traditional Machine Learning Models?

Traditional machine learning models typically treat each input independently and do not maintain any state from one prediction to the next, whereas sequence models maintain a "state" from one step to the next, allowing them to use the "context" in sequence data.

What are some practical applications of Sequence Modelling?

Sequence modelling is used in a variety of applications including, but not limited to, speech recognition, language translation, stock prediction, music generation, and DNA sequence analysis.