Deep learning has become an increasingly popular area of machine learning thanks to its ability to learn complex patterns directly from data. One of its key steps is model training, which involves optimizing a neural network's parameters so that it makes accurate predictions.

Model training is a fundamental process that empowers neural networks to learn and make predictions. It's a complex but crucial step in building AI systems.

According to a report by CrowdFlower, over 75% of the time spent on AI projects is typically dedicated to data preparation and model training.

Mastering model training can unlock the full potential of your AI systems. It's the key to transforming raw data into intelligent insights, enabling machines to learn, adapt, and make decisions that rival human capabilities.

In this blog post, we will explore the concept of model training in deep learning and its main aspects: data, architecture, loss functions, regularization, hyperparameters, and evaluation.

So let's get started!

What is Model Training?

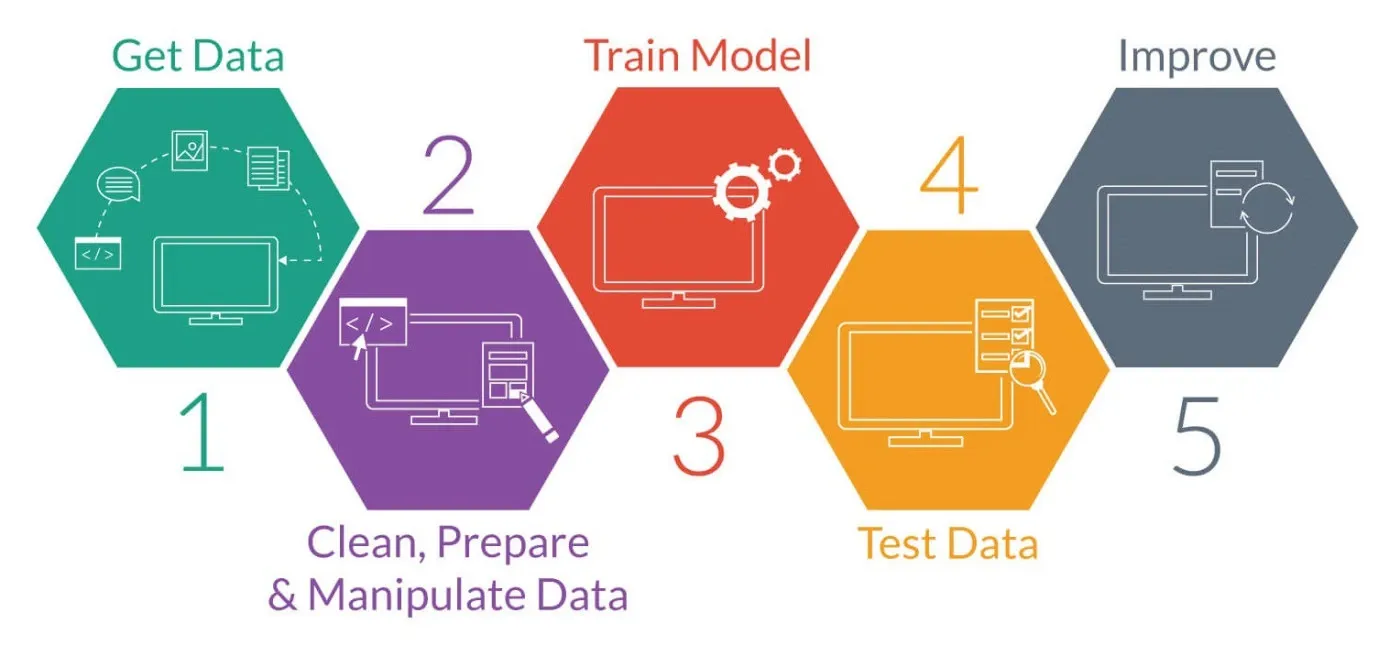

Model training is the process of teaching a deep learning model to make accurate predictions on new, unseen data.

It involves providing the model with a large dataset and a set of labels or targets and then optimizing its parameters to minimize the difference between its predictions and the true labels.
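Because the goal is good predictions on unseen data, a common practice is to hold part of the dataset back and never train on it. Here is a minimal sketch of such a split in plain Python. The function name `train_validation_split`, the 20% fraction, and the toy dataset are assumptions made for illustration only.

```python
import random

def train_validation_split(examples, validation_fraction=0.2, seed=0):
    """Shuffle the examples and hold out a fraction for validation."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(examples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    # The first n_val shuffled examples become the validation set.
    return shuffled[n_val:], shuffled[:n_val]

# Hypothetical (input, label) pairs where the label is twice the input.
dataset = [(x, 2 * x) for x in range(10)]
train_set, val_set = train_validation_split(dataset)
```

The model only ever sees `train_set` during optimization, so its score on `val_set` gives a rough estimate of how it might behave on new data.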

What is the Importance of Data in Model Training?

In deep learning, data plays a crucial role in training models. In general, the more diverse and representative the training data is of the inputs the model will see in practice, the better the model tends to generalize.

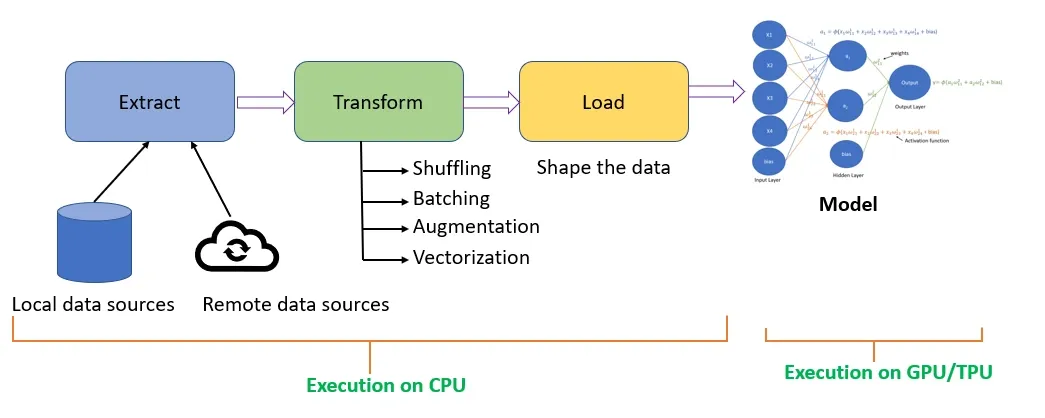

It is important to gather and preprocess the data to ensure its quality and suitability for the task at hand.

Data preprocessing may involve steps like normalization, scaling, and feature engineering.
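To make two of these steps concrete, here is a small sketch of min-max scaling and standardization (z-score normalization) using only the Python standard library. The helper names and the `ages` column are hypothetical. In a real project you would compute the statistics on the training split only and reuse them for validation and test data.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

ages = [18, 25, 40, 62]  # hypothetical raw feature column
scaled = min_max_scale(ages)
zscores = standardize(ages)
```

Both transforms keep the ordering of the values. They only change the scale, which often helps gradient-based training behave more predictably.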

How to Choose the Right Model Architecture?

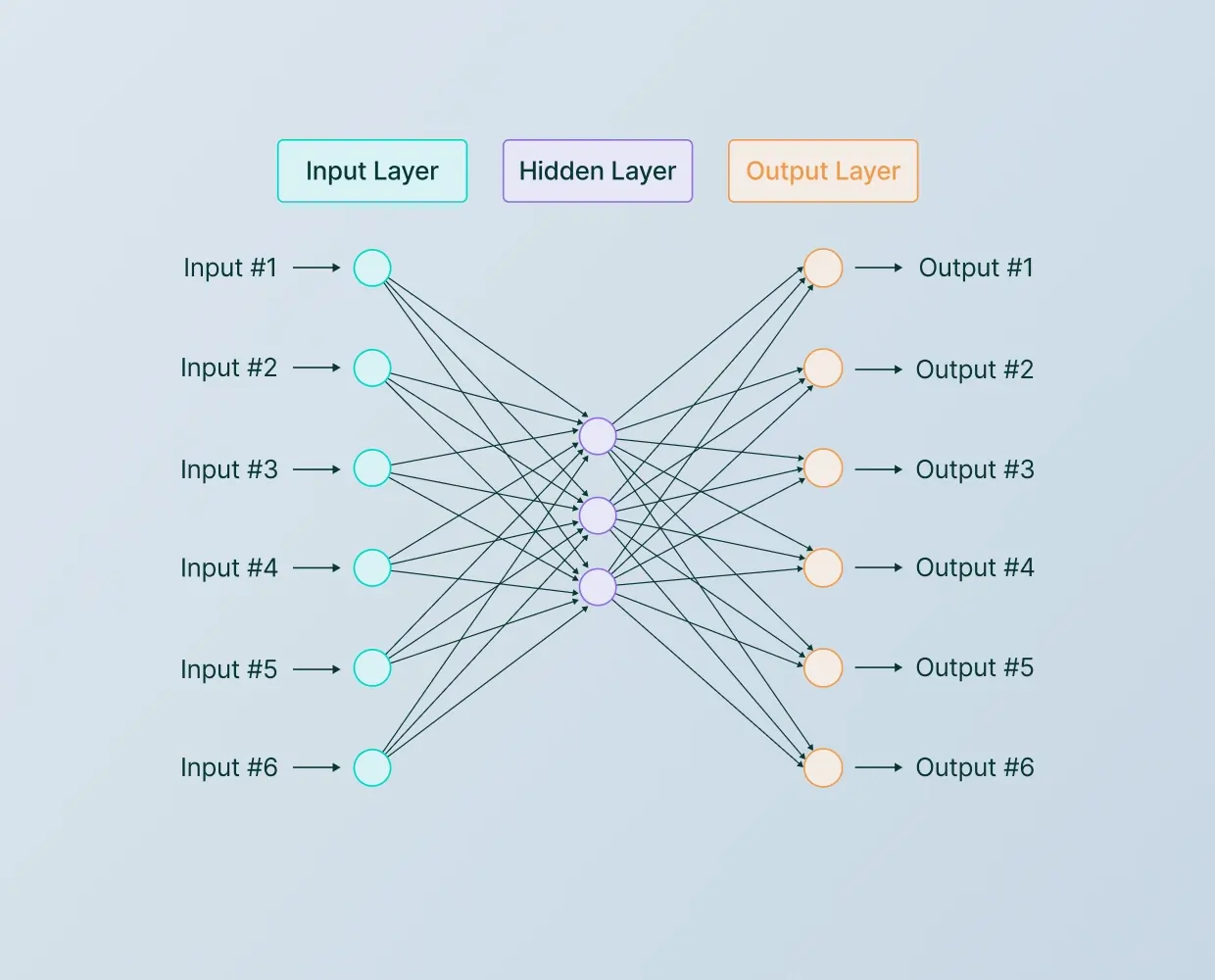

The architecture of a deep learning model determines its ability to learn and represent patterns in the data.

Different architectures, such as convolutional neural networks (CNNs) for image data or recurrent neural networks (RNNs) for sequential data, are suited to different tasks.

Choosing the right architecture for the problem being solved is important to ensure optimal performance.
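The difference between these two families is easier to see in code. The sketch below is a deliberately tiny illustration, not a real layer implementation: `conv1d` slides one shared kernel over its input (the core idea behind CNNs), while `rnn_states` carries a hidden state from one step to the next (the core idea behind RNNs). Both function names and the scalar weights are assumptions chosen for this example.

```python
import math

def conv1d(signal, kernel):
    """Slide one shared kernel over the signal (valid cross-correlation)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def rnn_states(inputs, w_in, w_rec, h0=0.0):
    """Scalar recurrent unit: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h, states = h0, []
    for x in inputs:
        # The new state depends on the current input and the previous state.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

edges = conv1d([1, 2, 3, 4], [1, -1])      # same kernel reused at each position
memory = rnn_states([1.0, 0.0], 1.0, 1.0)  # second state still reflects the first input
```

The convolution applies the same weights everywhere, which suits grid-like data such as images. The recurrent unit processes inputs in order, which suits sequences.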

Loss Functions and Optimization Algorithms

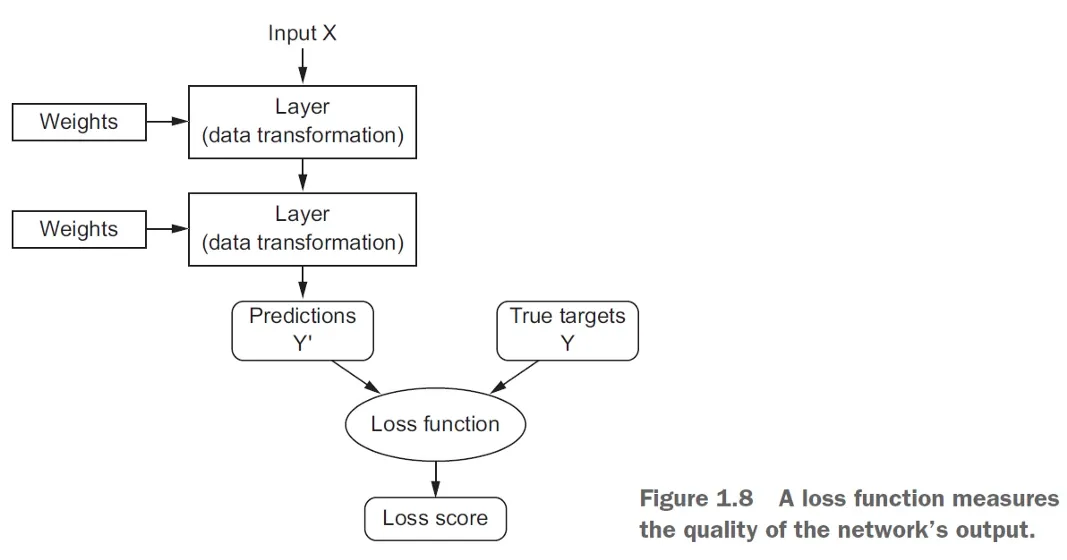

During model training, a loss function measures the difference between the model's predictions and the true labels. The choice of loss function depends on the nature of the problem, such as regression or classification.
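As an illustration, here are two widely used losses written in plain Python: mean squared error, a typical choice for regression, and binary cross-entropy, a typical choice for two-class classification. This is a minimal sketch. The function names and the `eps` clipping value are assumptions made for the example.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Average negative log-likelihood of 0/1 labels given predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        # Clip probabilities so that log(0) never occurs.
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

regression_loss = mean_squared_error([1.0, 2.0], [1.0, 4.0])
confident_right = binary_cross_entropy([1, 0], [0.9, 0.1])
confident_wrong = binary_cross_entropy([1, 0], [0.1, 0.9])
```

Cross-entropy punishes confident mistakes much more heavily than cautious ones, which is one reason it is preferred over squared error for classification.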

Optimization algorithms like gradient descent are then used to update the model's parameters step by step in the direction that reduces the loss, which is the direction opposite to the gradient of the loss with respect to those parameters.
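To show the idea at the smallest possible scale, the sketch below fits a straight line with batch gradient descent on mean squared error. It is a toy under stated assumptions: the function name `fit_line`, the learning rate, the epoch count, and the data (generated from y = 2x + 1) are all chosen for illustration. Deep learning frameworks compute the gradients automatically instead of by hand.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # toy data generated from y = 2x + 1
w, b = fit_line(xs, ys)
```

On this noise-free data the learned `w` and `b` end up very close to 2 and 1. With a learning rate that is too large the updates would overshoot and diverge instead.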

Overfitting and Regularization Techniques

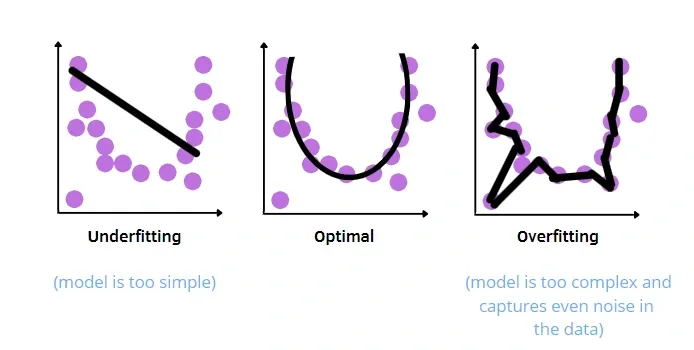

One common challenge in model training is overfitting, where a model performs well on the training data but fails to generalize to new, unseen data.

To mitigate overfitting, various regularization techniques can be employed. These include methods like dropout, which randomly drops neurons during training to prevent the model from relying too heavily on specific features.
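Here is a minimal sketch of dropout applied to a list of activations. It uses the common "inverted dropout" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The function signature and the seeded `random.Random` generator are assumptions for this example, not any particular library's API.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # At inference time every neuron is kept unchanged.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    # Survivors are scaled by 1 / keep_prob so the expected value is preserved.
    return [
        a / keep_prob if rng.random() < keep_prob else 0.0
        for a in activations
    ]

rng = random.Random(0)  # seeded for reproducibility
train_out = dropout([1.0] * 1000, 0.5, rng)
eval_out = dropout([1.0, 2.0], 0.5, rng, training=False)
```

Because a different random subset of neurons is silenced on every training step, the network cannot depend on any single neuron always being present.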

Hyperparameter Tuning

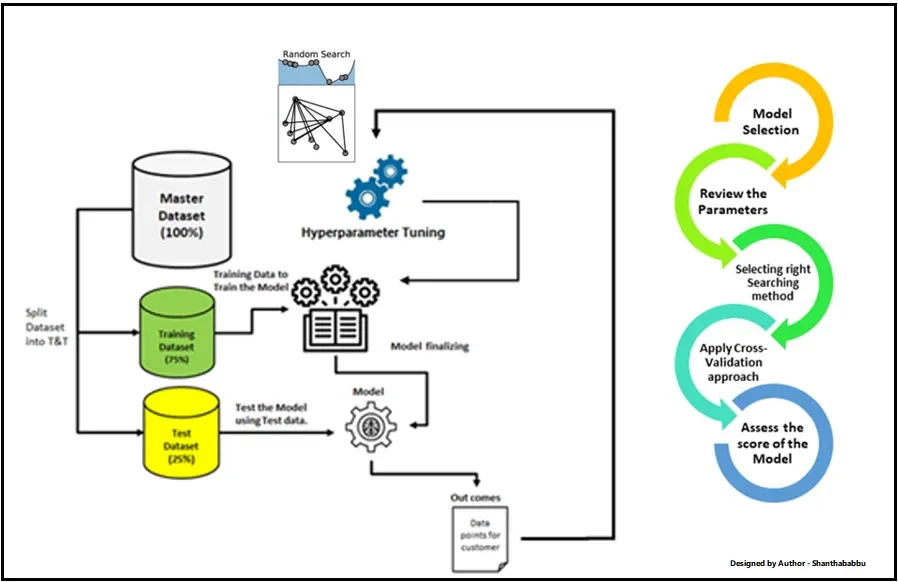

Hyperparameters are parameters that are not learned during model training but are set before training begins.

Examples of hyperparameters include the learning rate, batch size, and number of hidden layers. Finding the right combination of hyperparameters is essential for optimal model performance.

Hyperparameter tuning involves experimenting with different values and evaluating the model's performance to find the best combination.
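One simple way to run such experiments is a grid search: train once for every combination of candidate values and keep the combination with the best validation score. The sketch below does this for a toy one-parameter model. The `train` and `mse` helpers, the candidate values in `grid`, and the data are all hypothetical and chosen only to keep the example short.

```python
from itertools import product

def train(xs, ys, learning_rate, epochs):
    """Fit y = w * x with plain gradient descent on mean squared error."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    return w

def mse(w, xs, ys):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical toy data following y = 3x, split into training and validation sets.
train_x, train_y = [1.0, 2.0, 3.0], [3.0, 6.0, 9.0]
val_x, val_y = [4.0, 5.0], [12.0, 15.0]

grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100]}

best_score, best_params = float("inf"), None
for lr, epochs in product(grid["learning_rate"], grid["epochs"]):
    w = train(train_x, train_y, lr, epochs)
    # Score on held-out data, never on the data used for training.
    score = mse(w, val_x, val_y)
    if score < best_score:
        best_score, best_params = score, {"learning_rate": lr, "epochs": epochs}
```

Grid search gets expensive quickly as the number of hyperparameters grows, so random search or more guided methods are often used for larger models.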

Evaluating Model Performance

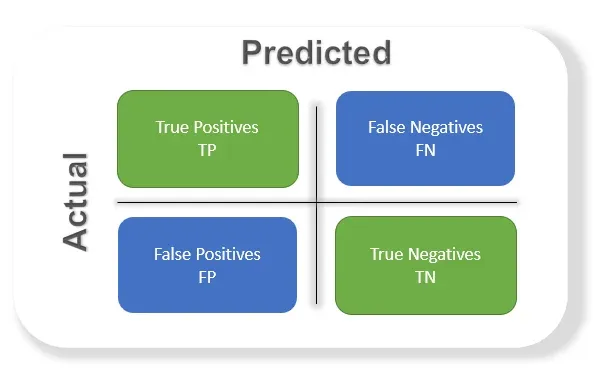

Once the model is trained, evaluating its performance on unseen data is important. For classification tasks, this is often done using metrics such as accuracy, precision, recall, and F1-score.

Evaluating the model's performance helps determine how reliable it is and reveals its strengths and limitations.
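For a binary classifier, these four metrics can all be computed from the counts of true and false positives and negatives. Here is a small sketch. The function name `classification_metrics` and the example labels are made up for illustration, and undefined ratios fall back to 0.0, which is one convention among several.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Precision: of everything predicted positive, how much was correct?
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of everything actually positive, how much was found?
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical ground-truth labels
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]  # hypothetical model predictions
metrics = classification_metrics(y_true, y_pred)
```

Looking at precision and recall alongside accuracy matters most when the classes are imbalanced, because a model can reach high accuracy simply by predicting the majority class.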

Conclusion

Model training is an important phase in deep learning that involves optimizing the parameters of a neural network model so that it makes accurate predictions on new data.

It requires careful selection of the architecture, data preprocessing, a suitable choice of loss function and optimization algorithm, and consideration of regularization techniques.

Hyperparameter tuning and model performance evaluation are key aspects of the model training process.

By understanding the intricacies of model training in deep learning, we can build powerful models that can handle complex tasks and make accurate predictions.

So, let's start training those models!