Introduction

Are you eager to enhance your chatbot's conversational abilities? This blog dives into Falcon LLM, an open large language model family whose instruction-tuned variants are well suited to chatbot applications.

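Falcon LLM performs strongly against established models. At release, Falcon-40B topped Hugging Face's Open LLM Leaderboard, and its developers reported benchmark results ahead of GPT-3. That gives your chatbots strong natural language comprehension and context awareness.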

To get the most out of it, you need to know how to train the model for your specific needs and fine-tune it for optimal performance. We'll guide you through incorporating Falcon LLM, from selecting the appropriate package to acquiring API credentials.

Keep reading to explore Falcon LLM's remarkable capabilities and create chatbots that truly understand and engage with your users on a deeper level.

Understanding the Falcon Large Language Model (LLM)

Falcon is a family of large language models (LLMs), a technology that has become central to chatbot development.

With an LLM such as Falcon, chatbots can more effectively understand and interpret human language, enhancing user interactions and the overall experience.

Let's delve deeper into Falcon LLM and how it differs from other language models like GPT-3.

What is Falcon LLM?

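Falcon is a family of open large language models, including Falcon-7B and Falcon-40B, released by the Technology Innovation Institute (TII) in Abu Dhabi. The base models are general-purpose; instruction-tuned variants such as Falcon-7B-Instruct and Falcon-40B-Instruct are adapted for chat-style applications.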

It is designed to comprehend natural language inputs and generate meaningful and contextually relevant responses.

Falcon LLM is a transformer-based deep learning model that analyzes the meaning and nuances of human language. It was trained on very large amounts of text, largely web data from TII's RefinedWeb dataset, which gives it broad general knowledge and lets it provide helpful responses, although, like any LLM, it can still make factual mistakes.

How does Falcon LLM Differ from Other Language Models like GPT-3?

Falcon LLM differs from other language models like GPT-3 in key ways.

Firstly, Falcon's instruction-tuned variants have been fine-tuned on chat and instruction data, making them well suited to understanding conversational context.

GPT-3, by contrast, is a general-purpose model, whereas Falcon's instruct variants are optimized to generate relevant responses in chatbot interactions.

Secondly, Falcon LLM can offer better scalability and cost-effectiveness than GPT-3: its weights are openly released, so you can self-host and fine-tune it, whereas GPT-3 is available only through OpenAI's API.

Falcon LLM packages are designed to suit various chatbot development needs and budgets, allowing developers to choose a plan that aligns with their requirements.

This makes Falcon LLM a more accessible and affordable option for integrating powerful language processing capabilities into chatbots.

Key Features and Capabilities of Falcon LLM

Falcon LLM boasts a range of features and capabilities that enhance chatbot development. These include:

- Contextual Understanding: Falcon LLM excels at comprehending the context of a conversation. It can consider previous interactions and user queries to generate more accurate and contextually relevant responses.

- Intent Recognition: Falcon LLM can discern the underlying intent behind a user's query, enabling chatbots to provide more precise and appropriate responses. This helps deliver a more personalized and satisfying user experience.

- Sentiment Analysis: Falcon LLM can analyze the sentiment expressed in a user's message, allowing chatbots to respond with empathy and understanding. This emotional understanding enhances the conversational flow and improves user engagement.

- Language Generation: With Falcon LLM, chatbots can generate natural and coherent responses that mimic human conversation. This makes the interactions with the chatbot more seamless and increases user satisfaction.

- Multilingual Support: Falcon LLM supports multiple languages (Falcon-40B was trained mainly on English, German, Spanish, and French, with more limited coverage of several other European languages), making it a versatile option for chatbot development in various regions. This enables the chatbot to serve a wider range of users and communicate in their preferred language.
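Capabilities like intent recognition are usually obtained from a general-purpose LLM by prompting rather than through a dedicated intent API. The sketch below is a minimal illustration under assumptions: the label set is hypothetical, and you send the prompt through your own Falcon call. It builds a classification prompt and maps the model's free-text answer back onto a known label, falling back to `other` when the answer doesn't match.

```python
# Hypothetical intent labels for a support bot; replace with your own.
INTENTS = ["billing", "shipping", "returns", "other"]

def build_intent_prompt(message: str) -> str:
    """Ask the model to answer with exactly one label from INTENTS."""
    labels = ", ".join(INTENTS)
    return (
        f"Classify the user's message into one of these intents: {labels}.\n"
        "Answer with the intent name only.\n\n"
        f"Message: {message}\n"
        "Intent:"
    )

def parse_intent(model_output: str) -> str:
    """Map the model's free-text answer onto a known label, else 'other'."""
    words = model_output.strip().lower().split()
    first = words[0].strip(".,:;\"'") if words else ""
    return first if first in INTENTS else "other"
```

Parsing defensively matters because the model may add punctuation, capitalization, or extra words; anything unrecognized is treated as `other` rather than trusted.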

Next, we will cover the benefits of integrating Falcon LLM into chatbot development.

Benefits of Integrating Falcon LLM in Chatbot Development

Integrating Falcon LLM into chatbot development brings several significant benefits. Let's explore these advantages in more detail.

Improved Natural Language Understanding

Incorporating Falcon LLM into your chatbot development can significantly enhance the chatbot's ability to understand natural language.

Falcon LLM's contextual understanding and intent recognition abilities enable the chatbot to grasp user queries more accurately and provide relevant responses. This improves user satisfaction and creates a more seamless and human-like conversational experience.

Enhanced Conversational Flow

Falcon LLM enables chatbots to generate natural and coherent responses, improving conversational flow.

The chatbot can respond more smoothly, considering the context and previous interactions. This creates a more engaging and interactive conversation with the user and helps maintain their interest and attention.
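Because the model keeps no memory between API calls, "considering previous interactions" in practice means resending recent turns with every request. A minimal sketch of a rolling history buffer follows. The `System:`/`User:`/`Falcon:` role labels are an assumption based on the format published for Falcon's chat variants, so check the model card of the checkpoint you deploy; the character budget is a crude stand-in for counting tokens.

```python
from collections import deque

class ConversationBuffer:
    """Keeps the most recent turns and renders them as one prompt string."""

    def __init__(self, system_prompt: str, max_turns: int = 6, max_chars: int = 2000):
        self.system_prompt = system_prompt
        self.turns = deque(maxlen=max_turns)  # oldest turns drop off automatically
        self.max_chars = max_chars  # rough stand-in for a token budget

    def add(self, role: str, text: str) -> None:
        """Record a turn; role is assumed to be "User" or "Falcon"."""
        self.turns.append((role, text.strip()))

    def render(self, user_message: str) -> str:
        lines = [f"{role}: {text}" for role, text in self.turns]
        lines.append(f"User: {user_message.strip()}")
        # Drop the oldest history lines until the conversation part fits the
        # budget, but always keep the newest user message.
        while len("\n".join(lines)) > self.max_chars and len(lines) > 1:
            lines.pop(0)
        return "\n".join([f"System: {self.system_prompt}", *lines, "Falcon:"])
```

Trimming from the oldest end keeps the most recent context, which is usually what the next reply depends on.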

Personalization and Customization Options

With Falcon LLM, chatbots can offer personalized responses tailored to individual users.

By analyzing previous conversations and user preferences, the chatbot can provide recommendations, suggestions, and relevant information to each user.

This leads to a more immersive user experience and deeper engagement.

Handling Complex Queries and Scenarios

Falcon LLM's robust language processing capabilities make it adept at handling complex queries and scenarios. It can understand and respond to intricate user questions or situations that involve multiple intents.

This enables chatbots to confidently handle a wide range of user queries, providing accurate and helpful responses even in challenging or ambiguous circumstances.

Next, we will cover how to get started with Falcon LLM integration.

Getting Started with Falcon LLM Integration

Integrating Falcon LLM into your chatbot development process is straightforward. Here's what you need to know to get started.

Prerequisites for Incorporating Falcon LLM in your Chatbot Development Process

Before incorporating Falcon LLM into your chatbot development process, you need to have an understanding of the following:

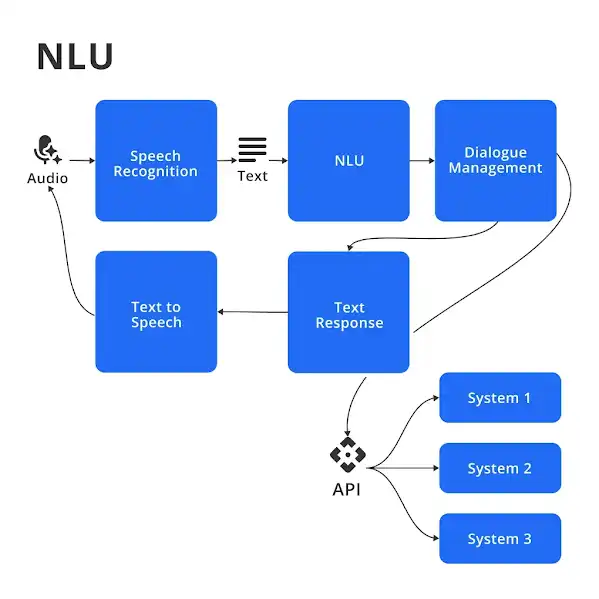

- Chatbot Development Framework: You must have a chatbot development framework or architecture. Falcon LLM can be integrated into various chatbot development platforms, including Dialogflow, Rasa, and Microsoft Bot Framework.

- API Calls: API calls are essential to incorporating Falcon LLM into your chatbot development process. You must be familiar with making API calls to external services.

- Basic Programming Skills: Basic programming knowledge is necessary for Falcon LLM integration. Knowledge of programming languages such as Python, JavaScript, or C# is preferred.

If you want to start building chatbots but don't know how to use language models to train them, check out BotPenguin, a no-code chatbot platform.

With the heavy lifting of chatbot development already done for you, BotPenguin lets users integrate prominent language models such as GPT-4, Google PaLM, and Anthropic Claude to create AI-powered chatbots for platforms like:

- WhatsApp Chatbot

- Facebook Chatbot

- WordPress Chatbot

- Telegram Chatbot

- Website Chatbot

- Squarespace Chatbot

- WooCommerce Chatbot

Choosing the Right Package or Plan

Falcon LLM is available in various packages or plans, making it accessible to many developers. The package you choose depends on your chatbot requirements, the number of API calls, and the features you need.

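Plan names, quotas, and prices vary by provider and change over time, so confirm them on your provider's current pricing page. The packages described here are: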

- Free Plan: The free plan offers 100 API calls per month with limited features.

- Basic Plan: The basic plan starts at $29 monthly and provides 5,000 API calls. It includes key features such as contextual understanding, intent recognition, sentiment analysis, and language generation.

- Premium Plan: The premium plan starts at $299 monthly and offers 50,000 API calls. It includes all the basic plan features and additional features such as multilingual support, advanced analytics, and custom entity recognition.

Setting up Falcon API and Obtaining the Necessary Credentials

Once you have chosen the Falcon LLM package that suits your needs, setting up the Falcon API and obtaining the necessary credentials is the next step. Here's what you need to do:

- Sign up for an account: The first step is to sign up on the Falcon website. You must provide your name and email address and create a password.

- Create a project: After signing up, create a project in the Falcon dashboard to use Falcon LLM. Give your project a unique name and choose the language you want to use.

- Obtain an API key: To access Falcon LLM API, you need an API key. In the Falcon dashboard, navigate to the "API Keys" section and create a new API key for your project.

- Integrate Falcon LLM into your chatbot development framework: The final step is to integrate Falcon LLM into your chatbot development framework.

You can do this by making an API call to the Falcon API, passing the input query, and receiving the output response.
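The request and response step can be sketched with the Python standard library alone. This assumes you reach Falcon through the Hugging Face Inference API (as the FAQ below suggests) with the `tiiuae/falcon-7b-instruct` checkpoint; the URL, payload fields, and response shape follow that API's text-generation format, and a provider's own Falcon dashboard may use a different endpoint. The token is read from an `HF_TOKEN` environment variable (an assumed name) rather than hard-coded.

```python
import json
import os
import urllib.request

# Assumed endpoint: Hugging Face's classic serverless Inference API URL for a
# Falcon instruct checkpoint. Verify against current provider docs before use.
API_URL = "https://api-inference.huggingface.co/models/tiiuae/falcon-7b-instruct"

def build_request(prompt: str, token: str, max_new_tokens: int = 200) -> urllib.request.Request:
    """Package the user's query as a text-generation request."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "return_full_text": False},
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )

def parse_response(body: bytes) -> str:
    """Text-generation responses arrive as a list like [{"generated_text": "..."}]."""
    data = json.loads(body)
    return data[0]["generated_text"].strip()

def ask_falcon(prompt: str) -> str:
    token = os.environ["HF_TOKEN"]  # hypothetical variable name; keeps the key out of source code
    with urllib.request.urlopen(build_request(prompt, token), timeout=30) as resp:
        return parse_response(resp.read())
```

Keeping request building and response parsing separate from the network call makes both easy to test offline.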

Next, we will cover how to train Falcon LLM for chatbot purposes.

Training Falcon LLM for Chatbot Purposes

Training Falcon LLM for chatbot purposes involves data collection, preparation, fine-tuning, and evaluating the performance of the trained model.

Let's explore each step in detail.

Data Collection and Preparation for Training Falcon LLM

You must collect and prepare the correct data to train Falcon LLM effectively for chatbot purposes. Here's what you need to do:

- Gather relevant data: Collect diverse data covering the topics and conversations your chatbot will encounter. This can include chat logs, customer support interactions, social media conversations, and other relevant text sources.

- Preprocess the data: Clean and preprocess the collected data to remove irrelevant or sensitive information. This may involve removing personally identifiable information, anonymizing data, or removing noisy or duplicate data.

- Format the data: Convert the cleaned data into a form suitable for training Falcon LLM, usually a structured format such as JSON or CSV.

Ensure that the data is organized into input-output pairs, where the input is the user query and the output is the expected chatbot response.

- Split the data into training and validation sets: Divide the prepared data into a training set, used to train Falcon LLM, and a validation set, used to evaluate its performance during training.
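The preparation steps above can be sketched in a few lines of standard-library Python. This is a minimal sketch under assumptions: the chat logs are already (user query, bot response) string pairs, the two regexes only catch simple email addresses and phone-like number runs (real PII scrubbing needs much more, and these patterns can also mask dates or order numbers), and the `prompt`/`completion` field names are a hypothetical convention, so match whatever your training script expects. Output is JSON Lines, one JSON object per line.

```python
import json
import random
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # crude: any long digit run

def scrub(text: str) -> str:
    """Mask simple emails and phone numbers and collapse whitespace."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return " ".join(text.split())

def prepare(pairs, val_fraction=0.1, seed=0):
    """Clean, deduplicate, and split (user_query, bot_response) pairs."""
    seen, records = set(), []
    for query, response in pairs:
        q, r = scrub(query), scrub(response)
        if not q or not r or (q, r) in seen:
            continue  # drop empty or duplicate pairs
        seen.add((q, r))
        # Hypothetical field names for one input-output pair.
        records.append({"prompt": q, "completion": r})
    random.Random(seed).shuffle(records)  # fixed seed gives a reproducible split
    n_val = max(1, int(len(records) * val_fraction)) if records else 0
    return records[n_val:], records[:n_val]  # (train, validation)

def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")
```

Deduplicating after scrubbing means two logs that differ only in a masked email are treated as the same example.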

Fine-Tuning Falcon LLM for Specific Use Cases

Fine-tuning Falcon LLM for specific use cases involves training the model on your prepared data to make it more contextually relevant and accurate. Here's how you can fine-tune Falcon LLM:

- Load the pretrained model: Start by loading a pretrained Falcon checkpoint (for example, Falcon-7B or Falcon-40B) as the base model for fine-tuning. This pretrained model provides a good starting point, as it is already trained on a wide range of text data.

- Define the specific task: Specify the specific task or use case for which you are training Falcon LLM. This could be customer support, FAQ handling, or any other chatbot application.

- Train the model: Train Falcon LLM on your prepared data. Fine-tuning is a form of transfer learning: the model keeps what it learned during pretraining and adapts to your specific use case, improving its ability to generate accurate and contextually relevant responses.

- Experiment and iterate: To enhance Falcon LLM's performance, experiment with different hyperparameters and training strategies. This may involve adjusting the learning rate, batch size, or number of training epochs.

Iterate on the training process until you achieve the desired performance.
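The fine-tuning run itself is normally done with a framework such as Hugging Face Transformers, but the "experiment and iterate" loop is framework-independent. A minimal sketch of a grid search over the three hyperparameters named above: the candidate values are illustrative, and `fake_run` is a hypothetical stand-in for your own training function, which should train with the given config and return a validation loss.

```python
import itertools
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    learning_rate: float
    batch_size: int
    epochs: int

# Candidate values are illustrative, not recommendations.
GRID = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "batch_size": [8, 16],
    "epochs": [1, 3],
}

def configs(grid):
    """Yield every combination of the candidate values."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield TrainConfig(**dict(zip(keys, values)))

def search(train_and_validate, grid=GRID):
    """Run each config and keep the one with the lowest validation loss."""
    results = [(train_and_validate(cfg), cfg) for cfg in configs(grid)]
    best_loss, best_cfg = min(results, key=lambda pair: pair[0])
    return best_cfg, best_loss, results

def fake_run(cfg: TrainConfig) -> float:
    """Hypothetical stand-in so the sketch runs; replace with a real fine-tuning run."""
    return abs(cfg.learning_rate - 5e-5) * 1e4 + 1 / cfg.epochs + cfg.batch_size / 100
```

A full grid grows multiplicatively (12 runs here), so with a model of Falcon's size it is common to start with a coarse grid and refine around the best result.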

Evaluating the Performance and Accuracy of the Trained Model

After training Falcon LLM for chatbot purposes, evaluating its performance and accuracy is crucial. Here's how you can assess the trained model:

- Use validation set: Use the validation set prepared earlier to evaluate how well Falcon LLM performs on unseen data. Where outputs can be scored as labels (for example, recognized intents), measure accuracy, precision, recall, and F1 score; free-form responses also need manual review of sample outputs.

- Analyze user feedback: Collect user feedback or conduct user testing to gauge how well Falcon LLM performs in real-world chatbot interactions. Feedback can help identify areas for improvement and uncover any limitations or issues with the trained model.

- Continuous improvement: Continuously monitor Falcon LLM's performance in production and fine-tune the model as needed. By collecting user feedback and addressing any shortcomings, you can improve Falcon LLM's accuracy and effectiveness over time.
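For outputs that can be scored as labels, such as recognized intents, accuracy, precision, recall, and F1 can be computed per label from the validation set's expected and predicted labels. A minimal standard-library sketch (the example labels in any usage are made up):

```python
from collections import Counter

def classification_report(expected, predicted):
    """Return overall accuracy plus per-label precision, recall, and F1."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must be the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, pred in zip(expected, predicted):
        if gold == pred:
            tp[gold] += 1
        else:
            fp[pred] += 1  # predicted this label when it was wrong
            fn[gold] += 1  # missed the true label
    report = {}
    for label in sorted(set(expected) | set(predicted)):
        p = tp[label] / (tp[label] + fp[label]) if tp[label] + fp[label] else 0.0
        r = tp[label] / (tp[label] + fn[label]) if tp[label] + fn[label] else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        report[label] = {"precision": p, "recall": r, "f1": f1}
    accuracy = sum(tp.values()) / len(expected) if expected else 0.0
    return accuracy, report
```

Per-label scores show which intents the fine-tuned model confuses, which a single accuracy number hides.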

Next, we will cover the best practices for using Falcon LLM in chatbot development.

Best Practices for Utilizing Falcon LLM in Chatbot Development

To make the most of Falcon LLM in chatbot development, it's essential to follow best practices that optimize conversation flows, implement proactive suggestions, and handle user feedback.

Let's explore these practices in detail.

Designing Conversation Flows for Optimal Utilization of Falcon LLM

Designing conversation flows that maximize the utilization of Falcon LLM involves structuring user interactions to leverage the capabilities of the language model.

Here are some best practices to consider:

- Use context-rich conversations: Design conversation flows that provide ample context to Falcon LLM. Instead of single-turn interactions, aim for multi-turn conversations that allow the model to understand the context and generate more accurate responses.

- Utilize system prompts: Give Falcon LLM a system prompt that sets its role, tone, and boundaries, and add guiding messages at appropriate points in the conversation to help the user and set expectations.

These prompts can help steer the conversation and elicit specific information from the user, leading to more accurate responses from Falcon LLM.

- Handle fallbacks gracefully: Implement a fallback mechanism if Falcon LLM cannot respond satisfactorily. Ensure that the fallback response is informative and provides an alternative way for the user to find the required information.
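A fallback can be as simple as a wrapper that checks the model call before its output is shown. A minimal sketch: `generate` is whatever function calls your Falcon endpoint (hypothetical here), and the checks (errors, near-empty replies, and a short list of unhelpful phrases) are illustrative heuristics, not a complete quality gate.

```python
FALLBACK = (
    "Sorry, I couldn't find a good answer to that. "
    "You can browse our help center or type 'agent' to reach a person."
)

# Illustrative markers of an unhelpful reply; tune these for your own bot.
UNHELPFUL_MARKERS = ("i don't know", "i am not sure", "as an ai")

def reply_with_fallback(generate, prompt: str, min_chars: int = 2) -> str:
    """Return the model's reply, or FALLBACK if the call fails or looks unhelpful."""
    try:
        text = generate(prompt).strip()
    except Exception:  # network errors, timeouts, rate limits, malformed responses
        return FALLBACK
    if len(text) < min_chars or any(m in text.lower() for m in UNHELPFUL_MARKERS):
        return FALLBACK
    return text
```

The fallback text gives the user an alternative route (help center or a human agent), as the practice above recommends.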

Implementing Proactive Suggestions and Recommendations

Implementing proactive suggestions and recommendations can enhance the user experience by anticipating user needs and providing relevant information.

Here's how you can leverage Falcon LLM for this purpose:

- Analyze user queries: Use Falcon LLM's language understanding capabilities to analyze user queries and identify patterns or intents.

This analysis can help generate proactive suggestions relevant to the user's current context or previous conversations.

- Offer relevant recommendations: Based on the analysis of user queries, Falcon LLM can suggest relevant information, resources, or actions that users might find helpful.

These recommendations can be presented to the user as quick replies, buttons, or other interactive elements to facilitate easy access.

- Personalize suggestions: Tailor the proactive suggestions based on user preferences, history, or any available user profile information.

Personalization can enhance the relevance of the recommendations and improve user engagement.
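Once an intent has been detected (for example, by prompting Falcon LLM to classify the message), turning it into quick-reply buttons is ordinary application logic. A minimal sketch with a hypothetical intent-to-suggestion table; the personalization step simply moves suggestions this user has chosen before to the front.

```python
# Hypothetical mapping from detected intent to follow-up suggestions.
SUGGESTIONS = {
    "shipping": ["Track my order", "Change delivery address", "Shipping costs"],
    "returns": ["Start a return", "Return policy", "Refund status"],
    "billing": ["Download invoice", "Update payment method", "Refund status"],
}

def quick_replies(intent, history=(), limit=3):
    """Pick up to `limit` suggestions, favoring ones this user has used before."""
    options = SUGGESTIONS.get(intent, [])
    used = set(history)
    # False sorts before True, so previously used suggestions come first;
    # sorted() is stable, so the rest keep their original order.
    ranked = sorted(options, key=lambda s: s not in used)
    return [{"type": "button", "title": s} for s in ranked[:limit]]
```

The `{"type": "button", "title": ...}` shape is a placeholder; most chat platforms define their own quick-reply format.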

Handling User Feedback to Improve the Model Continuously

Handling user feedback is crucial for continuously improving the performance and accuracy of Falcon LLM. Here are some practices to consider:

- Gather user feedback: Encourage users to provide feedback on their interactions with the chatbot. This can be done through in-app surveys, feedback forms, or actively soliciting feedback during the conversation.

Ensure the feedback mechanism is user-friendly and easily accessible.

- Analyze and categorize feedback: Analyze the collected user feedback and categorize it based on different aspects such as accuracy, relevance, clarity, and user satisfaction.

This analysis helps identify common issues or areas for improvement.

- Iterate and fine-tune the model: Use the user feedback to improve Falcon LLM's performance iteratively. Fine-tune the model based on the feedback to address identified issues, enhance accuracy, and improve the chatbot experience.

- Continuous monitoring and maintenance: Continuously monitor Falcon LLM's performance and make necessary updates and adjustments as new feedback is received.

This ensures that the model stays up-to-date and delivers accurate and relevant responses.
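The analyze-and-iterate loop can start with a simple tally. A minimal sketch, assuming each feedback record carries a 1 to 5 `rating`, a `category` drawn from the aspects listed above, and the `prompt`/`response` pair it refers to (all hypothetical field names): it counts low ratings per category and collects the underlying exchanges for review before they are corrected and reused as fine-tuning data.

```python
from collections import Counter

# Categories from the practice above; anything else is grouped as "uncategorized".
CATEGORIES = {"accuracy", "relevance", "clarity", "satisfaction"}

def triage(feedback, low_rating=2):
    """Count low ratings per category and collect the exchanges behind them."""
    complaints = Counter()
    to_review = []
    for item in feedback:
        category = item.get("category")
        if category not in CATEGORIES:
            category = "uncategorized"
        if item["rating"] <= low_rating:
            complaints[category] += 1
            to_review.append({"prompt": item["prompt"], "response": item["response"]})
    return complaints.most_common(), to_review
```

Sorting categories by complaint count points you at the most common problem first.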

Next, we will look at some challenges and solutions in Falcon LLM integration.

Challenges and Solutions in Falcon LLM Integration

Integrating Falcon LLM into chatbot development comes with its own set of challenges.

Understanding these challenges and implementing effective solutions is crucial for a smooth integration process.

Let's delve into the common challenges faced and strategies to overcome them.

Common Challenges Faced during Falcon LLM Integration

When integrating Falcon LLM into chatbot development, several challenges may arise, including:

- Data preparation complexities: Preparing the training data for Falcon LLM can be time-consuming and challenging, as it requires cleaning, formatting, and structuring large datasets for optimal training.

- Model fine-tuning difficulties: Fine-tuning Falcon LLM to suit specific chatbot use cases may pose challenges, especially in adjusting hyperparameters, selecting the right training strategies, and achieving the desired performance.

- Integration with existing systems: Smoothly integrating Falcon LLM with existing chatbot platforms, APIs, or databases can be complex and require careful planning to ensure compatibility and functionality.

Strategies and Solutions to Overcome these Challenges

To overcome the challenges encountered during Falcon LLM integration, consider implementing the following strategies:

- Automated data preprocessing tools: Utilize automated data preprocessing tools and libraries to streamline the data preparation process, making it faster and more efficient.

- Transfer learning techniques: Start from a pretrained (or already instruction-tuned) Falcon checkpoint rather than training from scratch. This shortens fine-tuning for specific chatbot use cases and reduces how much hyperparameter and training-strategy tuning you need.

- API endpoints for integration: Create API endpoints that facilitate seamless integration of Falcon LLM with existing chatbot systems, allowing easy communication and data exchange between the model and other components.
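An integration endpoint of this kind can be a thin HTTP wrapper around the model call. A minimal sketch using only Python's standard library: `generate_reply` is a hypothetical stand-in (it just echoes) that you would replace with your Falcon call, and the `/chat` route and the `{"message": ...}` request and `{"reply": ...}` response shapes are assumptions. A production service would typically use a web framework and add authentication.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def generate_reply(message: str) -> str:
    """Hypothetical stand-in for the Falcon call; replace with a real client."""
    return f"You said: {message}"

def handle_chat(raw_body: bytes):
    """Validate the JSON body and return (status_code, response_dict)."""
    try:
        message = json.loads(raw_body)["message"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"message": "..."}'}
    if not isinstance(message, str) or not message.strip():
        return 400, {"error": "message must be a non-empty string"}
    return 200, {"reply": generate_reply(message.strip())}

class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/chat":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_chat(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def run(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Serve POST /chat until interrupted (Ctrl+C)."""
    HTTPServer((host, port), ChatHandler).serve_forever()
```

Call `run()` to start a local server. Keeping validation in `handle_chat` separate from the HTTP plumbing lets other components reuse it and makes it testable without a network.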

Tips for Troubleshooting and Optimizing Falcon LLM Performance

To troubleshoot issues and optimize the performance of Falcon LLM in chatbot development, consider the following tips:

- Monitor model performance: Regularly monitor Falcon LLM's performance metrics, such as accuracy and response times, to identify any deviations from expected performance and troubleshoot issues promptly.

- Experiment with hyperparameters: Conduct experiments to fine-tune hyperparameters such as learning rates, batch sizes, and optimizer settings to optimize Falcon LLM's performance for specific chatbot tasks.

- Data augmentation techniques: Explore data augmentation techniques to enhance the diversity and quality of training data, improving Falcon LLM's ability to generate accurate and contextually relevant responses.
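For response-time monitoring, percentiles are more informative than averages, because a few slow generations can hide behind a good mean. A minimal sketch that times each model call and reports nearest-rank percentiles; the 2-second budget is an arbitrary example threshold, not a recommendation.

```python
import math
import time

class LatencyMonitor:
    """Records call durations and reports nearest-rank percentiles."""

    def __init__(self, budget_s: float = 2.0):
        self.samples = []
        self.budget_s = budget_s  # example alert threshold for p95 latency

    def timed(self, fn, *args, **kwargs):
        """Call fn and record how long it took, even if it raises."""
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            self.samples.append(time.perf_counter() - start)

    def percentile(self, q: float) -> float:
        """Nearest-rank percentile, q in (0, 100]."""
        if not self.samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples)
        rank = math.ceil(q / 100 * len(ordered))
        return ordered[max(rank, 1) - 1]

    def over_budget(self) -> bool:
        return self.percentile(95) > self.budget_s
```

Wrap each model call as `monitor.timed(ask_model, prompt)` (with your own client function) and alert when `over_budget()` turns true.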

Conclusion

In conclusion, Falcon LLM is a strong option for elevating your chatbot's conversational abilities. This powerful language model family, with instruction-tuned variants suited to chatbot applications, offers a comprehensive foundation for creating intelligent and engaging chatbots.

From understanding context and intent to generating natural responses and analyzing sentiment, Falcon LLM's capabilities can help your chatbot deliver an exceptional user experience. With its scalability and cost-effectiveness, integrating Falcon LLM into your development process is a strategic investment.

Follow the step-by-step guide in this blog to seamlessly incorporate Falcon LLM, fine-tune its performance, and continuously improve based on user feedback. Overcome integration challenges with proven strategies, and optimize your chatbot's performance with expert tips.

Don't settle for ordinary chatbots. Embrace the future of conversational AI with Falcon LLM and unlock a world of possibilities for your business or application.

Start your journey today and witness the transformative power of this remarkable technology.

Frequently Asked Questions (FAQs)

What are the technical requirements for integrating Falcon LLM?

If you access Falcon through Hugging Face, you'll need a Hugging Face API token; libraries like Transformers and LangChain help with model interaction and prompt design.

Is any coding knowledge required to integrate Falcon LLM?

While some coding experience is helpful, libraries like LangChain simplify the process by providing pre-built functionalities.

Can Falcon LLM be used for free in chatbots?

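Falcon's weights are openly released, so the model itself can be downloaded and run on your own hardware. Hosted access, such as the Hugging Face Inference API, may incur usage fees depending on the chosen plan.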

How does Falcon LLM handle conversation history in chatbots?

Integration with tools like LangChain allows for preserving chat history and using context to deliver more relevant responses.

What are some limitations of using Falcon LLM in chatbots?

Falcon models, especially larger ones such as Falcon-40B, can be computationally expensive, requiring significant GPU resources for real-time interaction.

How can I ensure factual accuracy in responses generated by Falcon LLM?

Providing the LLM with access to reliable data sources and implementing fact-checking mechanisms are crucial.
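One common way to give the model access to reliable data sources is to retrieve relevant passages from your own vetted content and place them in the prompt, instructing the model to answer only from them. A minimal sketch using naive word-overlap retrieval over a hypothetical in-memory knowledge base; production systems usually rely on embeddings and a vector store instead.

```python
import re

# Hypothetical knowledge base of vetted facts.
DOCS = [
    "Orders ship within 2 business days from our Berlin warehouse.",
    "Returns are accepted within 30 days of delivery with the original receipt.",
    "Premium support is available Monday to Friday, 9:00-17:00 CET.",
]

def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs=DOCS, k: int = 2):
    """Rank documents by shared words with the question; drop zero-overlap ones."""
    q = tokens(question)
    scored = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in scored[:k] if q & tokens(d)]

def grounded_prompt(question: str) -> str:
    context = "\n".join(f"- {d}" for d in retrieve(question)) or "- (no relevant documents)"
    return (
        "Answer using only the facts below. If they do not contain the answer, "
        "say you don't know.\n"
        f"Facts:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

Telling the model to admit when the facts don't cover a question pairs naturally with a fallback message for those cases.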