Introduction

Natural Language Processing (NLP) is increasingly how people communicate with computers. Hugging Face, a leading AI platform, gives developers and researchers the tools to put these technologies to work.

From text classification to language generation, Hugging Face equips you with powerful tools and resources. You can integrate state-of-the-art models into your projects and streamline the development process.

If you plan to make Hugging Face your main tool for AI and NLP projects, a clear walkthrough helps.

In this comprehensive guide, we'll dive deep into the world of Hugging Face. Discover how to kickstart your AI journey with ease, leverage pre-trained models for lightning-fast development, and fine-tune models to perfection.

But that's not all! We'll also explore deployment strategies so your models reach production environments smoothly, along with monitoring and performance optimization techniques that keep your AI solutions efficient and reliable.

Continue reading to learn more about Hugging Face.

Getting Started with Hugging Face

Getting started with Hugging Face is a breeze, even if you are new to AI and NLP.

In this section, you'll find the steps to get started with Hugging Face.

Step 1

Sign Up for an Account

The first step to embark on your journey with Hugging Face is to create an account. Head to the Hugging Face website and click the "Sign up" button. Fill in your details, choose a strong password, and voila!

Step 2

Installation and Setup Process

Now that you have an account, it's time to set up Hugging Face on your local machine.

The installation process is straightforward and well-documented. Depending on your preferences, you can install the Hugging Face libraries, such as Transformers, through either pip or conda.

Simply open your terminal or command prompt and run the relevant installation command, following the instructions. Once installed, you can proceed with the setup process. This involves configuring your environment and dependencies.

Hugging Face provides clear and concise instructions on their website, ensuring a smooth setup process for all users.
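As a small sanity check after installation, the sketch below reports which of the core libraries are present in the current environment. It assumes you installed them with something like `pip install transformers datasets tokenizers`; the helper name `installed_versions` is hypothetical and uses only the Python standard library.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package name: installed version, or None if it is missing}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Package names as published on PyPI.
    report = installed_versions(["transformers", "datasets", "tokenizers"])
    for name, version in report.items():
        print(f"{name}: {version or 'not installed'}")
```

If a package shows up as "not installed", rerun the installation command inside the same environment you use to run your code.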

Step 3

Hugging Face Libraries and Tools

Hugging Face offers powerful libraries and tools that streamline AI and NLP development.

One of the most popular libraries is Transformers. It provides a comprehensive collection of state-of-the-art pre-trained models, allowing you to perform various NLP tasks efficiently.

You can use cutting-edge models like BERT, GPT, and T5 with Transformers.

In addition to Transformers, Hugging Face provides Datasets, a library that simplifies data preprocessing and loading for NLP tasks.

Datasets offers a vast collection of publicly available datasets, so you can access and preprocess data for your projects quickly.

Another notable library is Tokenizers, which enables efficient text tokenization. Tokenizers has bindings for several programming languages and offers customizable tokenization strategies, ensuring flexibility and seamless integration with NLP pipelines.

Lastly, Hugging Face offers an intuitive and user-friendly interface called the Hugging Face Hub.

The Hub serves as a hub (pun intended) for sharing and accessing models, datasets, and other resources within the Hugging Face community.

Whether you want to showcase your own models or explore the models others share, the Hub provides a centralized platform for discovery and collaboration.

Now, we will see how to use pre-trained models.

Utilizing Pre-trained Models

In this section, we will explore the concept of pre-trained models, guide you on finding and downloading them, and show you how to integrate these powerful models into your projects.

What Are Pre-Trained Models?

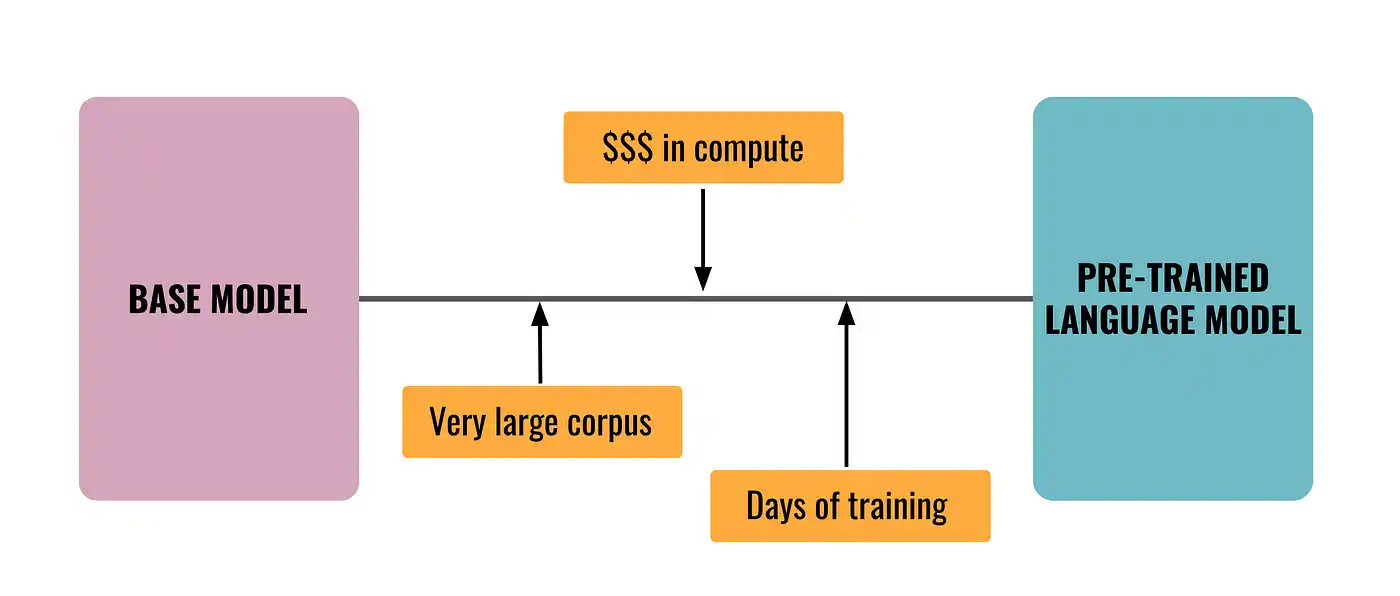

Pre-trained models form the foundation of Hugging Face's success. These models are trained on massive amounts of data and have learned to understand the intricacies of language, enabling them to perform a wide range of NLP tasks.

The training process involves exposing the models to diverse texts, allowing them to learn patterns, semantics, and context.

By utilizing pre-trained models, you can leverage the expertise and knowledge of these models without the need to train them from scratch.

This saves considerable time and computational resources, allowing you to focus on fine-tuning tasks specific to your project.

How to Find and Download Pre-Trained Models

Finding and downloading pre-trained models is a straightforward process with Hugging Face.

The Hugging Face Hub is the go-to platform for accessing a vast library of pre-trained models contributed by the community.

Simply navigate to the Hub and search for the specific NLP task or model architecture you are interested in. Once you find a model that suits your requirements, downloading it is as easy as a single click.

Hugging Face provides direct links to the model files, ensuring a seamless downloading experience. Whether you need a BERT model for text classification or a GPT (Generative Pre-trained Transformer) model for language generation, the Hub offers a wide variety.
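The same search-and-download flow can also be done from code. The sketch below assumes a recent version of the `huggingface_hub` package (normally installed alongside Transformers) and network access; the function names and the repository ID `distilbert-base-uncased` are illustrative choices, not recommendations from this guide.

```python
def find_models(search_term, limit=5):
    """Return the IDs of up to `limit` Hub models matching `search_term`."""
    # Imported lazily so this file can be loaded without the package installed.
    from huggingface_hub import HfApi

    api = HfApi()
    return [model.id for model in api.list_models(search=search_term, limit=limit)]


def download_config(repo_id="distilbert-base-uncased"):
    """Download a single file (config.json) from a model repo.

    Returns the path of the locally cached copy.
    """
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename="config.json")
```

Calling `find_models("sentiment")` would list a handful of matching model IDs, and `download_config()` would fetch one small file into the local cache. In most projects you do not need to download files by hand, because the Transformers loading functions fetch and cache them on first use.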

Integration of Pre-Trained Models into your Projects

Now that you have downloaded your desired pre-trained model, it's time to integrate it into your projects.

Hugging Face libraries, such as Transformers, provide a simple and efficient way to incorporate pre-trained models into your codebase.

To integrate a pre-trained model, you must first load it using the library's appropriate function. Once the model is loaded, it can be used for NLP tasks such as text classification, named entity recognition, or text generation.

The Transformers library offers a unified API, making it easy to adapt your code for different models and tasks.
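To make the loading step concrete, here is a minimal sketch that loads a tokenizer and a classification model by name and wraps them in a pipeline. The model ID is an assumption chosen for illustration (a publicly available sentiment model), `build_classifier` and `top_label` are hypothetical helper names, and the first call needs network access to download the weights.

```python
def build_classifier(model_name="distilbert-base-uncased-finetuned-sst-2-english"):
    """Load a tokenizer and model from the Hub and wrap them in a pipeline."""
    # Imported lazily so this file can be loaded without Transformers installed.
    from transformers import (
        AutoModelForSequenceClassification,
        AutoTokenizer,
        pipeline,
    )

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
    return pipeline("text-classification", model=model, tokenizer=tokenizer)


def top_label(predictions):
    """Pick the label of the highest-scoring {'label': ..., 'score': ...} dict."""
    return max(predictions, key=lambda p: p["score"])["label"]
```

With these helpers, `top_label(build_classifier()("I love this library!"))` would return the predicted label. Switching to a different model for the same task is mostly a matter of changing `model_name`, which is what the unified API buys you.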

In addition to loading pre-trained models, you can fine-tune them for your specific dataset to achieve better performance on your target task.

Fine-tuning involves training the pre-trained model on your dataset, allowing it to adapt to the nuances and characteristics of your data.

Hugging Face provides detailed guides and examples on fine-tuning models, ensuring optimal results for your projects.

Next, we will cover fine-tuning models with Hugging Face.

Fine-Tuning Models with Hugging Face

Fine-tuning models is a key step in maximizing the performance of pre-trained models.

In this section, we will explore the importance of fine-tuning, provide a step-by-step guide on fine-tuning models using Hugging Face, and share tips and best practices for achieving optimal results.

Importance of Fine-Tuning Models

While pre-trained models offer impressive capabilities, fine-tuning allows you to adapt these models to your specific task or domain.

Fine-tuning is essential because pre-trained models are trained on general datasets, so they may not capture all the nuances or domain-specific information required for your application.

By fine-tuning a pre-trained model on your target dataset, you can improve its performance by allowing it to learn from data more closely related to your task.

This process enables the model to understand the intricacies of your specific problem and make more accurate predictions.

Step-by-Step Guide on Fine-Tuning Models

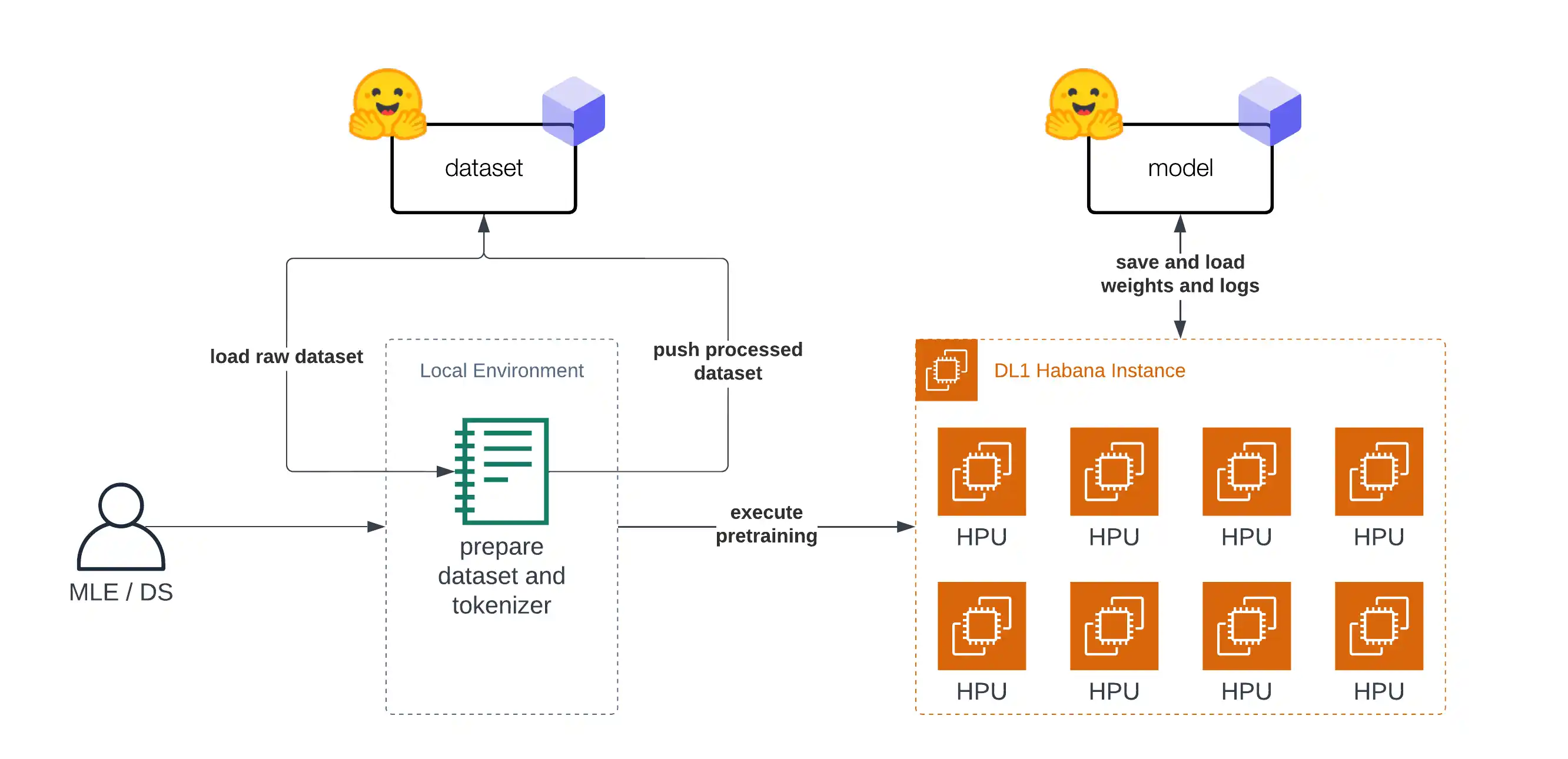

To begin the fine-tuning process, you should first prepare your dataset.

Ensure the data is properly formatted and split into training and evaluation sets. Hugging Face's Datasets library can be incredibly useful for this step, simplifying data preprocessing and loading.

Next, load the pre-trained model of your choice using Hugging Face's Transformers library. Depending on your task, you can choose models based on their architecture, such as BERT or GPT. Loading the model is as simple as a single line of code.

Once the model is loaded, you need to configure the training settings. This involves defining the optimizer, learning rate, and other hyperparameters to fine-tune the model effectively.

Experimentation and iteration are crucial here, as these settings can significantly impact the model's performance.

With the data and model prepared, it's time to begin fine-tuning. Iterate through your training data, feeding it to the model and adjusting the weights based on the error (loss) between the model's predictions and the expected outputs.

This process allows the model to learn from the data and improve performance.

After training for a sufficient number of epochs, evaluate the model's performance on the evaluation set. This step provides insights into how well the model is generalizing and helps identify areas that require further improvement.

Iterate and fine-tune the model as needed. You can adjust hyperparameters, experiment with different architectures, or even add additional layers to enhance its performance.
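The steps above can be sketched with the `Trainer` class from Transformers. Everything specific here is an assumption made for illustration: the IMDB dataset, the `distilbert-base-uncased` checkpoint, the slice sizes, and the hyperparameter values. The sketch also assumes PyTorch and the `accelerate` package are installed, and it downloads data and weights on first run.

```python
def fine_tune(model_name="distilbert-base-uncased", train_size=200, eval_size=100):
    """Fine-tune a small classifier on a slice of IMDB and return eval metrics."""
    # Imported lazily so this file can be loaded without the libraries installed.
    from datasets import load_dataset
    from transformers import (
        AutoModelForSequenceClassification,
        AutoTokenizer,
        Trainer,
        TrainingArguments,
    )

    # 1. Prepare the data: small shuffled slices keep the first run quick.
    raw = load_dataset("imdb")
    train_raw = raw["train"].shuffle(seed=42).select(range(train_size))
    eval_raw = raw["test"].shuffle(seed=42).select(range(eval_size))

    tokenizer = AutoTokenizer.from_pretrained(model_name)

    def tokenize(batch):
        # Pad to a fixed length so the default data collator can stack examples.
        return tokenizer(
            batch["text"], truncation=True, padding="max_length", max_length=128
        )

    train_ds = train_raw.map(tokenize, batched=True)
    eval_ds = eval_raw.map(tokenize, batched=True)

    # 2. Load the pre-trained model with a fresh two-class output layer.
    model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=2)

    # 3. Configure training settings (these values are starting points only).
    args = TrainingArguments(
        output_dir="finetune-output",
        num_train_epochs=1,
        per_device_train_batch_size=8,
        per_device_eval_batch_size=8,
        learning_rate=2e-5,
        weight_decay=0.01,
    )

    # 4. Train, then 5. evaluate on the held-out slice.
    trainer = Trainer(
        model=model, args=args, train_dataset=train_ds, eval_dataset=eval_ds
    )
    trainer.train()
    return trainer.evaluate()
```

Calling `fine_tune()` returns a dictionary of evaluation results that includes the evaluation loss. Treat the result from such a small slice as a smoke test rather than a meaningful benchmark.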

Tips and Best Practices for Fine-Tuning

To achieve optimal results with fine-tuning, here are some valuable tips and best practices to keep in mind:

Start with smaller datasets: When fine-tuning, starting with a smaller subset of your data is often beneficial.

This can help you iterate and quickly assess the impact of different settings without spending excessive time on training large models.

Gradual unfreezing: Freezing and gradually unfreezing the layers of the pre-trained model can help avoid catastrophic forgetting.

Start by training only the output layer and gradually unfreeze deeper layers to allow the model to adapt while minimizing the risk of overfitting.
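One possible way to express gradual unfreezing is a schedule that decides which encoder layers are trainable at each epoch, working from the layer nearest the output back toward the input. The function names below are hypothetical. The code only assumes that each layer and the output head expose a PyTorch-style `parameters()` method whose items have a `requires_grad` flag; for a BERT classifier in Transformers, the layers would typically be `model.bert.encoder.layer` and the head `model.classifier`.

```python
def trainable_layer_indices(num_layers, epoch, layers_per_epoch=1):
    """Indices of encoder layers to train at `epoch` (0-based).

    Epoch 0 trains no encoder layers (only the output head); each later
    epoch unfreezes `layers_per_epoch` more layers, starting from the top.
    """
    n_unfrozen = min(num_layers, epoch * layers_per_epoch)
    return list(range(num_layers - n_unfrozen, num_layers))


def apply_unfreezing(layers, head, epoch, layers_per_epoch=1):
    """Set requires_grad on each layer's parameters for the given epoch."""
    active = set(trainable_layer_indices(len(layers), epoch, layers_per_epoch))
    for index, layer in enumerate(layers):
        for param in layer.parameters():
            param.requires_grad = index in active
    # The output head is trained from the very first epoch.
    for param in head.parameters():
        param.requires_grad = True
```

You would call `apply_unfreezing(...)` at the start of each epoch in a custom training loop. How many layers to release per epoch is a judgment call that depends on dataset size and how far your domain is from the pre-training data.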

Regularization techniques: Regularization methods like dropout and weight decay can prevent overfitting and improve generalization.

Experiment with different regularization techniques to find the optimal balance between performance and robustness.

Learning rate scheduling: Adjusting the learning rate during training can significantly impact the model's performance.

Techniques like learning rate decay or cyclic learning rates can help the model converge faster and find better optima.
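As an illustration of one common schedule (linear warmup followed by linear decay, which is a different technique from the cyclic schedules mentioned above), the sketch below computes the learning rate for a given step in plain Python. The function names are hypothetical; Transformers ships a scheduler of the same shape as `get_linear_schedule_with_warmup`.

```python
def linear_warmup_decay(step, warmup_steps, total_steps):
    """Multiplier in [0, 1] applied to the base learning rate at `step`."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to 1 during warmup.
        return step / max(1, warmup_steps)
    # Then decay linearly from 1 down to 0 at total_steps.
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def learning_rate_at(step, base_lr, warmup_steps, total_steps):
    """Effective learning rate at `step` for a given base learning rate."""
    return base_lr * linear_warmup_decay(step, warmup_steps, total_steps)


if __name__ == "__main__":
    for step in (0, 5, 10, 55, 100):
        print(step, learning_rate_at(step, 2e-5, warmup_steps=10, total_steps=100))
```

The warmup phase avoids large updates while the new output layer is still random, and the decay phase lets the model settle as training ends.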

Evaluate on a validation set: Monitoring the model's performance on a separate validation set allows you to make informed decisions during the fine-tuning process.

Use various evaluation metrics to assess the model's effectiveness and make necessary adjustments.
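To show what "various evaluation metrics" can mean for a binary classifier, here is a small self-contained sketch that computes accuracy, precision, recall, and F1 from true and predicted labels. The function name and the choice of `1` as the positive class are assumptions; in practice you might use an established metrics library instead.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Accuracy alone can be misleading on imbalanced data, which is why precision and recall are worth tracking alongside it.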

Next, we will cover how to deploy Hugging Face models in production.

Deploying Models in Production

Deploying NLP models in production can be a complex and challenging task. This section will provide an overview of deploying models and techniques for deploying models with Hugging Face.

We will also explore monitoring and performance optimization strategies to ensure models function as intended in production environments.

Deploying Models

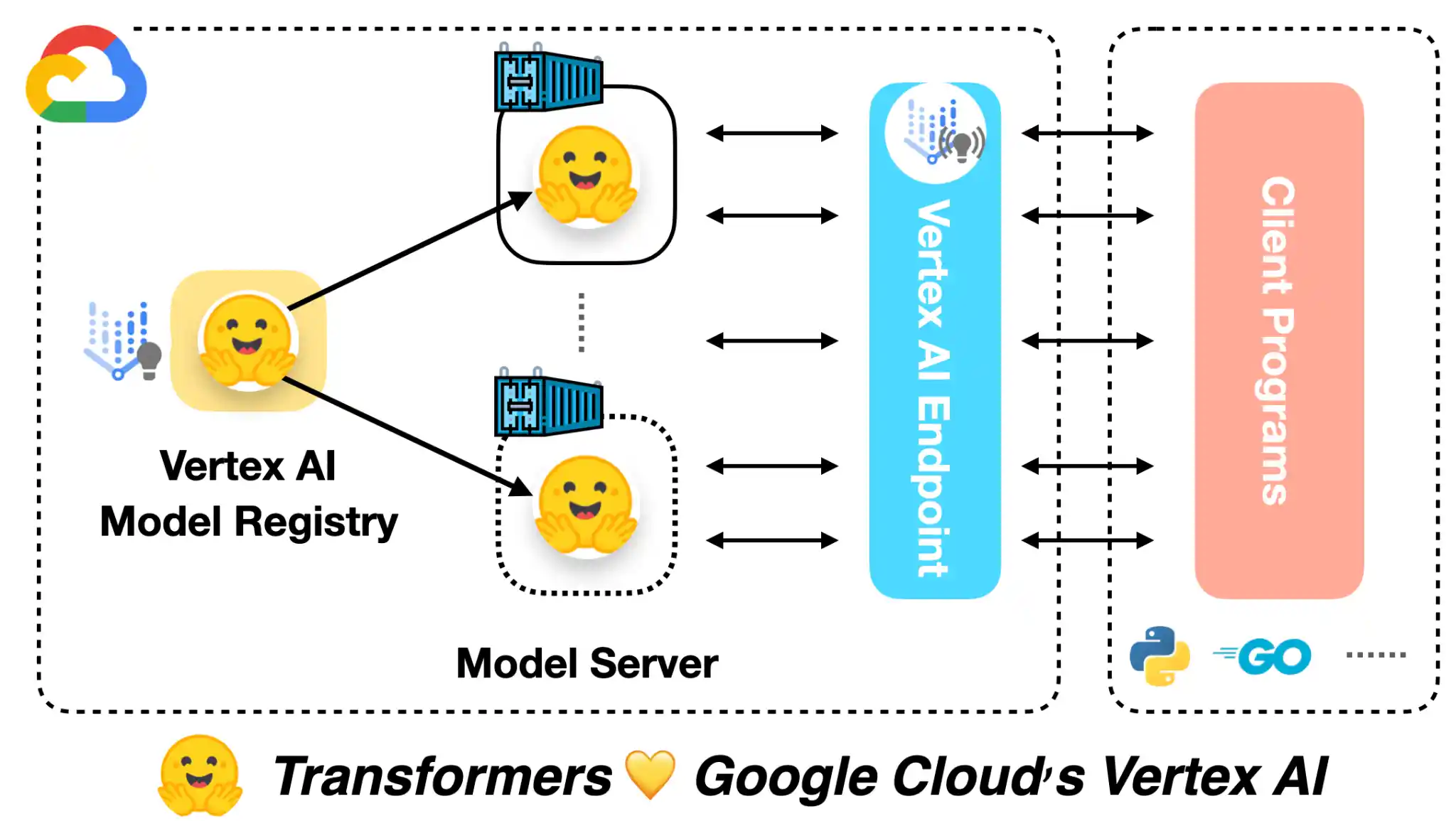

Deploying models in production means making your NLP models available to users in a scalable, reliable, and efficient way.

The process may involve integrating models with existing infrastructure, creating APIs or microservices, or deploying to cloud platforms like AWS or Google Cloud.

Deploying models in production also means handling problems like scalability, security, and maintenance. You must ensure the models can accommodate high traffic, are secure from malicious attacks, and continue functioning even as dependencies and infrastructure evolve over time.
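To give a feel for what "creating an API" around a model involves, here is a minimal sketch of a JSON endpoint built with only the Python standard library. The request shape (`{"text": "..."}`), the function names, and the port are all assumptions for illustration, and `predict` stands in for whatever callable runs your model. A real deployment would use a production-grade server and add authentication, timeouts, and input limits.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_payload(raw_body, predict):
    """Turn a JSON request body into a (status_code, response_dict) pair."""
    try:
        text = json.loads(raw_body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected a JSON object like {"text": "..."}'}
    if not isinstance(text, str):
        return 400, {"error": '"text" must be a string'}
    return 200, {"result": predict(text)}


def make_handler(predict):
    """Build a request handler class bound to a specific predict function."""

    class PredictHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            status, body = handle_payload(self.rfile.read(length), predict)
            data = json.dumps(body).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

    return PredictHandler


def serve(predict, host="127.0.0.1", port=8000):
    """Serve predictions until interrupted (blocks the calling thread)."""
    HTTPServer((host, port), make_handler(predict)).serve_forever()
```

Keeping the request parsing in `handle_payload` separate from the HTTP plumbing makes the validation logic easy to test without starting a server.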

Techniques for Deploying Models with Hugging Face

Hugging Face makes deploying models in production easier by providing several tools and integrations to streamline the process. Here are some techniques for deploying models with Hugging Face:

Hugging Face Transformers pipelines: Hugging Face provides pre-built pipelines that allow you to run NLP tasks like text classification or language generation in real time.

These pipelines wrap around your trained models, enabling you to deploy them quickly without much configuration.

Hugging Face inference API: The inference API allows you to easily deploy your model as a RESTful API to handle incoming requests.

You can deploy your model on Hugging Face's infrastructure or use the Hugging Face inference container to deploy it on your own infrastructure.
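From the client side, calling a hosted model might look like the sketch below, which uses the `InferenceClient` class from the `huggingface_hub` package. The model ID and the function name are assumptions for illustration, a valid access token may be required, and whether a particular model is available for hosted inference depends on Hugging Face's current offering.

```python
def classify_remotely(
    text,
    model_id="distilbert-base-uncased-finetuned-sst-2-english",
    token=None,
):
    """Send `text` to a hosted model and return its classification output."""
    # Imported lazily so this file can be loaded without the package installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(model=model_id, token=token)
    return client.text_classification(text)
```

Because the model runs on remote infrastructure, your application only needs network access and a token rather than a GPU or the model weights.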

Model Optimization Techniques: Hugging Face offers model optimization techniques, such as quantization, through the Transformers library and its companion library Optimum. These allow you to reduce the size of your models while largely maintaining performance.

This can be useful for deploying models where speed and memory usage are crucial.

Containerization: Containerization can help isolate dependencies and simplify deployment by packaging the model and its dependencies into a Docker container.

Hugging Face publishes container images for serving models, such as Text Generation Inference, which can be deployed on Kubernetes or other container orchestration platforms.

Monitoring and Performance Optimization

Deploying models in production requires careful monitoring to ensure they perform as intended. Some strategies for monitoring include:

Logging: Collecting and analyzing logs from your model can give you insight into its performance and how it's handling incoming requests.

Metrics and alerts: Setting up metrics and alerts can help you detect anomalies or issues with the model's performance. You can also define thresholds that trigger a notification when a metric, such as latency or error rate, exceeds them.
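A threshold-based alert can be sketched in a few lines. The class below is a hypothetical example that tracks a rolling window of inference latencies and reports when the average crosses a limit; the window size and threshold are placeholders, and a real system would send the alert to a monitoring service instead of returning a boolean.

```python
import time
from collections import deque


class LatencyMonitor:
    """Track recent inference latencies and flag a slow rolling average."""

    def __init__(self, threshold_seconds, window=100):
        self.threshold_seconds = threshold_seconds
        # Only the most recent `window` samples are kept.
        self.samples = deque(maxlen=window)

    def record(self, seconds):
        self.samples.append(seconds)

    def average(self):
        return sum(self.samples) / len(self.samples) if self.samples else 0.0

    def should_alert(self):
        return self.average() > self.threshold_seconds

    def timed(self, predict, *args, **kwargs):
        """Call `predict`, record how long it took, and return its result."""
        start = time.perf_counter()
        try:
            return predict(*args, **kwargs)
        finally:
            self.record(time.perf_counter() - start)
```

Wrapping every model call in `monitor.timed(...)` gives you latency data without changing the prediction code itself.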

A/B Testing: A/B testing can help you compare different model versions and configurations to determine which is more effective. This can help refine the model and optimize its performance over time.
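One simple way to split traffic for an A/B test is to hash a stable identifier so that each user consistently sees the same model version. The sketch below assumes two hypothetical variants named `model_a` and `model_b` and an experiment-specific salt; none of these names come from Hugging Face.

```python
import hashlib


def assign_variant(user_id, variants=("model_a", "model_b"), salt="experiment-1"):
    """Deterministically map a user ID to one of the variants."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the hash as an integer and spread users across the variants.
    return variants[int(digest, 16) % len(variants)]
```

Changing the salt reshuffles the assignment, which is useful when you start a new experiment and do not want it to inherit the previous split.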

Performance optimization is critical for deployed models, and some strategies include:

Efficient data handling: Optimize data handling by caching results or utilizing more efficient data formats. This can help reduce model inference time and improve performance.
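Caching repeated requests is straightforward to sketch with the standard library. The wrapper below is a hypothetical helper that memoizes a `predict` function on its input text; it assumes inputs are hashable (plain strings are) and that the model's output for a given input does not change between calls.

```python
from functools import lru_cache


def cached_predictor(predict, maxsize=1024):
    """Wrap `predict` so repeated inputs are answered from an in-memory cache."""

    @lru_cache(maxsize=maxsize)
    def cached(text):
        return predict(text)

    return cached
```

The wrapped function exposes `cache_info()`, which reports hits and misses and is a quick way to check whether caching is paying off for your traffic. Avoid mutating a returned result, since the same object is handed back on later cache hits.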

Distributed inference: Take advantage of distributed inference environments to scale up model performance. Using batch processing or parallelism can significantly speed up inference and reduce latency.

Model Optimization Techniques: Use techniques like pruning, knowledge distillation, or quantization to reduce the size of your models while maintaining performance. This can reduce load times and improve model efficiency.
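To illustrate the idea behind quantization, the sketch below applies symmetric 8-bit quantization to a plain list of weights: each float is mapped to an integer in the range -127 to 127 plus one shared scale factor. This is a teaching example with hypothetical function names, not how you would quantize a real model; for that, a framework utility such as PyTorch's dynamic quantization is the usual route.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> (int8-range values, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from quantized values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(original)
    print(q, scale)
    print(dequantize(q, scale))
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight. Whether that error is acceptable has to be checked by re-evaluating the model after quantization.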

Next, we will look at the future of Hugging Face.

Future of Hugging Face

Hugging Face has been at the forefront of innovation in the NLP field, continuously pushing the boundaries of what is possible.

In this section, we will explore the future growth and advancements and speculate on the possibilities in AI and NLP.

Growth and Advancements in Hugging Face

Hugging Face has grown rapidly, becoming a go-to platform for NLP practitioners. With a vibrant community and a wide range of tools and models, Hugging Face is poised for further growth. Likely advancements include:

Expansion of model offerings: Hugging Face will continue to expand its repository of pre-trained models, covering more languages, domains, and tasks.

This will give users a richer selection and cater to a broader range of NLP applications.

Improvements in model architectures: Hugging Face will keep pace with the latest advancements in NLP research and update their models accordingly.

The community can expect access to state-of-the-art architectures that deliver better performance and enhanced capabilities.

Enhancements in usability and integrations: Hugging Face understands the importance of usability and integration to make NLP accessible to a broader audience.

They will continue to refine their tools, provide better documentation, and offer integrations with popular frameworks, making it easier for users to leverage the power of Hugging Face in their projects.

Speculations and Possibilities in AI and NLP with Hugging Face

The future possibilities in AI and NLP with Hugging Face are vast. Here are some speculations on what lies ahead:

Advancements in multi-modal models: As NLP progresses to incorporate multi-modal learning, Hugging Face is likely to offer models that can understand and generate text, images, and other modalities together.

This could revolutionize multimodal data applications, such as image captioning or video summarization.

Domain-specific models: Hugging Face could develop more domain-specific models trained on specialized datasets.

This would enable users to fine-tune models specifically for their unique domains, improving performance and accuracy in niche applications.

Research-driven innovations: As Hugging Face continues collaborating with researchers and academia, they will unlock new research-driven innovations.

These advancements will contribute to cutting-edge technologies and drive the evolution of NLP and AI.

Conclusion

The future of AI and NLP beckons, brimming with possibilities. Hugging Face stands ready to propel you into this uncharted territory, arming you with the tools to conquer any challenge.

State-of-the-art models? Check. Powerful libraries tailored for efficiency? Check. An ever-growing community fostering collaboration and innovation? Check. Hugging Face has it all.

Whether you're refining language models, exploring multimodal applications, or venturing into niche domains, this platform equips you for success. Seamless deployment, performance optimization, and cutting-edge research – the path is clear with Hugging Face.

Seize the moment. Embrace the future of intelligent systems today. Join the pioneers reshaping our world with Hugging Face as your guide. Frontiers await; forge ahead with confidence.

Frequently Asked Questions (FAQs)

Is Hugging Face difficult to use?

Hugging Face offers tools for beginners and experienced developers alike. It provides tutorials, documentation, and a user-friendly interface to get started with NLP projects.

What kind of NLP tasks can I do with Hugging Face?

Hugging Face can be used for a wide range of NLP tasks, including text classification, sentiment analysis, question answering, machine translation, and text summarization.

Do I need to be a programmer to use Hugging Face?

While some programming knowledge is helpful, Hugging Face offers tools with a graphical user interface (GUI) that can be used without extensive coding experience.

What is the Hugging Face Model Hub?

The Hugging Face Model Hub is a central repository for pre-trained NLP models. You can search, download, and fine-tune these models for your specific needs.

Can I share my own models on Hugging Face?

Yes, Hugging Face encourages sharing! You can upload your own NLP models and datasets to the Hub for others to use and improve upon.

What are some of the limitations of Hugging Face?

While Hugging Face is a powerful tool, it's important to remember that NLP models can be biased and may not always produce perfect results.

Is Hugging Face free to use?

Hugging Face offers a free tier with access to many resources. They also have paid plans with additional features for enterprise use.