Imagine a world where technology not only understands human language but also generates human-like text. This is the power and potential of large language models (LLMs). Crafting such sophisticated systems might seem like an ambitious expedition, but with the right guidance, the path to mastery is within your grasp.

Training LLMs is more than just tweaking algorithms; it's the art of shaping powerful AI intellect. How can a beginner make sense of it all? What are the essential elements to understand and the pitfalls to avoid in this journey?

Embark on an intricate yet exciting exploration of LLMs; from understanding the ethos of language models, and the nuances of natural language processing, to the intricate process of training. Whether you are a total novice or familiar with AI, this journey is sure to transform you into a competent LLM trainer.

Anticipate challenges, successes, and epiphanies as you navigate your way through the fascinating landscape of LLM. Ready to undertake this adventure and reveal the thrill that lies in training large language models? Let's dive in!

Preparing for Training

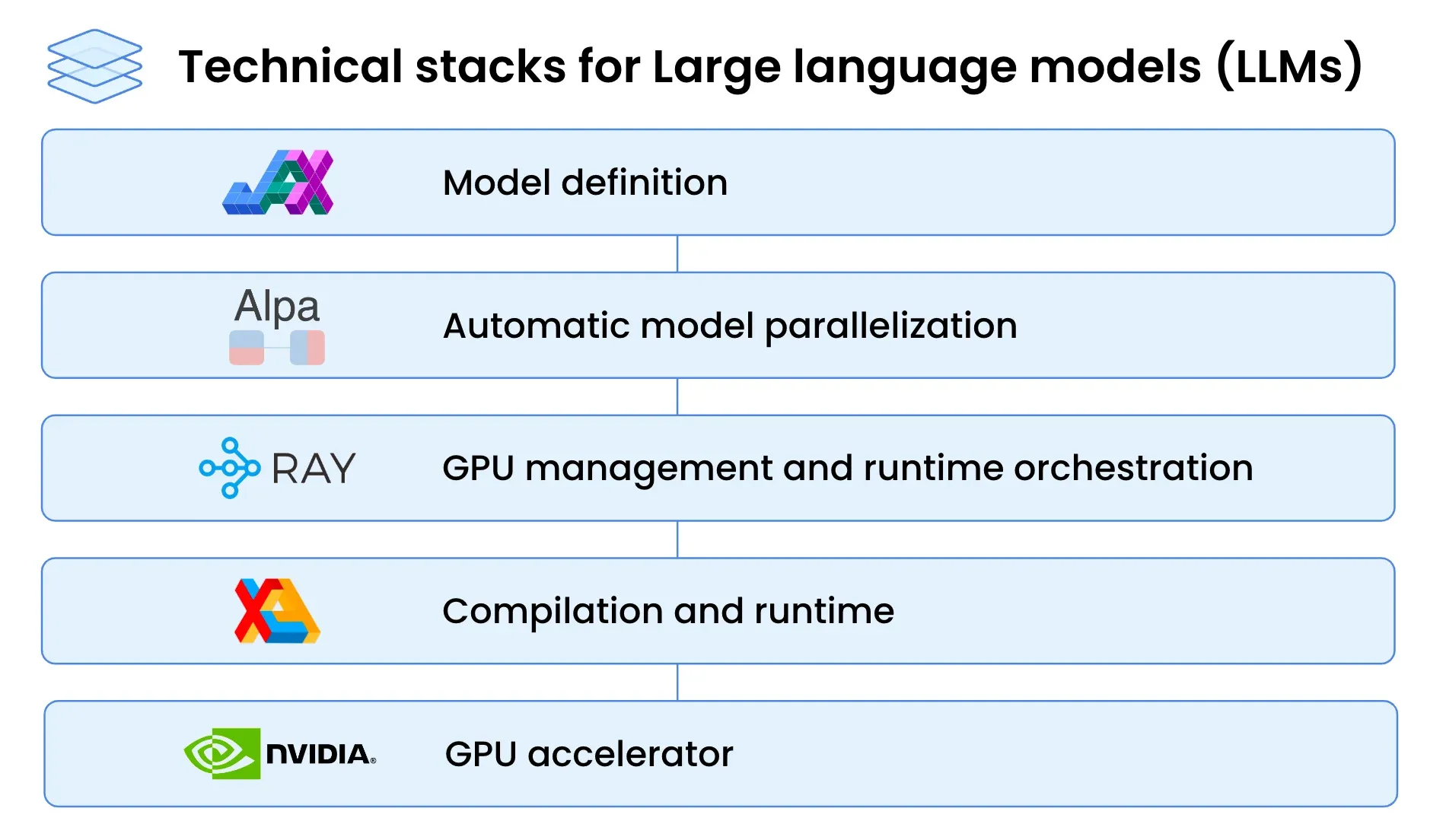

Training large language models requires hardware resources like GPUs or TPUs, which excel at parallel processing.

Additionally, utilizing software tools and libraries such as deep learning frameworks, data preprocessing tools, distributed computing frameworks, pre-trained models, and version control systems is key in efficient training and managing large language models.

Hardware Requirements for Training Large Language Models

Training large language models requires substantial computational resources due to the immense amount of data that needs to be processed and the complexity of the models.

The hardware requirements depend on the size of the model and the dataset used for training. A high-end CPU with good RAM may be sufficient for smaller models and datasets.

However, using graphical processing units (GPUs) or tensor processing units (TPUs) is highly recommended for larger models and datasets.

GPUs and TPUs excel at parallel processing, which helps accelerate the training process by performing multiple computations simultaneously.

Their high memory bandwidth and processing power enable faster training of large language models. Using multiple GPUs or TPUs to process large-scale models efficiently is advisable.

Additionally, cloud-based services providing access to powerful hardware can be cost-effective for training large language models.

Software and Libraries Needed for Training

To train large language models effectively, several software and libraries are essential. Here are some commonly used ones:

- Deep Learning Frameworks: TensorFlow, PyTorch, and Keras support efficiently building and training large language models on GPUs or TPUs.

- Data Preprocessing Tools: NLTK and spaCy are widely used for text data preprocessing—offering tokenization, stemming, lemmatization, and part-of-speech tagging essential for dataset preparation.

- Distributed Computing Frameworks: Apache Spark and Ray enable the distribution of workloads across multiple machines, which is crucial for efficiently training large language models.

- Language Model Architectures: Libraries like Hugging Face's Transformers and OpenAI's GPT offer pre-trained models and architectures, facilitating fine-tuning for specific tasks with convenient APIs.

- Version Control and Collaboration: Git and platforms like GitHub/GitLab are essential for managing code, tracking changes, and collaborative development on large language models.

Gathering and Preparing Data

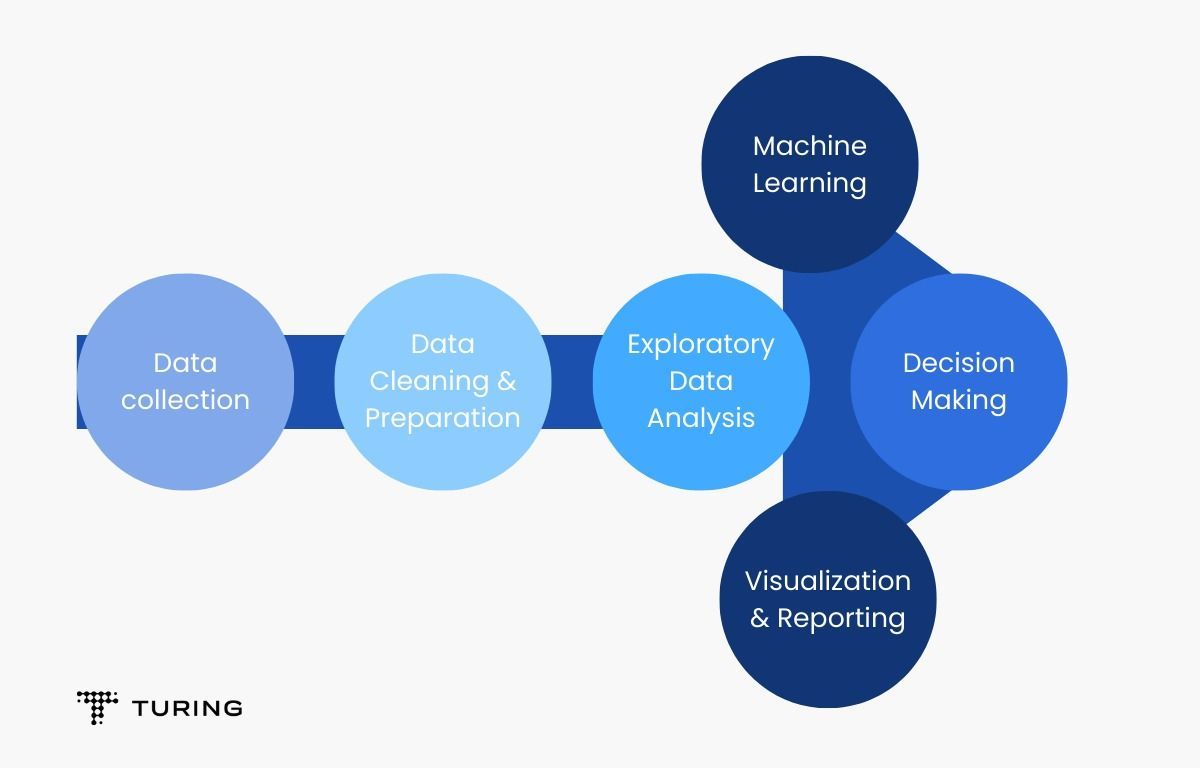

Gathering relevant data from various sources and properly preprocessing it is essential in training large language models.

Identifying diverse and representative data sources and following good data cleaning and preprocessing practices ensure the model's performance and ability to tackle real-world language tasks effectively.

Identifying and Collecting Relevant Data Sources

The first step in training large language models is identifying and collecting relevant data sources. The data's quality and diversity directly impact the model's performance.

Here are some strategies for gathering data:

- Publicly Available Datasets: Diverse datasets like Wikipedia articles, news, books, social media posts, and scientific papers are accessible for training large language models, providing a strong foundation.

- Web Scraping: Extracting relevant text data from websites via custom scrapers or tools like BeautifulSoup or Scrapy. It's crucial to adhere to website terms and legal constraints.

- Domain-Specific Data: Collecting domain-specific data such as medical literature or electronic health records enhances model expertise in specific medical fields.

- Augmented Data: Techniques like paraphrasing or generating synthetic data using language models augment the dataset, increasing its size and diversity for improved model training.

Cleaning and Preprocessing the Data

Once the relevant data is collected, it must be cleaned and preprocessed before training the language model. Data preprocessing steps typically include the following:

- Clean Text: Remove junk like symbols, HTML tags, and noise.

- Split Words: Break text into individual words or parts for easy understanding.

- Make Consistent: Lowercase, remove punctuation, and handle contractions to keep things uniform.

- Trim the Extras: Toss out common, less meaningful words.

- Shorten Words: Simplify words to their base form for clarity.

- Divide Data: Split your dataset for training, tweaking, and testing the model.

Suggested Reading:

Choosing a Language Model Architecture

Ultimately, the choice of a language model architecture should be based on a combination of these factors, considering the specific requirements of the task, the available data, computational resources, interpretability needs, and time constraints.

Evaluating and experimenting with different architectures can help determine the most suitable option for the scenario.

Overview of Popular Language Model Architectures

When choosing a language model architecture, familiarity with popular options is essential. Here are some widely used language model architectures:

- RNN: Captures sequential data but struggles with long-term dependencies.

- LSTM: Improves RNNs by retaining information over longer sequences.

- GRU: Similar to LSTM but more computationally efficient.

- Transformer: Efficiently captures long-range dependencies using self-attention.

- BERT: Utilizes bidirectional representation learning for contextual understanding.

Factors to Consider When Selecting an Architecture

When selecting a language model architecture, several factors need to be considered:

- Task Needs: Choose based on dependency capture or contextual understanding.

- Data: Availability impacts models like GPT or BERT that require vast data.

- Resources: Complex models need significant computational power.

- Interpretability: RNNs offer more interpretability than Transformers.

- Time: Some models demand more time and resources for development and training.

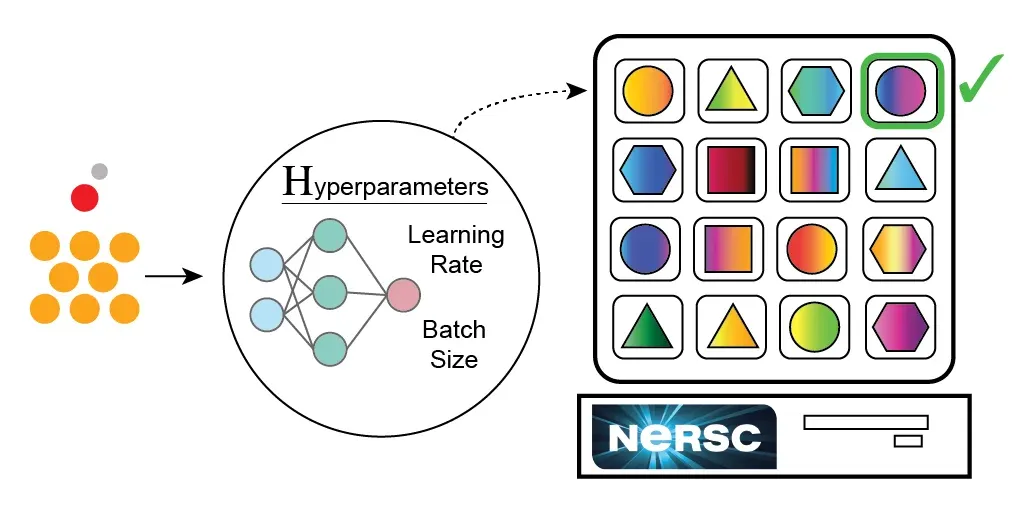

Setting Hyperparameters

Understanding key hyperparameters and their impacts on training and deploying effective hyperparameter tuning strategies are critical for optimizing the performance of language models.

Fine-tuning hyperparameters can improve convergence, better generalization, and enhance model performance for specific downstream tasks.

Explanation of Key Hyperparameters and Their Impact on Training

Hyperparameters are parameters not learned from the data but are set before training a model.

They directly impact the model's training process and performance.

Understanding key hyperparameters and their impacts is crucial for effectively training language models. Here are some important hyperparameters and their explanations:

- Learning Rate: Controls the step size in parameter updates. Higher rates converge faster but risk instability. Lower rates offer stability but slower convergence. Optimizing it finds the balance between speed and performance.

- Batch Size: Dictates the number of examples per iteration. Larger sizes speed up training but might need more memory, leading to poorer generalization. Smaller batches generalize better but might take longer to train.

- Dropout Rate: Randomly deactivates neurons to prevent overfitting. Higher rates increase regularization, preventing overfitting, but can cause underfitting if too high.

- Number of Layers: Influences model depth and complexity. More layers capture intricate patterns but increase computation and overfitting risks. Balancing model capacity with resources is crucial.

Strategies for Tuning Hyperparameters to Optimize Performance

Tuning hyperparameters can greatly impact the performance of language models. Here are some strategies for hyperparameter tuning:

- Grid Search: Exhaustively explores hyperparameter combinations. It is computationally expensive but systematically finds the best values.

- Random Search: Randomly samples hyperparameters within defined ranges. Less computationally intensive but still covers a wide range of exploration useful with limited resources.

- Bayesian Optimization: It uses a model-based approach to intelligently select hyperparameters, which is more efficient than grid/random search by iteratively exploring promising settings.

- Early Stopping: Halts training when validation performance declines, preventing overfitting and finding the optimal training epochs.

- Cross-Validation: Systematically splits data into subsets for training/validation, offering robust evaluation and identifying hyperparameters that generalize well.

- Transfer Learning: Utilizes pre-trained models or components to initialize parameters, accelerating training and enhancing performance, especially with limited labeled data. Fine-tuning specific hyperparameters on top of pre-trained models improves performance further.

Suggested Reading:

Training Strategies

These techniques are just a few examples of how language model training challenges can be addressed.

Experimentation and adaptation to the specific context and requirements of the task may be necessary to overcome training challenges and optimize the model's performance effectively.

Overview of Different Training Strategies and Their Use Cases

Training strategies play a crucial role in the successful training of language models. Here are some commonly used training strategies and their use cases:

- Supervised Learning: Models learn from labeled data (input-output pairs). Ideal when annotated data exists and is used in tasks like text classification or machine translation.

- Transfer Learning: Pre-trains a model on a broad dataset and fine-tunes it on a specific task. Utilizes pre-trained models like BERT or GPT for impressive results with limited labeled data.

- Reinforcement Learning: Trains models by interacting with an environment and receiving feedback through rewards/penalties. Less common in NLP but applicable in tasks like dialogue generation or text-based gaming.

- Unsupervised Learning: Trains models without labeled data. Useful when labels are expensive. Techniques like autoencoders or GANs generate representations or synthetic data for downstream tasks.

Techniques for Dealing with Out-of-Memory and Other Training Challenges

During language model training, various challenges can arise, such as running out of memory or difficulties with convergence. Here are some techniques for addressing these challenges:

- Batch Training & Gradient Accumulation: Dividing data into mini-batches helps with memory issues. Accumulating gradients across these batches aids when memory limits the batch size.

- Gradient Clipping: Controls exploding gradients by scaling them if they surpass a set threshold, which is crucial for RNNs or deep models.

- Regularization Techniques: Dropout, L1/L2 regularization, or weight decay prevent overfitting, enhancing generalization by avoiding noise memorization.

- Early Stopping: Monitors validation set performance, stopping training when it starts worsening, preventing overfitting, and finding the best epoch for trade-offs.

- Learning Rate Scheduling: Alters learning rates during training via decay or cyclic patterns, boosting stability and convergence.

- Model Parallelism/Distributed Training: For massive models or limited resources, splitting the model across devices or machines enables parallel processing, reducing memory needs.

- Curriculum Learning: Gradually exposes the model to data in a structured order, starting with simpler examples and progressing to complex ones, enhancing stability and convergence.

Conclusion

Training large language models is a complex yet fascinating area that allows AI systems to achieve impressive performance on language-understanding tasks.

Through techniques like self-supervised pretraining on massive text datasets and careful architecture optimization, models with billions of parameters are emerging.

While training such large models requires substantial data, computational resources, and ML expertise currently accessible to only Big Tech firms, open-source libraries like TensorFlow and PyTorch lower the barriers. Emerging accelerator chips also show promise for wider access.

For beginners, this guide covered key concepts in language model training, like model scaling laws, computing infrastructure needs, dataset collection, transfer learning approaches, and weight quantization to optimize deployment.

Mastering these techniques will enable the creation of high-performing models for language apps.

Going forward, responsible and ethical development of ever-larger models should incorporate principles of transparency, accountability and avoidance of algorithmic biases.

Training language models unlock immense potential to augment human communication and understanding.

Suggested Reading:

Frequently Asked Questions (FAQs)

How do I train a large language model?

Training a large language model typically involves a lot of computational power and infrastructure. Using pre-trained models and fine-tuning them on your specific task is recommended.

What are the challenges in training large language models?

Challenges in training large language models include the enormous amount of computational resources required, the problem of overfitting, and the difficulty of pre-processing large amounts of data.

What are some best practices for training large language models?

Some best practices for training large language models include using a suitable hardware or cloud provider, exploring various pre-processing techniques, monitoring the model's progress, and using pre-trained models for transfer learning.

How can I fine-tune a pre-trained large language model for my task?

Fine-tuning a pre-trained large language model involves adding task-specific layers to the pre-trained model and training the entire model on a smaller dataset. The fine-tuned model can then be evaluated and iteratively improved as necessary.