TensorFlow models are powerful tools in machine learning and artificial intelligence.

Developed by Google, TensorFlow provides a comprehensive framework for building everything from simple neural networks to complex deep learning architectures.

According to KDnuggets, TensorFlow is the world's most popular machine learning framework. The same study also reports that over 2 million developers actively use TensorFlow.

This makes TensorFlow one of the most widely used tools in the data science community.

But what exactly are TensorFlow models?

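A TensorFlow model is a program, typically built from layers of trainable parameters, that learns from data to map inputs to outputs. Built on a flexible and scalable platform, TensorFlow models let users experiment with different algorithms, data structures, and optimization techniques.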

Over 100,000 organizations use TensorFlow, including Google, Facebook, Uber, and Netflix. Among the most popular applications of TensorFlow models are natural language processing, image recognition, and machine translation.

In this blog, we'll take you on a comprehensive walkthrough of machine learning using TensorFlow.

What is TensorFlow?

TensorFlow is an open-source, user-friendly machine learning (ML) framework developed by the Google Brain team. Since its public release in 2015, it has become extremely popular among programmers and researchers.

At its core, TensorFlow is a library that lets users build computational graphs that define, train, and run machine learning models.

These graphs represent a computation as a network of mathematical operations: each node represents an operation, and each edge represents a tensor (the data) flowing between operations.

Some of the most advanced uses of artificial intelligence, including self-driving cars and virtual assistants, are powered by TensorFlow.

What is the Importance of TensorFlow in Machine Learning?

TensorFlow plays a pivotal role in machine learning for several reasons:

- Versatile Framework: TensorFlow offers a general-purpose framework for building and deploying ML models across many domains and applications.

- Deep Learning: TensorFlow excels at deep neural networks, enabling complex architectures for tasks like image recognition, NLP, and more.

- Abstraction and Flexibility: TensorFlow provides high-level abstractions through APIs such as Keras, which simplify model development while still allowing low-level control for advanced users.

- Scalability: Its distributed computing capabilities allow scaling from local environments to clusters, making it suitable for large datasets and complex models.

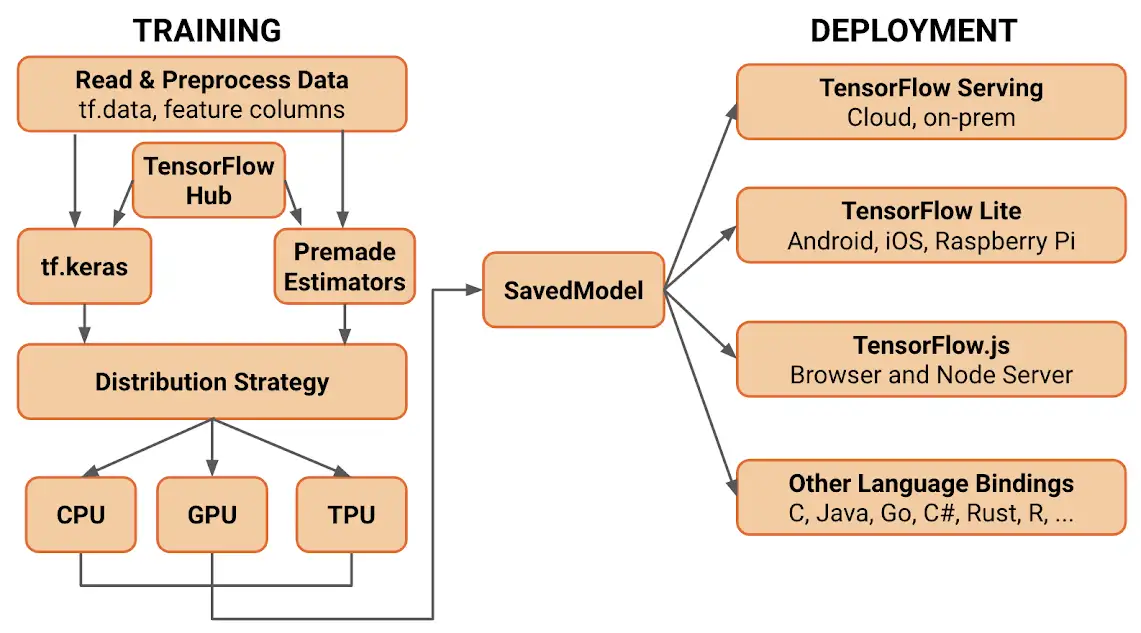

- Deployment: TensorFlow supports deployment across multiple platforms, from edge devices to cloud environments, which simplifies integrating models into real-world applications.

- Community and Resources: With a large user base, TensorFlow offers extensive documentation, tutorials, and community support for both beginners and experts.

- Research and Innovation: TensorFlow is widely used in academia and research, contributing to advancing ML techniques and algorithms.

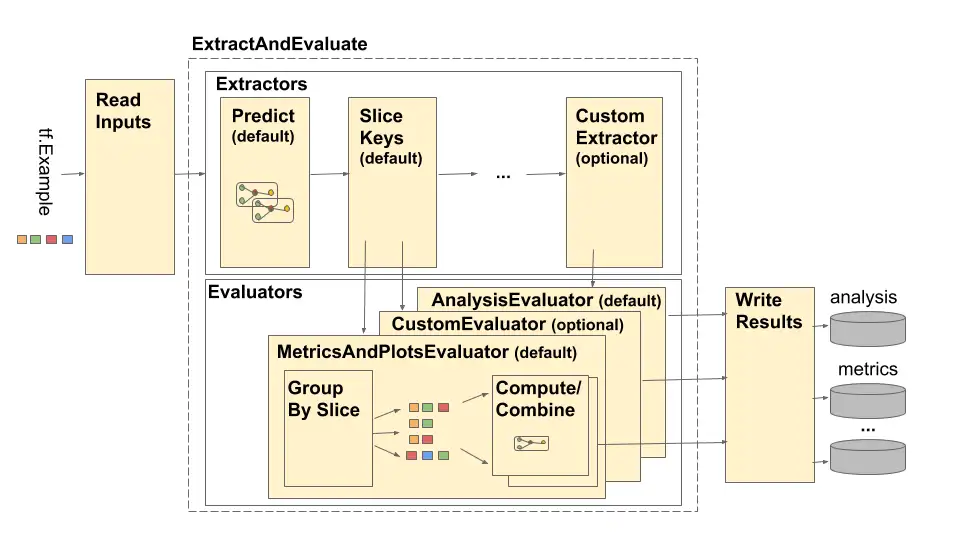

- Ecosystem and Tools: TensorFlow's ecosystem includes TensorFlow Extended (TFX) for production pipelines, TensorFlow Lite for mobile and embedded devices, and TensorFlow Serving for model deployment.

- Open Source: Being open-source fosters collaboration, innovation, and continuous improvement within the ML community.

What are the Applications of TensorFlow?

The applications of TensorFlow are vast and diverse. Let's explore some of the domains where TensorFlow has made a significant impact:

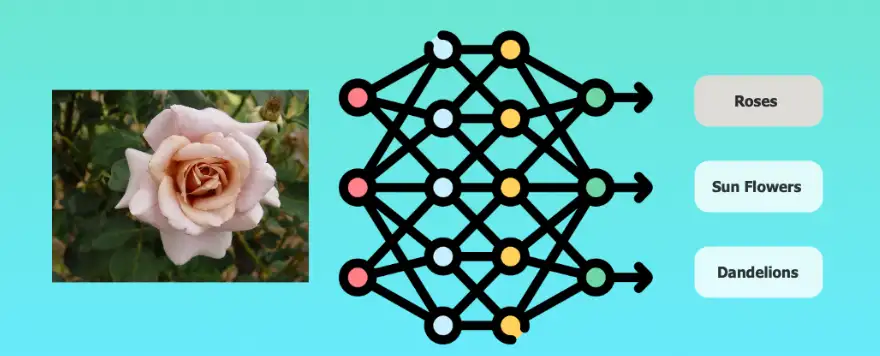

Image Recognition

TensorFlow has been instrumental in advancing the field of image recognition. It has enabled researchers to create deep learning models to classify and detect objects in images accurately.

From healthcare (detecting diseases in medical images) to self-driving cars (identifying pedestrians and road signs), TensorFlow has revolutionized how we analyze and understand images.

Natural Language Processing

Natural language processing is another domain where TensorFlow has proven its mettle. It has empowered researchers and developers to build intelligent chatbots, language translation systems, and sentiment analysis tools.

With recurrent neural networks and the other architectures TensorFlow supports, you can build complex language models and train them to understand and generate human-like text.

Recommendation Systems

TensorFlow has also been widely used to develop recommendation systems. Recommendation systems analyze user behavior and preferences to provide personalized and customizable recommendations.

Whether it's suggesting movies on Netflix or products on Amazon, TensorFlow's deep learning capabilities have made these recommendation systems more accurate and efficient.
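To make the idea concrete, here is a minimal, framework-free Python sketch of the scoring step that many embedding-based recommenders use: users and items are represented as vectors, and items are ranked by their dot product with the user's vector. The embedding values and the recommend() helper are hypothetical; in a real TensorFlow model the embeddings would be learned from user-behavior data (for example with tf.keras.layers.Embedding).

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(user_vec, item_vecs, k=2):
    """Return the names of the k items with the highest dot-product score."""
    scores = {name: dot(user_vec, vec) for name, vec in item_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical 2-D embeddings: dimension 0 ~ "action", dimension 1 ~ "romance".
user = [0.9, 0.1]
items = {
    "action_movie": [1.0, 0.0],
    "romance_movie": [0.0, 1.0],
    "action_romance": [0.6, 0.6],
}
print(recommend(user, items))  # ['action_movie', 'action_romance']
```

Because this user's vector leans toward the "action" dimension, action-heavy items score highest.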

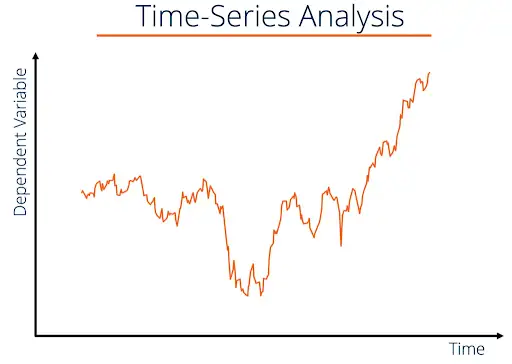

Time Series Analysis

Time series analysis is crucial in many fields, including finance, weather forecasting, and stock market prediction.

TensorFlow handles sequential data well, and its recurrent neural network layers make it a strong tool for analyzing and predicting time series data.
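Before a recurrent network can learn from a time series, the series is usually split into fixed-length input windows, each paired with the value that follows it. The sketch below shows that preprocessing step in plain Python; make_windows() is a hypothetical helper, not a TensorFlow function.

```python
def make_windows(series, window_size, horizon=1):
    """Split a 1-D series into (input_window, target) pairs.

    Each input is `window_size` consecutive values; the target is the
    value `horizon` steps after the end of that window.
    """
    pairs = []
    for start in range(len(series) - window_size - horizon + 1):
        window = series[start:start + window_size]
        target = series[start + window_size + horizon - 1]
        pairs.append((window, target))
    return pairs


prices = [10, 11, 12, 13, 14]
for window, target in make_windows(prices, window_size=3):
    print(window, "->", target)
# [10, 11, 12] -> 13
# [11, 12, 13] -> 14
```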

What are the Basics of TensorFlow Models?

This section explores the basic structure and key components of a typical TensorFlow model. Let's dive in!

Structure of TensorFlow Models

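In TensorFlow 1.x, a model has two main components: the computational graph and the session. (TensorFlow 2.x runs operations eagerly by default and uses tf.function to build graphs, so explicit sessions are no longer needed.)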

- Computational Graph: A computational graph represents a TensorFlow model's computation as a network of mathematical operations.

Each node represents an operation, and each edge represents a tensor flowing between operations. The graph is the backbone of a TensorFlow model, defining the computations needed to perform a task.

- Session: A session is an interface that allows you to run the operations defined in the computational graph.

A session encapsulates the state of the TensorFlow runtime, including variables, queues, and other resources required to execute the graph.
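The following toy Python sketch imitates the TensorFlow 1.x workflow described above: first build a graph whose nodes are operations and whose edges carry values, then "run" it by supplying values for placeholders, much as sess.run(output, feed_dict={...}) did. The Node, Placeholder, Constant, and run names are hypothetical and are not TensorFlow's API; in TensorFlow 2.x, operations run eagerly and tf.function traces Python code into a graph instead.

```python
class Node:
    """A graph node: an operation applied to the outputs of its input nodes."""

    def __init__(self, op, *inputs):
        self.op = op          # function that computes this node's value
        self.inputs = inputs  # edges: nodes whose outputs flow into this one


class Placeholder(Node):
    """A node whose value must be supplied at run time via the feed dict."""

    def __init__(self, name):
        super().__init__(None)
        self.name = name


class Constant(Node):
    """A node that always produces the same value."""

    def __init__(self, value):
        super().__init__(lambda: value)


def run(node, feed_dict):
    """Evaluate `node` by recursively evaluating its inputs (like a session)."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise ValueError(f"You must feed a value for placeholder '{node.name}'")
        return feed_dict[node]
    args = [run(inp, feed_dict) for inp in node.inputs]
    return node.op(*args)


# Build the graph for y = w * x + b (nothing is computed yet).
x = Placeholder("x")
w, b = Constant(2.0), Constant(1.0)
y = Node(lambda a, c: a + c, Node(lambda p, q: p * q, w, x), b)

# "Run" the same graph twice with different fed values.
print(run(y, {x: 3.0}))  # 7.0
print(run(y, {x: 0.5}))  # 2.0
```

Separating graph construction from execution is what allowed TensorFlow 1.x to optimize and distribute a graph before running it.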

Components of a Typical TensorFlow Model

Now that we understand the structure of a TensorFlow model, let's explore the key components that make up a typical model.

- Variables: Variables are the model's parameters that are learned during training, such as its weights and biases.

Variables are updated after each iteration of the training process. They must be initialized before training begins and can be saved and restored for future use.

- Placeholders: In TensorFlow 1.x, placeholders are nodes in the computational graph used to feed input data into the model.

They act as empty slots that are filled with data when the graph runs in a session. A placeholder requires a data type and can optionally specify a shape; leaving the batch dimension unspecified (None) lets it accept many data points at once. TensorFlow 2.x replaces placeholders with ordinary function arguments.

- Tensors: Tensors are the basic data structure of a TensorFlow model. They represent the data flowing through the computational graph.

Tensors are manipulated by the mathematical operations defined in the graph. Each tensor has a rank, a shape, and a data type, and tensors can be sliced, reshaped, and concatenated to build complex operations.

- Loss Function: The loss function is an essential component of a TensorFlow model that measures how well the model is performing.

It is a mathematical function that calculates the difference between the predicted and actual outputs. TensorFlow computes the gradients of this loss so the model can adjust its parameters accordingly.

- Optimizer: The optimizer is the algorithm used to minimize the loss function during training. It adjusts the model's variables to reduce the difference between predicted and actual output. Ultimately, it improves the accuracy of the model.

- Layers: Layers are the primary building blocks of a deep neural network. Each layer is composed of neurons that compute weighted sums of their inputs and apply activation functions to produce the layer's output.

Layers can be stacked to create complex neural networks capable of performing sophisticated tasks.
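Here is a minimal plain-Python sketch of two of these components: a fully connected layer (weighted sums plus an activation) and a mean squared error loss. The weights and biases are hypothetical values chosen by hand; in Keras, the same roles are played by tf.keras.layers.Dense and tf.keras.losses.MeanSquaredError, with the variables learned by an optimizer.

```python
def relu(z):
    """Rectified linear activation: negative values become 0."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes a weighted sum of
    the inputs plus a bias, then applies the activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical parameters for a layer with 2 inputs and 2 neurons.
weights = [[0.5, -1.0], [1.0, 1.0]]
biases = [0.0, -1.0]
hidden = dense_layer([2.0, 1.0], weights, biases, relu)
print(hidden)                   # [0.0, 2.0]
print(mse([0.0, 1.0], hidden))  # 0.5
```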


Commonly Used TensorFlow Models

In this section, you will find three commonly used TensorFlow models.

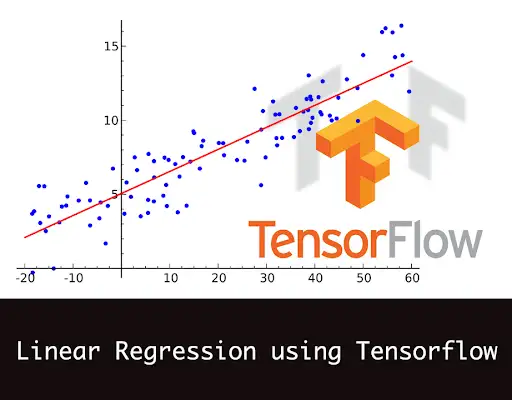

Linear Regression Model

Linear regression is a simple yet powerful model that predicts a continuous target variable from one or more input features.

TensorFlow provides a convenient way to implement linear regression models using its computational graph and optimizer functions.

Structure of a Linear Regression Model

The structure of a linear regression model consists of a single layer with a set of input features and a single output neuron.

Each input feature is assigned a weight, and the model calculates the weighted sum of the inputs to produce the final output. The model then uses an optimization algorithm, such as gradient descent, to adjust the weights and minimize the loss function.
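The sketch below trains a one-feature linear regression model with batch gradient descent on mean squared error, written in plain Python so every step of the update is visible. The data is synthetic (generated from y = 3x + 2), and the learning rate and epoch count are assumptions picked for this toy example. In TensorFlow, you would typically get the same model from tf.keras.Sequential([tf.keras.layers.Dense(1)]) compiled with optimizer="sgd" and loss="mse".

```python
def train_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        preds = [w * x + b for x in xs]
        # Gradients of MSE = mean((pred - y)^2) with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Gradient-descent update: step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic, noise-free data generated from y = 3x + 2.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x + 2 for x in xs]
w, b = train_linear_regression(xs, ys)
print(round(w, 3), round(b, 3))  # 3.0 2.0
```

With too large a learning rate the updates overshoot and diverge, which is why the step size is a key hyperparameter even in this tiny model.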

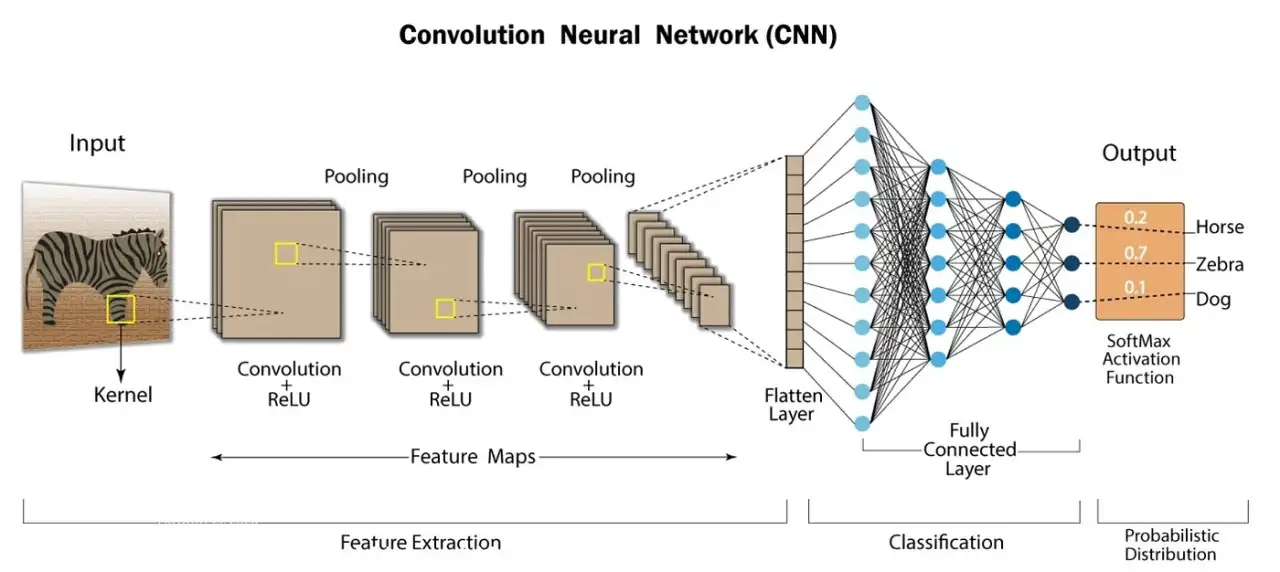

Convolutional Neural Network (CNN)

Convolutional Neural Networks (CNNs) are widely used in computer vision tasks such as image recognition and object detection.

By leveraging local receptive fields and shared weights, CNNs have achieved state-of-the-art performance on a wide range of image-related tasks.

Structure of a CNN

A CNN consists of multiple layers, including convolutional, pooling, and fully connected layers. The convolutional layers apply filters to the input image to extract local features.

The pooling layer reduces the spatial dimensions of the feature maps, reducing the computational complexity. Finally, the fully connected layer combines the features learned by the convolutional and pooling layers and produces the final output.
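The two core operations can be sketched in plain Python: a "valid" 2-D convolution with stride 1 and a 2x2 max pooling step. Like most deep learning libraries, conv2d() below actually computes cross-correlation (the kernel is not flipped). The tiny image and the edge-detecting kernel are hypothetical; in Keras these operations correspond to tf.keras.layers.Conv2D and tf.keras.layers.MaxPooling2D, where the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation) with stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A vertical-edge detector: responds where intensity increases left to right.
kernel = [[-1, 1], [-1, 1]]
fmap = conv2d(image, kernel)
print(fmap)              # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool2d(fmap))  # [[2, 0], [2, 0]]
```

Pooling halves each spatial dimension here (4x4 to 2x2) while keeping the strong edge response.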

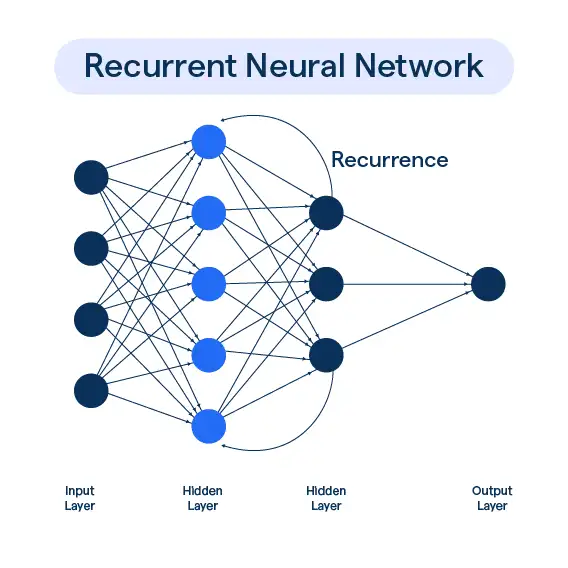

Recurrent Neural Network (RNN)

Recurrent Neural Networks, or RNNs, are commonly used in tasks involving sequential data, such as natural language processing and time series analysis.

RNNs can model the temporal dependencies in the data by using recurrent connections within the network.

Structure of an RNN

An RNN processes a sequence one step at a time using a recurrent unit. At each time step, the unit takes the current input together with its hidden state from the previous step and produces an output and an updated hidden state, which is fed back in at the next step.

This feedback mechanism gives the network a form of memory, allowing it to capture dependencies across time steps in sequential data.

Variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks add gating mechanisms that mitigate the vanishing gradient problem common in traditional RNNs, helping them capture long-term dependencies.
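This minimal sketch runs a single-unit vanilla RNN over a short sequence in plain Python, so you can see the hidden state being carried from one time step to the next. The weights are hypothetical; Keras provides this recurrence as tf.keras.layers.SimpleRNN, with tf.keras.layers.LSTM and tf.keras.layers.GRU as the gated variants.

```python
import math


def simple_rnn(sequence, w_x, w_h, b):
    """Run a one-unit vanilla RNN over a sequence of scalars.

    At each step the new hidden state is tanh(w_x * x_t + w_h * h_prev + b),
    so the state carried forward summarizes what has been seen so far.
    """
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights; a trained model would learn these.
states = simple_rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 3) for s in states])
```

The input is nonzero only at the first step, yet it still shapes the later states, with its influence fading step by step. Preserving such information over many steps is what the gated variants are designed to do better.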


Advanced TensorFlow Models

Advanced TensorFlow models have transformed the field of machine learning and are used extensively across many industries.

Let's take a closer look at some of these advanced models.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a type of neural network architecture that can generate realistic data samples resembling a given distribution.

GANs have been used to generate realistic images, videos, and even music.

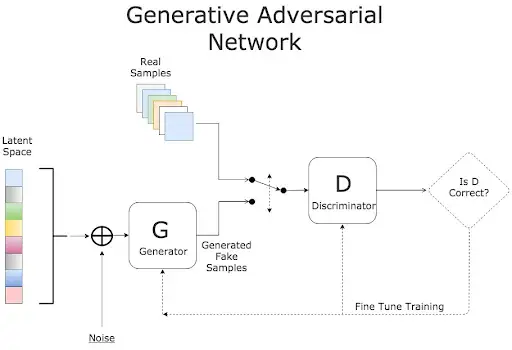

Structure of a GAN

A Generative Adversarial Network consists of two networks: a generator and a discriminator.

The generator takes random noise as input and produces a data sample intended to pass as real. The discriminator takes both generated and real data as input and tries to distinguish between them.

The two networks are trained together: the generator learns to produce data realistic enough to fool the discriminator, while the discriminator learns to correctly distinguish real data from generated data.
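The adversarial objective can be written as two binary cross-entropy losses computed from the discriminator's output probabilities. The plain-Python sketch below uses the original GAN formulation for the discriminator and the commonly used non-saturating loss for the generator; the probability values are made up to show how the losses move as the generator improves.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities that real samples are real.
    d_fake: discriminator probabilities that generated samples are real.
    The discriminator wants d_real -> 1 and d_fake -> 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training (made-up values): the discriminator easily spots fakes.
early_d = discriminator_loss([0.9, 0.8], [0.1, 0.2])
early_g = generator_loss([0.1, 0.2])
# Later: the generator fools the discriminator more often.
late_d = discriminator_loss([0.6, 0.5], [0.5, 0.4])
late_g = generator_loss([0.5, 0.4])
print(f"early: D={early_d:.3f} G={early_g:.3f}")
print(f"late:  D={late_d:.3f} G={late_g:.3f}")
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the tug-of-war described above.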

Applications of GANs

GANs have been used in various domains, including image and video synthesis, data augmentation, image-to-image translation, and drug discovery.

Reinforcement Learning Models

Reinforcement learning is a type of machine learning in which a model (the agent) learns to take actions in an environment so as to maximize a cumulative reward.

Reinforcement learning models have achieved state-of-the-art performance in various domains, including game playing, robotics, and natural language processing.

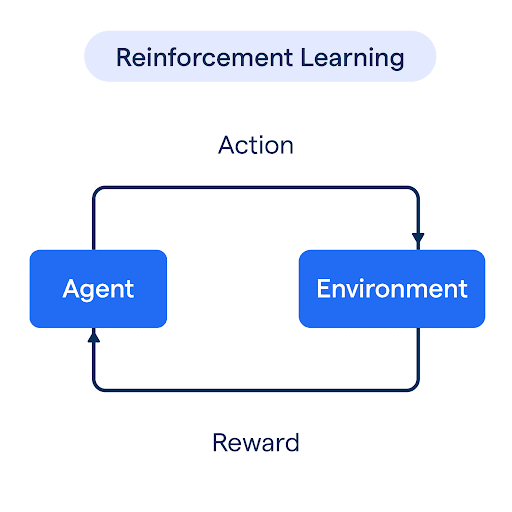

Structure of a Reinforcement Learning Model

A Reinforcement Learning Model consists of an agent, a set of actions, and an environment in which the agent operates. The agent learns to take action in the environment to maximize a reward signal received from the environment.

The agent learns through trial and error, aiming to find an optimal policy that maximizes the expected cumulative reward over the long run.
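Tabular Q-learning is one classic reinforcement learning algorithm that makes this agent-environment loop concrete. The sketch below is plain Python rather than TensorFlow, and the five-state corridor environment and all hyperparameters are hypothetical choices for illustration; deep RL libraries such as TF-Agents replace the Q table with a neural network.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy 1-D corridor.

    The agent starts in state 0; action 0 moves left, action 1 moves right.
    Reaching the last state yields reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore sometimes (or on ties), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move Q toward reward + discounted best value of the next state.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = ["left" if a > b else "right" for a, b in q[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

After training, the greedy policy moves right in every state, toward the rewarding goal, and the learned values shrink by the discount factor with each extra step away from it.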

Applications of Reinforcement Learning Models

Reinforcement learning models are used in game playing, robotics, and autonomous driving.


Transfer Learning with TensorFlow Models

This section will explore transfer learning with TensorFlow models - what it is, how it works, and its applications.

What is Transfer Learning?

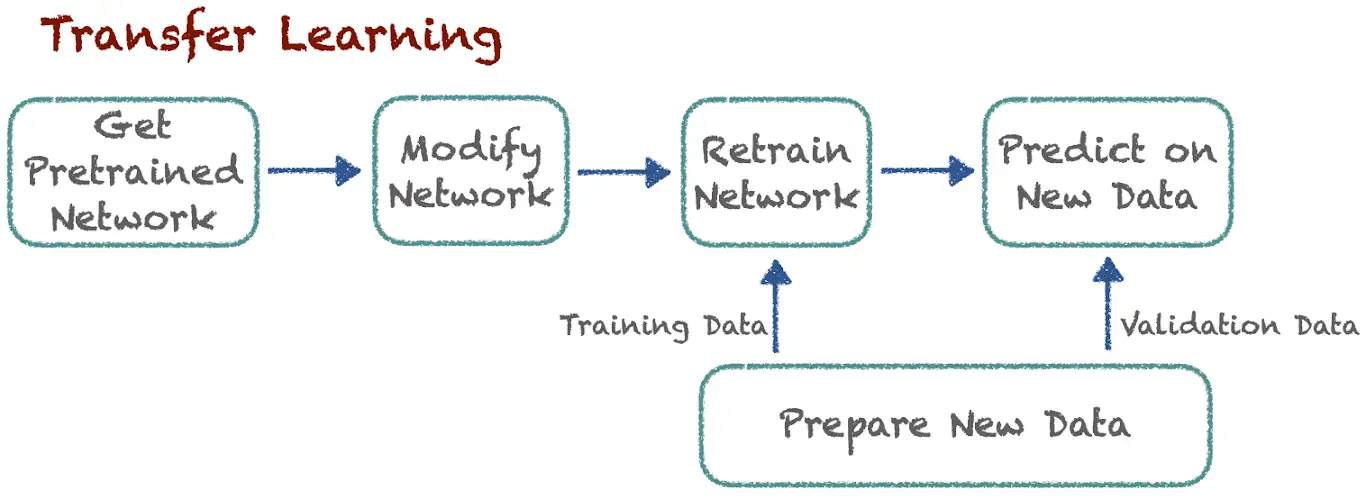

Transfer learning is about taking a pre-trained model and using it as a starting point to solve a new task. The pre-trained model is typically trained on a large dataset, such as ImageNet, and already has learned useful feature representations.

By fine-tuning the pre-trained model on a smaller, task-specific dataset, you can reuse those learned feature representations for the new task.

Transfer learning has become a popular technique because it often results in faster training and improved accuracy on new tasks, especially when labeled data is limited.

How does Transfer Learning work with TensorFlow?

TensorFlow provides a convenient way to perform transfer learning through pre-trained models, such as those in tf.keras.applications, many of which are trained on the ImageNet dataset. These include VGG, ResNet, Inception, and MobileNet.

These models can be used as feature extractors to extract relevant features from new data. Typically, the final layers of the pre-trained model are removed and replaced with new layers specific to the new task.

These new layers are then trained on the smaller dataset, and some of the pre-trained layers can optionally be unfrozen and fine-tuned as well.
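Here is a plain-Python sketch of the core idea: a frozen "pre-trained" feature extractor whose parameters never change, plus a small new head (logistic regression) trained on a tiny task-specific dataset. The feature extractor, dataset, and hyperparameters are all hypothetical stand-ins. In TensorFlow, the base would more likely be a model such as tf.keras.applications.MobileNetV2(include_top=False, weights="imagenet") with base_model.trainable = False, and the head a few new Keras layers.

```python
import math


def frozen_feature_extractor(raw):
    """Hypothetical stand-in for a frozen pre-trained base: it is never updated."""
    return [sum(raw) / len(raw), max(raw) - min(raw)]


def train_head(examples, lr=0.1, epochs=1000):
    """Train only a new logistic-regression head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    # The base is frozen, so its features can be computed once and reused.
    feats = [(frozen_feature_extractor(x), y) for x, y in examples]
    for _ in range(epochs):
        for f, y in feats:
            logit = w[0] * f[0] + w[1] * f[1] + b
            p = 1.0 / (1.0 + math.exp(-logit))
            # Gradient of binary cross-entropy with respect to the logit is (p - y).
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
            b -= lr * (p - y)
    return w, b


def predict(w, b, raw):
    """Classify a raw input using the frozen base plus the trained head."""
    f = frozen_feature_extractor(raw)
    return 1 if w[0] * f[0] + w[1] * f[1] + b > 0 else 0


# Tiny hypothetical dataset: label 1 when the values vary a lot.
data = [([1, 1, 1], 0), ([2, 2, 2], 0), ([0, 3, 0], 1), ([1, 4, 1], 1)]
w, b = train_head(data)
print([predict(w, b, x) for x, _ in data])  # [0, 0, 1, 1]
```

Only three numbers are trained here, which is why transfer learning can work with very little task-specific data when the frozen features are already informative.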

Applications of Transfer Learning with TensorFlow Models

Transfer learning with TensorFlow models has been used in various domains. Here are some examples:

- Object detection: Object detection is the task of identifying objects in an image and localizing them.

Transfer learning with TensorFlow models can be used to train a new object detection model on a smaller dataset specific to the new task. For example, it can be used to detect tumors in medical images.

- Image classification: Image classification is the task of assigning images to one of a fixed number of categories.

Transfer learning with TensorFlow models can train a new image classification model on a smaller dataset specific to the new task. For example, it can be used to classify different types of flowers.

- Natural language processing: Natural language processing is the task of analyzing and generating human language.

Transfer learning with TensorFlow models can train a new natural language processing model on a smaller dataset specific to the new task. For example, a pre-trained language model can be fine-tuned for sentiment analysis.

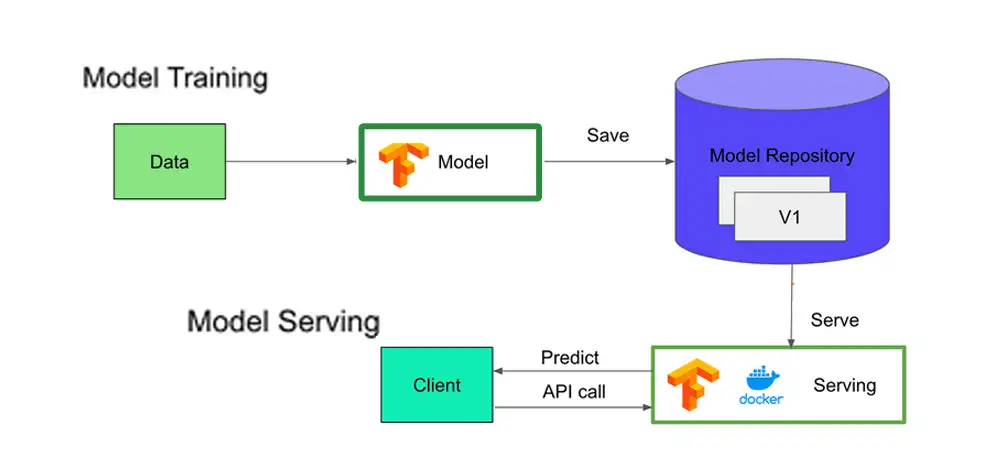

Deploying TensorFlow Models

Deploying TensorFlow models is a crucial step in the machine learning pipeline. It involves taking a trained model and making it available for real-world applications.

In this section, we will explore the process of deploying TensorFlow models, including different deployment options, considerations, and best practices.

Choosing a Deployment Option

When it comes to deploying TensorFlow models, several options are available, and the right choice depends on the specific requirements of your application. Let's explore some of the most common deployment options:

- Deploying on Cloud Platforms: Cloud platforms such as Google Cloud, Amazon Web Services, and Microsoft Azure provide infrastructure and services to host and deploy TensorFlow models.

Cloud platforms offer scalability, reliability, and ease of management.

You can use managed services such as Google Cloud Vertex AI (formerly Cloud ML Engine) or Amazon SageMaker to deploy your models in the cloud.

- Deploying as Web Services: Another popular option is deploying TensorFlow models as web services using frameworks like Flask or Django.

It allows you to create APIs that other applications or systems can access. It provides flexibility and enables integration with various platforms and technologies.

- Deploying on Edge Devices: Deploying TensorFlow models on edge devices, such as mobile phones or Internet of Things (IoT) devices, allows real-time inference without requiring a network connection.

TensorFlow provides tools such as TensorFlow Lite for optimizing and deploying models on edge devices with limited computational resources.
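As a dependency-free illustration of the web-service option, the sketch below implements a tiny WSGI application with a POST /predict endpoint. Flask applications are WSGI applications too, so the request and response flow is the same; predict() is a hypothetical placeholder for calling a loaded TensorFlow model, and the JSON request format is an assumption.

```python
import json


def predict(features):
    """Hypothetical stand-in for a loaded TensorFlow model's prediction."""
    return sum(features)  # placeholder logic for illustration only


def app(environ, start_response):
    """Minimal WSGI app exposing POST /predict with a JSON body {"features": [...]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    # Read exactly CONTENT_LENGTH bytes of the request body.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
    body = json.dumps({"prediction": predict(payload.get("features", []))}).encode()
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, you can serve app with the standard library's wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever() and POST a JSON body such as {"features": [1, 2, 3]} to /predict.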

Considerations for TensorFlow Model Deployment

When deploying TensorFlow models, several considerations help ensure a smooth and efficient deployment process. Here are a few important ones:

- Model Size and Complexity: Consider the size and complexity of your model when choosing a deployment option.

Larger TensorFlow models may require more computational resources, which can make deploying them on edge devices with limited memory and processing power challenging.

- Latency and Throughput: Consider the latency and throughput your application requires.

Cloud-based solutions can offer higher scalability and throughput, while edge devices can offer lower latency and real-time inference.

- Security and Privacy: Ensure your deployed TensorFlow model complies with security and privacy requirements, especially when handling sensitive data.

Implement proper authentication and encryption mechanisms to protect your models and the data they process.

Best Practices for TensorFlow Model Deployment

To make the deployment process smoother and more efficient, here are some best practices to keep in mind:

- Versioning and Reproducibility: Maintain proper versioning of your TensorFlow models to enable reproducibility and facilitate updates or rollbacks.

Use tools like Git to manage code and models and document any changes or dependencies.

- Monitoring and Scalability: Implement proper monitoring mechanisms to track the performance of your deployed TensorFlow models.

Use tools like TensorFlow Serving or Kubernetes to enable scalability and handle increasing workloads.

- Continuous Integration and Deployment: Embrace a continuous integration and deployment (CI/CD) approach to automate the deployment process.

Enable automatic testing, version control, and code reviews to ensure seamless and error-free deployments.
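One concrete versioning convention comes from TensorFlow Serving, which loads models from a base directory containing numbered version subdirectories (for example my_model/1/ and my_model/2/) and by default serves the highest version. The helper below is a hypothetical sketch of that lookup, not part of TensorFlow Serving itself.

```python
import os
import tempfile


def latest_model_version(base_path):
    """Return the path of the highest numeric version subdirectory.

    Mirrors the layout TensorFlow Serving expects: <base_path>/<version>/,
    where each version directory holds one exported model.
    """
    versions = {
        int(name): name
        for name in os.listdir(base_path)
        if name.isdigit() and os.path.isdir(os.path.join(base_path, name))
    }
    if not versions:
        raise FileNotFoundError(f"No numeric version directories found in {base_path}")
    return os.path.join(base_path, versions[max(versions)])


# Demo: a throwaway directory tree with three exported versions.
with tempfile.TemporaryDirectory() as base:
    for version in ["1", "2", "10"]:
        os.makedirs(os.path.join(base, version))
    print(os.path.basename(latest_model_version(base)))  # 10
```

Versions are compared numerically, so 10 correctly beats 2. Keeping older version directories in place makes a rollback as simple as serving an earlier one again.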

Conclusion

With its wide range of pre-trained models and powerful tools, TensorFlow helps researchers and developers tackle complex tasks efficiently. According to TensorFlow, it has over 30 million active users.

TensorFlow has wide applications including image classification, natural language processing, and object detection.

And with transfer learning and flexible deployment options, TensorFlow opens up broad potential for innovation and real-world applications.


Frequently Asked Questions (FAQs)

Can I use pre-trained TensorFlow models for my applications?

Yes. TensorFlow offers a collection of pre-trained models, such as MobileNet, BERT, and ResNet. These models can be fine-tuned or used directly for various tasks.

By using pre-trained TensorFlow models, you can save time and resources in the development process.

What are the hardware requirements for running TensorFlow models efficiently?

TensorFlow runs on standard CPUs and supports hardware accelerators, including GPUs and TPUs, to speed up model training and inference and improve overall performance.

How do I optimize the performance of my TensorFlow models?

Techniques like model quantization, pruning, and hardware-specific optimizations can significantly improve model efficiency and reduce memory and computation requirements.
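To illustrate what quantization does to the numbers, here is a plain-Python sketch of symmetric int8 quantization, a scheme commonly used for weights: each float is stored as an 8-bit integer q, with real_value ≈ scale * q. The weights are hypothetical. In practice, TensorFlow Lite's converter (tf.lite.TFLiteConverter with optimizations=[tf.lite.Optimize.DEFAULT]) applies post-training quantization for you; the functions below are not its API.

```python
def quantize_symmetric_int8(values):
    """Symmetric int8 quantization: real_value ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [qi * scale for qi in q]


weights = [-1.0, -0.4, 0.0, 0.3, 1.0]
q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)
print(q)  # [-127, -51, 0, 38, 127]
print(max(abs(a - r) for a, r in zip(weights, restored)) <= scale / 2)  # True
```

Storing 8-bit integers instead of 32-bit floats cuts weight storage by roughly a factor of four, at the cost of a rounding error of at most scale / 2 per value.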

What are some real-world applications of TensorFlow models?

Some real-world applications of TensorFlow models include self-driving cars and AI systems in healthcare, finance, and entertainment.