Over the past several years, artificial intelligence (AI) has gone from a passing fad to an everyday reality.

AI is now used every day, in everything from innovative medical research to smart assistants like Siri and Alexa.

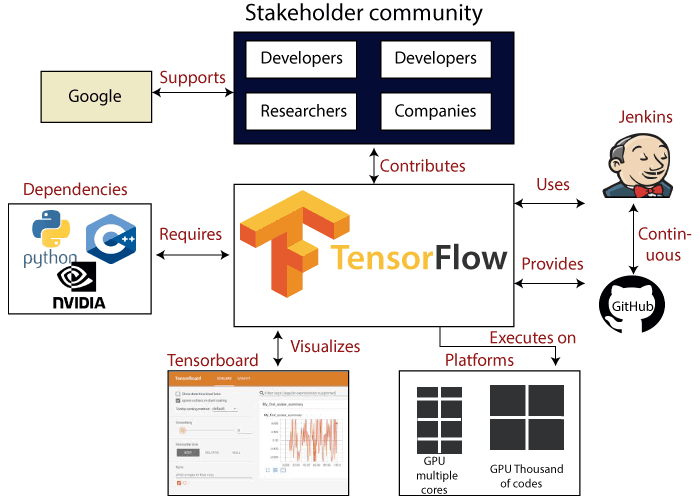

Much of the force behind this seismic change comes from TensorFlow, an open-source library and versatile framework for developing, training, and deploying deep learning models. It was created by Google’s AI team (Google Brain) and released in 2015.

It has since become a vital tool for data scientists and a driving force behind AI adoption across many sectors.

In this guide, we'll look at how companies of all sizes can use TensorFlow to tap into the game-changing potential of deep learning.

Let's start with what TensorFlow is and how it works.

What is TensorFlow and How Does it Work?

TensorFlow is an end-to-end open-source platform for creating artificial neural networks and other deep learning architectures.

It helps developers build models that learn from data, loosely inspired by the way neurons in the human brain connect. Here’s a quick look under the hood:

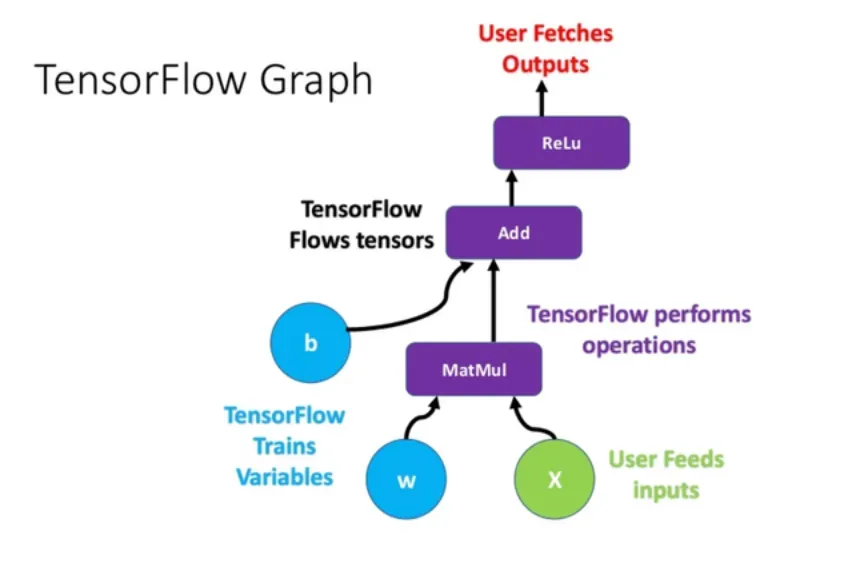

- Neural networks: A neural network is built from layers of nodes (neurons), each of which performs a mathematical operation on its inputs. The connections between nodes are called edges, and each edge carries a weight. During training, TensorFlow adjusts these weights as data flows through the layers, so the network gradually learns to make better predictions.

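- Flexible computational graph: TensorFlow can represent a program as a computational graph, in which nodes are operations and edges are the tensors (multidimensional arrays) flowing between them; this is where the name TensorFlow comes from. In TensorFlow 2, code runs eagerly by default, and `tf.function` turns it into a graph that can be optimized and exported. This modular design makes it easy to shape and reshape networks as needed.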

- Optimized performance: TensorFlow can run computations on GPUs and TPUs, which speeds up training and inference on massive datasets and complex models.

- Portability: Models can be deployed across a wide range of platforms, including mobile, desktop, cloud, and edge devices (for example, with TensorFlow Lite on phones and embedded hardware, or TensorFlow.js in the browser).
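To make the layers, nodes, and graph ideas above more concrete, here is a minimal sketch using the Keras API bundled with TensorFlow 2.x. The toy data, layer sizes, and binary-classification setup are illustrative assumptions, not anything TensorFlow prescribes. Each `Dense` layer is a set of nodes whose weighted connections (edges) are adjusted during `fit`, and `tf.function` traces the prediction call into a computational graph.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 100 rows with 4 numeric features and a 0/1 label.
rng = np.random.default_rng(seed=0)
features = rng.normal(size=(100, 4)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# Two layers of nodes; the weights on the connections between them are the edges.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Training repeatedly passes data through the layers and updates the weights.
history = model.fit(features, labels, epochs=5, batch_size=16, verbose=0)

# tf.function traces this Python function into a reusable TensorFlow graph.
@tf.function
def predict_probabilities(batch):
    return model(batch, training=False)

probabilities = predict_probabilities(tf.constant(features[:3]))
print(probabilities.numpy())
```

The same few lines scale to much larger networks: adding capacity usually means adding or widening layers rather than changing the surrounding workflow.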

While TensorFlow is incredibly powerful, high-level APIs such as Keras reduce the need for deep expertise in low-level programming or the underlying math.

The modular design, abundance of pre-built components, and intuitive syntax abstract away much of the model complexity. This opens the door for more businesses to adopt deep learning.

Next, let's look at why deep learning matters.

Why Does Deep Learning Matter?

Many companies are still trying to wrap their heads around terms like deep learning and neural networks.

However, these advanced techniques offer immense opportunities for business growth and efficiency.

Here's a quick primer on why deep learning matters:

- Making sense of unstructured data: Deep learning identifies complex patterns and extracts meaning from unstructured data like text, images, video, and audio. According to IBM, this kind of data represents 80% of all business information, yet it remains largely untapped by traditional analytics.

- Higher accuracy: Deep learning models often significantly outperform older machine learning approaches on real-world problems ranging from object detection to speech recognition. Because they can be retrained as new data arrives, these accuracy gains can compound over time.

- Human-like cognition: Advanced deep learning models can mimic aspects of human cognition, such as perception, language understanding, and problem-solving. These capabilities are key to use cases like self-driving cars and conversational bots.

- Ease of scaling: Once trained, deep learning models can be deployed at scale across large datasets and different platforms, and they can often be adapted to new use cases through fine-tuning rather than being rebuilt from scratch.

- Increased automation: Deep learning delivers the algorithmic muscle needed to automate an expanding array of tasks, from document processing to quality control on the factory floor.

For businesses, this combination of performance, flexibility, and automation unlocks game-changing opportunities in analytics, operations, customer engagement and beyond.

Now that we've covered why it matters, let's look at real-world business use cases.

Real-World Business Use Cases

Looking for real-world examples of cutting-edge AI in action? Then keep reading! This section explores how industries are leveraging deep learning to drive innovation and solve complex challenges.

From healthcare to retail to automotive, discover how TensorFlow powers intelligent applications that are transforming businesses and improving lives.

Let's dive into these fascinating use cases below.

Financial Services

- Analyze mortgage documents and loan applications for risk assessment and automated approval.

- Detect credit card fraud and money laundering transactions in real-time.

- Forecast stock volatility to optimize high-frequency trading systems and algorithms.

Healthcare

- Diagnose medical conditions and track disease progression through medical image analysis.

- Provide personalized medicine recommendations based on patient health records.

- Identify patients with high readmission risk to improve care management.

Retail

- Recommend products and optimize discounts based on customer transaction history and demographics.

- Analyze in-store traffic patterns to improve store layout, displays, and inventory.

- Dynamically adjust prices across sales channels based on store traffic, inventory, and competitor data.

Automotive

- Enable camera-based advanced driver assistance systems (ADAS) for collision avoidance, lane keeping, and other self-driving features.

- Continuously analyze sensor data from vehicles to predict maintenance needs.

- Optimize routes for EVs based on real-time traffic patterns, road conditions, and charging station availability.

Oil and Gas

- Scan geological surveys, well logs, and seismic data to identify promising exploration sites.

- Optimize drilling operations and costs by predicting future oil reservoir production.

- Improve drilling safety by detecting emerging equipment abnormalities and maintenance needs.

Entertainment

- Generate personalized movie, music, and TV recommendations tailored to customer preferences.

- Analyze viewer engagement metrics to optimize streaming content catalogs.

- Automatically transcribe and translate video and audio content into other languages.

Next, let's see how businesses can start using TensorFlow.


How Businesses Can Start Using TensorFlow

TensorFlow offers powerful tools to boost profits, optimize processes and delight customers.

Keep reading to discover how your company can start leveraging the power of deep learning today.

Start with a Well-Defined Use Case

Don't let TensorFlow's advanced capabilities distract from business goals. The first step is identifying a solid, high-value use case aligned with company priorities.

Good options include optimizing an expensive process, boosting revenue for a profitable product line, or improving customer satisfaction metrics.

Starting small reduces risk and makes it easier to win stakeholder support.

Leverage Transfer Learning

Training deep learning models from scratch requires massive datasets and computing resources. Transfer learning sidesteps this by starting from pre-trained models and fine-tuning them for new tasks.

For example, TensorFlow Hub (now integrated into Kaggle Models) provides access to thousands of reusable models for vision, NLP, forecasting, and more. Leverage these resources to accelerate development.
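As a rough illustration of transfer learning, the sketch below reuses the MobileNetV2 image model from `tf.keras.applications` (a swapped-in alternative to TensorFlow Hub that ships with Keras) as a frozen feature extractor and trains only a small new classification head. The helper name `build_transfer_model`, the 160-pixel image size, and the class count are assumptions for illustration. With `weights="imagenet"`, the pre-trained weights are downloaded on first use.

```python
import tensorflow as tf

def build_transfer_model(num_classes, weights="imagenet", image_size=160):
    """Hypothetical helper: pre-trained MobileNetV2 backbone plus a new head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=(image_size, image_size, 3),
        include_top=False,  # drop the original ImageNet classifier
        weights=weights,    # "imagenet" loads pre-trained weights; None = random init
    )
    base.trainable = False  # freeze the pre-trained layers

    inputs = tf.keras.Input(shape=(image_size, image_size, 3))
    # Rescale pixels from [0, 255] to [-1, 1], the range MobileNetV2 expects.
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_transfer_model(num_classes=3)` and then `model.fit(...)` on your own labelled images trains only the final `Dense` layer (a few thousand weights) instead of the millions in the backbone. A common next step, once the new head has converged, is to unfreeze some of the top backbone layers and fine-tune them with a low learning rate.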

Evaluate TensorFlow Extended (TFX)

TFX is an end-to-end platform for deploying TensorFlow models to production. It encapsulates best practices for the model lifecycle including data ingestion, feature engineering, model training, and monitoring. For many, TFX will provide the right out-of-the-box solution.

Partner with Experts

TensorFlow is powerful but has a learning curve. Most companies will benefit from partnering with experienced data scientists or ML engineers, especially for initial implementations.

Consider contracting external consultants for a pilot project or building in-house capabilities over time.

Focus on Real-World Impact

Avoid getting distracted by incremental accuracy gains. The priority is deploying models that improve measurable business KPIs. Maintain focus on optimizing real financial and operational results rather than computer science metrics.

Plan for Integration and Maintenance

Like any enterprise software, TensorFlow models require ongoing monitoring, maintenance, and enhancement. Architect the solution for maintainability with considerations like modularity, logging, and instrumentation. Plan to integrate models into existing data and analytics pipelines.
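One lightweight way to add the logging and instrumentation mentioned above is to wrap the prediction function so that every call records its batch size and latency. This is a plain-Python sketch rather than a TensorFlow feature: the `instrumented` decorator, the `churn-v1` version tag, and the placeholder `predict` function are hypothetical, and in production these log lines would typically feed an existing monitoring stack.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("model_service")

def instrumented(model_version):
    """Hypothetical decorator: log batch size and latency for every prediction."""
    def decorator(predict_fn):
        @wraps(predict_fn)
        def wrapper(batch):
            start = time.perf_counter()
            try:
                return predict_fn(batch)
            finally:
                # Runs even if predict_fn raises, so failed calls are timed too.
                latency_ms = (time.perf_counter() - start) * 1000
                logger.info("model=%s rows=%d latency_ms=%.2f",
                            model_version, len(batch), latency_ms)
        return wrapper
    return decorator

@instrumented(model_version="churn-v1")  # hypothetical version tag
def predict(batch):
    # Placeholder: a real service would call model.predict(batch) here.
    return [0.5 for _ in batch]

scores = predict([[1.0, 2.0], [3.0, 4.0]])
```

Keeping instrumentation in a wrapper means the model-serving code stays unchanged when a retrained model is swapped in; only the version tag changes, which makes before-and-after comparisons in the logs straightforward.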

Build for Gradual Adoption

View initial pilots as stepping stones to larger-scale adoption. Use reproducible workflows, enable batch or real-time scoring, and containerize models for portability across environments.

Success depends on models that seamlessly integrate alongside existing business processes while delivering tangible value.

Next, let's walk through best practices for advanced implementations.

Best Practices for Advanced Implementations

This section covers proven methods to get more out of your models and take full advantage of TensorFlow's capabilities.

Follow these tips to develop powerful production-grade systems that drive real results. Ready to get started? Keep reading!

Take a Full Lifecycle Approach

TFX provides an end-to-end platform for the full model lifecycle including data ingestion, feature engineering, model training, deployment, and monitoring. Leverage these best practices even with custom TensorFlow code.

Expand Training Datasets

More (and more representative) training data generally improves model accuracy, especially for complex tasks. Explore techniques like data augmentation, which creates modified copies of existing examples to synthetically expand datasets. For NLP models, scraping relevant websites can generate ample text data.
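For image models, Keras preprocessing layers offer a simple way to apply data augmentation on the fly. The sketch below is an illustration rather than a recommended recipe: the specific transformations, their strengths, and the random image batch are assumptions. These layers only alter data when called with `training=True`, so the same pipeline can sit inside a model without changing inputs at inference time.

```python
import tensorflow as tf

# Random transformations applied on the fly, so each epoch sees
# slightly different versions of the same training images.
data_augmentation = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),
    tf.keras.layers.RandomRotation(0.1),  # up to 10% of a full turn (about ±36 degrees)
    tf.keras.layers.RandomZoom(0.1),      # zoom in or out by up to 10%
])

# Hypothetical batch of 8 RGB images, 64x64 pixels, values in [0, 1).
images = tf.random.uniform((8, 64, 64, 3))
augmented = data_augmentation(images, training=True)
print(augmented.shape)
```

Because augmentation happens during training rather than on disk, the dataset is "expanded" without storing extra files; the trade-off is a little extra computation per training step.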

Iteratively Improve Model Architecture

An agile, iterative approach lets you test different model architectures, hyperparameters, and techniques. Don't settle on the first design; refine models iteratively to boost accuracy.
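A simple way to make this iteration systematic is a small grid search: train several candidate configurations on the same data and keep the one with the lowest validation loss. In this sketch the toy dataset, the candidate layer widths and learning rates, and the helper `build_model` are assumptions; dedicated tools such as KerasTuner automate the same idea at larger scale.

```python
import numpy as np
import tensorflow as tf

def build_model(units, learning_rate):
    """Hypothetical helper: one hidden layer whose width is the knob being tuned."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(units, activation="relu"),
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model

# Hypothetical toy dataset with a non-linear decision boundary.
rng = np.random.default_rng(0)
x = rng.normal(size=(500, 4)).astype("float32")
y = (x[:, 0] * x[:, 1] > 0).astype("float32")

results = {}
for units in (4, 16, 64):
    for lr in (1e-3, 1e-2):
        tf.keras.utils.set_random_seed(0)  # same initialization for a fair comparison
        model = build_model(units, lr)
        history = model.fit(x, y, validation_split=0.2, epochs=10, verbose=0)
        results[(units, lr)] = min(history.history["val_loss"])

best = min(results, key=results.get)
print("best (units, learning_rate):", best, "val_loss:", round(results[best], 4))
```

Resetting the seed before each run means differences in validation loss reflect the configuration rather than a lucky initialization; on real data you would usually also average over several seeds or use cross-validation before committing to a design.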

Deploy to Diverse Platforms

Productionize models across web, mobile, embedded systems, edge devices, and other platforms. TensorFlow makes models portable. Take advantage of this flexibility.
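For mobile and embedded targets, a trained model is typically converted to the TensorFlow Lite format. The sketch below uses a small untrained Keras model as a stand-in for a real one; the four-feature input and the file name `model.tflite` are assumptions. It converts via a concrete function with a fixed input signature, then loads the result back with the TFLite interpreter as a quick sanity check.

```python
import numpy as np
import tensorflow as tf

# Stand-in for a trained model that takes 4 numeric features.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# A fixed input signature tells the converter what the model accepts.
@tf.function(input_signature=[tf.TensorSpec(shape=[None, 4], dtype=tf.float32)])
def serve(x):
    return model(x, training=False)

converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [serve.get_concrete_function()], trackable_obj=model
)
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # optional post-training quantization
tflite_bytes = converter.convert()

with open("model.tflite", "wb") as f:
    f.write(tflite_bytes)

# Load the converted model and run one prediction to check that it works.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()[0]
output_details = interpreter.get_output_details()[0]
interpreter.set_tensor(input_details["index"], np.zeros((1, 4), dtype=np.float32))
interpreter.invoke()
prediction = interpreter.get_tensor(output_details["index"])
print(prediction)
```

The resulting `.tflite` file is what a mobile or embedded app bundles; comparing its outputs against the original model on a few held-out examples is a cheap check that conversion and quantization did not change behavior in a way that matters.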

Enable Real-Time Scoring

For many applications, integrating predictions into business processes requires millisecond response times. Expose models through REST or gRPC endpoints (TensorFlow Serving provides both) or through messaging systems to enable real-time scoring.
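TensorFlow Serving's REST API accepts a JSON body with an `instances` list at `/v1/models/<model_name>:predict` and responds with a `predictions` list. The standard-library sketch below builds such a request; the model name `fraud_model`, the host, and port 8501 (the REST port used in the TensorFlow Serving Docker examples) are assumptions, and `score` needs a running server, so the demo line only constructs the request without sending it.

```python
import json
import urllib.request

def build_predict_request(instances, model_name="fraud_model",
                          host="localhost", port=8501):
    """Build a TensorFlow Serving REST predict request (hypothetical model name)."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def score(instances, timeout_s=0.5, **request_kwargs):
    """Send the request and return the server's list of predictions."""
    req = build_predict_request(instances, **request_kwargs)
    with urllib.request.urlopen(req, timeout=timeout_s) as response:
        return json.loads(response.read())["predictions"]

# Build (but do not send) a request for one row of four features.
demo_request = build_predict_request([[0.1, 2.3, 4.5, 0.0]])
print(demo_request.full_url)
```

An explicit timeout keeps a slow model from stalling the calling business process. For the tightest latency budgets, TensorFlow Serving's gRPC interface avoids the overhead of JSON encoding.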

Monitor Models Closely

Actively monitor model performance across metrics like latency, data drift, training-serving skew, and accuracy.

Watch for any degradation and trigger retraining when necessary. This ensures sustained reliability in production.
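Drift can be quantified in many ways; one widely used heuristic is the Population Stability Index (PSI), which compares the distribution of a feature (or of model scores) at training time with what the model sees in production. The pure-Python sketch below is one possible implementation, not a TensorFlow API. The 10 equal-width bins, the `1e-6` floor for empty bins, the sample data, and the 0.25 retraining threshold (a common rule of thumb) are all assumptions to tune for your data.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, live = bin_shares(expected), bin_shares(actual)
    return sum((live_share - ref_share) * math.log(live_share / ref_share)
               for ref_share, live_share in zip(ref, live))

# Hypothetical data: training-time scores versus a shifted production sample.
training_scores = [i / 100 for i in range(1000)]
production_scores = [s + 3 for s in training_scores]

RETRAIN_THRESHOLD = 0.25  # rule of thumb; tune for your use case
psi = population_stability_index(training_scores, production_scores)
if psi > RETRAIN_THRESHOLD:
    print(f"PSI={psi:.2f}: significant drift, schedule retraining")
```

Running a check like this on a schedule for each key input feature turns "watch for degradation" into a concrete, automatable trigger, and it can flag problems before labelled outcomes are available to measure accuracy directly.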

While mastering TensorFlow takes experience, following these steps lays a strong foundation for long-term success. Think big, start small, and expand the business impact over time.

Finally, let's look at the future of TensorFlow.

The Future of TensorFlow

The possibilities for TensorFlow seem endless as new frontiers in deep learning are conquered.

Advancements in model architectures, ease of use, speed, and scale will enable more complex applications than ever before, from computer vision systems that can see like humans to natural language models that can converse on any topic.

Meanwhile, optimizations for edge devices promise to bring real-time AI to the fingertips of billions.

Industry-specific solutions will further lower the bar, allowing organizations across healthcare, retail, automotive, and more to leverage pre-built tools customized for their unique needs. As best practices mature, TensorFlow projects will become ever more tightly tied to key business metrics.

Perhaps most exciting is the democratization of deep learning: new low-code tools aim to open the door for non-specialists to apply AI across the enterprise.

The future with TensorFlow looks bright as it drives disruption and shapes intelligent solutions that enhance our lives in ways we can only begin to imagine.

Conclusion

This article gave a thorough overview of TensorFlow, covering everything from its features and use cases to best practices for implementation and the platform's future.

Following TensorFlow's modular approach will help you create new value for your company, whether you're just getting started or optimizing sophisticated models.

Watch for cutting-edge methods that expand the realm of the possible as AI continues to change industries.

The future of using deep learning to solve your most pressing problems is bright if you have an open mind and use TensorFlow as your tool.

Frequently Asked Questions (FAQs)

What is TensorFlow and how can it be used for business purposes?

TensorFlow is an open-source library developed by Google for machine learning. It is used for building models for various tasks, such as image recognition, natural language processing, and recommendation systems.

What are some practical applications of TensorFlow in the business sector?

TensorFlow can be used for a variety of tasks in the business sector, such as image recognition and object detection in manufacturing and quality control processes, natural language processing for sentiment analysis in customer support systems, and predictive modeling for finance and investment analysis.

How can TensorFlow enhance data analysis and decision-making in business?

TensorFlow can enhance data analysis and decision-making by enabling businesses to process vast amounts of data quickly and accurately. Models built with TensorFlow can analyze patterns in data to make accurate predictions, detect anomalies, and optimize processes. This can help businesses make better decisions, improve efficiency, and reduce costs.

In which industries can TensorFlow be beneficial for business purposes?

TensorFlow has applications in multiple industries, including healthcare and medical research for faster and more accurate diagnosis and treatment, finance and investment for predicting risk and improving decision-making, and e-commerce for implementing recommendation systems that improve customer experiences.

What are the challenges and limitations of implementing TensorFlow in a business setting?

Challenges and limitations of implementing TensorFlow in a business setting may include data privacy and security concerns, the need for specialized skills and technology infrastructure, and the interpretability of models for ethical decision-making.

What are the future trends and developments to look out for in TensorFlow for business purposes?

Future trends in TensorFlow for business purposes include advancements in deep learning research and algorithms, integration with cloud services and edge computing for faster processing, and improved interpretability and explainability of models for more ethical and transparent decision-making in business.