What is Transfer Learning?

In machine learning, transfer learning is the practice of taking the knowledge or patterns learned in one problem domain (the source domain) and applying them to a new, related domain (the target domain). It can speed up training and improve performance, especially when labelled data in the target domain is scarce.

Importance

Transfer learning lets developers train with less data and less compute than training from scratch would need, which is an advantage in the many applications where data is limited.

Use Cases

Transfer learning is most commonly used in deep learning, particularly for image and text tasks, where high-quality labelled data is hard to collect.

Comparison with Traditional Learning

Unlike traditional learning, where a model is trained from scratch for each new problem, transfer learning reuses what an existing model has already learned, saving computational resources and time.

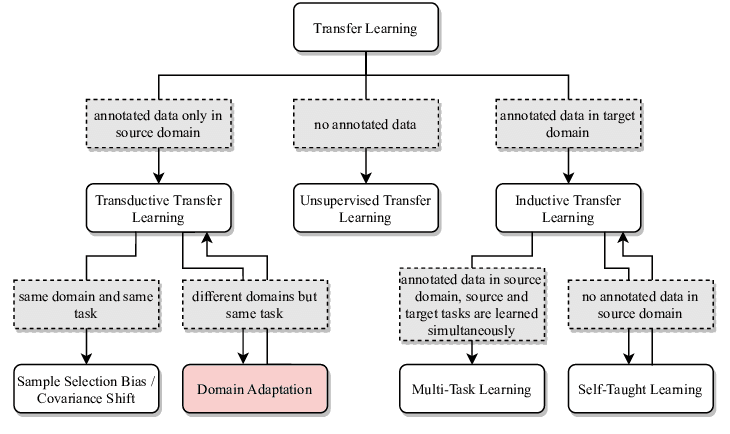

Types of Transfer Learning

There are several different types of transfer learning you can utilize depending on the problem you're trying to solve.

Inductive Transfer Learning

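In inductive transfer learning, the target task differs from the source task, whether or not the two domains are the same, and at least some labelled data is available for the target task. A typical case is fine-tuning a pre-trained image classifier to recognise a new set of classes: the inputs are still images, but the outputs change.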

Transductive Transfer Learning

In transductive transfer learning, the source and target tasks are the same, but the domains differ, for example because the inputs follow a different distribution. Labelled data is typically available only in the source domain. You can think of it as doing the same job in a new environment.

Unsupervised Transfer Learning

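Here, transfer learning is applied to unsupervised learning tasks, such as clustering or dimensionality reduction, where no labelled data is available in either domain. The goal is to use knowledge gained from an unsupervised task in the source domain to help a different but related one in the target domain.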

Multitask Learning

Multitask learning is a type of transfer learning in which several related tasks are learned at the same time. The tasks typically share part of the model, so each one can benefit from what the others learn, which often leads to better performance.
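
One common way to set this up is a shared layer with one output head per task, often called hard parameter sharing. The following is a minimal NumPy sketch of that idea, not a reference implementation: the two toy tasks, the layer sizes, the learning rate, and all variable names are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))

def bce(p, y):
    """Binary cross-entropy, with a small epsilon for numerical safety."""
    eps = 1e-9
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

rng = np.random.default_rng(0)
n, d, h = 500, 6, 4
X = rng.normal(size=(n, d))

# Two related toy tasks defined on the same inputs (illustrative only).
targets = {
    "a": (X[:, 0] + X[:, 1] > 0).astype(float),
    "b": (X[:, 0] - X[:, 1] > 0).astype(float),
}

W_shared = rng.normal(scale=0.5, size=(d, h))              # layer shared by both tasks
heads = {t: rng.normal(scale=0.1, size=h) for t in targets}  # one head per task

def joint_loss():
    hidden = np.tanh(X @ W_shared)
    return sum(bce(sigmoid(hidden @ heads[t]), targets[t]) for t in heads)

loss_before = joint_loss()
lr = 0.2
for _ in range(300):
    hidden = np.tanh(X @ W_shared)
    grad_shared = np.zeros_like(W_shared)
    for t in heads:
        dz = (sigmoid(hidden @ heads[t]) - targets[t]) / n  # dLoss/dlogit for task t
        # Every task contributes a gradient to the shared layer...
        grad_shared += X.T @ (np.outer(dz, heads[t]) * (1.0 - hidden ** 2))
        # ...but only updates its own head.
        heads[t] = heads[t] - lr * (hidden.T @ dz)
    W_shared -= lr * grad_shared
loss_after = joint_loss()

print("joint loss before:", loss_before, "after:", loss_after)
```

The design choice to note is that `W_shared` receives the summed gradient from both tasks, which is how one task's training signal can shape the features the other task uses.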

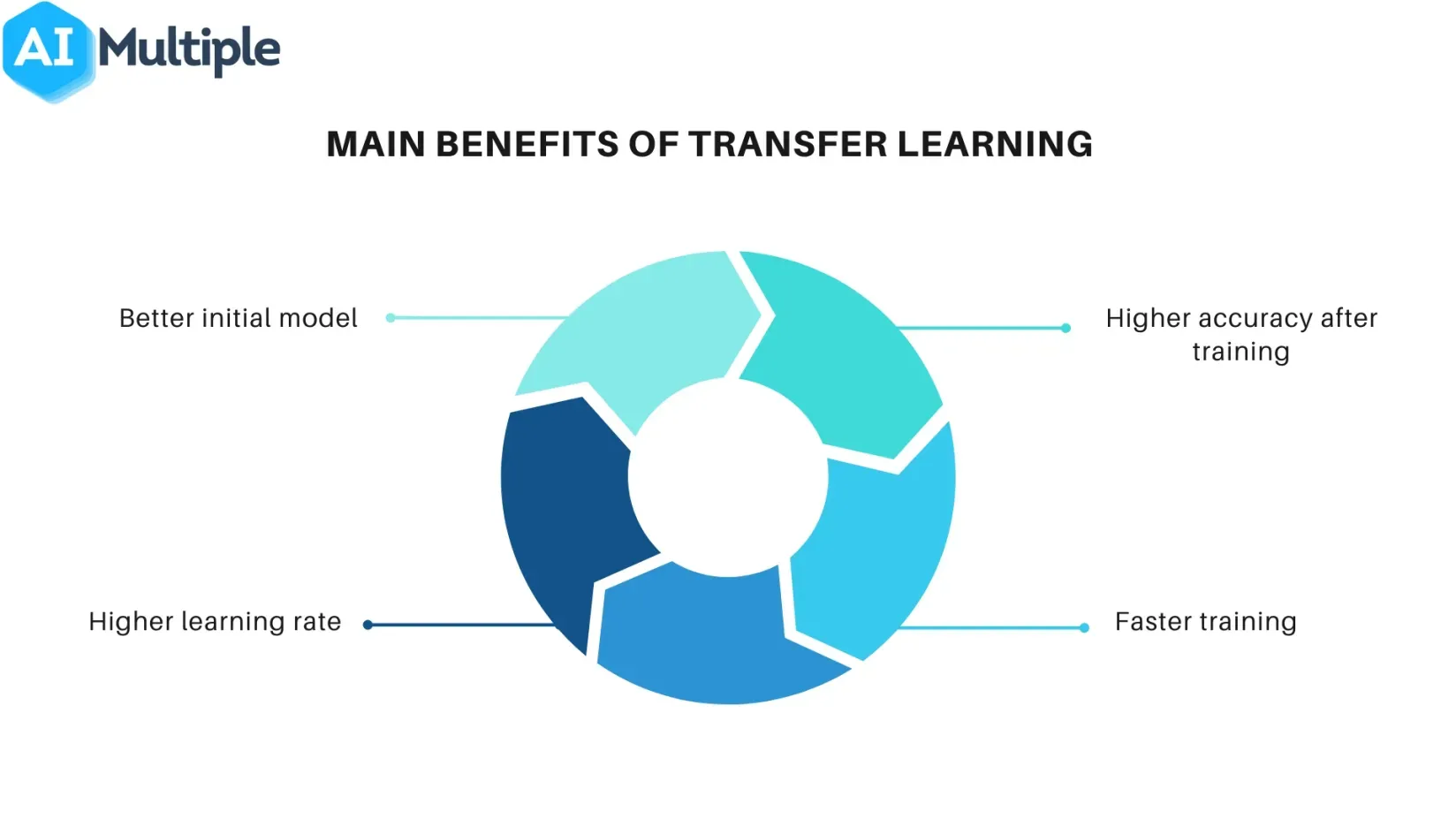

Advantages of Transfer Learning

Transfer learning offers several advantages, irrespective of industry or application. Here's why it comes in handy.

- Saving Time and Computational Resources: With transfer learning, models don't have to start learning from scratch and require less computational power, consequently saving time and resources.

- Making Use of Small Datasets: Transfer learning is especially beneficial when the dataset is limited. A model trained on a small dataset can build on what was learned from larger, often public, datasets.

- Better Initial Model: The initial models for transfer learning are usually pre-trained on massive datasets. So, the initial model comes with a strong prior, making the optimization easier.

- More Generalizable Models: Due to their exposure to various tasks, models modified through transfer learning are often more capable of generalizing to unseen data than their from-scratch counterparts.

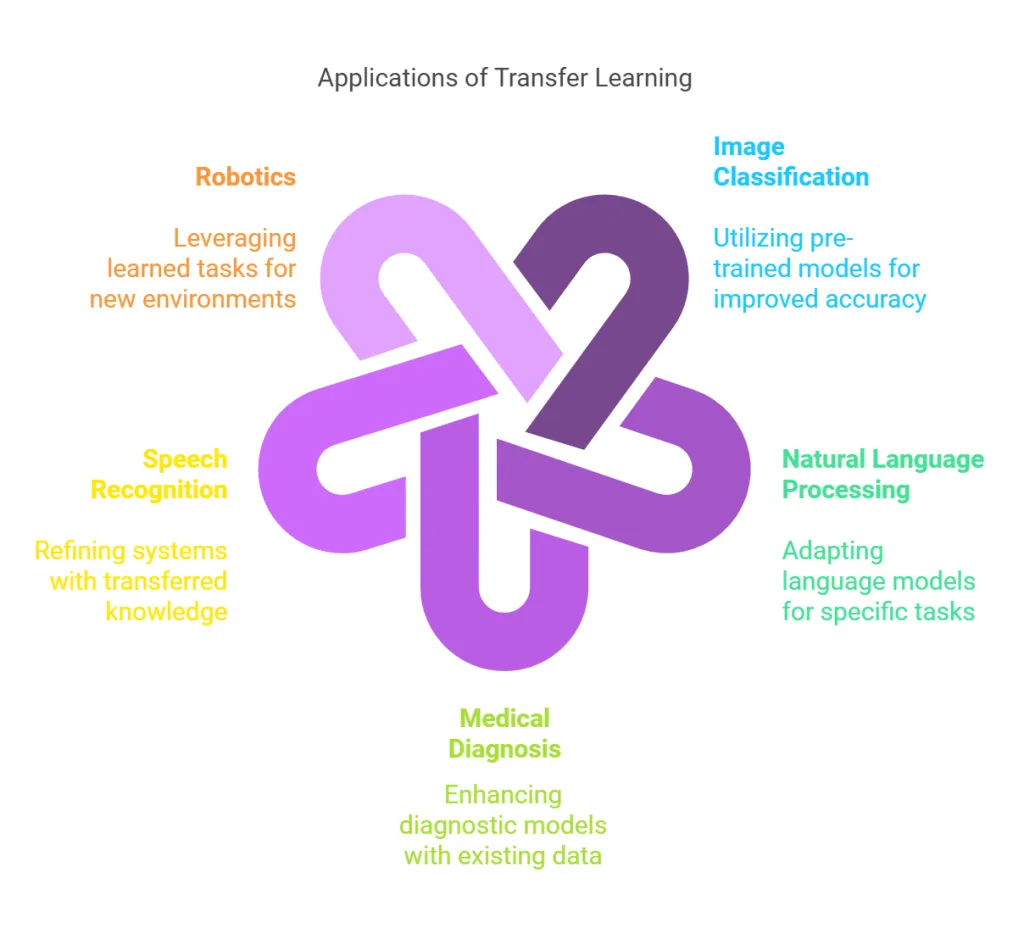

Applications of Transfer Learning

Transfer learning is a handy tool in several applications. Let's explore its most notable uses.

- Image Classification: Transfer learning is used extensively in image classification tasks, leveraging pre-trained models like ResNet or VGG for feature extraction before fine-tuning for new tasks.

- Natural Language Processing (NLP): In NLP, pre-trained models such as BERT or GPT serve as base models for tasks like sentence classification, sentiment analysis, and named entity recognition.

- Reinforcement Learning: In reinforcement learning, transfer learning can leverage prior knowledge to solve new tasks, improving learning efficiency and overall agent performance.

- Medical Imaging: In medical imaging, transfer learning is used to deal with the scarcity and imbalance of annotated datasets, improving detection and diagnosis outcomes.

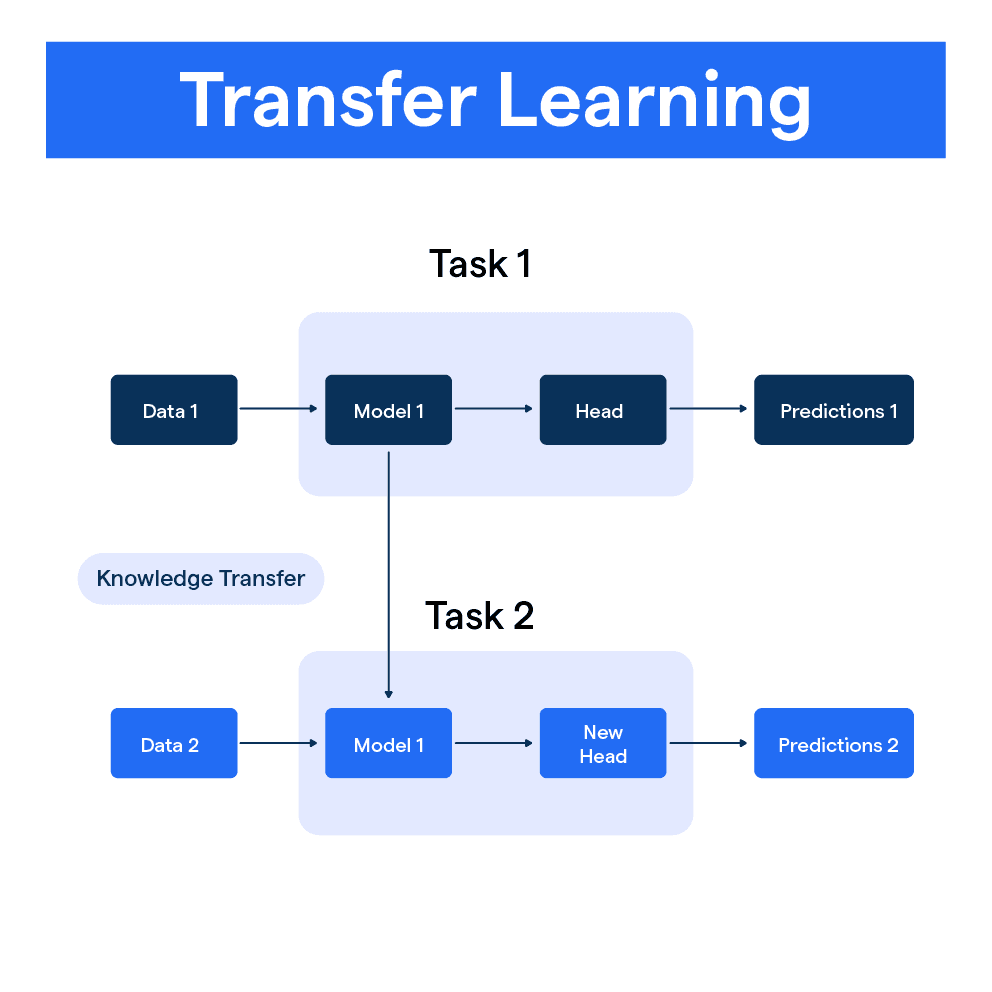

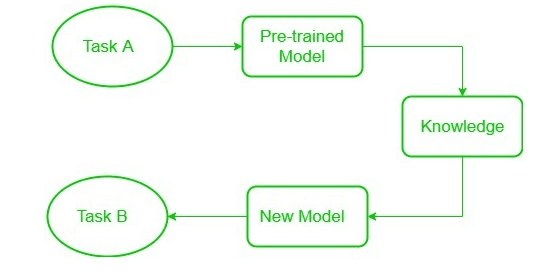

The Process of Transfer Learning

Implementing transfer learning involves several steps. Let's take a glance at the typical transfer learning process.

- Choosing a Pre-Trained Model: The first step is to find a suitable model that was pre-trained on a related task. A model that has been trained on a large, diverse dataset is usually the best starting point.

- Adjusting the Model: The next step is to adjust the pre-trained model to suit the new task. This typically involves adding or replacing layers, for example swapping in a new output layer sized for the target classes.

- Training and Fine-tuning: The adjusted model is then trained on the new task's data. Depending on the similarity between tasks, only the added layers might need training, or the whole model might need fine-tuning.

- Evaluating the Model: Finally, the retrained model is evaluated on a hold-out set from the new task and adjusted as necessary. Making those adjustments against a separate validation set keeps the test set as an unbiased final check.
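
The four steps above can be sketched end to end on synthetic data. This is a minimal NumPy illustration under stated assumptions, not a production recipe: the tiny two-layer network stands in for a real pre-trained model, the "pre-training" happens inline rather than loading a published checkpoint, and every name here (`train`, `freeze_backbone`, `W1_pre`, and so on) is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))

def forward(X, W1, w, b):
    hidden = np.tanh(X @ W1)                  # backbone: reusable feature extractor
    return hidden, sigmoid(hidden @ w + b)    # head: task-specific classifier

def train(X, y, W1, w, b, lr=0.5, steps=300, freeze_backbone=False):
    """Full-batch gradient descent on binary cross-entropy; returns updated copies."""
    W1, w = W1.copy(), w.copy()
    for _ in range(steps):
        hidden, p = forward(X, W1, w, b)
        dz = (p - y) / len(y)                 # dLoss/dlogit
        if not freeze_backbone:
            dpre = np.outer(dz, w) * (1.0 - hidden ** 2)
            W1 -= lr * (X.T @ dpre)
        w -= lr * (hidden.T @ dz)
        b -= lr * dz.sum()
    return W1, w, b

def accuracy(X, y, W1, w, b):
    _, p = forward(X, W1, w, b)
    return float(((p > 0.5) == (y > 0.5)).mean())

rng = np.random.default_rng(0)
d, h = 10, 8
u = rng.normal(size=d)                        # direction shared by both toy tasks

# Step 1: obtain a pre-trained model (here: trained on a large synthetic source set).
X_src = rng.normal(size=(2000, d))
y_src = (X_src @ u > 0).astype(float)
W1_pre, _, _ = train(X_src, y_src,
                     rng.normal(scale=0.1, size=(d, h)),
                     rng.normal(scale=0.1, size=h), 0.0)

# Step 2: adjust the model: keep the backbone, attach a fresh head for the new task.
w_new, b_new = np.zeros(h), 0.0

# Step 3: train only the new head on a small target set (backbone frozen).
X_tgt = rng.normal(size=(40, d))
y_tgt = (X_tgt @ u > 0.5).astype(float)       # related task: shifted decision threshold
W1_ft, w_ft, b_ft = train(X_tgt, y_tgt, W1_pre, w_new, b_new, freeze_backbone=True)

# Step 4: evaluate on held-out target data.
X_test = rng.normal(size=(500, d))
y_test = (X_test @ u > 0.5).astype(float)
acc = accuracy(X_test, y_test, W1_ft, w_ft, b_ft)
print("hold-out accuracy:", acc)
```

Calling `train` with `freeze_backbone=False`, usually with a smaller learning rate, would correspond to fine-tuning the whole model instead of only the added head.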

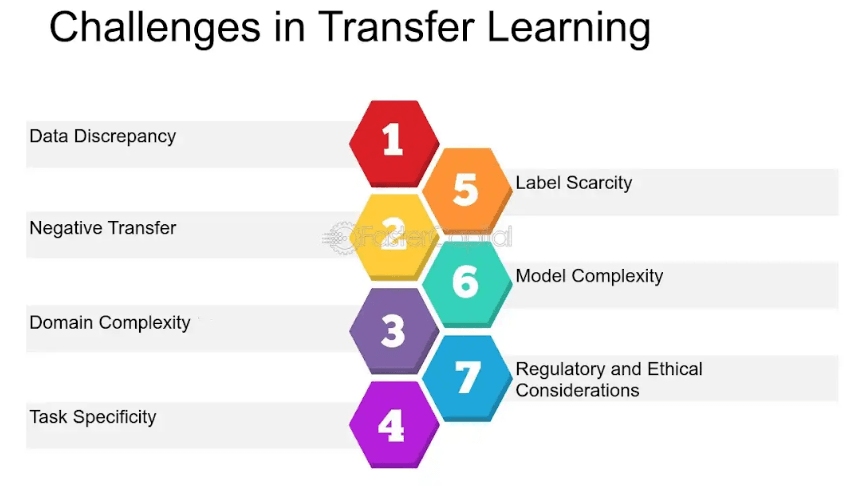

Limitations and Challenges of Transfer Learning

While beneficial, transfer learning also has limitations and poses certain challenges.

- Task Relevance: For transfer learning to be effective, the source and target tasks need to share some relevance. Transferring learning from completely unrelated tasks might lead to poor performance.

- Negative Transfer: Sometimes, applying learned knowledge from the source task to the target task can lead to degraded performance, a phenomenon known as negative transfer.

- Domain Adaptation: Adapting a model trained on a particular domain to another, possibly very different domain, is a significant challenge in transfer learning.

- Overfitting on Small Datasets: While transfer learning can work well with small datasets, there's also a risk of overfitting if the model is overly complex for the limited amount of data.
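
One practical way to spot negative transfer is to train a from-scratch baseline on the same target data and compare both models on the same hold-out set. The helper below is a hypothetical sketch of that comparison; the function name, the labels it returns, and the default `tolerance` are assumptions, and a real comparison would normally average over several random seeds.

```python
def diagnose_transfer(baseline_acc: float, transfer_acc: float,
                      tolerance: float = 0.01) -> str:
    """Compare a transferred model with a from-scratch baseline.

    Both accuracies are assumed to be measured on the same hold-out set.
    Differences smaller than `tolerance` are treated as noise.
    """
    gain = transfer_acc - baseline_acc
    if gain < -tolerance:
        return "negative transfer"
    if gain > tolerance:
        return "positive transfer"
    return "no clear effect"

print(diagnose_transfer(baseline_acc=0.80, transfer_acc=0.72))
```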

Frequently Asked Questions (FAQs)

How does transfer learning help improve the performance of machine learning models?

Transfer learning enables models to leverage pre-existing knowledge from one task to improve performance in a related task. By transferring learned features, models can learn new tasks more effectively and efficiently.

When should you consider using transfer learning in your machine learning project?

Transfer learning is particularly useful when labelled data is scarce in the target domain, or when time and computing resources are limited.

How do you implement transfer learning in your project?

To implement transfer learning, choose a pre-trained model relevant to your task, fine-tune it on your target domain, and evaluate its performance. Adjustments can be made based on the evaluation results.

What are some popular applications of transfer learning?

Transfer learning has been widely used in image recognition, autonomous driving, natural language processing (NLP), gaming, and speech recognition tasks. It enhances performance and enables models to learn faster.

What are the challenges in transfer learning and how can you address them?

Challenges in transfer learning include overfitting, biases, domain shifts, and reduced interpretability. To address these, use regularization techniques, carefully select and augment datasets, and explore methods for increased model interpretability.