What is Semi-Supervised Learning?

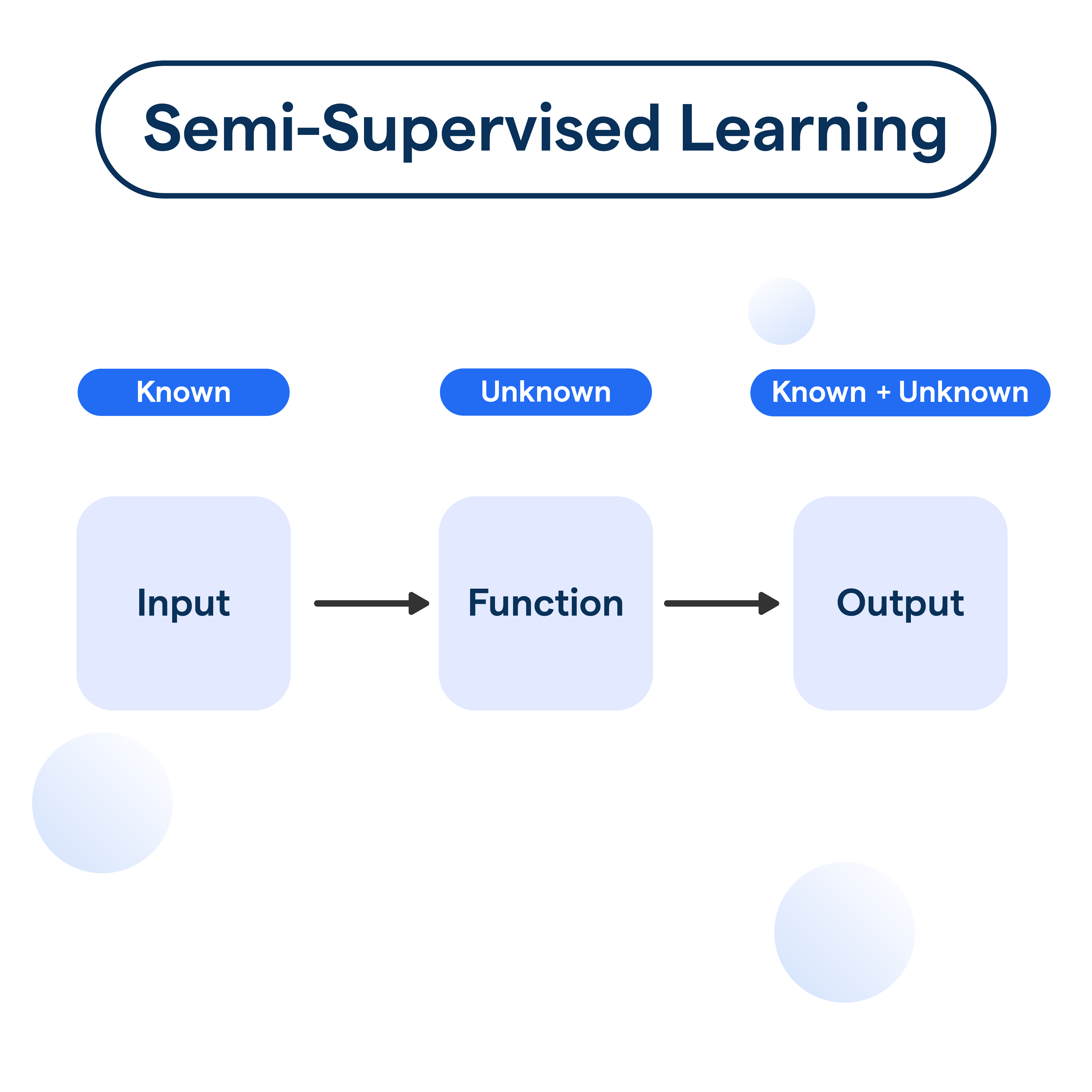

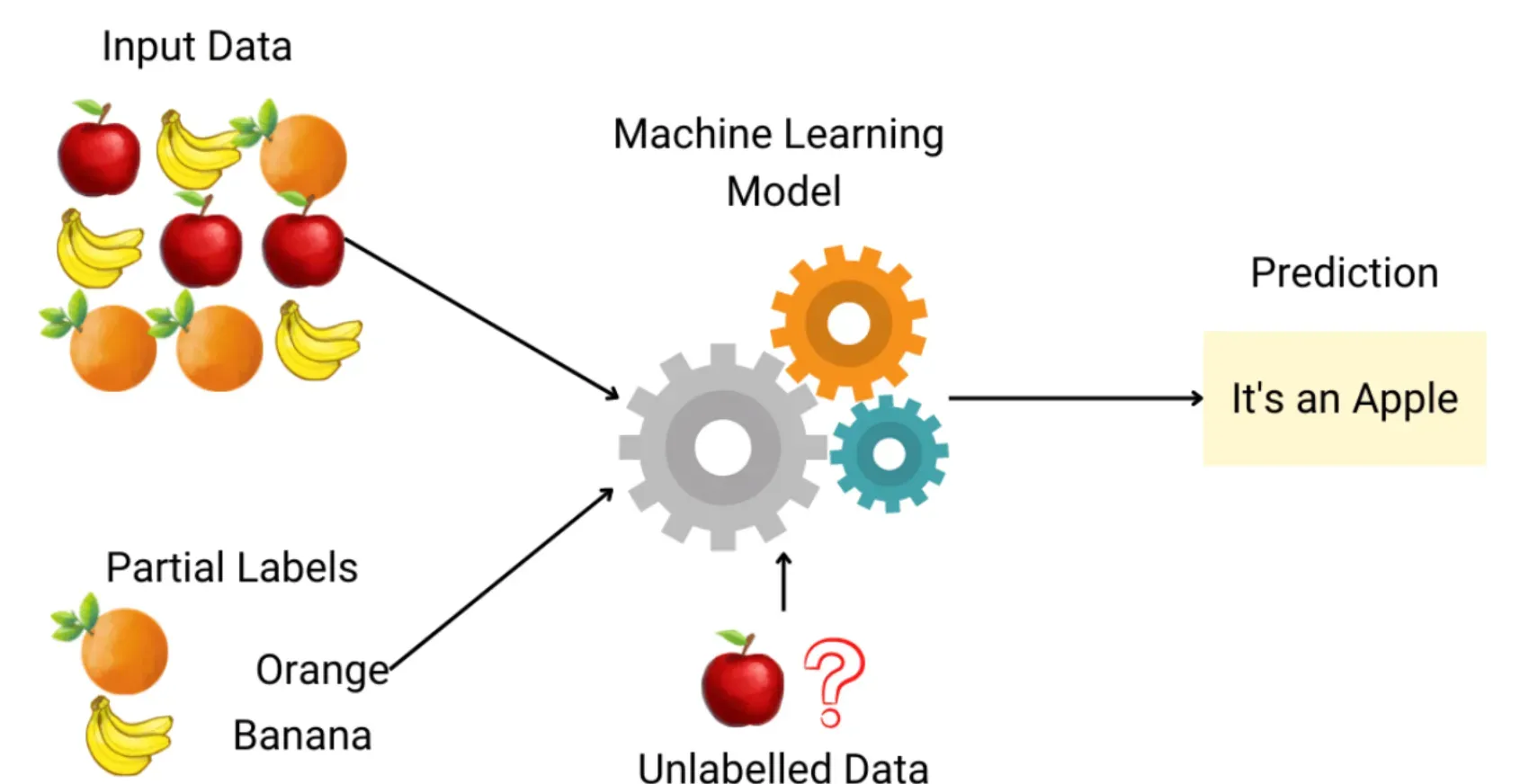

Semi-supervised learning is a machine learning paradigm that deals with training models using a combination of a small amount of labeled data and a large amount of unlabeled data.

It bridges the gap between supervised and unsupervised learning algorithms, especially when it's expensive or impractical to obtain a fully labeled training set.

How Does Semi-Supervised Learning Work?

In a common form of semi-supervised learning, often called self-training or pseudo-labeling, a model is first trained on the small set of labeled data. This model is then used to predict labels for the unlabeled data, and those predictions are added to the training set as pseudo-labels.

The model is then retrained on this expanded dataset to refine its predictions. The process can repeat several times, and each iteration can improve the model as long as most of the pseudo-labels are correct.

In real-world scenarios, it's often expensive or time-consuming to manually label large amounts of data, yet unlabeled data is abundant.

Semi-supervised learning uses this unlabeled data alongside a smaller amount of labeled data. The resulting models can approach the performance of fully supervised models, and they often outperform models trained on the labeled subset alone.

Semi-Supervised Learning vs Supervised and Unsupervised Learning

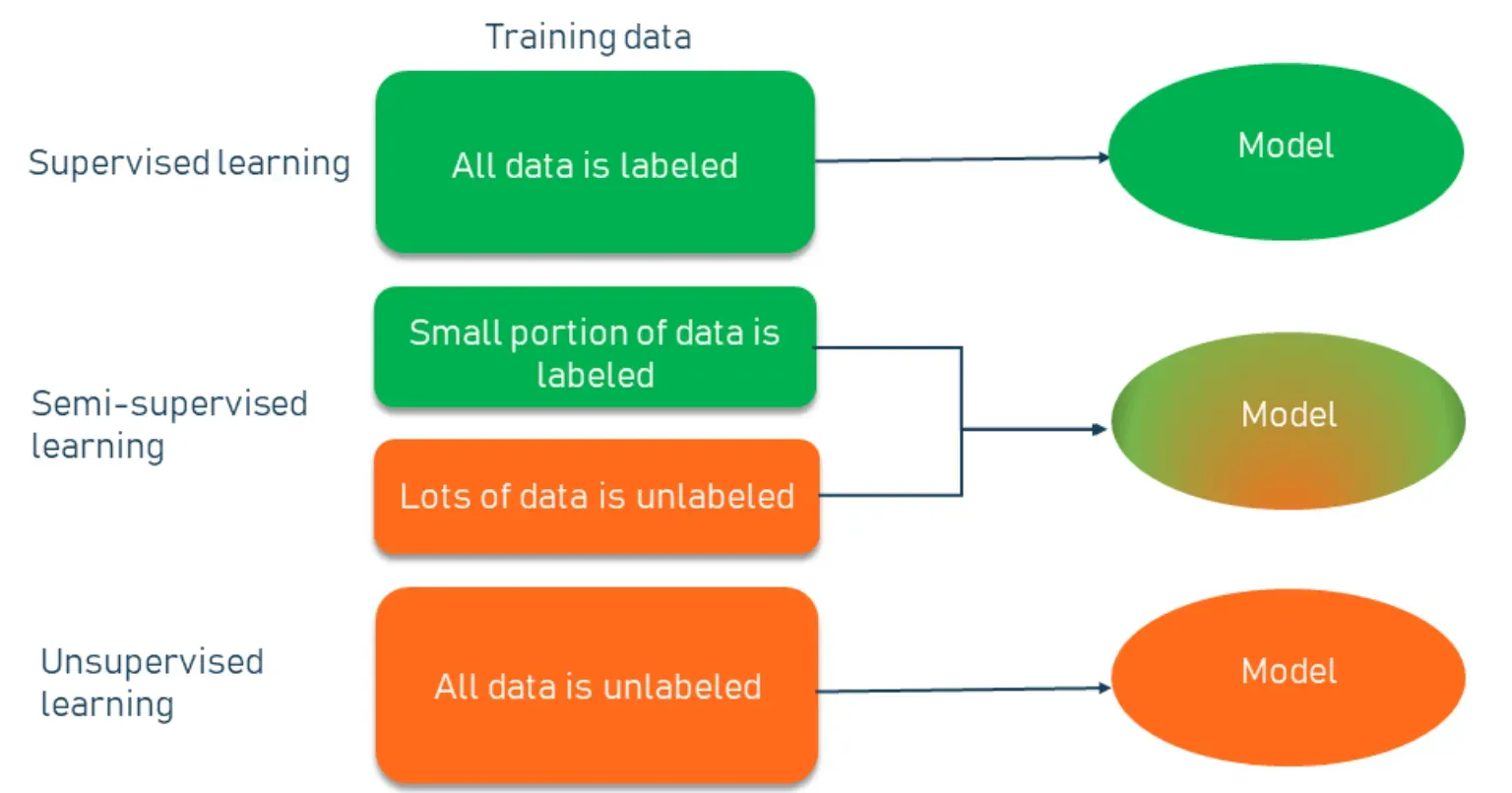

Unlike supervised learning, which requires fully labeled training data, or unsupervised learning, which works solely with unlabeled data, semi-supervised learning provides a middle ground.

It combines the predictive power of supervised learning with the ability of unsupervised learning to explore data structure and relationships in unlabeled data.

Techniques in Semi-Supervised Learning

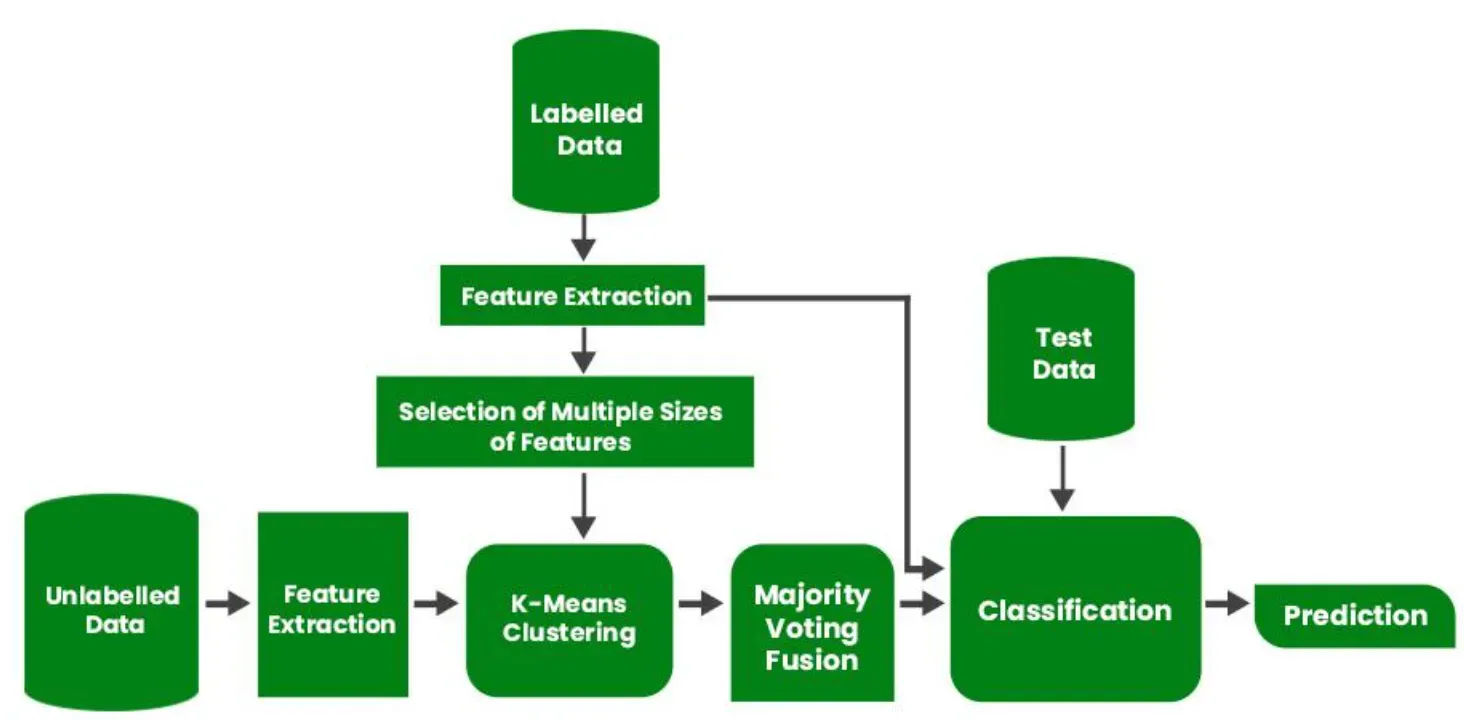

One of the simplest methods of semi-supervised learning is Self-Training. The algorithm first trains a model using the labeled data and then uses this model to label the unlabeled data.

The model is retrained using both the original labeled data and the newly labeled data, iteratively improving the model's performance.
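The self-training loop can be sketched in a few lines. The example below is a toy illustration, not a reference implementation: it assumes 1-D inputs and a nearest-centroid classifier, and the names `fit_centroids`, `predict_with_margin`, `self_train`, and the `min_margin` confidence threshold are all hypothetical choices made for this sketch.

```python
from statistics import mean

def fit_centroids(xs, ys):
    """'Train' a toy 1-D classifier: one centroid (mean) per class."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}

def predict_with_margin(centroids, x):
    """Return (nearest class, margin). The margin is how much closer x is
    to the nearest centroid than to the runner-up, used here as a
    confidence score. Assumes at least two classes."""
    ranked = sorted(centroids, key=lambda c: abs(x - centroids[c]))
    margin = abs(x - centroids[ranked[1]]) - abs(x - centroids[ranked[0]])
    return ranked[0], margin

def self_train(labeled_x, labeled_y, unlabeled_x, min_margin=3.0, max_rounds=10):
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(max_rounds):
        centroids = fit_centroids(xs, ys)      # train on the current labeled set
        still_unlabeled, added = [], 0
        for u in pool:
            label, margin = predict_with_margin(centroids, u)
            if margin >= min_margin:           # keep only confident pseudo-labels
                xs.append(u)
                ys.append(label)
                added += 1
            else:
                still_unlabeled.append(u)
        pool = still_unlabeled
        if added == 0:                         # nothing new was labeled: stop
            break
    return fit_centroids(xs, ys), pool

centroids, leftover = self_train(
    [0.0, 10.0], ["a", "b"],
    [1.0, 2.0, 3.0, 4.5, 5.5, 7.0, 8.0, 9.0],
)
print(centroids, leftover)
```

In this run, the two points near the class boundary (4.5 and 5.5) never reach the confidence threshold, so they stay unlabeled instead of being given a possibly wrong pseudo-label.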

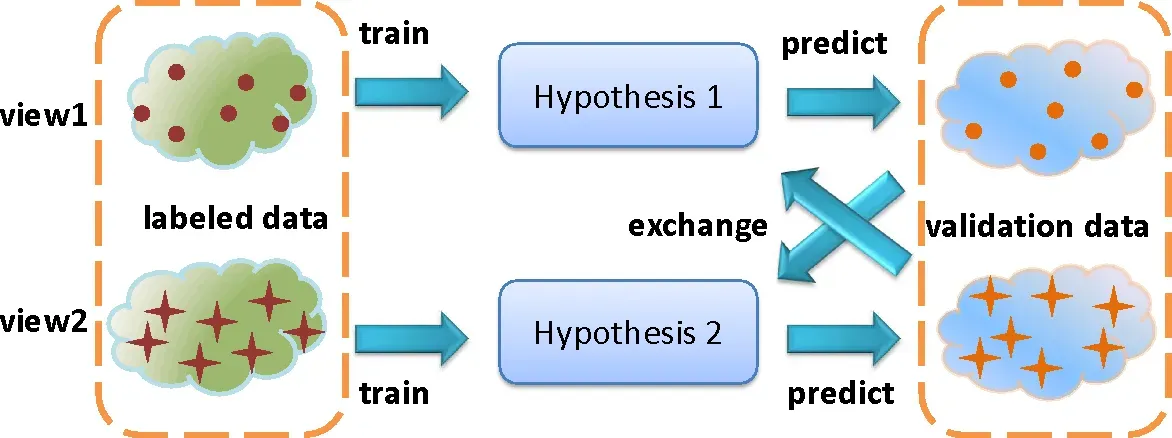

Multi-View Training

Multi-view training is based on the assumption that if we can obtain different views or features of the same data and if these views are sufficiently independent, then classifiers trained on these views should agree on the labels of unlabeled instances.

Co-Training

Co-training is a form of multi-view training that splits the features of the data into two distinct views. The algorithm trains two classifiers, one on each view.

These classifiers then teach each other by labeling the unlabeled instances they are most confident about, expanding the training set for each other.
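As a rough sketch of this exchange, the example below gives each data point two 1-D views and trains a toy nearest-centroid classifier per view. Everything here is an assumption made for illustration: the function names `fit`, `predict`, and `co_train`, the margin-based confidence score, and the choice to add each confident pseudo-label only to the other classifier's training set (some formulations use a single shared labeled pool instead).

```python
from statistics import mean

def fit(xs, ys):
    """Toy 1-D classifier: one centroid (mean) per class."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}

def predict(centroids, x):
    """Return (nearest class, margin); the margin acts as confidence."""
    ranked = sorted(centroids, key=lambda c: abs(x - centroids[c]))
    margin = abs(x - centroids[ranked[1]]) - abs(x - centroids[ranked[0]])
    return ranked[0], margin

def fit_view(train_set, v):
    """Fit a classifier that only sees view v of each example."""
    return fit([x[v] for x, _ in train_set], [y for _, y in train_set])

def co_train(labeled, unlabeled, per_round=1, rounds=5):
    """labeled: list of ((view0, view1), label); unlabeled: list of (view0, view1)."""
    train = [list(labeled), list(labeled)]  # train[v] feeds the classifier on view v
    pool = list(unlabeled)
    for _ in range(rounds):
        if not pool:
            break
        models = [fit_view(train[v], v) for v in (0, 1)]
        for v in (0, 1):
            # The classifier on view v ranks the pool by its own confidence...
            ranked = sorted(pool, key=lambda x: predict(models[v], x[v])[1],
                            reverse=True)
            for x in ranked[:per_round]:
                label, _ = predict(models[v], x[v])
                train[1 - v].append((x, label))  # ...and teaches the other classifier.
                pool.remove(x)
    return [fit_view(train[v], v) for v in (0, 1)], pool

labeled = [((0.0, 0.0), "neg"), ((10.0, 10.0), "pos")]
unlabeled = [(1.0, 6.0), (9.0, 4.0), (4.0, 1.0), (6.0, 9.0)]
models, leftover = co_train(labeled, unlabeled)
print(models, leftover)
```

Each unlabeled point in this toy data is clear in one view and ambiguous in the other, so each classifier ends up labeling the points that the other one would find hard.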

Generative Models

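Generative models capture the joint probability distribution of the input data and labels and then use it to classify new instances. Because the same model also describes how the inputs themselves are distributed, unlabeled data can help estimate its parameters.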

The model learns to generate new instances similar to the ones in the training set.
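One way to make this concrete is a small Gaussian mixture fitted with expectation-maximization (EM), where labeled points keep their known class and unlabeled points contribute soft class assignments. The sketch below is illustrative only: it assumes 1-D inputs, one Gaussian per class, and a fixed shared standard deviation `sigma`; the names `em_semi_supervised` and `classify` are hypothetical.

```python
import math

def gaussian_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

def em_semi_supervised(labeled, unlabeled, sigma=1.0, iters=20):
    """labeled: list of (x, y); unlabeled: list of x.
    Returns class priors and class means of a 1-D Gaussian mixture."""
    classes = sorted({y for _, y in labeled})
    n_total = len(labeled) + len(unlabeled)
    # Initialise the parameters from the labeled data only.
    mu = {c: sum(x for x, y in labeled if y == c) /
             sum(1 for _, y in labeled if y == c) for c in classes}
    prior = {c: sum(1 for _, y in labeled if y == c) / len(labeled)
             for c in classes}
    for _ in range(iters):
        # E-step: soft class membership for every unlabeled point.
        # (Points extremely far from every mean could underflow to zero;
        # this toy version does not guard against that.)
        resp = []
        for x in unlabeled:
            joint = {c: prior[c] * gaussian_pdf(x, mu[c], sigma) for c in classes}
            total = sum(joint.values())
            resp.append({c: joint[c] / total for c in classes})
        # M-step: labeled points count with weight 1 for their known class,
        # unlabeled points count with their soft membership.
        for c in classes:
            weight = (sum(1 for _, y in labeled if y == c)
                      + sum(r[c] for r in resp))
            weighted_sum = (sum(x for x, y in labeled if y == c)
                            + sum(r[c] * x for r, x in zip(resp, unlabeled)))
            mu[c] = weighted_sum / weight
            prior[c] = weight / n_total
    return prior, mu

def classify(x, prior, mu, sigma=1.0):
    """Pick the class with the largest joint probability p(x, y)."""
    return max(prior, key=lambda c: prior[c] * gaussian_pdf(x, mu[c], sigma))

prior, mu = em_semi_supervised(
    labeled=[(0.0, "a"), (6.0, "b")],
    unlabeled=[-1.0, 0.5, 1.0, 5.0, 6.5, 7.0],
)
print(prior, mu, classify(1.5, prior, mu))
```

With only one labeled point per class, the class means start at the labeled values and are then pulled toward the centers of the unlabeled clusters, which is the sense in which unlabeled data refines the generative model.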

Challenges in Semi-Supervised Learning

Most semi-supervised techniques rely on certain assumptions about the data, such as the cluster assumption, manifold assumption, or smoothness assumption. If these assumptions don't hold, the algorithms can perform poorly.

Model Misleading

If the labels propagated to the unlabeled data are incorrect or based on weak evidence, the model trains on its own mistakes and can be misled.

This is sometimes called the 'Semi-Supervised Learning Dilemma': including unlabeled data can actually cause the model's performance to decline.

Data Privacy and Security

The use of unlabeled data may involve handling sensitive information, potentially raising privacy and security concerns, especially in tightly regulated sectors.

Complexity and Cost

Although semi-supervised learning aims to reduce labeling costs, the added complexity of the algorithms involved, such as repeated retraining and extra settings to tune, can offset that advantage.

Applications of Semi-Supervised Learning

Semi-supervised learning is often applied for web content classification, as the sheer volume of web content means manually labeling everything is impractical.

Image and Speech Recognition

In domains like image and speech recognition, semi-supervised learning helps improve model performance without the need for large labeled datasets.

Natural Language Processing (NLP)

In NLP tasks such as sentiment analysis, language translation, and information extraction, semi-supervised learning can help harness the vast amount of text data on the internet.

Medical Diagnosis

In healthcare, semi-supervised learning helps predict diseases and conditions for which labeled medical data is limited, whether because of privacy concerns or because the conditions are rare.

Advantages of Semi-Supervised Learning

One of the main advantages of semi-supervised learning is the ability to use plentiful unlabeled data, reducing the need for expensive labeled data.

Improved Accuracy

By incorporating the structure and distribution information from the unlabeled data into the model, semi-supervised learning can often achieve better prediction accuracy than training on the labeled data alone.

Scaling Up Easily

Semi-supervised learning is highly scalable, as it's designed to deal with large amounts of unlabeled data.

Adaptability

Because they refine their predictions iteratively, semi-supervised learning models can be updated as new unlabeled data arrives, which helps them adapt to changing scenarios.

Limitations of Semi-Supervised Learning

Since semi-supervised learning depends on the initial labeled data for training, the quality of this data is inherently critical for reliable predictions.

Assumptions about Data

As mentioned earlier, semi-supervised learning typically assumes specific characteristics about the underlying data, such as adjacent data points sharing the same label, which may not always hold true.
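A rough sanity check for the "adjacent points share a label" assumption is to measure how often a labeled point's nearest labeled neighbor has the same label. The sketch below is a heuristic for illustration, not an established test: the function name `nearest_neighbor_agreement` is hypothetical, and it assumes Euclidean distance is meaningful for the data.

```python
import math

def nearest_neighbor_agreement(points, labels):
    """Fraction of labeled points whose nearest labeled neighbor
    (by Euclidean distance, excluding the point itself) has the same label."""
    agree = 0
    for i, p in enumerate(points):
        nearest = min(
            (k for k in range(len(points)) if k != i),
            key=lambda k: math.dist(p, points[k]),
        )
        agree += labels[i] == labels[nearest]
    return agree / len(points)

# Two well-separated clusters: neighbors share labels.
clustered = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
print(nearest_neighbor_agreement(clustered, ["a", "a", "a", "b", "b", "b"]))

# Labels alternate along a line: neighbors never share labels.
interleaved = [(0, 0), (1, 0), (2, 0), (3, 0)]
print(nearest_neighbor_agreement(interleaved, ["a", "b", "a", "b"]))
```

A low score on the labeled subset suggests that methods relying on this assumption may propagate wrong labels on that dataset.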

Difficulty in Evaluating Results

Since part of the data used to train the model is unlabeled and has no ground-truth labels to compare against, it can be difficult to evaluate and compare different semi-supervised learning methods.
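One common workaround is to set aside part of the labeled data before any training and score every method on that same held-out set. The sketch below assumes this setup; the helper names `split_labeled` and `accuracy`, the 30% hold-out fraction, and the toy threshold classifier are all hypothetical.

```python
import random

def split_labeled(labeled, holdout_fraction=0.3, seed=0):
    """Shuffle the labeled examples and reserve a fraction for evaluation.
    The held-out part must never be used for training or pseudo-labeling."""
    rng = random.Random(seed)
    shuffled = list(labeled)
    rng.shuffle(shuffled)
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]

def accuracy(predict, holdout):
    """Share of held-out examples that the predict function labels correctly."""
    return sum(predict(x) == y for x, y in holdout) / len(holdout)

labeled = [(float(i), "a" if i < 5 else "b") for i in range(10)]
train, holdout = split_labeled(labeled)

# Stand-in for a trained model; a real comparison would score a
# supervised-only baseline and a semi-supervised model on the same holdout.
def threshold_model(x):
    return "a" if x < 5 else "b"

print(len(train), len(holdout), accuracy(threshold_model, holdout))
```

Scoring a supervised-only baseline and the semi-supervised model on the same held-out set shows whether the unlabeled data actually helped.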

Sensitive to Noise

Because the model learns from its own predictions on unlabeled data, noise in the labeled set or in early predictions can be carried into later training rounds and hurt performance more than it would in purely supervised learning.

Taken together, these facets show that semi-supervised learning offers a versatile and scalable way to build machine learning models when labeled data is scarce, bridging the gap between the supervised and unsupervised learning paradigms.

While not without its challenges, it is a powerful tool in the data scientist's toolkit, and its adoption is likely to grow as datasets become larger and more complex.

Frequently Asked Questions (FAQs)

How does semi-supervised learning differ from supervised and unsupervised learning?

In semi-supervised learning, we use both labeled and unlabeled data for training, whereas supervised learning uses only labeled data and unsupervised learning uses only unlabeled data.

What are the advantages of using semi-supervised learning?

Semi-supervised learning allows us to leverage the large amounts of unlabeled data available, reducing the need for extensive labeling efforts. It can potentially improve model performance compared to using just supervised learning.

What strategies can be used to overcome the limited labeled data in semi-supervised learning?

Strategies include self-training, co-training, and using generative models to generate synthetic labeled data. Additionally, active learning can be used to select the most informative data points to label.

How do we ensure the quality of the unlabeled data in semi-supervised learning?

Ensuring data quality involves carefully preprocessing and cleaning the unlabeled data to remove noise and inconsistencies. It's important to verify that the unlabeled data is representative of the underlying distribution to avoid biased or inaccurate models.

What are the assumptions made in semi-supervised learning, and how can they impact the model's performance?

Semi-supervised learning assumes that the distribution of labeled and unlabeled data is similar and that the model can generalize well from the labeled data. Violations of these assumptions can lead to poor model performance or biased predictions, warranting careful consideration and evaluation.